🚀 Stable Diffusion TrinArt/Trin-sama AI finetune v2

This is an improved version of the original Trin-sama Twitter bot model, aiming to retain the original SD's aesthetics while nudging towards anime/manga style.

🚀 Quick Start

Note that this is NOT the Characters Model (trained on 19.2M images) offered on TrinArt. It is the improved Trin-sama Twitter bot model, which keeps the original SD aesthetics while leaning towards anime/manga styles.

Other TrinArt models can be found at:

✨ Features

Diffusers

The model has been ported to diffusers by ayan4m1. You can run it from different branches:

- revision="diffusers-60k" for the checkpoint trained on 60,000 steps.

- revision="diffusers-95k" for the checkpoint trained on 95,000 steps.

- revision="diffusers-115k" for the checkpoint trained on 115,000 steps.

For more details, check the "Three flavors" section.
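As a minimal sketch of picking a branch by step count: the revision strings and the model ID below come from this card, while the helper names `revision_for_steps` and `load_checkpoint` are hypothetical and only serve the illustration. The diffusers import is deferred so the lookup can be used without the library installed.

```python
# Revision names as listed in this card; the helpers are illustrative only.
MODEL_ID = "naclbit/trinart_stable_diffusion_v2"
REVISIONS = {
    60_000: "diffusers-60k",
    95_000: "diffusers-95k",
    115_000: "diffusers-115k",
}


def revision_for_steps(steps: int) -> str:
    """Map a training step count to the matching diffusers branch name."""
    try:
        return REVISIONS[steps]
    except KeyError:
        known = ", ".join(str(s) for s in sorted(REVISIONS))
        raise ValueError(f"no checkpoint for {steps} steps; choose one of: {known}") from None


def load_checkpoint(steps: int):
    """Load the pipeline for the given step count (downloads the weights)."""
    # Imported lazily so revision_for_steps works without diffusers installed.
    from diffusers import StableDiffusionPipeline

    return StableDiffusionPipeline.from_pretrained(
        MODEL_ID, revision=revision_for_steps(steps)
    )
```

Calling `load_checkpoint(60_000)` would then fetch the least stylized of the three checkpoints.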

Gradio

We support a Gradio web UI with diffusers that runs inside a Colab notebook:

💻 Usage Examples

Basic Usage

Example Text2Image

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("naclbit/trinart_stable_diffusion_v2", revision="diffusers-60k")

pipe.to("cuda")

image = pipe("A magical dragon flying in front of the Himalaya in manga style").images[0]

image

If you want to run the pipeline faster or on different hardware, refer to the optimization docs.

Example Image2Image

from diffusers import StableDiffusionImg2ImgPipeline

import requests

from PIL import Image

from io import BytesIO

url = "https://scitechdaily.com/images/Dog-Park.jpg"

response = requests.get(url)

init_image = Image.open(BytesIO(response.content)).convert("RGB")

init_image = init_image.resize((768, 512))

pipe = StableDiffusionImg2ImgPipeline.from_pretrained("naclbit/trinart_stable_diffusion_v2", revision="diffusers-115k")

pipe.to("cuda")

image = pipe(prompt="Manga drawing of Brad Pitt", init_image=init_image, strength=0.75, guidance_scale=7.5).images[0]  # newer diffusers releases name this argument image instead of init_image

image

If you want to run the pipeline faster or on different hardware, refer to the optimization docs.

Advanced Usage

Version 2

The V2 checkpoint uses dropouts, 10,000 more images, and a new tagging strategy. It was also trained longer to improve results while keeping the original aesthetics.

Three flavors

The step 95000 and step 115000 checkpoints were trained further than the step 60000 checkpoint. If you find their style nudging too strong, use the step 60000 checkpoint instead.

img2img

If you want to run latent-diffusion's stock ddim img2img script with this model, set use_ema to False.
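One way to apply this, sketched under assumptions: the config is treated as a plain nested dict (for example, the result of loading the YAML file), and the flag is assumed to live under `model.params`, as it does in the CompVis Stable Diffusion inference configs. The helper name `disable_ema` is hypothetical; check your own config file for the actual location.

```python
import copy


def disable_ema(config: dict) -> dict:
    """Return a copy of a latent-diffusion style config with use_ema set to False.

    Assumes the flag belongs under config["model"]["params"]; missing levels
    are created so the key is always present in the result.
    """
    patched = copy.deepcopy(config)  # leave the caller's config untouched
    params = patched.setdefault("model", {}).setdefault("params", {})
    params["use_ema"] = False
    return patched
```

Returning a patched copy keeps the original config reusable for runs where EMA weights are wanted.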

Hardware

Training Info

- Custom dataset loader with augmentations: XFlip, center crop, and aspect-ratio locked scaling

- LR: 1.0e-5

- 10% dropouts
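To make the three augmentations concrete, here is a rough sketch of the geometry involved. It is not the author's dataset loader: the 512-pixel target size, the 50% flip probability, and the function name `plan_augmentation` are all assumptions chosen for illustration, and the function only plans the operations rather than touching pixel data.

```python
import random


def plan_augmentation(width, height, target=512, rng=random):
    """Plan scaling, center crop, and horizontal flip for one image.

    `target` and the 0.5 flip probability are illustrative assumptions.
    """
    # Aspect-ratio locked scaling: a single scale factor for both axes,
    # chosen so the shorter side lands on `target`.
    scale = target / min(width, height)
    new_w = max(target, round(width * scale))
    new_h = max(target, round(height * scale))

    # Center crop: take a target x target window from the middle.
    left = (new_w - target) // 2
    top = (new_h - target) // 2
    crop_box = (left, top, left + target, top + target)

    # XFlip: mirror the image horizontally at random.
    flip = rng.random() < 0.5

    return {"resize": (new_w, new_h), "crop": crop_box, "flip": flip}
```

The returned values can be passed to an image library of your choice, for instance as the arguments to a resize and a crop call.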

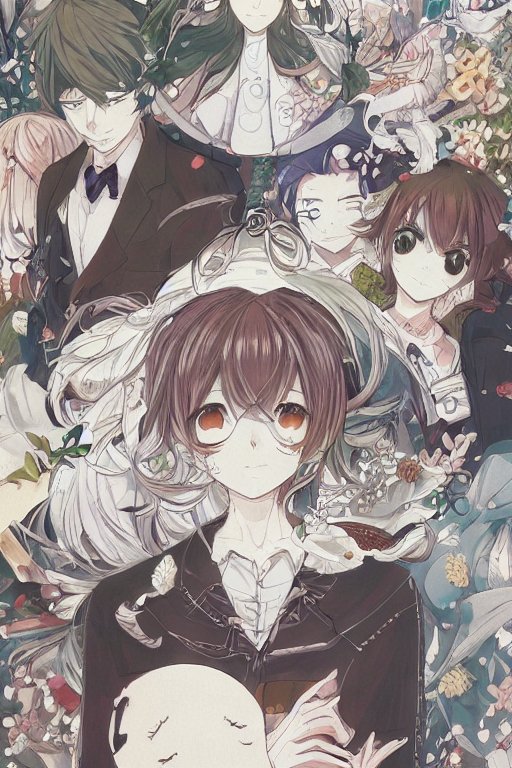

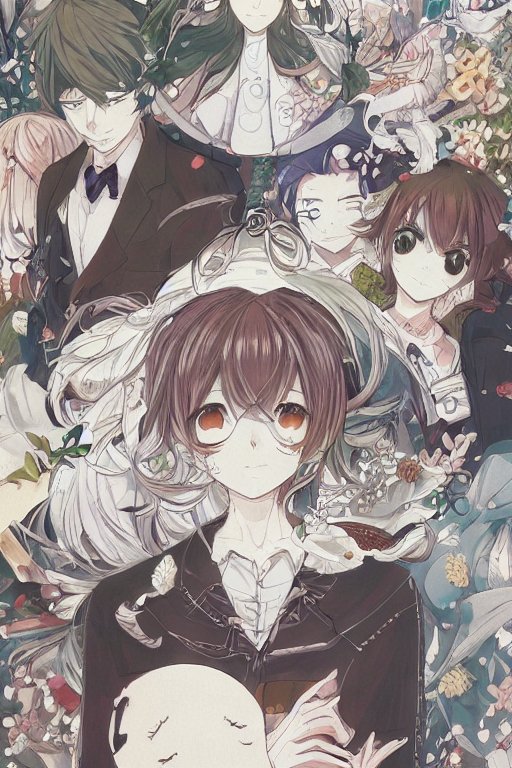

Examples

Each image was diffused for 50 steps using K. Crowson's k-lms method (from the k-diffusion repo).
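For readers using the diffusers port, a comparable setup can be sketched with `LMSDiscreteScheduler`, which diffusers documents as a port of the k-diffusion LMS sampler. This is an approximation of the settings described above, not the exact procedure used for the example images; the helper names `make_klms_pipeline` and `generate` are hypothetical, and the scheduler needs scipy installed.

```python
MODEL_ID = "naclbit/trinart_stable_diffusion_v2"
NUM_STEPS = 50  # step count stated for the example images


def make_klms_pipeline(revision: str = "diffusers-60k"):
    """Build a text-to-image pipeline that samples with the LMS scheduler."""
    # Imported lazily so this module can be loaded without diffusers installed.
    from diffusers import LMSDiscreteScheduler, StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(MODEL_ID, revision=revision)
    # Swap the default scheduler for LMS, reusing the existing noise schedule.
    pipe.scheduler = LMSDiscreteScheduler.from_config(pipe.scheduler.config)
    return pipe.to("cuda")


def generate(pipe, prompt: str, steps: int = NUM_STEPS):
    """Run the pipeline for `steps` denoising steps and return the first image."""
    return pipe(prompt, num_inference_steps=steps).images[0]
```

A typical call would be `generate(make_klms_pipeline(), "A magical dragon flying in front of the Himalaya in manga style")`.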

Credits

- Sta, AI Novelist Dev (https://ai-novel.com/) @ Bit192, Inc.

- Stable Diffusion - Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bjorn

📄 License

CreativeML OpenRAIL-M