Sd15.controlnet.depth

A ControlNet depth adapter for Stable Diffusion 1.5 that steers image generation with depth maps


Release Time : 10/8/2024

Model Overview

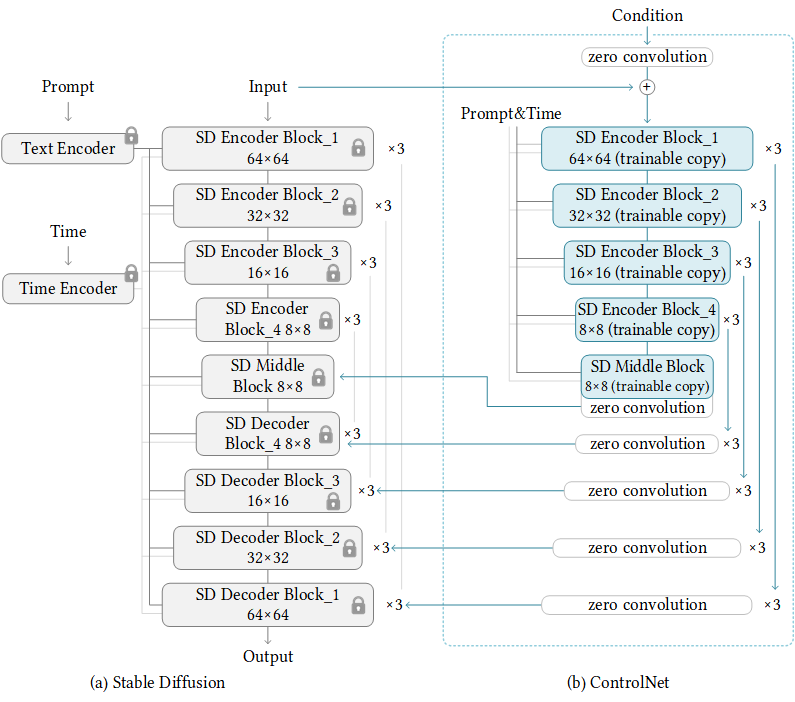

This model is a depth adapter built on the ControlNet architecture. It takes an input depth map as a conditioning signal and guides Stable Diffusion 1.5 to generate images whose geometry follows that depth structure. It is suited to image generation tasks that need precise control over scene geometry.
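The depth map reaches the pipeline as an ordinary 8-bit RGB image, so raw depth data usually needs converting first. The following is a minimal sketch, assuming NumPy and assuming this adapter follows the MiDaS convention used by the original ControlNet depth models (nearer surfaces are brighter). The function `depth_to_control_image` and its `near_is_small` flag are hypothetical helpers, not part of any library.

```python
import numpy as np


def depth_to_control_image(depth, near_is_small=True):
    """Convert a raw (H, W) depth array into an 8-bit, 3-channel control image.

    Assumption: the model expects near-is-bright maps. If `depth` stores
    distance (small value = near), it is inverted so near pixels become white.
    """
    d = np.asarray(depth, dtype=np.float64)
    if d.ndim != 2:
        raise ValueError("expected a 2-D depth array")
    lo, hi = d.min(), d.max()
    if hi == lo:
        norm = np.zeros_like(d)  # flat map: no depth structure to encode
    else:
        norm = (d - lo) / (hi - lo)  # rescale to [0, 1]
        if near_is_small:
            norm = 1.0 - norm  # nearest distance -> 1.0 -> white
    gray = np.round(norm * 255).astype(np.uint8)
    # Replicate the single channel so the result is an (H, W, 3) RGB array.
    return np.stack([gray, gray, gray], axis=-1)


control = depth_to_control_image([[1.0, 2.0], [3.0, 5.0]])
```

The nearest pixel (distance 1.0) maps to 255 and the farthest (5.0) to 0. If Pillow is installed, `PIL.Image.fromarray(control)` turns the array into an image object a pipeline can accept.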

Model Features

Depth Condition Control

Precisely control the geometric structure and scene layout of generated images through input depth maps

Stable Diffusion Compatibility

Built on the Stable Diffusion 1.5 architecture and designed to preserve the base model's image generation quality

Fine-Grained Control

Gives users fine-grained control over the composition of generated images through depth maps
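One way to exercise this control is with the Hugging Face diffusers library, whose ControlNet pipeline exposes a `controlnet_conditioning_scale` argument that weights the depth guidance. The sketch below is illustrative only: the repository ids `lllyasviel/sd-controlnet-depth` and `stable-diffusion-v1-5/stable-diffusion-v1-5` are assumptions standing in for this model and its base, since the card gives no download location, and a CUDA GPU is assumed. The helper names `snap_to_multiple_of_8` and `generate_from_depth` are hypothetical.

```python
def snap_to_multiple_of_8(width, height):
    """Round dimensions down to multiples of 8 (minimum 8), as SD 1.5 requires."""
    return max(8, width - width % 8), max(8, height - height % 8)


def generate_from_depth(control_image, prompt, scale=1.0, steps=30, seed=0):
    """Render `prompt` guided by `control_image`, a PIL depth image.

    Assumptions: diffusers and torch are installed, a CUDA GPU is available,
    and the repo ids below stand in for the real model locations.
    """
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    # Assumed repo ids; substitute the actual location of this model.
    controlnet = ControlNetModel.from_pretrained(
        "lllyasviel/sd-controlnet-depth", torch_dtype=torch.float16
    )
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        "stable-diffusion-v1-5/stable-diffusion-v1-5",
        controlnet=controlnet,
        torch_dtype=torch.float16,
    ).to("cuda")

    # PIL reports size as (width, height); resize to VAE-compatible dimensions.
    width, height = snap_to_multiple_of_8(*control_image.size)
    control_image = control_image.resize((width, height))
    generator = torch.Generator("cuda").manual_seed(seed)  # reproducible noise
    result = pipe(
        prompt,
        image=control_image,
        width=width,
        height=height,
        num_inference_steps=steps,
        controlnet_conditioning_scale=scale,  # lower = looser adherence to depth
        generator=generator,
    )
    return result.images[0]
```

Keeping the seed fixed while sweeping `scale` (for example 0.5 versus 1.0) isolates how strongly the depth map constrains the layout, since the initial noise is identical across runs.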

Model Capabilities

Depth-Conditioned Image Generation

Image-to-Image Translation

Artistic Creation Assistance

Scene Layout Control

Use Cases

Digital Art Creation

Architectural Visualization

Generate artistically styled architectural renderings based on architectural depth maps

Apply different artistic styles while preserving the original structure

Scene Design

Generate scenes with specific spatial layouts by controlling depth information

Precisely control the front-to-back relationships of objects in the scene
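Front-to-back order can be set directly in a hand-drawn depth sketch: whichever object is brightest where two shapes overlap reads as the one in front. The following is a minimal sketch, assuming NumPy and the near-is-bright convention; `layered_depth_sketch` and its `(top, left, bottom, right, nearness)` object format are hypothetical.

```python
import numpy as np


def layered_depth_sketch(height, width, objects, horizon=0.4):
    """Build a near-is-bright depth sketch from a ground plane plus rectangles.

    objects: list of (top, left, bottom, right, nearness), nearness in [0, 1]
    with 1 = closest. Objects are drawn far-to-near, so a nearer object
    occludes a farther one where they overlap (painter's algorithm).
    """
    canvas = np.zeros((height, width), dtype=np.float64)
    start = int(height * horizon)  # rows above the horizon stay black (far)
    rows = height - start
    if rows > 0:
        # Ground plane brightens (comes closer) toward the bottom of the frame.
        canvas[start:, :] = np.linspace(0.0, 1.0, rows)[:, None]
    # Sort by nearness so input order does not matter.
    for top, left, bottom, right, nearness in sorted(objects, key=lambda o: o[4]):
        canvas[top:bottom, left:right] = nearness
    return np.round(canvas * 255).astype(np.uint8)


boxes = [(20, 20, 50, 50, 0.8), (10, 10, 40, 40, 0.2)]
sketch = layered_depth_sketch(64, 64, boxes)
```

Where the two boxes overlap, the pixel takes the nearer box's value, so the 0.8 box is rendered in front of the 0.2 box regardless of list order.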

Game Development

Concept Art Generation

Quickly generate high-quality game scene concept art from simple depth sketches

Accelerate the game art development process
