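🚀 OpenOrca-Platypus2-13B

OpenOrca-Platypus2-13B is a merge of garage-bAInd/Platypus2-13B and Open-Orca/OpenOrcaxOpenChat-Preview2-13B. It brings together the strengths of both models' training data (STEM and logic data from Open-Platypus, and a filtered GPT-4 subset of OpenOrca) and performs well on the benchmarks reported below.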

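🚀 Quick Start

We are training more models; keep an eye out for upcoming releases from our organization with exciting partners. We will also post sneak peeks on Discord, which you can find here: https://AlignmentLab.ai

To visualize our full (pre-filtering) dataset, check out our Nomic Atlas Map.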

✨ 主要特性

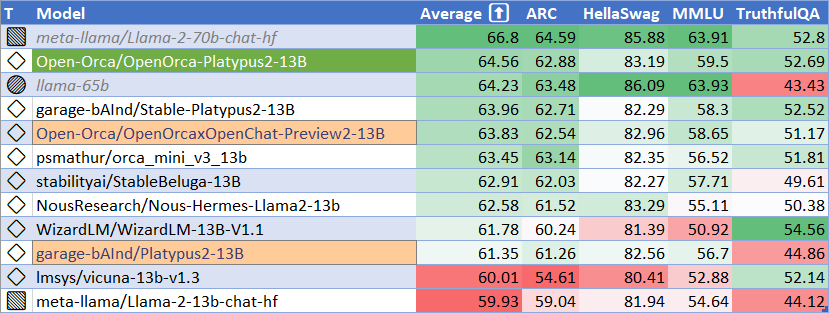

评估表现

HuggingFace排行榜表现

| Metric | Value |
|---|---|
| MMLU (5-shot) | 59.5 |
| ARC (25-shot) | 62.88 |
| HellaSwag (10-shot) | 83.19 |
| TruthfulQA (0-shot) | 52.69 |
| Average | 64.56 |

We use the [Language Model Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness) to run the benchmark tests above, using the same version as the HuggingFace LLM Leaderboard.
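As a quick sanity check on the leaderboard table, the reported average can be reproduced as the unweighted mean of the four benchmark scores. This is a minimal sketch that assumes the leaderboard average is a simple arithmetic mean; the `scores` dictionary is just the table transcribed into Python.

```python
# Scores transcribed from the HuggingFace Leaderboard table.
scores = {
    "MMLU (5-shot)": 59.5,
    "ARC (25-shot)": 62.88,
    "HellaSwag (10-shot)": 83.19,
    "TruthfulQA (0-shot)": 52.69,
}

# Assumption: the leaderboard average is the unweighted mean of the four benchmarks.
average = sum(scores.values()) / len(scores)
print(f"Average: {average:.3f}")  # prints: Average: 64.565
```

The exact mean is 64.565, consistent with the 64.56 shown in the table; the two differ only in how the final digit was rounded.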

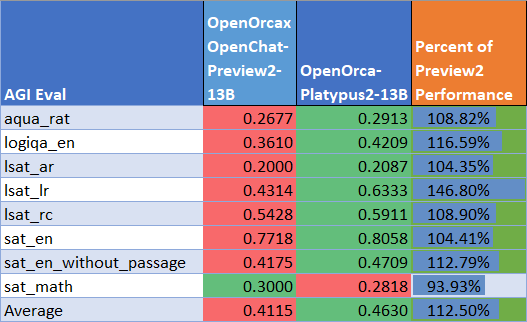

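AGIEval Performance

We compared our results to the base Preview2 model (using LM Evaluation Harness). The model reaches 112% of the base model's performance on AGIEval, with an average score of 0.463. Much of this gain comes from a substantial improvement in LSAT logical reasoning performance.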

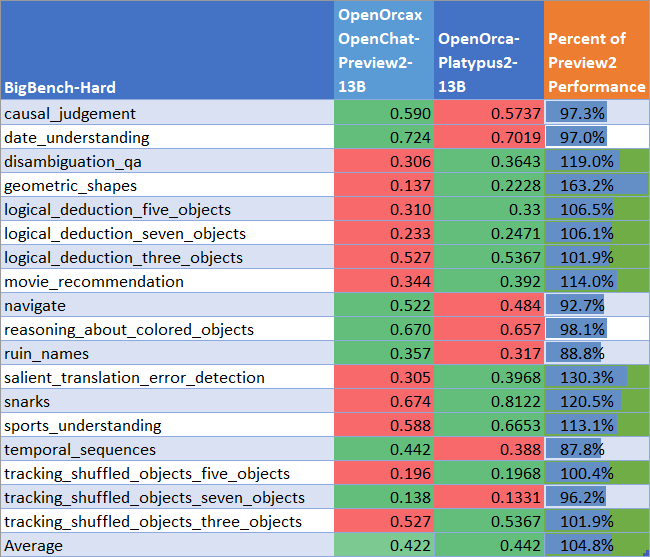

BigBench-Hard Performance

We compared our results to the base Preview2 model (using LM Evaluation Harness). The model reaches 105% of the base model's performance on BigBench-Hard, with an average score of 0.442.
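The AGIEval and BigBench-Hard figures are reported relative to the base Preview2 model. The sketch below shows what baseline average each percentage would imply, under the assumption (not stated in the results) that the percentage is simply 100 * model average / baseline average. If the percentages were instead computed per task and then averaged, these implied baselines are only rough estimates; `implied_baseline` is a hypothetical helper, not part of any evaluation tool.

```python
def implied_baseline(model_avg: float, relative_pct: float) -> float:
    """Hypothetical helper: back out a baseline average from a relative score.

    Assumes relative_pct == 100 * model_avg / baseline_avg.
    """
    return model_avg / (relative_pct / 100.0)


# Averages and relative percentages as reported for this model.
agieval_base = implied_baseline(0.463, 112)
bbh_base = implied_baseline(0.442, 105)

print(f"Implied AGIEval baseline average: {agieval_base:.3f}")  # prints: ... 0.413
print(f"Implied BigBench-Hard baseline average: {bbh_base:.3f}")  # prints: ... 0.421
```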

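📦 Installation Guide

Reproducing Evaluation Results (for the HuggingFace Leaderboard Evaluations)

Install LM Evaluation Harness:

```shell
# Clone the repository
git clone https://github.com/EleutherAI/lm-evaluation-harness.git

# Change into the repository directory
cd lm-evaluation-harness

# Check out the correct commit
git checkout b281b0921b636bc36ad05c0b0b0763bd6dd43463

# Install
pip install -e .
```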

Each task was evaluated on a single A100-80GB GPU.

ARC:

```shell
python main.py --model hf-causal-experimental --model_args pretrained=Open-Orca/OpenOrca-Platypus2-13B --tasks arc_challenge --batch_size 1 --no_cache --write_out --output_path results/OpenOrca-Platypus2-13B/arc_challenge_25shot.json --device cuda --num_fewshot 25
```

HellaSwag:

```shell
python main.py --model hf-causal-experimental --model_args pretrained=Open-Orca/OpenOrca-Platypus2-13B --tasks hellaswag --batch_size 1 --no_cache --write_out --output_path results/OpenOrca-Platypus2-13B/hellaswag_10shot.json --device cuda --num_fewshot 10
```

MMLU:

```shell
python main.py --model hf-causal-experimental --model_args pretrained=Open-Orca/OpenOrca-Platypus2-13B --tasks hendrycksTest-* --batch_size 1 --no_cache --write_out --output_path results/OpenOrca-Platypus2-13B/mmlu_5shot.json --device cuda --num_fewshot 5
```

TruthfulQA:

```shell
python main.py --model hf-causal-experimental --model_args pretrained=Open-Orca/OpenOrca-Platypus2-13B --tasks truthfulqa_mc --batch_size 1 --no_cache --write_out --output_path results/OpenOrca-Platypus2-13B/truthfulqa_0shot.json --device cuda
```
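The four evaluation commands differ only in the task name, the number of few-shot examples, and the output file. The sketch below, a hypothetical convenience script rather than part of LM Evaluation Harness, builds the same commands from a small table and optionally runs them with Python's `subprocess` module. It assumes it is run from the root of the `lm-evaluation-harness` checkout at the commit pinned in the installation steps, and it uses only the flags that appear in the commands above.

```python
import shlex
import subprocess

MODEL = "Open-Orca/OpenOrca-Platypus2-13B"

# (task pattern, number of few-shot examples, output file stem), mirroring the commands above.
TASKS = [
    ("arc_challenge", 25, "arc_challenge_25shot"),
    ("hellaswag", 10, "hellaswag_10shot"),
    ("hendrycksTest-*", 5, "mmlu_5shot"),
    ("truthfulqa_mc", 0, "truthfulqa_0shot"),
]


def build_command(task: str, num_fewshot: int, stem: str) -> list[str]:
    """Build one LM Evaluation Harness invocation as an argument list."""
    cmd = [
        "python", "main.py",
        "--model", "hf-causal-experimental",
        "--model_args", f"pretrained={MODEL}",
        "--tasks", task,
        "--batch_size", "1",
        "--no_cache",
        "--write_out",
        "--output_path", f"results/OpenOrca-Platypus2-13B/{stem}.json",
        "--device", "cuda",
    ]
    # The TruthfulQA command omits --num_fewshot (0-shot), so only add it when > 0.
    if num_fewshot > 0:
        cmd += ["--num_fewshot", str(num_fewshot)]
    return cmd


def run_all(dry_run: bool = True) -> list[list[str]]:
    """Print every command; launch them one by one only when dry_run is False."""
    commands = [build_command(*spec) for spec in TASKS]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    run_all(dry_run=True)
```

Passing the arguments as a list (rather than one shell string) also keeps the `hendrycksTest-*` pattern from being expanded by the shell before it reaches `main.py`. Set `dry_run=False` to actually launch the runs.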

📚 Detailed Documentation

Model Details

| Attribute | Details |
|---|---|
| Trained by | Platypus2-13B trained by Cole Hunter and Ariel Lee; OpenOrcaxOpenChat-Preview2-13B trained by Open-Orca |
| Model type | OpenOrca-Platypus2-13B is an auto-regressive language model based on the Llama 2 transformer architecture |
| Language(s) | English |
| License for Platypus2-13B base weights | Non-Commercial Creative Commons license ([CC BY-NC-4.0](https://creativecommons.org/licenses/by-nc/4.0/)) |
| License for OpenOrcaxOpenChat-Preview2-13B base weights | Llama 2 Commercial |

Prompt Template

Prompt template for the base Platypus2-13B

```
### Instruction:

<prompt> (without the <>)

### Response:
```
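For programmatic use, the Platypus2-13B template can be filled in with a small helper. This is a minimal sketch; `build_platypus_prompt` is a hypothetical name, and it assumes the blank-line spacing shown in the template and that the model's answer is generated immediately after `### Response:`.

```python
def build_platypus_prompt(instruction: str) -> str:
    """Fill the Platypus2-13B prompt template with a user instruction (hypothetical helper)."""
    # The instruction replaces <prompt>; the angle brackets themselves are not included.
    return f"### Instruction:\n\n{instruction.strip()}\n\n### Response:\n"


prompt = build_platypus_prompt("Explain why the sky is blue in two sentences.")
print(prompt)
```

The resulting string can then be tokenized and passed to `generate` (for example via `AutoTokenizer` and `AutoModelForCausalLM` from Hugging Face `transformers`); the sketch deliberately stops at prompt construction so it runs without downloading the 13B weights.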

Prompt template for the base OpenOrcaxOpenChat-Preview2-13B

OpenChat Llama2 V1: see OpenOrcaxOpenChat-Preview2-13B for more information.

Training Details

Training Datasets

garage-bAInd/Platypus2-13B was trained on the STEM- and logic-based dataset [garage-bAInd/Open-Platypus](https://huggingface.co/datasets/garage-bAInd/Open-Platypus).

Please see our paper and [project webpage](https://platypus-llm.github.io) for additional information.

Open-Orca/OpenOrcaxOpenChat-Preview2-13B was trained on a refined subset of most of the GPT-4 data in the [OpenOrca dataset](https://huggingface.co/datasets/Open-Orca/OpenOrca).

Training Procedure

Open-Orca/Platypus2-13B was instruction fine-tuned using LoRA on 1x A100-80GB. For training details and inference instructions, please see the Platypus GitHub repo.
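LoRA (Hu et al., 2021) freezes a pretrained weight matrix W0 and learns only a low-rank update, so an adapted layer computes h = W0 x + (alpha / r) B A x, where A is r x d_in, B is d_out x r, and the rank r is much smaller than the layer width. The toy sketch below illustrates that idea in plain Python; `LoRALinear`, its initialization scale, and the example sizes are illustrative assumptions, not the Platypus training code or its hyperparameters.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


class LoRALinear:
    """Toy LoRA-adapted linear layer: h = W0 x + (alpha / r) * B (A x).

    W0 (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r) would be trained.
    """

    def __init__(self, W0, r, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W0), len(W0[0])
        self.W0 = W0
        self.scale = alpha / r
        # A gets small random values; B starts at zero so the adapter is a no-op at init.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W0, x)                     # frozen path
        update = matvec(self.B, matvec(self.A, x))    # low-rank path, rank r
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


W0 = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
layer = LoRALinear(W0, r=1, alpha=2)
print(layer.forward([1.0, 2.0, 3.0]))  # prints: [7.0, -1.0] (same as W0 x at init)
print(layer.trainable_params())        # prints: 5 (vs. 6 entries in W0)
```

Because B starts at zero, the adapted layer initially reproduces the frozen layer exactly, and only the r * (d_in + d_out) entries of A and B are updated during fine-tuning. After training, (alpha / r) B A can be added into W0, so inference needs no extra computation.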

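Limitations and Bias

Llama 2 and its fine-tuned variants are a new technology that carries risks with use. Testing conducted to date has been in English and has not covered, nor could it cover, all scenarios. For these reasons, as with all LLMs, the potential outputs of Llama 2 and any fine-tuned variant cannot be predicted in advance, and the model may in some instances produce inaccurate, biased, or otherwise objectionable responses to user prompts. Therefore, before deploying any application of a Llama 2 variant, developers should perform safety testing and tuning tailored to their specific application of the model.

Please see the [Responsible Use Guide](https://ai.meta.com/llama/responsible-use-guide/).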

🔧 Technical Details

Citation

```bibtex
@software{hunterlee2023orcaplaty1,
  title = {OpenOrcaPlatypus: Llama2-13B Model Instruct-tuned on Filtered OpenOrcaV1 GPT-4 Dataset and Merged with divergent STEM and Logic Dataset Model},
  author = {Ariel N. Lee and Cole J. Hunter and Nataniel Ruiz and Bleys Goodson and Wing Lian and Guan Wang and Eugene Pentland and Austin Cook and Chanvichet Vong and "Teknium"},
  year = {2023},
  publisher = {HuggingFace},
  journal = {HuggingFace repository},
  howpublished = {\url{https://huggingface.co/Open-Orca/OpenOrca-Platypus2-13B}},
}

@article{platypus2023,
  title = {Platypus: Quick, Cheap, and Powerful Refinement of LLMs},
  author = {Ariel N. Lee and Cole J. Hunter and Nataniel Ruiz},
  journal = {arXiv preprint arXiv:2308.07317},
  year = {2023}
}

@software{OpenOrcaxOpenChatPreview2,
  title = {OpenOrcaxOpenChatPreview2: Llama2-13B Model Instruct-tuned on Filtered OpenOrcaV1 GPT-4 Dataset},
  author = {Guan Wang and Bleys Goodson and Wing Lian and Eugene Pentland and Austin Cook and Chanvichet Vong and "Teknium"},
  year = {2023},
  publisher = {HuggingFace},
  journal = {HuggingFace repository},
  howpublished = {\url{https://huggingface.co/Open-Orca/OpenOrcaxOpenChat-Preview2-13B}},
}

@software{openchat,
  title = {{OpenChat: Advancing Open-source Language Models with Imperfect Data}},
  author = {Wang, Guan and Cheng, Sijie and Yu, Qiying and Liu, Changling},
  doi = {10.5281/zenodo.8105775},
  url = {https://github.com/imoneoi/openchat},
  version = {pre-release},
  year = {2023},
  month = {7},
}

@misc{mukherjee2023orca,
  title = {Orca: Progressive Learning from Complex Explanation Traces of GPT-4},
  author = {Subhabrata Mukherjee and Arindam Mitra and Ganesh Jawahar and Sahaj Agarwal and Hamid Palangi and Ahmed Awadallah},
  year = {2023},
  eprint = {2306.02707},
  archivePrefix = {arXiv},
  primaryClass = {cs.CL}
}

@misc{touvron2023llama,
  title = {Llama 2: Open Foundation and Fine-Tuned Chat Models},
  author = {Hugo Touvron and Louis Martin and Kevin Stone and Peter Albert and Amjad Almahairi and Yasmine Babaei and Nikolay Bashlykov and Soumya Batra and Prajjwal Bhargava and Shruti Bhosale and Dan Bikel and Lukas Blecher and Cristian Canton Ferrer and Moya Chen and Guillem Cucurull and David Esiobu and Jude Fernandes and Jeremy Fu and Wenyin Fu and Brian Fuller and Cynthia Gao and Vedanuj Goswami and Naman Goyal and Anthony Hartshorn and Saghar Hosseini and Rui Hou and Hakan Inan and Marcin Kardas and Viktor Kerkez and Madian Khabsa and Isabel Kloumann and Artem Korenev and Punit Singh Koura and Marie-Anne Lachaux and Thibaut Lavril and Jenya Lee and Diana Liskovich and Yinghai Lu and Yuning Mao and Xavier Martinet and Todor Mihaylov and Pushkar Mishra and Igor Molybog and Yixin Nie and Andrew Poulton and Jeremy Reizenstein and Rashi Rungta and Kalyan Saladi and Alan Schelten and Ruan Silva and Eric Michael Smith and Ranjan Subramanian and Xiaoqing Ellen Tan and Binh Tang and Ross Taylor and Adina Williams and Jian Xiang Kuan and Puxin Xu and Zheng Yan and Iliyan Zarov and Yuchen Zhang and Angela Fan and Melanie Kambadur and Sharan Narang and Aurelien Rodriguez and Robert Stojnic and Sergey Edunov and Thomas Scialom},
  year = {2023},
  eprint = {2307.09288},
  archivePrefix = {arXiv}
}

@misc{longpre2023flan,
  title = {The Flan Collection: Designing Data and Methods for Effective Instruction Tuning},
  author = {Shayne Longpre and Le Hou and Tu Vu and Albert Webson and Hyung Won Chung and Yi Tay and Denny Zhou and Quoc V. Le and Barret Zoph and Jason Wei and Adam Roberts},
  year = {2023},
  eprint = {2301.13688},
  archivePrefix = {arXiv},
  primaryClass = {cs.AI}
}

@article{hu2021lora,
  title = {LoRA: Low-Rank Adaptation of Large Language Models},
  author = {Hu, Edward J. and Shen, Yelong and Wallis, Phillip and Allen-Zhu, Zeyuan and Li, Yuanzhi and Wang, Shean and Chen, Weizhu},
  journal = {CoRR},
  year = {2021}
}
```

📄 License

This project is licensed under CC BY-NC-4.0.
