🚀 Latent Consistency Model (LCM) LoRA: SDXL

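Latent Consistency Model (LCM) LoRA was proposed by Simian Luo, Yiqin Tan, Suraj Patil, Daniel Gu et al. in LCM-LoRA: A universal Stable-Diffusion Acceleration Module. It is a distilled consistency adapter for stable-diffusion-xl-base-1.0 that reduces the number of inference steps to as few as 2 to 8.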

| Property | Details |
|---|---|
| Library | diffusers |
| Base model | stabilityai/stable-diffusion-xl-base-1.0 |
| Tags | lora, text-to-image |
| License | openrail++ |

| Model | Parameters (millions) |
|---|---|
| lcm-lora-sdv1-5 | 67.5 |
| lcm-lora-ssd-1b | 105 |
| lcm-lora-sdxl | 197 |
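As a rough guide to what these parameter counts mean on disk, the sketch below converts them to an approximate weight size. It assumes the weights are stored in fp16 (2 bytes per parameter) and ignores file-format overhead; `approx_size_mb` is a hypothetical helper, not part of any library.

```python
# Parameter counts in millions, copied from the table above.
ADAPTER_PARAMS_M = {
    "lcm-lora-sdv1-5": 67.5,
    "lcm-lora-ssd-1b": 105.0,
    "lcm-lora-sdxl": 197.0,
}


def approx_size_mb(params_millions: float, bytes_per_param: int = 2) -> float:
    """Estimate weight size in MB (10**6 bytes).

    Millions of parameters times bytes per parameter gives millions of bytes.
    The default of 2 bytes per parameter assumes fp16 storage.
    """
    return params_millions * bytes_per_param


for name, params in ADAPTER_PARAMS_M.items():
    print(f"{name}: ~{approx_size_mb(params):.0f} MB at fp16")
```

Passing `bytes_per_param=4` gives the corresponding fp32 estimate.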

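🚀 Quick start

LCM-LoRA is supported in the 🤗 Hugging Face Diffusers library from version v0.23.0 onwards. To run the model, first install the latest version of the Diffusers library along with peft, accelerate and transformers: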

pip install --upgrade pip

pip install --upgrade diffusers transformers accelerate peft
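To confirm that the installed Diffusers release meets the v0.23.0 minimum mentioned above, a check along the following lines may help. This is a minimal sketch: `parse_release`, `meets_minimum` and `diffusers_is_recent_enough` are hypothetical helpers, and the parser deliberately ignores pre-release suffixes such as `rc1` or `.dev0`.

```python
from importlib.metadata import PackageNotFoundError, version

MIN_DIFFUSERS = "0.23.0"  # first release with LCM-LoRA support, per the text above


def parse_release(ver: str) -> tuple:
    """Turn '0.23.1' or '0.24.0.dev0' into a 3-part tuple of leading numeric components."""
    parts = []
    for piece in ver.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # stop at the first non-numeric component, e.g. 'dev0'
        parts.append(int(digits))
    while len(parts) < 3:
        parts.append(0)  # pad so that '0.23' compares equal to '0.23.0'
    return tuple(parts)


def meets_minimum(installed: str, minimum: str = MIN_DIFFUSERS) -> bool:
    """Compare two version strings by their numeric release components only."""
    return parse_release(installed) >= parse_release(minimum)


def diffusers_is_recent_enough() -> bool:
    """Return False when diffusers is missing or older than the minimum."""
    try:
        return meets_minimum(version("diffusers"))
    except PackageNotFoundError:
        return False


print("diffusers >= 0.23.0:", diffusers_is_recent_enough())
```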

⚠️ Important note

For detailed usage examples, we recommend checking the official LCM-LoRA documentation.

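💻 Usage examples

Basic usage

Text-to-image

The adapter can be loaded together with its base model, stabilityai/stable-diffusion-xl-base-1.0. Next, the scheduler needs to be changed to LCMScheduler, after which the number of inference steps can be reduced to just 2 to 8. Make sure to either disable guidance_scale or use a value between 1.0 and 2.0.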

import torch

from diffusers import LCMScheduler, AutoPipelineForText2Image

model_id = "stabilityai/stable-diffusion-xl-base-1.0"

adapter_id = "latent-consistency/lcm-lora-sdxl"

pipe = AutoPipelineForText2Image.from_pretrained(model_id, torch_dtype=torch.float16, variant="fp16")

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

pipe.to("cuda")

# load and fuse lcm lora

pipe.load_lora_weights(adapter_id)

pipe.fuse_lora()

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

# disable guidance_scale by passing 0

image = pipe(prompt=prompt, num_inference_steps=4, guidance_scale=0).images[0]
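The step and guidance recommendations in this section can be expressed as a small sanity check to run before calling the pipeline. This is only a sketch: `check_lcm_settings` is a hypothetical helper, not a Diffusers API, and it treats `guidance_scale=0` as "disabled" because that is the value the example above uses.

```python
def check_lcm_settings(num_inference_steps: int, guidance_scale: float) -> list:
    """Return human-readable warnings; an empty list means the settings follow the guidance."""
    warnings = []
    if not 2 <= num_inference_steps <= 8:
        warnings.append(
            f"num_inference_steps={num_inference_steps} is outside the suggested 2-8 range"
        )
    guidance_disabled = guidance_scale == 0
    if not (guidance_disabled or 1.0 <= guidance_scale <= 2.0):
        warnings.append(
            f"guidance_scale={guidance_scale} is neither disabled (0) nor within 1.0-2.0"
        )
    return warnings


print(check_lcm_settings(4, 0))     # settings used in the example above
print(check_lcm_settings(30, 7.5))  # settings more typical of a non-LCM scheduler
```

The check is advisory only; values outside these ranges still run and may suit particular pipelines.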

Inpainting

LCM-LoRA can also be used for inpainting.

import torch

from diffusers import AutoPipelineForInpainting, LCMScheduler

from diffusers.utils import load_image, make_image_grid

pipe = AutoPipelineForInpainting.from_pretrained(

"diffusers/stable-diffusion-xl-1.0-inpainting-0.1",

torch_dtype=torch.float16,

variant="fp16",

).to("cuda")

# set scheduler

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

# load LCM-LoRA

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl")

pipe.fuse_lora()

# load base and mask image

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint.png").resize((1024, 1024))

mask_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint_mask.png").resize((1024, 1024))

prompt = "a castle on top of a mountain, highly detailed, 8k"

generator = torch.manual_seed(42)

image = pipe(

prompt=prompt,

image=init_image,

mask_image=mask_image,

generator=generator,

num_inference_steps=5,

guidance_scale=4,

).images[0]

make_image_grid([init_image, mask_image, image], rows=1, cols=3)

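Advanced usage

Combining with styled LoRAs

LCM-LoRA can be combined with other LoRAs to generate styled images in very few steps (4 to 8). In the following example, we combine LCM-LoRA with the papercut LoRA. To learn more about how to combine LoRAs, refer to this guide.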

import torch

from diffusers import DiffusionPipeline, LCMScheduler

pipe = DiffusionPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

variant="fp16",

torch_dtype=torch.float16

).to("cuda")

# set scheduler

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

# load LoRAs

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl", adapter_name="lcm")

pipe.load_lora_weights("TheLastBen/Papercut_SDXL", weight_name="papercut.safetensors", adapter_name="papercut")

# Combine LoRAs

pipe.set_adapters(["lcm", "papercut"], adapter_weights=[1.0, 0.8])

prompt = "papercut, a cute fox"

generator = torch.manual_seed(0)

image = pipe(prompt, num_inference_steps=4, guidance_scale=1, generator=generator).images[0]

image

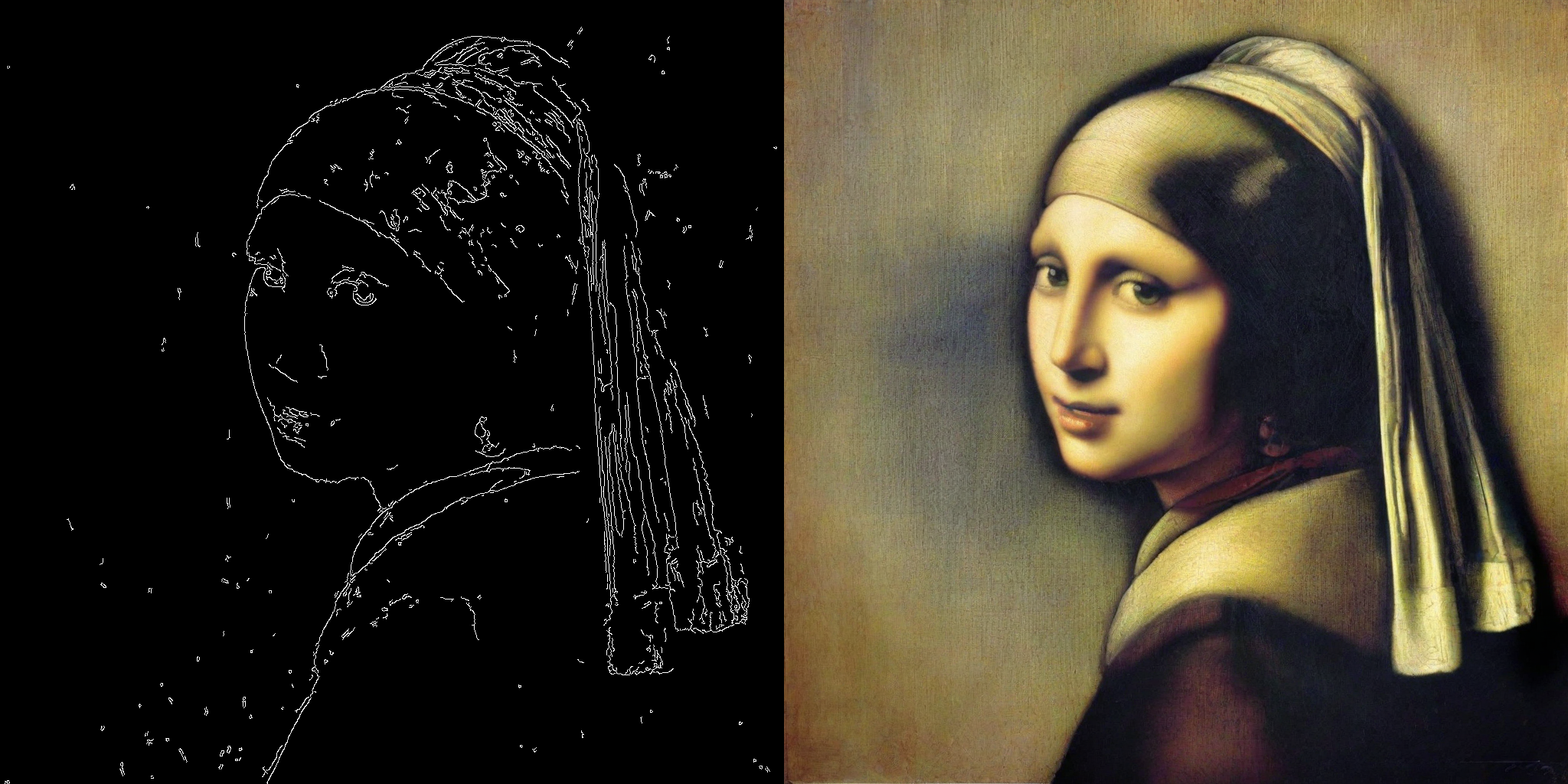
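One way to read the `adapter_weights=[1.0, 0.8]` argument in the example above is through the standard LoRA formulation, sketched below. It assumes each weight acts as a scalar multiplier on that adapter's low-rank update; the symbols ($W_0$ for a frozen base weight, $B_i A_i$ for adapter $i$'s low-rank factors, $\alpha_i / r_i$ for its built-in LoRA scaling, $w_i$ for the user-supplied weight) are introduced here for illustration and do not come from the original card.

```latex
W_{\text{eff}} \;=\; W_0 \;+\; \sum_{i \in \{\text{lcm},\, \text{papercut}\}} w_i \,\frac{\alpha_i}{r_i}\, B_i A_i,
\qquad w_{\text{lcm}} = 1.0,\quad w_{\text{papercut}} = 0.8
```

Under this reading, lowering $w_{\text{papercut}}$ weakens the style while leaving the LCM acceleration term at full strength.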

ControlNet

import torch

import cv2

import numpy as np

from PIL import Image

from diffusers import StableDiffusionXLControlNetPipeline, ControlNetModel, LCMScheduler

from diffusers.utils import load_image, make_image_grid

image = load_image(

"https://hf.co/datasets/huggingface/documentation-images/resolve/main/diffusers/input_image_vermeer.png"

).resize((1024, 1024))

image = np.array(image)

low_threshold = 100

high_threshold = 200

image = cv2.Canny(image, low_threshold, high_threshold)

image = image[:, :, None]

image = np.concatenate([image, image, image], axis=2)

canny_image = Image.fromarray(image)

controlnet = ControlNetModel.from_pretrained("diffusers/controlnet-canny-sdxl-1.0-small", torch_dtype=torch.float16, variant="fp16")

pipe = StableDiffusionXLControlNetPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

controlnet=controlnet,

torch_dtype=torch.float16,

safety_checker=None,

variant="fp16"

).to("cuda")

# set scheduler

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

# load LCM-LoRA

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl")

pipe.fuse_lora()

generator = torch.manual_seed(0)

image = pipe(

"picture of the mona lisa",

image=canny_image,

num_inference_steps=5,

guidance_scale=1.5,

controlnet_conditioning_scale=0.5,

cross_attention_kwargs={"scale": 1},

generator=generator,

).images[0]

make_image_grid([canny_image, image], rows=1, cols=2)

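⚠️ Important note

The inference parameters in this example may not work for every use case. We recommend trying different values for num_inference_steps, guidance_scale, controlnet_conditioning_scale and cross_attention_kwargs, and keeping the combination that gives the best result.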
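A simple way to explore these parameters is to enumerate a small grid of candidate values and run the pipeline once per combination. The sketch below only builds the keyword-argument dictionaries; the candidate values are illustrative assumptions rather than recommendations from the source, and `build_sweep` is a hypothetical helper.

```python
from itertools import product

# Candidate values are illustrative assumptions, not tuned recommendations.
SEARCH_SPACE = {
    "num_inference_steps": [4, 5, 8],
    "guidance_scale": [1.0, 1.5, 2.0],
    "controlnet_conditioning_scale": [0.3, 0.5, 0.8],
    "cross_attention_kwargs": [{"scale": 0.8}, {"scale": 1.0}],
}


def build_sweep(space: dict) -> list:
    """Expand a dict of candidate lists into one kwargs dict per combination."""
    names = list(space)
    return [
        dict(zip(names, values))
        for values in product(*(space[name] for name in names))
    ]


sweep = build_sweep(SEARCH_SPACE)
print(len(sweep), "combinations; first:", sweep[0])

# Each entry can then be unpacked into the pipeline call from the example above, e.g.
#   image = pipe("picture of the mona lisa", image=canny_image,
#                generator=generator, **kwargs).images[0]
```

Re-creating the generator with the same seed before each call keeps the comparison between combinations fair.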

T2I Adapter

This example shows how to use LCM-LoRA with the Canny T2I-Adapter and SDXL.

import torch

import cv2

import numpy as np

from PIL import Image

from diffusers import StableDiffusionXLAdapterPipeline, T2IAdapter, LCMScheduler

from diffusers.utils import load_image, make_image_grid

# Prepare image

# Detect the canny map in low resolution to avoid high-frequency details

image = load_image(

"https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/org_canny.jpg"

).resize((384, 384))

image = np.array(image)

low_threshold = 100

high_threshold = 200

image = cv2.Canny(image, low_threshold, high_threshold)

image = image[:, :, None]

image = np.concatenate([image, image, image], axis=2)

canny_image = Image.fromarray(image).resize((1024, 1024))

# load adapter

adapter = T2IAdapter.from_pretrained("TencentARC/t2i-adapter-canny-sdxl-1.0", torch_dtype=torch.float16, variant="fp16").to("cuda")

pipe = StableDiffusionXLAdapterPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

adapter=adapter,

torch_dtype=torch.float16,

variant="fp16",

).to("cuda")

# set scheduler

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

# load LCM-LoRA

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl")

prompt = "Mystical fairy in real, magic, 4k picture, high quality"

negative_prompt = "extra digit, fewer digits, cropped, worst quality, low quality, glitch, deformed, mutated, ugly, disfigured"

generator = torch.manual_seed(0)

image = pipe(

prompt=prompt,

negative_prompt=negative_prompt,

image=canny_image,

num_inference_steps=4,

guidance_scale=1.5,

adapter_conditioning_scale=0.8,

adapter_conditioning_factor=1,

generator=generator,

).images[0]

make_image_grid([canny_image, image], rows=1, cols=2)
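The `adapter_conditioning_factor=1` argument above is described in the Diffusers documentation as the fraction of timesteps during which the adapter is applied. The sketch below shows what that could mean for a short LCM schedule. It assumes the fraction is truncated to a whole number of leading steps, which is an assumption about the mapping rather than a statement of the library's exact behaviour; `steps_with_adapter` is a hypothetical helper.

```python
def steps_with_adapter(num_inference_steps: int, adapter_conditioning_factor: float) -> int:
    """Number of leading denoising steps that would receive adapter features.

    Assumes the fraction is truncated toward zero when it does not land on a whole step.
    """
    if not 0.0 <= adapter_conditioning_factor <= 1.0:
        raise ValueError("adapter_conditioning_factor is expected to lie in [0, 1]")
    return int(num_inference_steps * adapter_conditioning_factor)


for factor in (1.0, 0.5, 0.0):
    print(factor, "->", steps_with_adapter(4, factor), "of 4 steps")
```

With only 4 steps, each step is a quarter of the schedule, so small changes to the factor can switch the adapter off for a large share of the denoising process.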

📄 License

This project is licensed under the openrail++ license.
