🚀 Instruction-tuned Stable Diffusion for Cartoonization (Fine-tuned)

This pipeline is an instruction-tuned version of Stable Diffusion (v1.5), fine-tuned to cartoonize an input image in response to a text instruction.

🚀 Quick Start

You can use the following code to perform cartoonization with this model:

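```python
import torch
from diffusers import StableDiffusionInstructPix2PixPipeline
from diffusers.utils import load_image

model_id = "instruction-tuning-sd/cartoonizer"

# Load the pipeline in half precision and move it to the GPU.
# If the repository requires authentication, pass token=True
# (older diffusers releases used use_auth_token=True).
pipeline = StableDiffusionInstructPix2PixPipeline.from_pretrained(
    model_id, torch_dtype=torch.float16
).to("cuda")

# Download the example input image.
image_path = "https://hf.co/datasets/diffusers/diffusers-images-docs/resolve/main/mountain.png"
image = load_image(image_path)

# Run the edit with a text instruction and save the first result.
image = pipeline("Cartoonize the following image", image=image).images[0]
image.save("image.png")
```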
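If you call the pipeline repeatedly, a thin wrapper can make the main inference settings explicit. The sketch below is a hypothetical helper (`cartoonize` is not part of diffusers). It assumes the pipeline accepts the `num_inference_steps`, `guidance_scale`, and `image_guidance_scale` keyword arguments, as the InstructPix2Pix pipeline in diffusers does. The values shown are illustrative and not tuned for this model.

```python
def cartoonize(
    pipeline,
    image,
    prompt="Cartoonize the following image",
    num_inference_steps=20,      # illustrative value, not a tuned setting
    guidance_scale=7.5,          # how strongly to follow the text instruction
    image_guidance_scale=1.5,    # how closely to stay near the input image
):
    """Run an InstructPix2Pix-style pipeline and return the first output image.

    `pipeline` is any callable with the same call convention as
    StableDiffusionInstructPix2PixPipeline (hypothetical helper).
    """
    result = pipeline(
        prompt,
        image=image,
        num_inference_steps=num_inference_steps,
        guidance_scale=guidance_scale,
        image_guidance_scale=image_guidance_scale,
    )
    return result.images[0]
```

With a loaded pipeline and input image, `cartoonize(pipeline, image).save("image.png")` would produce the same kind of output as the snippet above, with the settings visible in one place.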

✨ Features

- Instruction-tuned: Based on the concept of FLAN and InstructPix2Pix, it can better follow specific instructions for image transformation.

- Fine-tuned for cartoonization: Trained on the instruction-tuning-sd/cartoonization dataset to achieve better cartoonization results.

📚 Documentation

Pipeline description

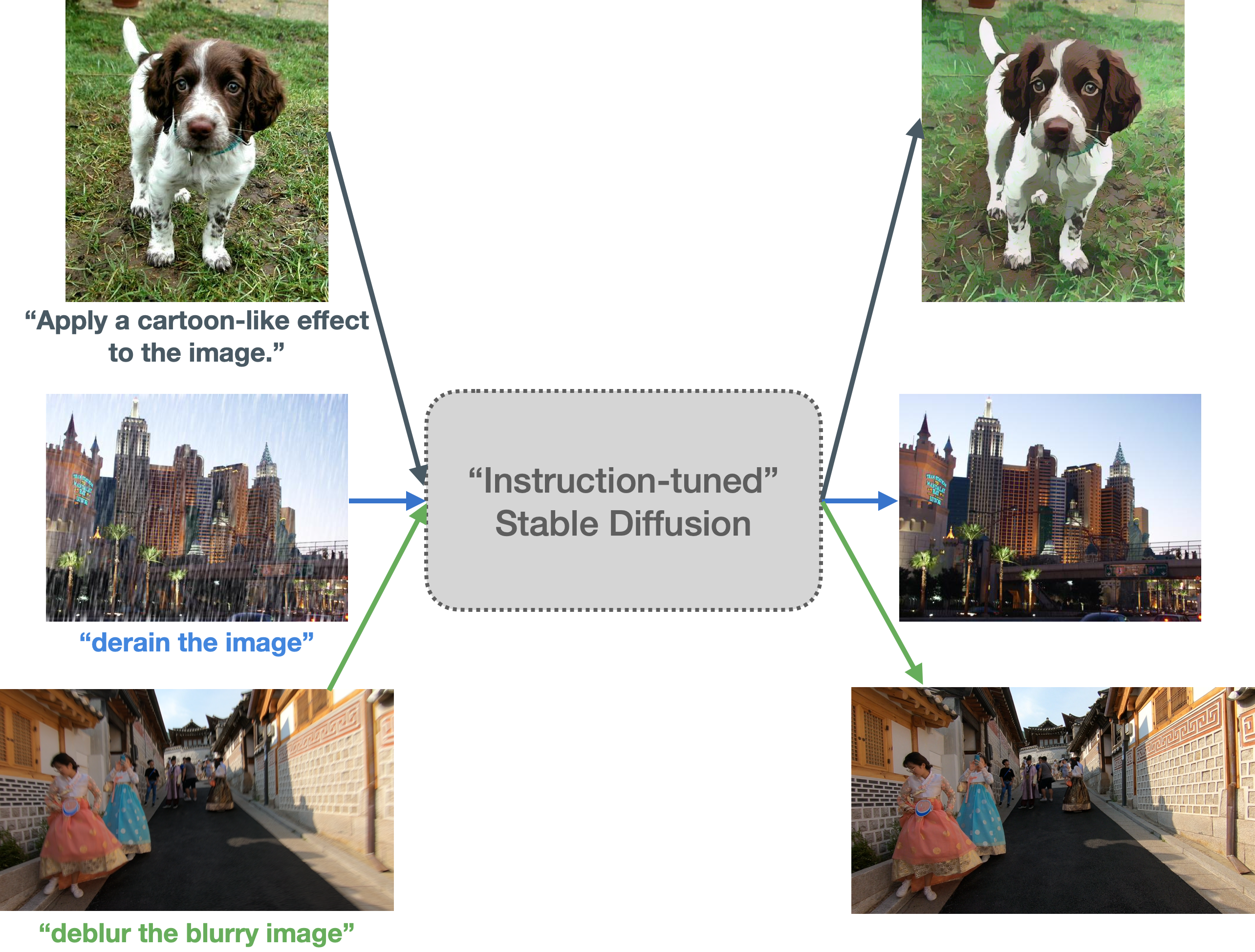

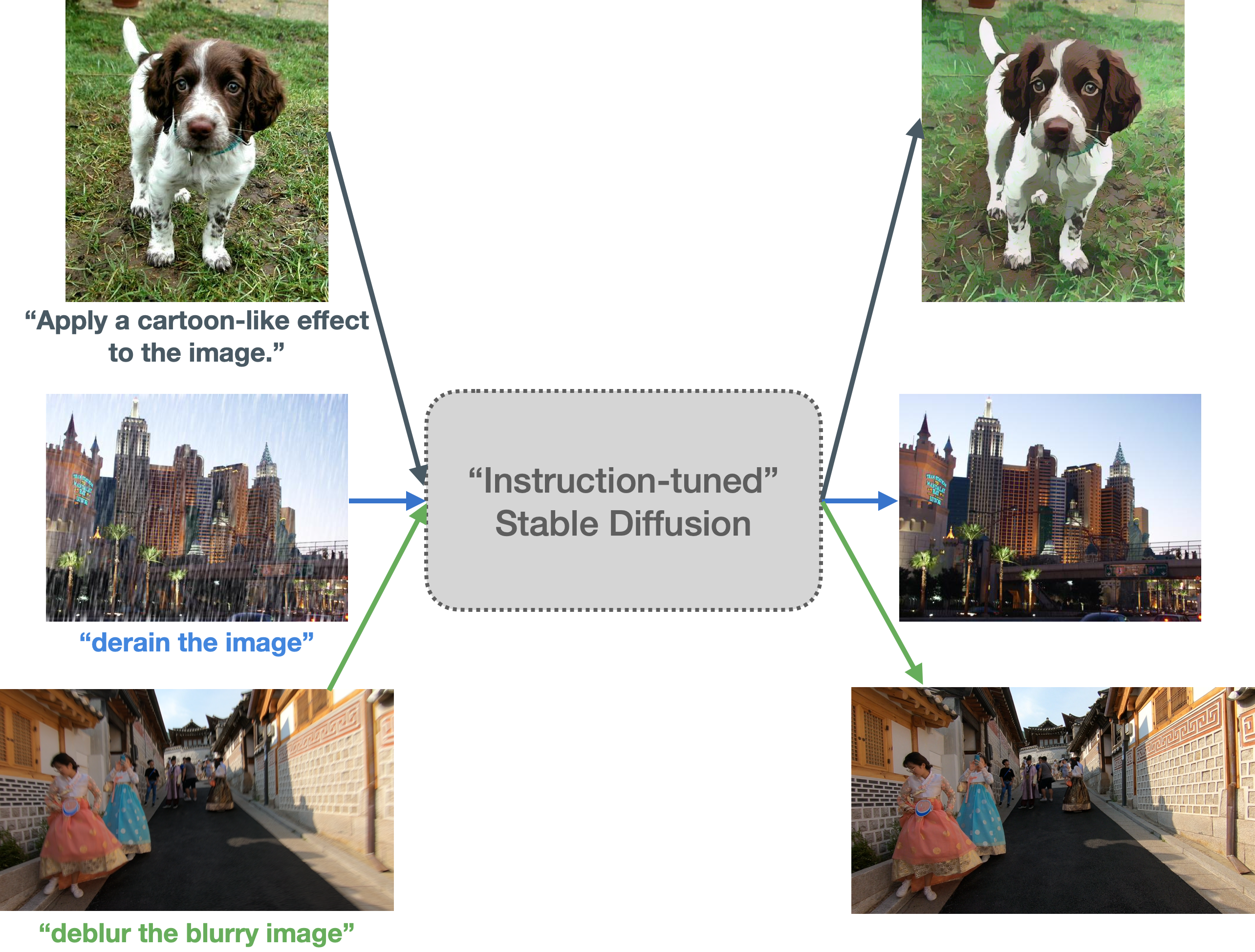

The motivation behind this pipeline comes partly from FLAN and partly from InstructPix2Pix. The main idea is to first create an instruction-prompted dataset (as described in our blog) and then conduct InstructPix2Pix-style training. The end objective is to make Stable Diffusion better at following specific instructions that involve image-transformation operations.

See the blog post (https://huggingface.co/blog/instruction-tuning-sd) to learn more.
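To make the idea of an instruction-prompted dataset concrete, here is a minimal sketch of how such examples could be assembled: each record pairs an original image, a text instruction, and the transformed image. The instruction strings, the field names (`original_image`, `edit_prompt`, `cartoonized_image`), and the `build_examples` helper are assumptions for illustration and may not match how the actual dataset was built.

```python
import random

# Hypothetical instruction variants; the real dataset's prompts may differ.
INSTRUCTIONS = [
    "Cartoonize the following image",
    "Turn this picture into a cartoon",
    "Convert the image to a cartoon style",
]


def build_examples(image_pairs, instructions=INSTRUCTIONS, seed=0):
    """Pair each (original, cartoonized) image with a sampled instruction.

    `image_pairs` is an iterable of (original, cartoonized) items, for
    example file paths. Field names here are illustrative assumptions.
    """
    rng = random.Random(seed)  # fixed seed keeps the sampling reproducible
    return [
        {
            "original_image": original,
            "edit_prompt": rng.choice(instructions),
            "cartoonized_image": cartoonized,
        }
        for original, cartoonized in image_pairs
    ]
```

Sampling from several phrasings of the same instruction, rather than using one fixed prompt, is one way to expose the model to varied wording during InstructPix2Pix-style training.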

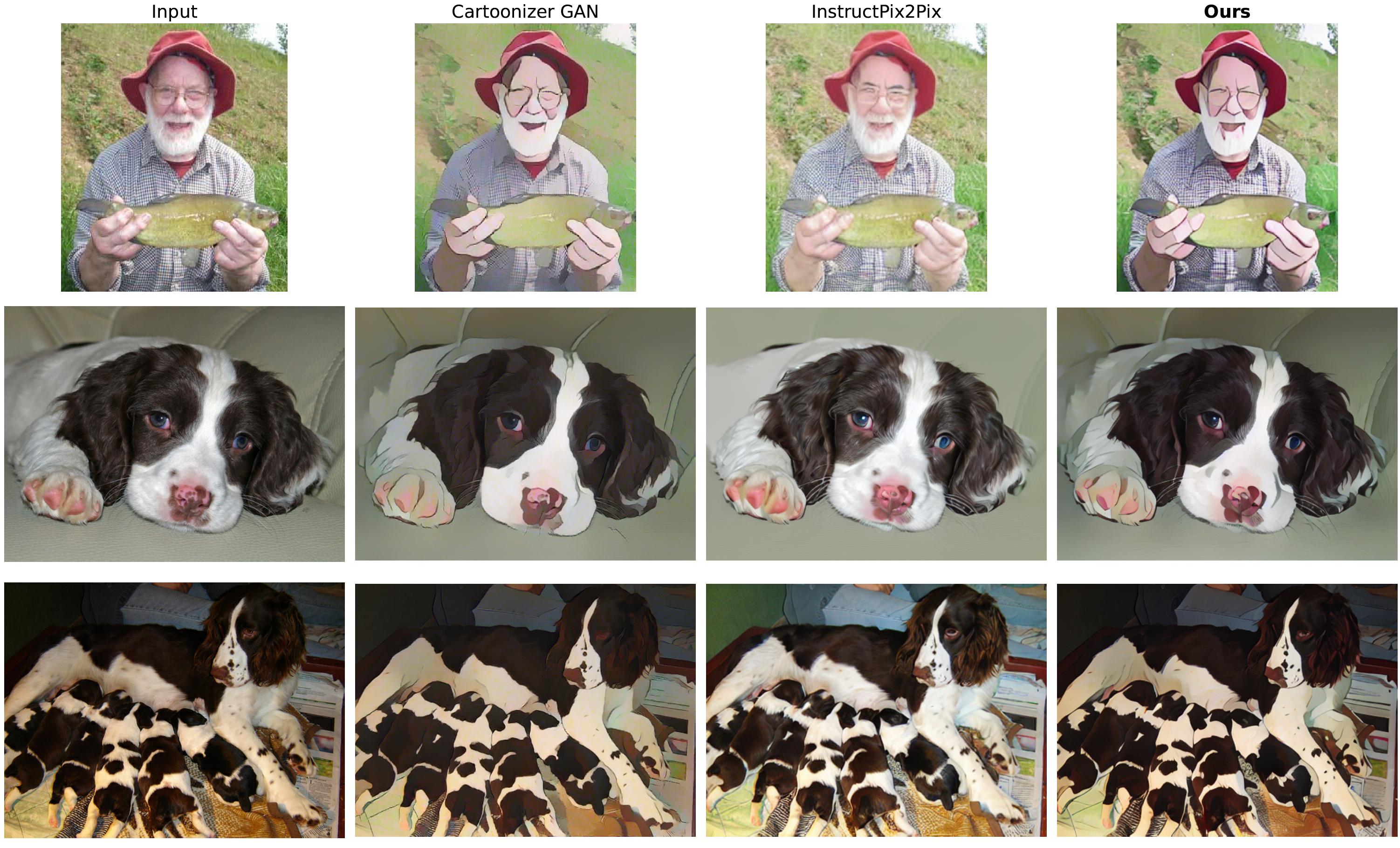

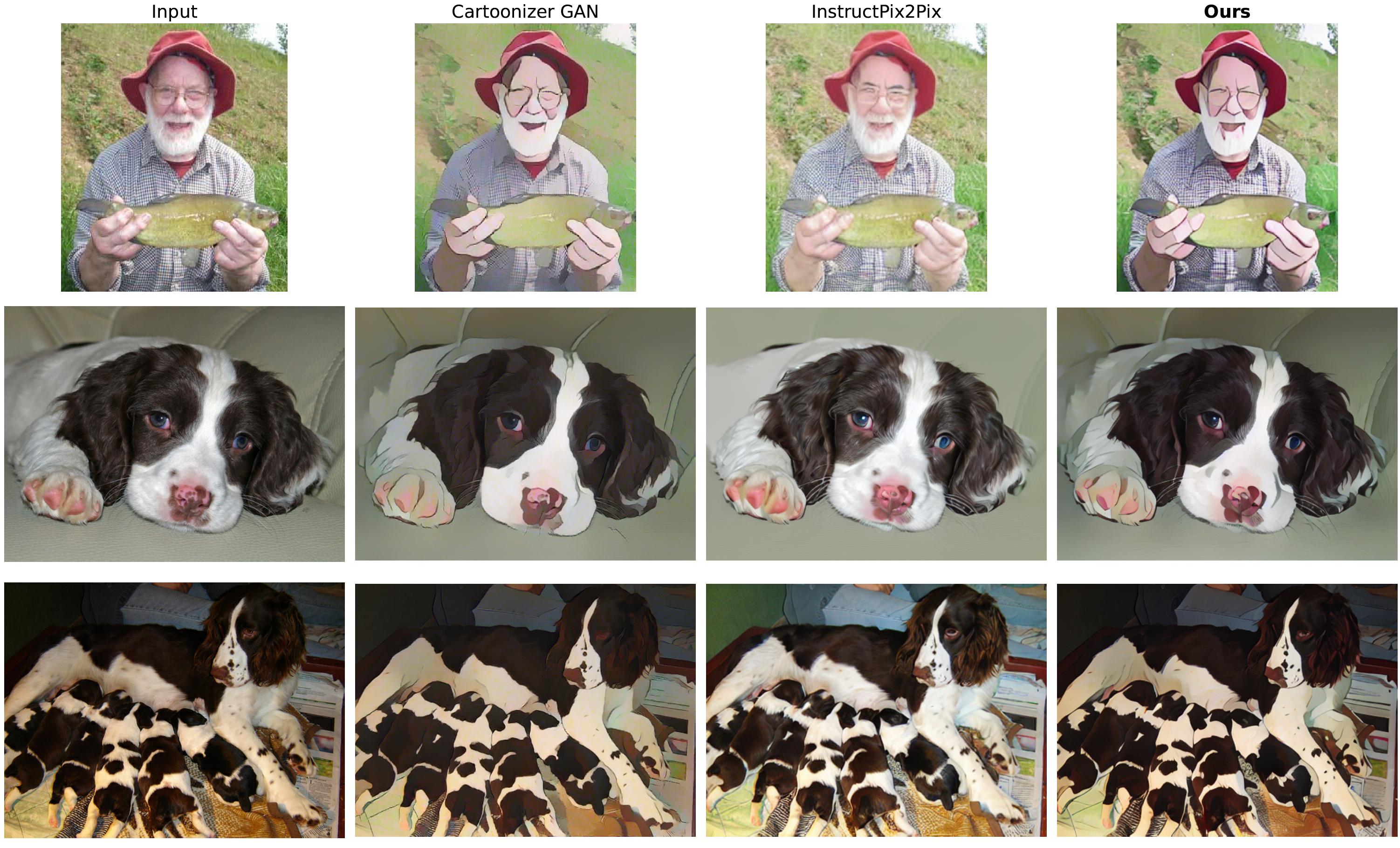

Training procedure and results

Training was conducted on the instruction-tuning-sd/cartoonization dataset. Refer to this repository to learn more. The training logs can be found here.

Here are some results derived from the pipeline:

Intended uses & limitations

You can use the pipeline to cartoonize an input image, guided by an input prompt. For notes on limitations, misuse, malicious use, and out-of-scope use, please refer to the model card here.
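Because the pipeline is conditioned on both an image and a prompt, it helps to see how the two signals are balanced at inference time. The sketch below follows the two-scale classifier-free guidance formulation from the InstructPix2Pix paper, written over plain Python lists for readability. The function name and the list-based representation are assumptions for illustration; the real pipeline operates on tensors, and this model's best scale values are not documented here.

```python
def combine_guidance(
    eps_uncond,                 # prediction with no image and no text conditioning
    eps_image,                  # prediction conditioned on the input image only
    eps_full,                   # prediction conditioned on image and text
    guidance_scale=7.5,         # weight on the text instruction
    image_guidance_scale=1.5,   # weight on the input image
):
    """Combine three noise predictions with separate image and text scales."""
    return [
        u + image_guidance_scale * (i - u) + guidance_scale * (f - i)
        for u, i, f in zip(eps_uncond, eps_image, eps_full)
    ]
```

Raising `image_guidance_scale` pulls the result toward the input image, while raising `guidance_scale` pushes it toward the instruction. With both scales set to 1, the expression reduces to the fully conditioned prediction.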

📄 License

This project is licensed under the MIT license.

📝 Citation

FLAN

```bibtex
@inproceedings{wei2022finetuned,
  title={Finetuned Language Models are Zero-Shot Learners},
  author={Jason Wei and Maarten Bosma and Vincent Zhao and Kelvin Guu and Adams Wei Yu and Brian Lester and Nan Du and Andrew M. Dai and Quoc V Le},
  booktitle={International Conference on Learning Representations},
  year={2022},
  url={https://openreview.net/forum?id=gEZrGCozdqR}
}
```

InstructPix2Pix

```bibtex
@InProceedings{brooks2022instructpix2pix,
  author = {Brooks, Tim and Holynski, Aleksander and Efros, Alexei A.},
  title = {InstructPix2Pix: Learning to Follow Image Editing Instructions},
  booktitle = {CVPR},
  year = {2023},
}
```

Instruction-tuning for Stable Diffusion blog

```bibtex
@article{Paul2023instruction-tuning-sd,
  author = {Paul, Sayak},
  title = {Instruction-tuning Stable Diffusion with InstructPix2Pix},
  journal = {Hugging Face Blog},
  year = {2023},
  note = {https://huggingface.co/blog/instruction-tuning-sd},
}
```