🚀 SMILES-based State-Space Encoder-Decoder (SMI-SSED) - MoLMamba

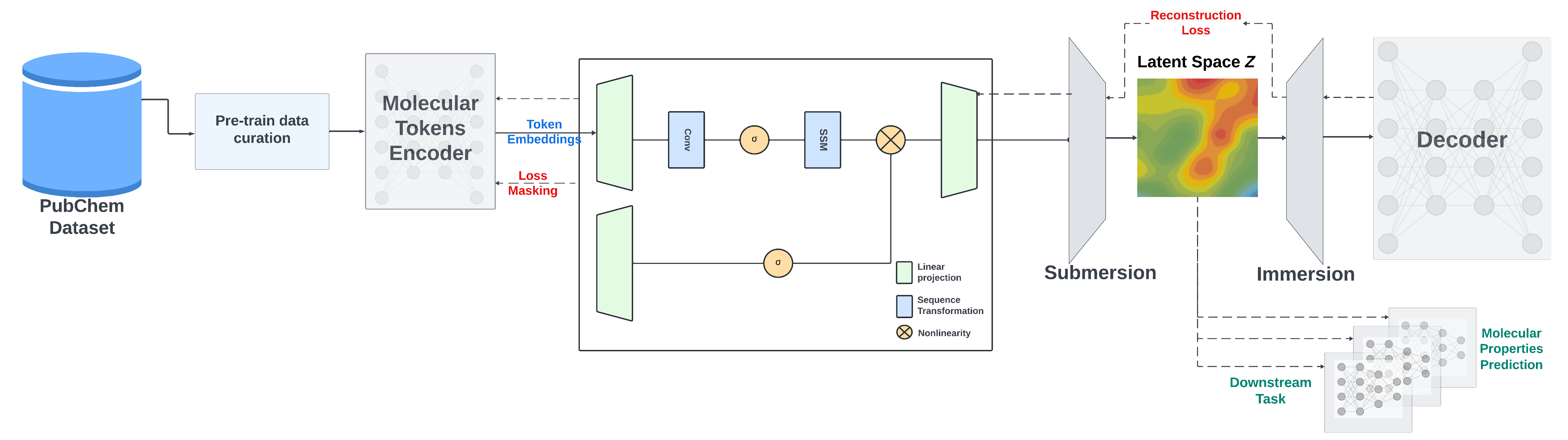

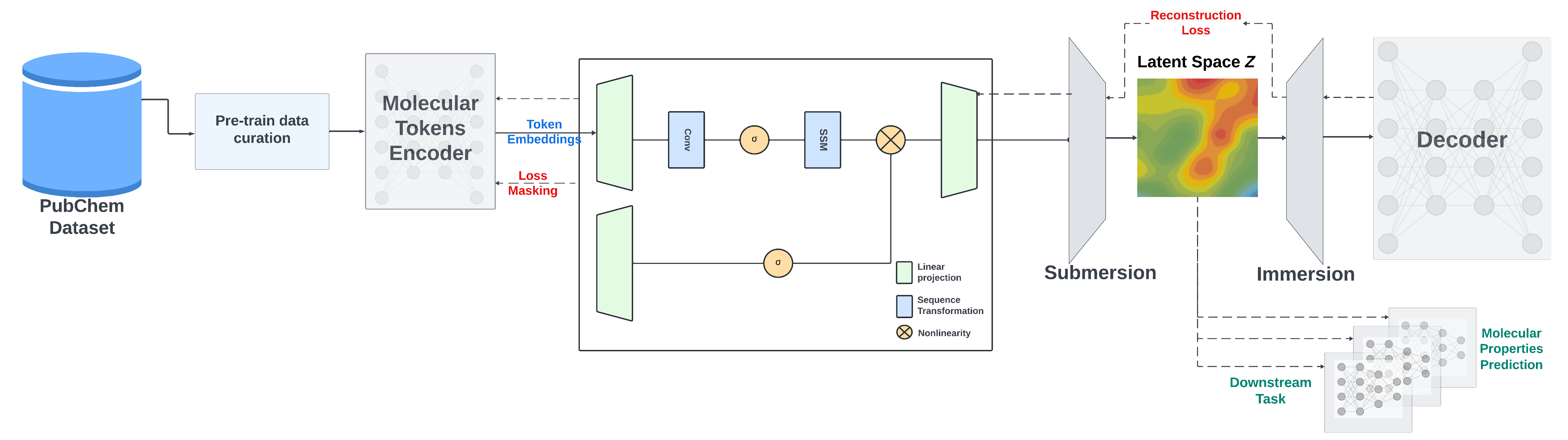

This repository provides the PyTorch source code for our publication, "A Mamba-Based Foundation Model for Chemistry". It presents a Mamba-based encoder-decoder chemical foundation model that handles a range of complex chemical tasks, and it includes model weights in multiple formats along with detailed instructions for getting started, pretraining, finetuning, and feature extraction.

Paper NeurIPS AI4Mat 2024: Arxiv Link

For more information, contact: eduardo.soares@ibm.com or evital@br.ibm.com.

✨ Features

- Powerful Model: A Mamba-based encoder-decoder chemical foundation model, pre-trained on a large dataset of 91 million SMILES samples.

- Task Support: Supports various complex tasks, including quantum property prediction, with two main variants ($336M$ and $8 \times 336M$).

- Multiple Model Formats: Model weights are provided in PyTorch (.pt) and safetensors (.bin) formats.

- Comprehensive Documentation: Detailed instructions for getting started, pretraining, finetuning, and feature extraction are provided.

🚀 Quick Start

This code and environment have been tested on NVIDIA V100 and NVIDIA A100 GPUs.

📦 Installation

Pretrained Models and Training Logs

We offer checkpoints of the SMI-SSED model pre-trained on a dataset of ~91M molecules from PubChem. The pre-trained model shows good performance on classification and regression benchmarks from MoleculeNet.

Add the SMI-SSED pre-trained weights (.pt file) to the inference/ or finetune/ directory as needed. The directory structure should be as follows:

    inference/
    ├── smi_ssed
    │   ├── smi_ssed.pt
    │   ├── bert_vocab_curated.txt
    │   └── load.py

and/or:

    finetune/
    ├── smi_ssed
    │   ├── smi_ssed.pt
    │   ├── bert_vocab_curated.txt
    │   └── load.py
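Before loading the model, it can help to confirm that the files listed above are in place. The following is a minimal sketch using only the standard library. The helper name `missing_files` is hypothetical and not part of the repository; the file names are taken from the layout shown above.

```python
from pathlib import Path

# File names taken from the directory layout above.
EXPECTED_FILES = ("smi_ssed.pt", "bert_vocab_curated.txt", "load.py")


def missing_files(folder, expected=EXPECTED_FILES):
    """Return the expected file names that are absent from `folder`.

    Hypothetical helper for illustration; not part of the repository.
    """
    root = Path(folder)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    # Paths are relative to the repository root (an assumption).
    for base in ("inference", "finetune"):
        missing = missing_files(Path(base) / "smi_ssed")
        status = "ok" if not missing else "missing: " + ", ".join(missing)
        print(f"{base}/smi_ssed -> {status}")
```

An empty list means all three files were found in that folder.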

Replicating Conda Environment

Follow these steps to replicate our Conda environment and install the necessary libraries:

Create and Activate Conda Environment

conda create --name smi-ssed-env python=3.10

conda activate smi-ssed-env

Install Packages with Conda

conda install pytorch=2.1.0 pytorch-cuda=11.8 -c pytorch -c nvidia

Install Packages with Pip

pip install -r requirements.txt
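After installation, a quick sanity check of the environment may save debugging time later. The sketch below only tests whether the interpreter version and a list of top-level packages can be found; it does not verify package versions or CUDA availability. The function name `check_environment` and the default package list are assumptions made for illustration.

```python
import importlib.util
import sys


def check_environment(packages=("torch",), min_python=(3, 10)):
    """Report whether the interpreter and packages look as expected.

    Uses find_spec so that nothing heavy is imported just to check for it.
    Pass top-level package names only (for example "torch", not "torch.nn").
    """
    report = {"python_ok": sys.version_info[:2] >= tuple(min_python)}
    for name in packages:
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, ok in check_environment().items():
        print(f"{key}: {'ok' if ok else 'problem'}")
```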

📚 Documentation

Pretraining

For pretraining, we use two strategies: masked language modeling to train the encoder, and an encoder-decoder strategy to refine SMILES reconstruction and improve the generated latent space.
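To make the masked language modeling idea concrete, here is a minimal sketch of the masking step on a token list. It is not the repository's implementation: the `<mask>` symbol, the 15% masking probability, and the function name `mask_tokens` are all illustrative assumptions, and the actual training code may use a different scheme.

```python
import random

MASK_TOKEN = "<mask>"  # assumed placeholder; the real vocabulary may differ


def mask_tokens(tokens, mask_prob=0.15, rng=None):
    """Randomly replace tokens with MASK_TOKEN.

    Returns (masked, labels): labels[i] holds the original token where a
    mask was applied and None elsewhere, so a loss would only be computed
    at masked positions. mask_prob=0.15 is an illustrative default, not a
    value taken from the paper.
    """
    if rng is None:
        rng = random.Random()
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


if __name__ == "__main__":
    tokens = ["C", "C", "(", "=", "O", ")", "O"]  # acetic acid, CC(=O)O
    masked, labels = mask_tokens(tokens, rng=random.Random(0))
    print(masked)
    print(labels)
```

The encoder would then be trained to predict the entries of `labels` that are not `None` from the `masked` sequence.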

SMI-SSED is pre-trained on 91M canonicalized and curated SMILES from PubChem with the following constraints:

- Compounds are filtered to a maximum length of 202 tokens during preprocessing.

- A 95/5/0 split is used for encoder training, with 5% of the data for decoder pretraining.

- A 100/0/0 split is also used to train the encoder and decoder directly, enhancing model performance.
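The length filter and split described above can be sketched as follows. This is an illustration under stated assumptions rather than the repository's preprocessing code: the regular expression is a commonly used SMILES tokenization pattern and may not match the tokenizer shipped with the model, the three returned parts are left unnamed because their exact roles are not spelled out here, and `filter_and_split` is a hypothetical helper.

```python
import random
import re

# Commonly used regex for SMILES tokenization; assumed here for illustration,
# the repository's own tokenizer may split strings differently.
SMILES_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)
MAX_TOKENS = 202  # maximum length stated in the constraints above


def tokenize(smiles):
    """Split a SMILES string into a list of tokens."""
    return SMILES_PATTERN.findall(smiles)


def filter_and_split(smiles_list, fractions=(0.95, 0.05, 0.0), seed=0):
    """Drop over-long molecules, shuffle, and cut into three parts.

    The third part receives whatever remains after the first two, so the
    parts never overlap and together cover every kept molecule.
    """
    kept = [s for s in smiles_list if len(tokenize(s)) <= MAX_TOKENS]
    random.Random(seed).shuffle(kept)
    n_first = round(len(kept) * fractions[0])
    n_second = round(len(kept) * fractions[1])
    first = kept[:n_first]
    second = kept[n_first:n_first + n_second]
    third = kept[n_first + n_second:]
    return first, second, third


if __name__ == "__main__":
    print(tokenize("CC(=O)O"))
    parts = filter_and_split(["CCO"] * 100)
    print([len(p) for p in parts])
```

Passing `fractions=(1.0, 0.0, 0.0)` corresponds to the 100/0/0 setting, where all kept molecules land in the first part.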

The pretraining code provides examples of data processing and model training on a smaller dataset, requiring 8 A100 GPUs.

To pre-train the SMI-SSED model, run:

bash training/run_model_training.sh

Use train_model_D.py to train only the decoder or train_model_ED.py to train both the encoder and decoder.

Finetuning

The finetuning datasets and environment can be found in the finetune directory. After setting up the environment, you can run a finetuning task with:

bash finetune/smi_ssed/esol/run_finetune_esol.sh

Finetuning training/checkpointing resources will be available in directories named checkpoint_<measure_name>.
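To locate these output directories after a run, a small standard-library helper can be enough. The sketch below assumes the directories are created directly under some root folder that you pass in; where the finetuning script actually writes them is not stated here, and `find_checkpoint_dirs` is a hypothetical name.

```python
from pathlib import Path

PREFIX = "checkpoint_"


def find_checkpoint_dirs(root="."):
    """Map each measure name to its checkpoint_<measure_name> directory.

    Only direct children of `root` are inspected; plain files are ignored.
    """
    found = {}
    for path in sorted(Path(root).glob(PREFIX + "*")):
        if path.is_dir():
            found[path.name[len(PREFIX):]] = path
    return found


if __name__ == "__main__":
    for measure, path in find_checkpoint_dirs().items():
        print(f"{measure}: {path}")
```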

Feature Extraction

The example notebook smi_ssed_encoder_decoder_example.ipynb contains code to load checkpoint files and use the pre-trained model for encoder and decoder tasks. It also includes examples of classification and regression tasks. Model weights are available here: [HuggingFace Link](https://huggingface.co/ibm/materials.smi-ted)

💻 Usage Examples

Basic Usage

To load SMI-SSED, use:

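    # Assumed import path: load.py sits in the smi_ssed folder shown in the
    # layout above, and the inference/ directory is on the Python path.
    from smi_ssed.load import load_smi_ssed

    model = load_smi_ssed(
        folder='../inference/smi_ssed',
        ckpt_filename='smi_ssed.pt'
    )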

Advanced Usage

To encode SMILES into embeddings, you can use:

    import torch

    # df is a pandas DataFrame with a 'SMILES' column.
    with torch.no_grad():
        encoded_embeddings = model.encode(df['SMILES'], return_torch=True)

To decode embeddings back into SMILES strings, use:

    with torch.no_grad():
        decoded_smiles = model.decode(encoded_embeddings)
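One simple way to gauge the encode/decode round trip is to count how often the decoded string equals the input. The sketch below assumes that `model.decode` returns one SMILES string per input row, which is not confirmed here, and `exact_match_rate` is a hypothetical helper. Plain string comparison is only meaningful when both sides use the same canonical form; chemically equivalent SMILES written differently would count as mismatches.

```python
def exact_match_rate(original, decoded):
    """Fraction of positions where the decoded string equals the input.

    Comparison is on raw strings (surrounding whitespace ignored), so both
    sequences should already be canonicalized the same way.
    """
    original, decoded = list(original), list(decoded)
    if len(original) != len(decoded):
        raise ValueError("inputs must have the same length")
    if not original:
        return 0.0
    hits = sum(a.strip() == b.strip() for a, b in zip(original, decoded))
    return hits / len(original)


if __name__ == "__main__":
    inputs = ["CCO", "c1ccccc1"]
    outputs = ["CCO", "C1=CC=CC=C1"]  # same molecule, different notation
    print(exact_match_rate(inputs, outputs))
```

With the variables from the snippets above, this could be called as `exact_match_rate(df['SMILES'], decoded_smiles)`.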

📄 License

This project is licensed under the Apache-2.0 license.

| Property | Details |
|---|---|
| Model Type | SMILES-based State-Space Encoder-Decoder (SMI-SSED) |
| Training Data | 91 million SMILES samples from PubChem |
| Library Name | transformers |
| Metrics | accuracy |
| Pipeline Tag | feature-extraction |
| Tags | chemistry, foundation models, AI4Science, materials, molecules, safetensors, pytorch, transformer, diffusers |