🚀 DISTIL-ITA-LEGAL-BERT

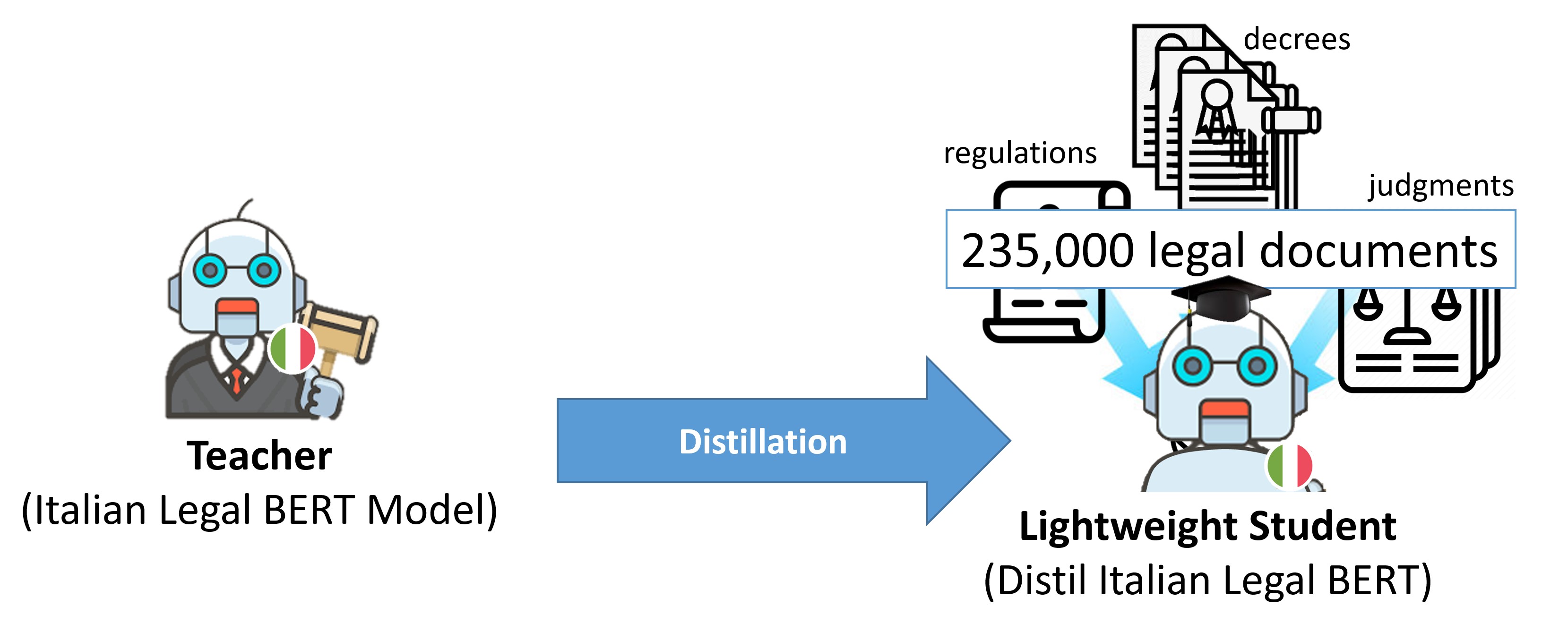

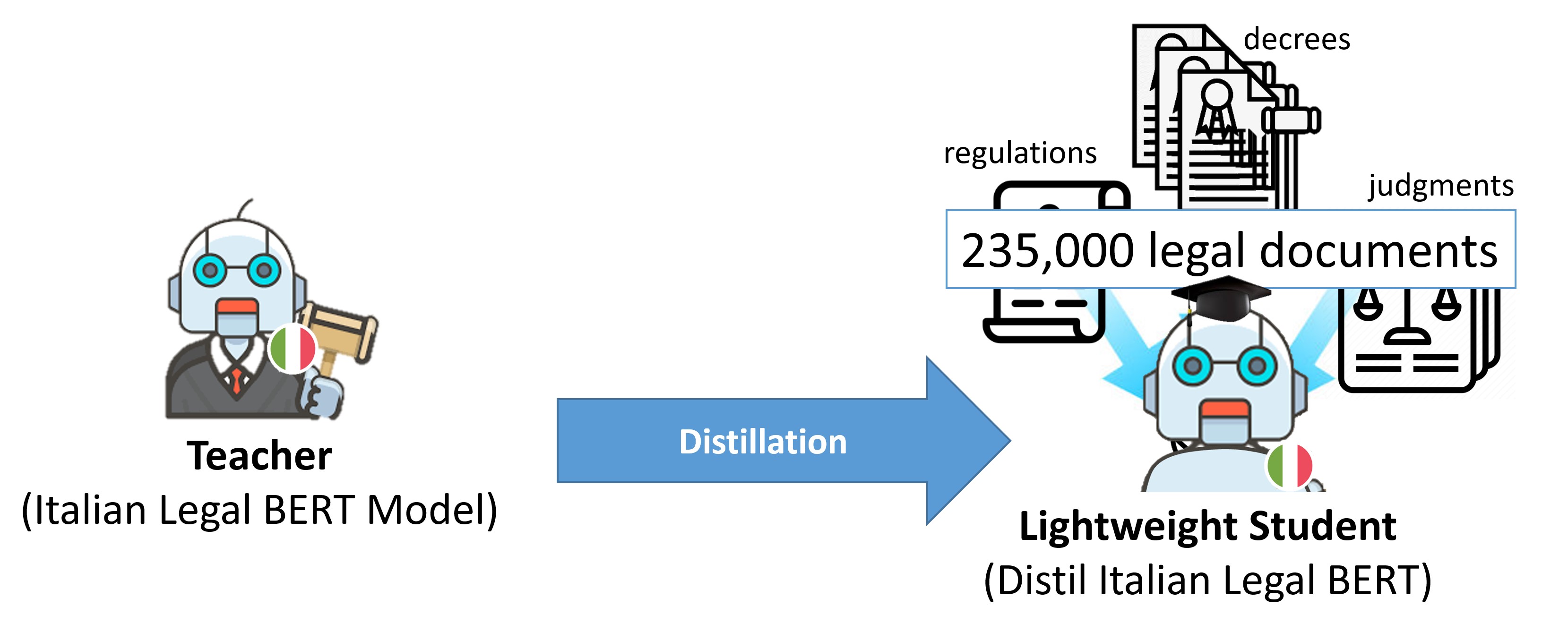

A fast and lightweight student model created through knowledge distillation, capable of generating sentence embeddings similar to the more complex ITALIAN-LEGAL-BERT teacher model.

🚀 Quick Start

We employed the knowledge distillation process to develop a swift and lightweight student model with only 4-levels of Transformers. This model can generate sentence embeddings comparable to those of the more intricate ITALIAN-LEGAL-BERT teacher model. It was optimized on the ITALIAN-LEGAL-BERT train set (3.7 GB) using the Sentence-BERT library by minimizing the mean square error (MSE) between its embeddings and those of the teacher model.

This is a sentence-transformers model that maps sentences and paragraphs to a 768-dimensional dense vector space. It can be utilized for tasks such as clustering or semantic search.

✨ Features

- Knowledge Distillation: Created a fast and lightweight model using knowledge distillation.

- Similar Embeddings: Produces sentence embeddings similar to the ITALIAN-LEGAL-BERT teacher model.

- Versatile Use: Can be used for clustering or semantic search tasks.

📦 Installation

Using this model is straightforward when you have sentence-transformers installed. Install it with the following command:

pip install -U sentence-transformers

💻 Usage Examples

Basic Usage

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('dlicari/distil-ita-legal-bert')

embeddings = model.encode(sentences)

print(embeddings)

Advanced Usage

Without sentence-transformers, you can use the model as follows. First, pass your input through the transformer model, then apply the appropriate pooling operation on top of the contextualized word embeddings.

from transformers import AutoTokenizer, AutoModel

import torch

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0]

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

sentences = ['This is an example sentence', 'Each sentence is converted']

tokenizer = AutoTokenizer.from_pretrained('dlicari/distil-ita-legal-bert')

model = AutoModel.from_pretrained('dlicari/distil-ita-legal-bert')

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

with torch.no_grad():

model_output = model(**encoded_input)

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

📚 Documentation

For an automated evaluation of this model, visit the Sentence Embeddings Benchmark: https://seb.sbert.net

🔧 Technical Details

Training

The model was trained with the following parameters:

DataLoader:

torch.utils.data.dataloader.DataLoader of length 409633 with parameters:

{'batch_size': 24, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

Loss:

sentence_transformers.losses.MSELoss.MSELoss

Parameters of the fit()-Method:

{

"epochs": 4,

"evaluation_steps": 5000,

"evaluator": "sentence_transformers.evaluation.SequentialEvaluator.SequentialEvaluator",

"max_grad_norm": 1,

"optimizer_class": "<class 'transformers.optimization.AdamW'>",

"optimizer_params": {

"correct_bias": false,

"eps": 1e-06,

"lr": 0.0001

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 1000,

"weight_decay": 0.01

}

Full Model Architecture

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

📄 License

This project is licensed under the afl-3.0 license.