🚀 Aero-1-Audio

Aero-1-Audio is a compact audio model that excels at a range of audio tasks, including speech recognition, audio understanding, and following audio instructions. It performs strongly across multiple audio benchmarks while remaining parameter-efficient, even when compared with larger advanced models and commercial services.

- Developed by: LMMs-Lab

- Model type: LLM + Audio Encoder

- Language(s) (NLP): English

- License: MIT

🚀 Quick Start

📦 Installation

We recommend installing transformers with the following command, since this is the transformers version used to build this model:

python3 -m pip install transformers@git+https://github.com/huggingface/transformers@v4.51.3-Qwen2.5-Omni-preview
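To confirm which transformers build is actually active in your environment before loading the model, a small check like the one below may help. It is a minimal sketch that uses only the Python standard library; `installed_version` is a hypothetical helper name of ours, not part of transformers, and this document does not state what version string the preview tag reports.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Hypothetical usage: report whatever version pip installed, or a hint.
found = installed_version("transformers")
print(found if found is not None else "transformers is not installed")
```

If the printed version is not the one you expect, rerunning the install command in the same Python environment is usually the first thing to try.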

💻 Usage Examples

🔍 Basic Usage

Use the code below to get started with the model:

from transformers import AutoProcessor, AutoModelForCausalLM
import torch
import librosa


def load_audio():
    # Load a bundled librosa example clip, resampled to 16 kHz
    return librosa.load(librosa.ex("libri1"), sr=16000)[0]


processor = AutoProcessor.from_pretrained("lmms-lab/Aero-1-Audio-1.5B", trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained("lmms-lab/Aero-1-Audio-1.5B", device_map="cuda", torch_dtype="auto", attn_implementation="flash_attention_2", trust_remote_code=True)
model.eval()

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "audio_url",
                "audio": "placeholder",  # the waveform itself is passed via `audios` below
            },
            {
                "type": "text",
                "text": "Please transcribe the audio",
            }
        ]
    }
]

audios = [load_audio()]

prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs = processor(text=prompt, audios=audios, sampling_rate=16000, return_tensors="pt")
inputs = {k: v.to("cuda") for k, v in inputs.items()}
outputs = model.generate(**inputs, eos_token_id=151645, max_new_tokens=4096)

# Keep only the newly generated tokens by dropping the prompt tokens
cont = outputs[:, inputs["input_ids"].shape[-1] :]
print(processor.batch_decode(cont, skip_special_tokens=True)[0])
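The loading call above requests `flash_attention_2`, which in transformers depends on the separate `flash-attn` package. On machines where that package is not installed, one option is to choose the attention backend at runtime. The following is a sketch rather than the authors' method: `pick_attn_implementation` is a hypothetical helper, and whether this model's remote code behaves correctly with the `sdpa` fallback is an assumption you should verify.

```python
import importlib.util


def pick_attn_implementation(preferred: str = "flash_attention_2",
                             fallback: str = "sdpa") -> str:
    """Return `preferred` if the flash_attn package is importable, else `fallback`."""
    has_flash_attn = importlib.util.find_spec("flash_attn") is not None
    return preferred if has_flash_attn else fallback


# Hypothetical usage: the result would be passed as attn_implementation=...
attn = pick_attn_implementation()
print(attn)
```

The returned string can then be supplied as the `attn_implementation` argument of `from_pretrained` in place of the hard-coded value.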

⚙️ Advanced Usage

The model supports batch inference with transformers, as in the following example:

from transformers import AutoProcessor, AutoModelForCausalLM
import torch
import librosa


def load_audio():
    # First bundled librosa example clip, resampled to 16 kHz
    return librosa.load(librosa.ex("libri1"), sr=16000)[0]


def load_audio_2():
    # Second bundled librosa example clip, resampled to 16 kHz
    return librosa.load(librosa.ex("libri2"), sr=16000)[0]


processor = AutoProcessor.from_pretrained("lmms-lab/Aero-1-Audio-1.5B", trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained("lmms-lab/Aero-1-Audio-1.5B", device_map="cuda", torch_dtype="auto", attn_implementation="flash_attention_2", trust_remote_code=True)
model.eval()

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "audio_url",
                "audio": "placeholder",  # the waveforms are passed via `audios` below
            },
            {
                "type": "text",
                "text": "Please transcribe the audio",
            }
        ]
    }
]

# One conversation per audio clip
messages = [messages, messages]
audios = [load_audio(), load_audio_2()]

# Pad on the left so every prompt ends at the same position
processor.tokenizer.padding_side = "left"

prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs = processor(text=prompt, audios=audios, sampling_rate=16000, return_tensors="pt", padding=True)
inputs = {k: v.to("cuda") for k, v in inputs.items()}
outputs = model.generate(**inputs, eos_token_id=151645, pad_token_id=151643, max_new_tokens=4096)

# Keep only the newly generated tokens by dropping the padded prompts
cont = outputs[:, inputs["input_ids"].shape[-1] :]
print(processor.batch_decode(cont, skip_special_tokens=True))
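The batch example sets the tokenizer's padding side to left and then slices every output row at the same offset. The toy sketch below uses plain Python lists instead of the real processor to illustrate why these two steps go together: with left padding, every prompt ends at the same column, so a single slice removes all of them. The token ids and the `left_pad` helper are made up for illustration; only the pad id 151643 is taken from the example.

```python
PAD_ID = 151643  # pad token id used in the batch example


def left_pad(sequences, pad_id=PAD_ID):
    """Pad each list of token ids on the left to the length of the longest one."""
    width = max(len(seq) for seq in sequences)
    return [[pad_id] * (width - len(seq)) + list(seq) for seq in sequences]


# Two made-up prompts of different lengths.
prompts = [[11, 12, 13], [21]]
padded = left_pad(prompts)

# Pretend generation appended two new tokens to each row.
generated = [row + new for row, new in zip(padded, [[901, 902], [903, 904]])]

# Every padded prompt has the same width, so one slice strips them all.
prompt_width = len(padded[0])
continuations = [row[prompt_width:] for row in generated]
print(continuations)  # [[901, 902], [903, 904]]
```

With right padding, the pad tokens would sit between each shorter prompt and its continuation, and a fixed-offset slice would no longer isolate the new tokens cleanly.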

📚 Documentation

🔧 Technical Details

📊 Training Data

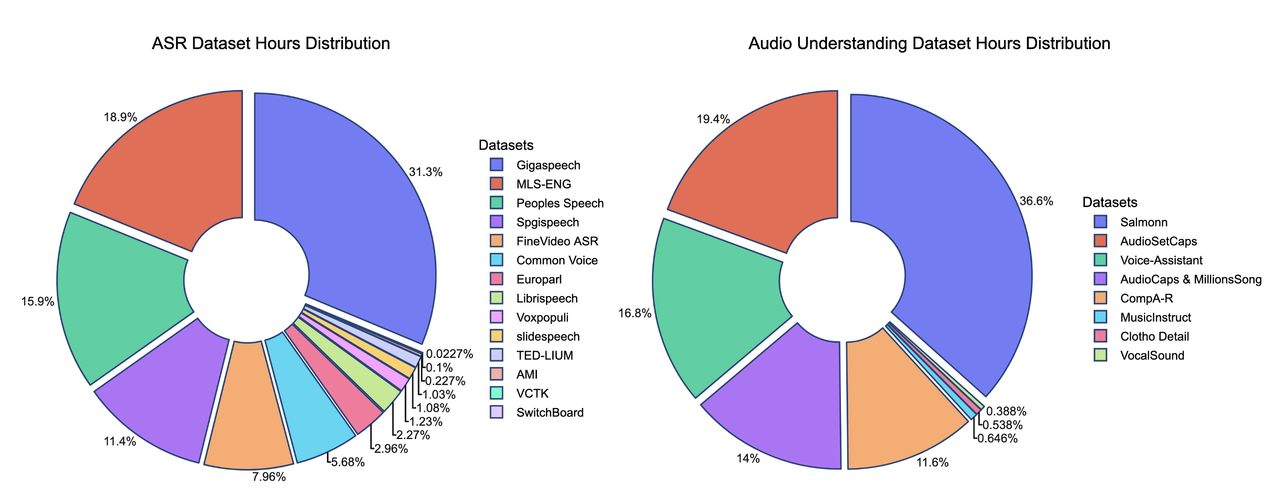

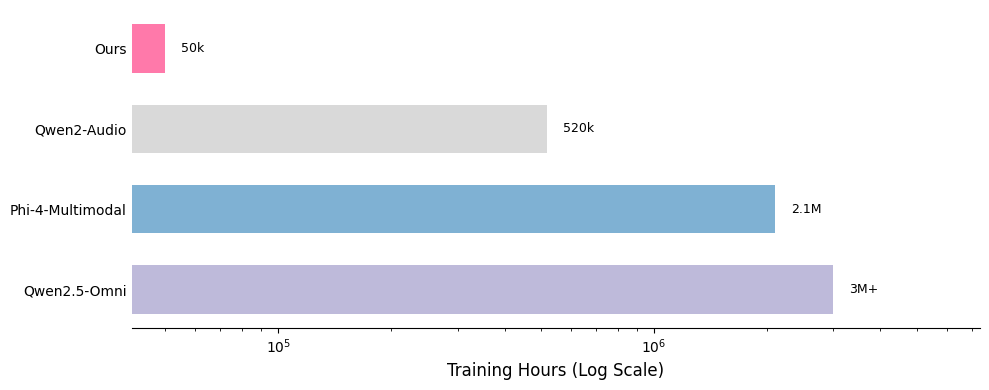

We present the contributions of our data mixture here. Our SFT data mixture includes over 20 publicly available datasets, and comparisons with other models highlight the data's lightweight nature.

Note: the hours for some training datasets are estimates and may not be fully accurate.

One of the key strengths of our training recipe lies in the quality and quantity of our data. Our training dataset consists of approximately 5 billion tokens, corresponding to around 50,000 hours of audio. Compared with models such as Qwen-Omni and Phi-4, our dataset is over 100 times smaller, yet our model achieves competitive performance. All data is sourced from publicly available open-source datasets, highlighting the sample efficiency of our training approach. The figures in this section give a detailed breakdown of our data distribution, along with comparisons to other models.
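As a quick sanity check on the totals quoted above, the two numbers imply an average token rate per unit of audio. The snippet below only divides the approximate figures from the text; the resulting rate is an implied average over all tokens in the total, not a number reported by the authors.

```python
# Approximate totals quoted in the text.
total_tokens = 5_000_000_000   # about 5 billion tokens
total_hours = 50_000           # about 50,000 hours of audio

tokens_per_hour = total_tokens / total_hours
tokens_per_second = tokens_per_hour / 3600

print(f"{tokens_per_hour:,.0f} tokens per hour")      # 100,000 tokens per hour
print(f"{tokens_per_second:.1f} tokens per second")   # 27.8 tokens per second
```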

📄 License

This model is licensed under the MIT License.