🚀 ControlNet - Normal Map Version

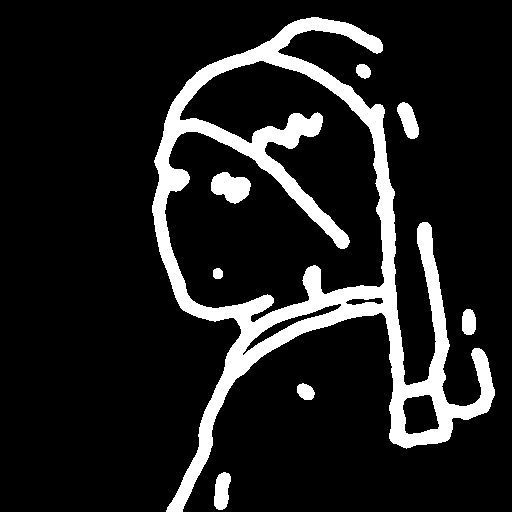

ControlNet is a neural network structure that controls diffusion models by adding extra conditions. This checkpoint is conditioned on normal map estimation and can be combined with Stable Diffusion so that generated images follow the surface orientation encoded in a normal map.

🚀 Quick Start

It is recommended to use this checkpoint with Stable Diffusion v1-5, the base model it was trained on. Experimentally, it can also be used with other diffusion models, such as DreamBooth fine-tuned Stable Diffusion.

- Install diffusers and related packages:

$ pip install diffusers transformers accelerate opencv-python

- Run the following code:

import os

import cv2
import numpy as np
import torch
from PIL import Image
from transformers import pipeline
from diffusers import ControlNetModel, StableDiffusionControlNetPipeline, UniPCMultistepScheduler
from diffusers.utils import load_image

# Load the example image and estimate its depth with MiDaS (DPT-Hybrid).
image = load_image("https://huggingface.co/lllyasviel/sd-controlnet-normal/resolve/main/images/toy.png").convert("RGB")
depth_estimator = pipeline("depth-estimation", model="Intel/dpt-hybrid-midas")
image = depth_estimator(image)["predicted_depth"][0]
image = image.numpy()

# Normalize a copy of the depth map to [0, 1]; it is only used to mask the background.
image_depth = image.copy()
image_depth -= np.min(image_depth)
image_depth /= np.max(image_depth)
bg_threshold = 0.4

# Depth gradients along x and y, zeroed where the normalized depth is below the threshold.
x = cv2.Sobel(image, cv2.CV_32F, 1, 0, ksize=3)
x[image_depth < bg_threshold] = 0
y = cv2.Sobel(image, cv2.CV_32F, 0, 1, ksize=3)
y[image_depth < bg_threshold] = 0
z = np.ones_like(x) * np.pi * 2.0

# Stack into per-pixel vectors, normalize to unit length, and map [-1, 1] to [0, 255].
image = np.stack([x, y, z], axis=2)
image /= np.sum(image ** 2.0, axis=2, keepdims=True) ** 0.5
image = (image * 127.5 + 127.5).clip(0, 255).astype(np.uint8)
image = Image.fromarray(image)

# Load the ControlNet checkpoint and attach it to the Stable Diffusion v1-5 pipeline.
controlnet = ControlNetModel.from_pretrained(
    "fusing/stable-diffusion-v1-5-controlnet-normal", torch_dtype=torch.float16
)
pipe = StableDiffusionControlNetPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None, torch_dtype=torch.float16
)
pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

# Optional: requires the xformers package; remove this line if it is not installed.
pipe.enable_xformers_memory_efficient_attention()
pipe.enable_model_cpu_offload()

image = pipe("cute toy", image, num_inference_steps=20).images[0]

# Create the output directory first so that save() does not fail.
os.makedirs("images", exist_ok=True)
image.save("images/toy_normal_out.png")
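The final preprocessing step stores each unit normal as an RGB pixel by mapping its components from [-1, 1] to [0, 255]. As a sanity check on a control image, that mapping can be inverted. The following is a minimal sketch that assumes only NumPy; the helper names `encode_normals` and `decode_normals` are hypothetical and are not part of diffusers.

```python
import numpy as np


def encode_normals(normals: np.ndarray) -> np.ndarray:
    """Map unit normals with components in [-1, 1] to uint8 RGB (same formula as the Quick Start code)."""
    return (normals * 127.5 + 127.5).clip(0, 255).astype(np.uint8)


def decode_normals(rgb: np.ndarray) -> np.ndarray:
    """Approximately invert encode_normals, then re-normalize to unit length."""
    normals = (rgb.astype(np.float32) - 127.5) / 127.5
    norm = np.linalg.norm(normals, axis=-1, keepdims=True)
    # Guard against division by zero for degenerate pixels.
    return normals / np.maximum(norm, 1e-8)


# A flat surface facing the camera has the normal (0, 0, 1) at every pixel.
flat = np.zeros((2, 2, 3), dtype=np.float32)
flat[..., 2] = 1.0

rgb = encode_normals(flat)       # every pixel becomes (127, 127, 255)
recovered = decode_normals(rgb)  # close to (0, 0, 1) up to 8-bit quantization
```

This is why flat, camera-facing regions of a normal map appear light blue-purple: they sit near RGB (127, 127, 255).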

✨ Features

- Extra Condition Control: ControlNet adds extra conditions to diffusion models, enabling more precise image generation.

- Multiple Checkpoints: There are 8 different checkpoints, each trained on a different type of conditioning, providing diverse generation capabilities.

📚 Documentation

Model Details

Introduction

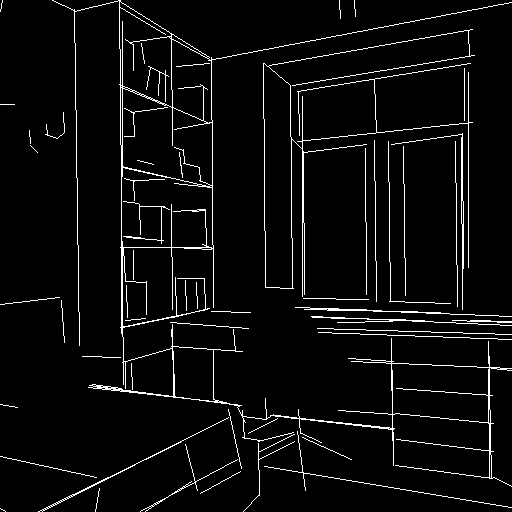

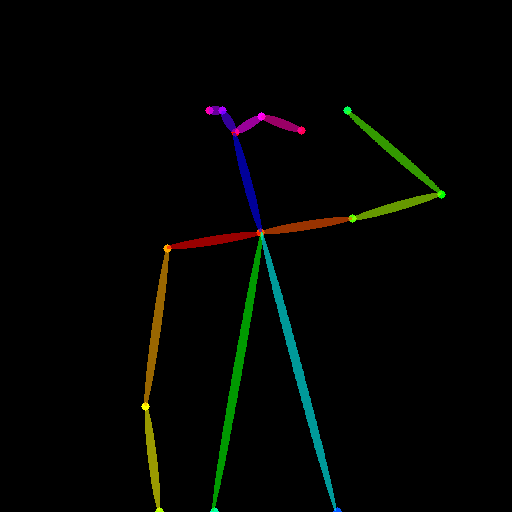

ControlNet was proposed in "Adding Conditional Control to Text-to-Image Diffusion Models" by Lvmin Zhang and Maneesh Agrawala. According to the abstract, the paper presents a neural network structure that controls pretrained large diffusion models to support additional input conditions. ControlNet learns task-specific conditions end to end, and the learning is robust even with a small training dataset. Training a ControlNet is as fast as fine-tuning a diffusion model, and it can be done on personal devices or scaled to large amounts of data on powerful computation clusters. It can augment large diffusion models such as Stable Diffusion with conditional inputs such as edge maps, segmentation maps, and keypoints.

Released Checkpoints

The authors released 8 different checkpoints, each trained with Stable Diffusion v1-5 on a different type of conditioning.

Training

The normal model was trained in two stages:

- Initial Training: The initial normal model was trained on 25,452 (normal map image, caption) pairs from DIODE. The image captions were generated by BLIP. The model was trained for 100 GPU-hours on an NVIDIA A100 80G, using Stable Diffusion 1.5 as the base model.

- Extended Training: The extended normal model continued training the initial normal model on "coarse" normal maps. The coarse normal maps were generated by using MiDaS to compute a depth map and then performing normal-from-distance. The model was trained for 200 GPU-hours on an NVIDIA A100 80G, using the initial normal model as the base model.
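The normal-from-distance step can be tried without any model by applying it to a synthetic depth map. The sketch below mirrors the Quick Start preprocessing, but swaps `cv2.Sobel` for NumPy central differences (`np.gradient`), so gradient magnitudes differ from the Sobel version by a constant factor. The function name `normals_from_depth` and its parameters are hypothetical, and whether training used exactly the constants from the Quick Start code is an assumption, not something the authors confirm here.

```python
import numpy as np


def normals_from_depth(depth, bg_threshold=0.4, z_scale=2.0 * np.pi):
    """Estimate unit normals of shape (H, W, 3) from a depth map of shape (H, W)."""
    depth = np.asarray(depth, dtype=np.float32)

    # Normalized copy in [0, 1], used only to decide which pixels are background.
    rel = depth - depth.min()
    peak = rel.max()
    if peak > 0:  # avoid 0/0 on a perfectly flat depth map
        rel = rel / peak

    # np.gradient returns the derivative along rows (y) first, then columns (x).
    # Assumption: central differences stand in for the Sobel filter.
    dy, dx = np.gradient(depth)
    background = rel < bg_threshold
    dx[background] = 0.0
    dy[background] = 0.0

    # Constant z component, as in the Quick Start code (2 * pi).
    dz = np.full_like(depth, z_scale)
    normals = np.stack([dx, dy, dz], axis=2)
    normals /= np.linalg.norm(normals, axis=2, keepdims=True)
    return normals


# Synthetic depth map: values increase linearly from left to right.
ramp = np.tile(np.linspace(0.0, 10.0, 8, dtype=np.float32), (8, 1))
normals = normals_from_depth(ramp)
flat_normals = normals_from_depth(np.ones((4, 4), dtype=np.float32))
```

Pixels whose normalized depth falls below `bg_threshold` keep the camera-facing normal (0, 0, 1), while the rest of the ramp tilts toward +x.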

Blog post

For more information, please also have a look at the official ControlNet Blog Post.

📄 License

This model is released under the CreativeML OpenRAIL-M license.