🚀 ControlNet - Human Pose Version

ControlNet is a neural network structure that controls diffusion models by adding extra conditions. This checkpoint is the ControlNet conditioned on human pose estimation (OpenPose skeleton images). Used together with Stable Diffusion, it generates images whose subjects follow a given pose.

📚 Documentation

Model Details

Introduction

ControlNet was introduced in "Adding Conditional Control to Text-to-Image Diffusion Models" by Lvmin Zhang and Maneesh Agrawala.

The abstract is as follows:

We present a neural network structure, ControlNet, to control pretrained large diffusion models to support additional input conditions. The ControlNet learns task-specific conditions in an end-to-end way, and the learning is robust even when the training dataset is small (< 50k). Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on a personal devices. Alternatively, if powerful computation clusters are available, the model can scale to large amounts (millions to billions) of data. We report that large diffusion models like Stable Diffusion can be augmented with ControlNets to enable conditional inputs like edge maps, segmentation maps, keypoints, etc. This may enrich the methods to control large diffusion models and further facilitate related applications.
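The abstract does not say how the control signal is wired in. In the paper, ControlNet makes a trainable copy of the pretrained network's encoder blocks, keeps the original weights frozen, and connects the copy through "zero convolutions": 1x1 convolutions whose weights and bias start at zero. Because these layers initially output zero, attaching a ControlNet leaves the pretrained model's output unchanged until training moves the zero-convolution weights, which the authors credit with protecting the backbone early in training. The pure-Python sketch below illustrates only that initialization property at a single pixel; the toy block and every function name are hypothetical stand-ins, not the reference implementation.

```python
from typing import Callable, List, Tuple

Vector = List[float]                            # channel values at one pixel
Params = Tuple[List[List[float]], List[float]]  # (weight matrix, bias)


def zero_conv_params(in_ch: int, out_ch: int) -> Params:
    """Parameters of a 1x1 convolution with weights and bias both set to zero."""
    return [[0.0] * in_ch for _ in range(out_ch)], [0.0] * out_ch


def conv1x1(x: Vector, params: Params) -> Vector:
    """At a single pixel, a 1x1 convolution is a matrix-vector product plus a bias."""
    weight, bias = params
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def controlled_block(x: Vector, cond: Vector,
                     frozen: Callable[[Vector], Vector],
                     trainable_copy: Callable[[Vector], Vector],
                     zc_in: Params, zc_out: Params) -> Vector:
    """y = frozen(x) + Z_out(trainable_copy(x + Z_in(cond)))."""
    # The condition enters the trainable copy through the first zero convolution.
    injected = [xi + ci for xi, ci in zip(x, conv1x1(cond, zc_in))]
    # The copy's output is gated by the second zero convolution before being added.
    control = conv1x1(trainable_copy(injected), zc_out)
    return [f + c for f, c in zip(frozen(x), control)]


# Toy stand-in for a pretrained block; the trainable copy starts as an exact clone.
def frozen_block(v: Vector) -> Vector:
    return [2.0 * t for t in v]


x, cond = [1.0, -1.0], [0.5, 0.5]
y = controlled_block(x, cond, frozen_block, frozen_block,
                     zero_conv_params(2, 2), zero_conv_params(2, 2))
print(y)  # [2.0, -2.0]: identical to frozen_block(x) before any training
```

The zero-initialized weights still receive non-zero gradients whenever their inputs are non-zero, so training can move them away from zero and let the condition take effect.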

Released Checkpoints

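The authors released 8 checkpoints, each trained with Stable Diffusion v1-5 on a different type of conditioning:

- lllyasviel/sd-controlnet-canny: trained with Canny edge detection
- lllyasviel/sd-controlnet-depth: trained with MiDaS depth estimation
- lllyasviel/sd-controlnet-hed: trained with HED soft-edge detection
- lllyasviel/sd-controlnet-mlsd: trained with M-LSD straight-line detection
- lllyasviel/sd-controlnet-normal: trained with normal maps
- lllyasviel/sd-controlnet-openpose: trained with OpenPose skeleton images (this checkpoint)
- lllyasviel/sd-controlnet-scribble: trained with human scribbles
- lllyasviel/sd-controlnet-seg: trained with semantic segmentation (ADE20K protocol)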

💻 Usage Examples

Basic Usage

It is recommended to use this checkpoint with Stable Diffusion v1-5, because that is the model it was trained with. Experimentally, it can also be used with other diffusion models, such as DreamBooth fine-tunes of Stable Diffusion.
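Trying another base model only changes the repository id passed to `StableDiffusionControlNetPipeline.from_pretrained`. A minimal sketch, assuming the alternative base shares the Stable Diffusion v1 architecture (for example a DreamBooth fine-tune of v1-5); Stable Diffusion 2.x models use a different text encoder and are not expected to work with this checkpoint. The helper name `load_pose_pipeline` is hypothetical.

```python
def load_pose_pipeline(base_model_id: str = "runwayml/stable-diffusion-v1-5"):
    """Attach the OpenPose ControlNet to a Stable Diffusion v1-family base model.

    Passing a DreamBooth fine-tune of SD v1-5 as base_model_id is the
    experimental use described above; output quality is not guaranteed.
    """
    # Imported lazily so this helper can be defined without torch/diffusers installed.
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    controlnet = ControlNetModel.from_pretrained(
        "lllyasviel/sd-controlnet-openpose", torch_dtype=torch.float16
    )
    # The ControlNet weights stay the same; only the base UNet/VAE/text encoder change.
    return StableDiffusionControlNetPipeline.from_pretrained(
        base_model_id, controlnet=controlnet, torch_dtype=torch.float16
    )
```

For example, `load_pose_pipeline("your-username/your-dreambooth-model")`, where the repository id is a placeholder for your own fine-tuned model.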

⚠️ Important Note

To turn an input image into the auxiliary conditioning (a pose skeleton), you need the following external dependencies:

- Install https://github.com/patrickvonplaten/controlnet_aux:

```sh
$ pip install controlnet_aux
```

- Install diffusers and related packages:

```sh
$ pip install diffusers transformers accelerate
```

- Run the following code:

from PIL import Image

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler

import torch

from controlnet_aux import OpenposeDetector

from diffusers.utils import load_image

openpose = OpenposeDetector.from_pretrained('lllyasviel/ControlNet')

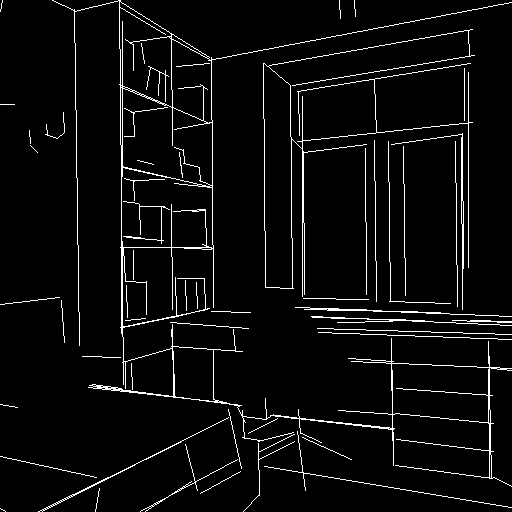

image = load_image("https://huggingface.co/lllyasviel/sd-controlnet-openpose/resolve/main/images/pose.png")

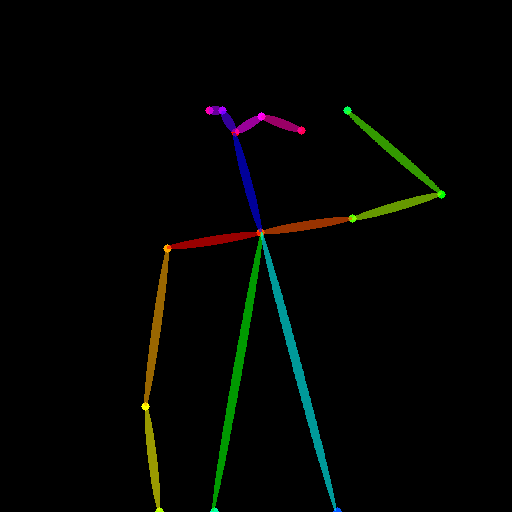

image = openpose(image)

controlnet = ControlNetModel.from_pretrained(

"lllyasviel/sd-controlnet-openpose", torch_dtype=torch.float16

)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, safety_checker=None, torch_dtype=torch.float16

)

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_xformers_memory_efficient_attention()

pipe.enable_model_cpu_offload()

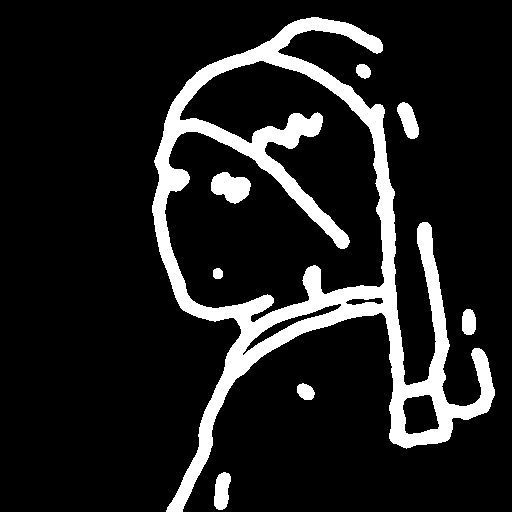

image = pipe("chef in the kitchen", image, num_inference_steps=20).images[0]

image.save('images/chef_pose_out.png')
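Two call arguments are useful once the basic example runs: a seeded `torch.Generator` for reproducible outputs, and `controlnet_conditioning_scale`, which scales the ControlNet's contribution before it is added to the UNet. The wrapper below is a sketch; `generate_with_pose` and its defaults are hypothetical, while the keyword arguments it forwards are standard `StableDiffusionControlNetPipeline` call parameters.

```python
def generate_with_pose(pipe, pose_image, prompt: str, seed: int = 0,
                       conditioning_scale: float = 1.0, steps: int = 20):
    """Run the ControlNet pipeline reproducibly with an adjustable pose strength."""
    import torch  # lazy import: only needed when the function is called

    # A CPU generator with a fixed seed makes repeated runs produce the same image
    # (a CPU generator also works with enable_model_cpu_offload).
    generator = torch.Generator(device="cpu").manual_seed(seed)
    result = pipe(
        prompt,
        image=pose_image,
        num_inference_steps=steps,
        generator=generator,
        # Multiplies the ControlNet residuals added to the UNet;
        # values below 1.0 loosen adherence to the pose.
        controlnet_conditioning_scale=conditioning_scale,
    )
    return result.images[0]
```

With the pipeline and the OpenPose image from the example above, calling `generate_with_pose(pipe, pose, "chef in the kitchen", seed=42, conditioning_scale=0.8)` (where `pose` is the detector output) should give a repeatable image that follows the pose somewhat less strictly.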

Training

The OpenPose model was trained on 200k pose-image/caption pairs, with the pose images generated by OpenPose. Training took 300 GPU-hours on NVIDIA A100 80GB GPUs, using Stable Diffusion 1.5 as the base model.
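The 300 GPU-hour figure is a compute budget, not elapsed time, and the card does not state how many GPUs were used. A quick conversion, assuming ideal linear scaling across GPUs (real multi-GPU training adds communication overhead); the helper name is hypothetical.

```python
def wall_clock_hours(gpu_hours: float, num_gpus: int) -> float:
    """Ideal wall-clock time for a fixed GPU-hour budget, assuming perfect linear scaling."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return gpu_hours / num_gpus


TRAINING_GPU_HOURS = 300  # reported budget on NVIDIA A100 80GB

for gpus in (1, 8):
    hours = wall_clock_hours(TRAINING_GPU_HOURS, gpus)
    print(f"{gpus} GPU(s): {hours:.1f} h (~{hours / 24:.1f} days)")
# 1 GPU(s): 300.0 h (~12.5 days)
# 8 GPU(s): 37.5 h (~1.6 days)
```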

Blog post

For more information, please also refer to the official ControlNet Blog Post.

📄 License

This model is licensed under the CreativeML OpenRAIL-M license, an Open RAIL-M license adapted from the work that BigScience and the RAIL Initiative are jointly carrying out in the area of responsible AI licensing. See also the article about the BLOOM Open RAIL license, on which this license is based.