🚀 ControlNet - Image Segmentation Version

ControlNet is a neural network structure that controls diffusion models by adding extra input conditions. This checkpoint is the ControlNet conditioned on image segmentation maps. It can be used in conjunction with Stable Diffusion.

✨ Features

- Flexible Control: Allows for conditional control of text-to-image diffusion models.

- Multiple Checkpoints: Offers 8 different checkpoints trained on various conditioning types.

- Compatibility: Can be used with Stable Diffusion v1-5 and potentially other diffusion models.

📦 Installation

Install diffusers and related packages:

```
$ pip install diffusers transformers accelerate
```
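To confirm the install before running the usage code, a small check can report which of the required distributions are installed and at what version. This is a sketch using only the standard library's `importlib.metadata`; the helper name `installed_versions` is hypothetical, and the package list (which adds `torch`, imported by the usage code) is an assumption you can adjust.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("diffusers", "transformers", "accelerate", "torch")):
    """Return {distribution name: installed version string, or None if missing}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # The distribution is not installed in the current environment.
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```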

💻 Usage Examples

Basic Usage

We'll need a color palette, as described in semantic_segmentation, that maps each segmentation class index to an RGB color:

```python
import numpy as np

# 151 RGB colors; row i is the color used for class index i.
palette = np.asarray([
    [0, 0, 0],
    [120, 120, 120],
    [180, 120, 120],
    [6, 230, 230],
    [80, 50, 50],
    [4, 200, 3],
    [120, 120, 80],
    [140, 140, 140],
    [204, 5, 255],
    [230, 230, 230],
    [4, 250, 7],
    [224, 5, 255],
    [235, 255, 7],
    [150, 5, 61],
    [120, 120, 70],
    [8, 255, 51],
    [255, 6, 82],
    [143, 255, 140],
    [204, 255, 4],
    [255, 51, 7],
    [204, 70, 3],
    [0, 102, 200],
    [61, 230, 250],
    [255, 6, 51],
    [11, 102, 255],
    [255, 7, 71],
    [255, 9, 224],
    [9, 7, 230],
    [220, 220, 220],
    [255, 9, 92],
    [112, 9, 255],
    [8, 255, 214],
    [7, 255, 224],
    [255, 184, 6],
    [10, 255, 71],
    [255, 41, 10],
    [7, 255, 255],
    [224, 255, 8],
    [102, 8, 255],
    [255, 61, 6],
    [255, 194, 7],
    [255, 122, 8],
    [0, 255, 20],
    [255, 8, 41],
    [255, 5, 153],
    [6, 51, 255],
    [235, 12, 255],
    [160, 150, 20],
    [0, 163, 255],
    [140, 140, 140],
    [250, 10, 15],
    [20, 255, 0],
    [31, 255, 0],
    [255, 31, 0],
    [255, 224, 0],
    [153, 255, 0],
    [0, 0, 255],
    [255, 71, 0],
    [0, 235, 255],
    [0, 173, 255],
    [31, 0, 255],
    [11, 200, 200],
    [255, 82, 0],
    [0, 255, 245],
    [0, 61, 255],
    [0, 255, 112],
    [0, 255, 133],
    [255, 0, 0],
    [255, 163, 0],
    [255, 102, 0],
    [194, 255, 0],
    [0, 143, 255],
    [51, 255, 0],
    [0, 82, 255],
    [0, 255, 41],
    [0, 255, 173],
    [10, 0, 255],
    [173, 255, 0],
    [0, 255, 153],
    [255, 92, 0],
    [255, 0, 255],
    [255, 0, 245],
    [255, 0, 102],
    [255, 173, 0],
    [255, 0, 20],
    [255, 184, 184],
    [0, 31, 255],
    [0, 255, 61],
    [0, 71, 255],
    [255, 0, 204],
    [0, 255, 194],
    [0, 255, 82],
    [0, 10, 255],
    [0, 112, 255],
    [51, 0, 255],
    [0, 194, 255],
    [0, 122, 255],
    [0, 255, 163],
    [255, 153, 0],
    [0, 255, 10],
    [255, 112, 0],
    [143, 255, 0],
    [82, 0, 255],
    [163, 255, 0],
    [255, 235, 0],
    [8, 184, 170],
    [133, 0, 255],
    [0, 255, 92],
    [184, 0, 255],
    [255, 0, 31],
    [0, 184, 255],
    [0, 214, 255],
    [255, 0, 112],
    [92, 255, 0],
    [0, 224, 255],
    [112, 224, 255],
    [70, 184, 160],
    [163, 0, 255],
    [153, 0, 255],
    [71, 255, 0],
    [255, 0, 163],
    [255, 204, 0],
    [255, 0, 143],
    [0, 255, 235],
    [133, 255, 0],
    [255, 0, 235],
    [245, 0, 255],
    [255, 0, 122],
    [255, 245, 0],
    [10, 190, 212],
    [214, 255, 0],
    [0, 204, 255],
    [20, 0, 255],
    [255, 255, 0],
    [0, 153, 255],
    [0, 41, 255],
    [0, 255, 204],
    [41, 0, 255],
    [41, 255, 0],
    [173, 0, 255],
    [0, 245, 255],
    [71, 0, 255],
    [122, 0, 255],
    [0, 255, 184],
    [0, 92, 255],
    [184, 255, 0],
    [0, 133, 255],
    [255, 214, 0],
    [25, 194, 194],
    [102, 255, 0],
    [92, 0, 255],
], dtype=np.uint8)
```
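The palette acts as a lookup table: a semantic segmentation model returns one integer class index per pixel, and row i of the palette gives the RGB color drawn for class i. Below is a minimal NumPy sketch of that lookup, assuming the label map is an (H, W) integer array; `colorize` and the three-color demo palette are hypothetical names used only for illustration.

```python
import numpy as np


def colorize(seg, palette):
    """Map an (H, W) array of integer class indices to an (H, W, 3) uint8 RGB image."""
    palette = np.asarray(palette, dtype=np.uint8)
    seg = np.asarray(seg)
    if seg.min() < 0 or seg.max() >= len(palette):
        raise ValueError(f"class indices must lie in [0, {len(palette) - 1}]")
    # Fancy indexing picks palette[seg[i, j]] for every pixel in one step,
    # equivalent to looping over labels and assigning each label's color.
    return palette[seg]


# Tiny demo: a three-color palette and a 2x2 label map.
demo_palette = [[0, 0, 0], [120, 120, 120], [180, 120, 120]]
demo_seg = np.array([[0, 1], [2, 1]])
rgb = colorize(demo_seg, demo_palette)
print(rgb.shape)  # (2, 2, 3)
print(rgb[1, 0])  # [180 120 120]
```

The vectorized lookup avoids one boolean mask per class, which matters when the palette has as many entries as the one above.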

Advanced Usage

With the color palette defined, we can now run the whole segmentation + ControlNet generation code:

```python
# Segmentation model (UperNet) and image handling
from transformers import AutoImageProcessor, UperNetForSemanticSegmentation
from PIL import Image
import numpy as np
import torch
# ControlNet-conditioned Stable Diffusion pipeline and scheduler
from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler
```
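The imports above are where this section's generation code stops. A minimal sketch of the remaining steps might look as follows: segment the input with UperNet, color the label map with the palette defined earlier, then pass the color map to the ControlNet pipeline. The segmentor ID `openmmlab/upernet-convnext-small`, the base-model ID `stable-diffusion-v1-5/stable-diffusion-v1-5`, and the helper names `segment` and `generate` are assumptions for illustration; `lllyasviel/sd-controlnet-seg` is the segmentation checkpoint listed in the table of released checkpoints. Heavy imports are deferred into the functions so the module loads without the model stack.

```python
import numpy as np


def segment(image, palette, segmentor_id="openmmlab/upernet-convnext-small"):
    """Segment a PIL RGB image and return the color-coded control image (PIL)."""
    # Imported here rather than at module level, so this file can be
    # imported without torch/transformers installed.
    import torch
    from PIL import Image
    from transformers import AutoImageProcessor, UperNetForSemanticSegmentation

    processor = AutoImageProcessor.from_pretrained(segmentor_id)
    segmentor = UperNetForSemanticSegmentation.from_pretrained(segmentor_id)

    pixel_values = processor(image, return_tensors="pt").pixel_values
    with torch.no_grad():
        outputs = segmentor(pixel_values)

    # PIL sizes are (width, height); the post-processor expects (height, width).
    seg = processor.post_process_semantic_segmentation(
        outputs, target_sizes=[image.size[::-1]]
    )[0].cpu().numpy()

    # Row i of the palette is the RGB color for class index i.
    color_seg = np.asarray(palette, dtype=np.uint8)[seg]
    return Image.fromarray(color_seg)


def generate(
    control_image,
    prompt,
    base_model_id="stable-diffusion-v1-5/stable-diffusion-v1-5",
    num_inference_steps=20,
):
    """Generate an image from a prompt, conditioned on a segmentation control image."""
    import torch
    from diffusers import (
        ControlNetModel,
        StableDiffusionControlNetPipeline,
        UniPCMultistepScheduler,
    )

    # float16 weights assume a CUDA GPU.
    controlnet = ControlNetModel.from_pretrained(
        "lllyasviel/sd-controlnet-seg", torch_dtype=torch.float16
    )
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        base_model_id, controlnet=controlnet, torch_dtype=torch.float16
    )
    pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
    # Moves sub-models to the GPU only while they run; needs accelerate and CUDA.
    pipe.enable_model_cpu_offload()
    return pipe(
        prompt, image=control_image, num_inference_steps=num_inference_steps
    ).images[0]
```

For example, with `image` a PIL RGB photo of a room (a hypothetical input), `generate(segment(image, palette), "a cozy living room")` would return the generated PIL image.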

📚 Documentation

Model Details

Introduction

ControlNet was proposed in "Adding Conditional Control to Text-to-Image Diffusion Models" by Lvmin Zhang and Maneesh Agrawala.

The abstract reads as follows:

We present a neural network structure, ControlNet, to control pretrained large diffusion models to support additional input conditions. The ControlNet learns task-specific conditions in an end-to-end way, and the learning is robust even when the training dataset is small (< 50k). Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on a personal devices. Alternatively, if powerful computation clusters are available, the model can scale to large amounts (millions to billions) of data. We report that large diffusion models like Stable Diffusion can be augmented with ControlNets to enable conditional inputs like edge maps, segmentation maps, keypoints, etc. This may enrich the methods to control large diffusion models and further facilitate related applications.

Released Checkpoints

The authors released 8 different checkpoints, each trained with Stable Diffusion v1-5 on a different type of conditioning:

| Model Name |

Control Image Overview |

Control Image Example |

Generated Image Example |

[lllyasviel/sd - controlnet - canny](https://huggingface.co/lllyasviel/sd - controlnet - canny)

Trained with canny edge detection |

A monochrome image with white edges on a black background. |

|

|

[lllyasviel/sd - controlnet - depth](https://huggingface.co/lllyasviel/sd - controlnet - depth)

Trained with Midas depth estimation |

A grayscale image with black representing deep areas and white representing shallow areas. |

|

|

[lllyasviel/sd - controlnet - hed](https://huggingface.co/lllyasviel/sd - controlnet - hed)

Trained with HED edge detection (soft edge) |

A monochrome image with white soft edges on a black background. |

|

|

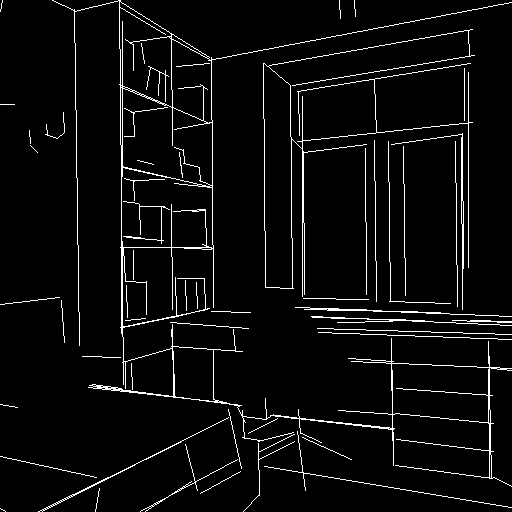

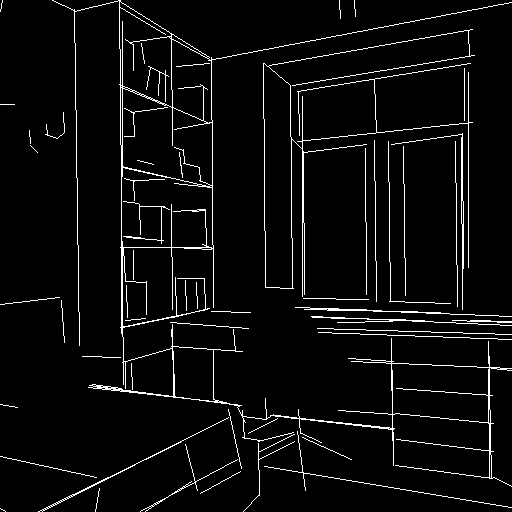

[lllyasviel/sd - controlnet - mlsd](https://huggingface.co/lllyasviel/sd - controlnet - mlsd)

Trained with M - LSD line detection |

A monochrome image composed only of white straight lines on a black background. |

|

|

[lllyasviel/sd - controlnet - normal](https://huggingface.co/lllyasviel/sd - controlnet - normal)

Trained with normal map |

A normal mapped image. |

|

|

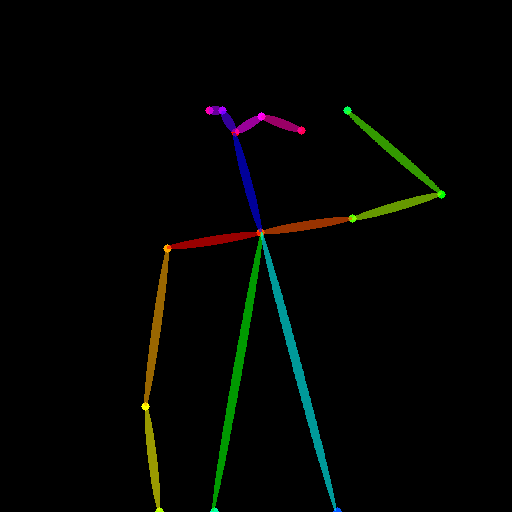

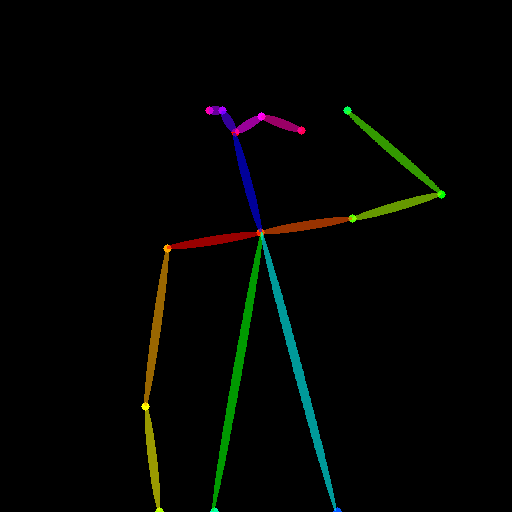

[lllyasviel/sd - controlnet_openpose](https://huggingface.co/lllyasviel/sd - controlnet - openpose)

Trained with OpenPose bone image |

A [OpenPose bone](https://github.com/CMU - Perceptual - Computing - Lab/openpose) image. |

|

|

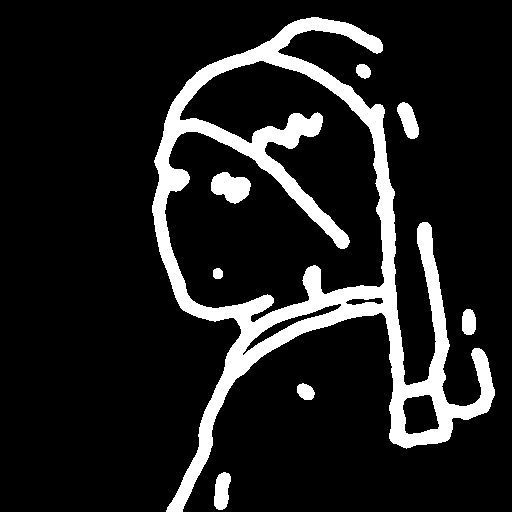

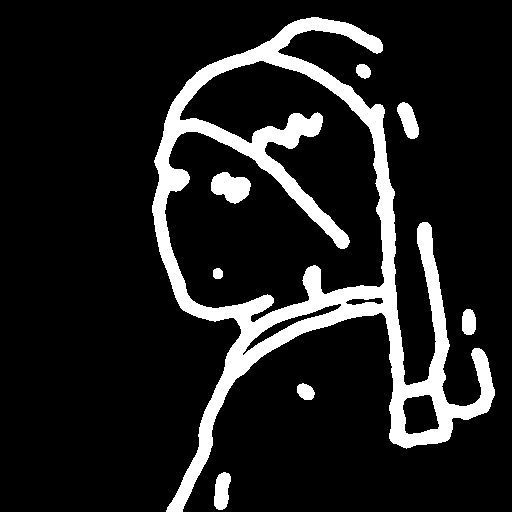

[lllyasviel/sd - controlnet_scribble](https://huggingface.co/lllyasviel/sd - controlnet - scribble)

Trained with human scribbles |

A hand - drawn monochrome image with white outlines on a black background. |

|

|

[lllyasviel/sd - controlnet_seg](https://huggingface.co/lllyasviel/sd - controlnet - seg)

Trained with semantic segmentation |

An ADE20K's segmentation protocol image. |

|

|
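When switching between conditioning types in code, the repository IDs of the released checkpoints can be kept in a single mapping. A small sketch follows; the short keys such as `"seg"` and the helper `checkpoint_for` are hypothetical, while the repository IDs are taken from the checkpoint links in the table.

```python
# Repository IDs from the released-checkpoints table; the keys are informal
# labels chosen for this sketch, not names defined by the authors.
CONTROLNET_CHECKPOINTS = {
    "canny": "lllyasviel/sd-controlnet-canny",
    "depth": "lllyasviel/sd-controlnet-depth",
    "hed": "lllyasviel/sd-controlnet-hed",
    "mlsd": "lllyasviel/sd-controlnet-mlsd",
    "normal": "lllyasviel/sd-controlnet-normal",
    "openpose": "lllyasviel/sd-controlnet-openpose",
    "scribble": "lllyasviel/sd-controlnet-scribble",
    "seg": "lllyasviel/sd-controlnet-seg",
}


def checkpoint_for(condition: str) -> str:
    """Return the repository ID for a conditioning type (case-insensitive)."""
    try:
        return CONTROLNET_CHECKPOINTS[condition.lower()]
    except KeyError:
        known = ", ".join(sorted(CONTROLNET_CHECKPOINTS))
        raise ValueError(f"unknown condition {condition!r}; expected one of: {known}") from None
```

For example, `ControlNetModel.from_pretrained(checkpoint_for("seg"))` would load this card's segmentation checkpoint.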

📄 License

The model is released under the CreativeML OpenRAIL M license.