🚀 ControlNet v1.1 - Tile Version

ControlNet v1.1 is a neural network structure that adds extra conditions to control diffusion models. Combined with Stable Diffusion, it generates high-quality images and offers more flexibility and control over image generation. This card covers the tile checkpoint, `lllyasviel/control_v11f1e_sd15_tile`.

🚀 Quick Start

Installation

First, install `diffusers` and its related packages:

```bash
pip install diffusers transformers accelerate
```

Usage

The following example shows how to use this model:

```python
import torch
from PIL import Image
from diffusers import ControlNetModel, DiffusionPipeline
from diffusers.utils import load_image


def resize_for_condition_image(input_image: Image.Image, resolution: int) -> Image.Image:
    """Scale so the shorter side is about `resolution`, snapping both sides to multiples of 64."""
    input_image = input_image.convert("RGB")
    W, H = input_image.size
    k = float(resolution) / min(H, W)
    H *= k
    W *= k
    # Multiples of 64 divide cleanly through the VAE (8x) and UNet downsampling.
    H = int(round(H / 64.0)) * 64
    W = int(round(W / 64.0)) * 64
    img = input_image.resize((W, H), resample=Image.LANCZOS)
    return img


# Load the tile ControlNet and attach it to Stable Diffusion v1.5 through the
# community img2img ControlNet pipeline.
controlnet = ControlNetModel.from_pretrained('lllyasviel/control_v11f1e_sd15_tile',
                                             torch_dtype=torch.float16)
pipe = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5",
                                         custom_pipeline="stable_diffusion_controlnet_img2img",
                                         controlnet=controlnet,
                                         torch_dtype=torch.float16).to('cuda')
# Optional memory optimization; requires the xformers package.
pipe.enable_xformers_memory_efficient_attention()

source_image = load_image('https://huggingface.co/lllyasviel/control_v11f1e_sd15_tile/resolve/main/images/original.png')
condition_image = resize_for_condition_image(source_image, 1024)

# The condition image serves both as the img2img input and as the ControlNet condition.
image = pipe(prompt="best quality",
             negative_prompt="blur, lowres, bad anatomy, bad hands, cropped, worst quality",
             image=condition_image,
             controlnet_conditioning_image=condition_image,
             width=condition_image.size[0],
             height=condition_image.size[1],
             strength=1.0,
             generator=torch.manual_seed(0),
             num_inference_steps=32,
             ).images[0]
image.save('output.png')
```
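Because the call above takes `width` and `height` from the condition image, it can help to know the output size before loading any model. The function below is a hypothetical, PIL-free restatement of the arithmetic in `resize_for_condition_image` (`condition_image_size` is not part of diffusers); it is a sketch that assumes only the scaling and rounding rules shown in that helper.

```python
from typing import Tuple


def condition_image_size(width: int, height: int, resolution: int) -> Tuple[int, int]:
    """Hypothetical helper: the (width, height) resize_for_condition_image would produce.

    Scales so the shorter side equals `resolution`, then rounds each side to the
    nearest multiple of 64, using the same operations as the PIL-based helper.
    """
    k = float(resolution) / min(width, height)
    w = int(round(width * k / 64.0)) * 64
    h = int(round(height * k / 64.0)) * 64
    return w, h
```

For example, a 640×480 source with `resolution=1024` becomes a 1344×1024 condition image, and since the pipeline call passes the condition image's size as `width` and `height`, the generated image has that size too.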

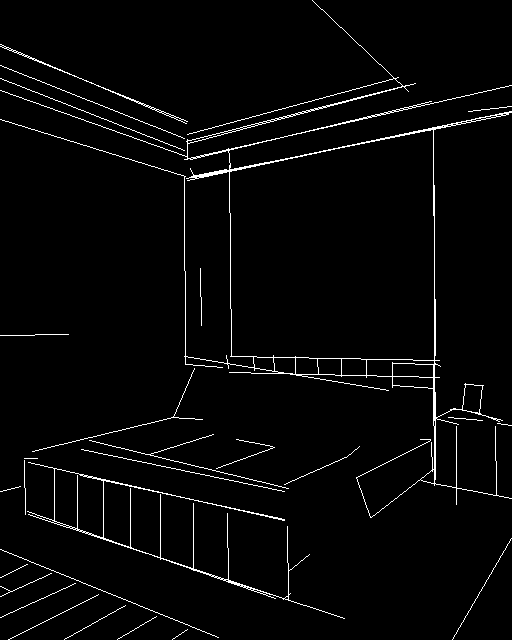

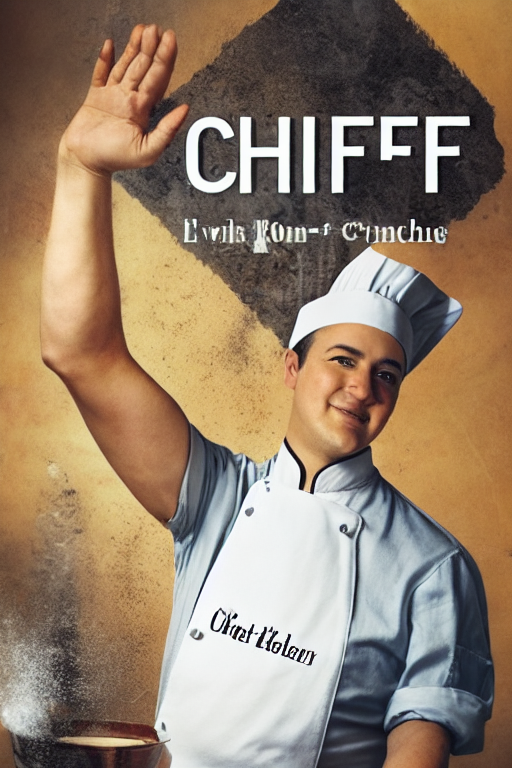

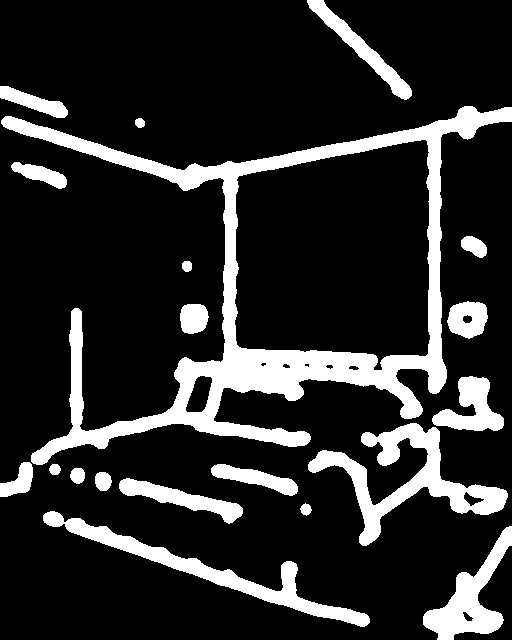

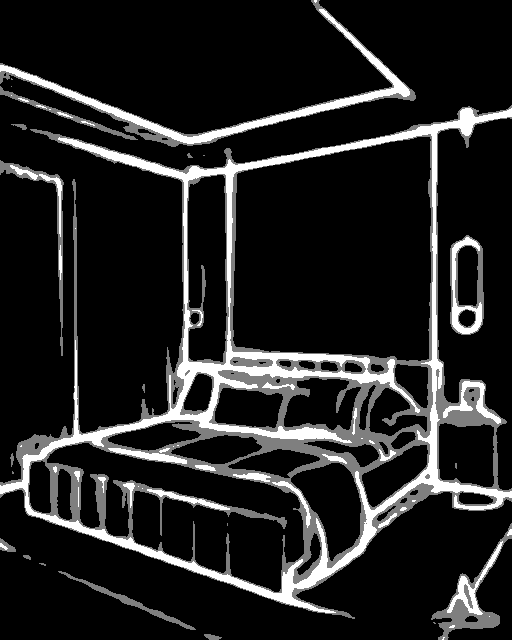

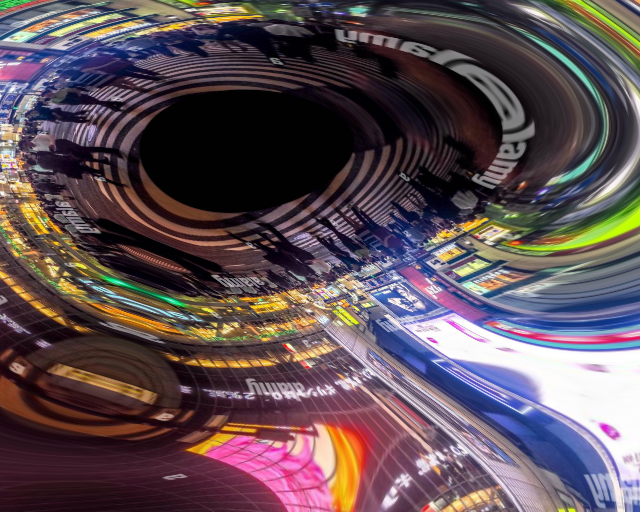

The following are the original and generated images:

✨ Features

- Extra Conditional Control: ControlNet adds extra conditions to diffusion models, enabling more precise control over image generation.

- Combination with Stable Diffusion: It can be used with Stable Diffusion checkpoints such as `runwayml/stable-diffusion-v1-5` to generate high-quality images.

- Multiple Conditioning Types: There are 14 different checkpoints, each trained with a different type of conditioning, providing a wide range of application scenarios.

📦 Installation

As mentioned in the Quick Start section, you can install the necessary packages using the following command:

```bash
pip install diffusers transformers accelerate
```

💻 Usage Examples

Basic Usage

The code example in the Quick Start section shows the basic usage of this model. You can adjust parameters such as `prompt`, `negative_prompt`, and `strength` to generate different images.
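To compare settings side by side, one option is to re-run the pipeline while changing a single keyword argument. The helper below is a hypothetical sketch (`sweep_parameter` is not part of diffusers); it assumes only that `pipe(**kwargs)` returns an object with an `.images` list, as in the Quick Start example.

```python
from typing import Any, Callable, Dict, Iterable, List


def sweep_parameter(
    pipe: Callable[..., Any],
    make_kwargs: Callable[[], Dict[str, Any]],
    name: str,
    values: Iterable[Any],
) -> List[Any]:
    """Hypothetical helper: call `pipe` once per value of keyword `name`, keep the first image of each run."""
    images = []
    for value in values:
        # Build fresh kwargs each run so a seeded generator restarts from the same state.
        kwargs = make_kwargs()
        kwargs[name] = value
        images.append(pipe(**kwargs).images[0])
    return images


# Hypothetical usage with `pipe`, `condition_image`, and `torch` from the Quick Start example:
# images = sweep_parameter(
#     pipe,
#     lambda: dict(
#         prompt="best quality",
#         negative_prompt="blur, lowres, bad anatomy, bad hands, cropped, worst quality",
#         image=condition_image,
#         controlnet_conditioning_image=condition_image,
#         width=condition_image.size[0],
#         height=condition_image.size[1],
#         generator=torch.manual_seed(0),
#         num_inference_steps=32,
#     ),
#     "strength",
#     [0.5, 0.75, 1.0],
# )
```

Passing a factory instead of a fixed dict matters for the generator: `torch.manual_seed(0)` reseeds and returns the global generator, so calling it once per run gives every run the same seed and isolates the effect of the swept parameter.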

Advanced Usage

You can experiment with different diffusion models (e.g., DreamBooth fine-tuned Stable Diffusion checkpoints) and different conditioning images to explore what else this model can do.

📚 Documentation

Model Details

| Property | Details |
| --- | --- |
| Developed by | Lvmin Zhang, Maneesh Agrawala |
| Model Type | Diffusion-based text-to-image generation model |
| Language(s) | English |
| License | [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses), adapted from the work that BigScience and the RAIL Initiative are jointly carrying out in the area of responsible AI licensing. See also [the article about the BLOOM Open RAIL license](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) on which our license is based. |
| Resources for more information | GitHub Repository, Paper |
| Cite as | See the BibTeX entry below |

```bibtex
@misc{zhang2023adding,
  title={Adding Conditional Control to Text-to-Image Diffusion Models},
  author={Lvmin Zhang and Maneesh Agrawala},
  year={2023},
  eprint={2302.05543},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

Introduction

ControlNet was proposed in *Adding Conditional Control to Text-to-Image Diffusion Models* by Lvmin Zhang and Maneesh Agrawala. According to the abstract, ControlNet can control pretrained large diffusion models to support additional input conditions. It learns task-specific conditions end to end, and training is fast and can be performed on personal devices.

Other released checkpoints (v1.1)

The authors released 14 different checkpoints, each trained with [Stable Diffusion v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5) on a different type of conditioning:

More information

For more information, please also have a look at the Diffusers ControlNet Blog Post and the official docs.

🔧 Technical Details

ControlNet is a neural network structure that adds extra conditions to diffusion models. It learns task-specific conditions end to end, and training is fast. The model can be trained on personal devices with a small training dataset, or it can scale to large amounts of data when powerful computation clusters are available.
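To make "adds extra conditions" concrete, here is our simplified, single-block summary of the formulation in the ControlNet paper (arXiv:2302.05543); treat it as a sketch rather than the full architecture. A pretrained block $\mathcal{F}(\cdot;\Theta)$ is kept locked, a trainable copy with parameters $\Theta_c$ receives the condition, and two zero-initialized $1\times1$ convolutions $\mathcal{Z}$ with parameters $\Theta_{z1}$ and $\Theta_{z2}$ connect them. For an input feature map $x$ and a condition $c$:

$$
y_c = \mathcal{F}(x;\Theta) + \mathcal{Z}\big(\mathcal{F}(x + \mathcal{Z}(c;\Theta_{z1});\Theta_c);\Theta_{z2}\big)
$$

Since both zero convolutions start with zero weights and biases, $\mathcal{Z}(\cdot) = 0$ at initialization and therefore $y_c = \mathcal{F}(x;\Theta)$: training starts from the unmodified pretrained model, and the condition's influence is learned gradually.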

📄 License

This model is released under [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license), which is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses).