
🚀 ControlNet - v1.1 - Inpaint Version

ControlNet is a neural network structure that controls diffusion models by adding extra conditions. This checkpoint is the ControlNet conditioned on inpaint images: the condition is the input image with the region to be repainted masked out, which makes it useful for image editing and generation. It can be used together with Stable Diffusion models (it was trained on Stable Diffusion v1-5), giving users finer control over the image generation process.

🚀 Quick Start

Installation

First, install diffusers and related packages:

```shell
$ pip install diffusers transformers accelerate
```

Usage

Run the following code to use the model:

```python
# !pip install transformers accelerate
from diffusers import StableDiffusionControlNetInpaintPipeline, ControlNetModel, DDIMScheduler
from diffusers.utils import load_image
import numpy as np
import torch

init_image = load_image(
    "https://huggingface.co/datasets/diffusers/test-arrays/resolve/main/stable_diffusion_inpaint/boy.png"
)
init_image = init_image.resize((512, 512))

generator = torch.Generator(device="cpu").manual_seed(1)

mask_image = load_image(
    "https://huggingface.co/datasets/diffusers/test-arrays/resolve/main/stable_diffusion_inpaint/boy_mask.png"
)
mask_image = mask_image.resize((512, 512))


def make_inpaint_condition(image, image_mask):
    # Normalize the RGB image and the grayscale mask to [0, 1]
    image = np.array(image.convert("RGB")).astype(np.float32) / 255.0
    image_mask = np.array(image_mask.convert("L")).astype(np.float32) / 255.0

    # Compare both height and width (shape[0:2])
    assert image.shape[0:2] == image_mask.shape[0:2], "image and image_mask must have the same image size"
    image[image_mask > 0.5] = -1.0  # set as masked pixel

    # HWC -> NCHW with a batch dimension of 1
    image = np.expand_dims(image, 0).transpose(0, 3, 1, 2)
    image = torch.from_numpy(image)
    return image


control_image = make_inpaint_condition(init_image, mask_image)

controlnet = ControlNetModel.from_pretrained(
    "lllyasviel/control_v11p_sd15_inpaint", torch_dtype=torch.float16
)
pipe = StableDiffusionControlNetInpaintPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16
)

pipe.scheduler = DDIMScheduler.from_config(pipe.scheduler.config)
pipe.enable_model_cpu_offload()

# generate image
image = pipe(
    "a handsome man with ray-ban sunglasses",
    num_inference_steps=20,
    generator=generator,
    eta=1.0,
    image=init_image,
    mask_image=mask_image,
    control_image=control_image,
).images[0]
```
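To see what the `control_image` built by `make_inpaint_condition` contains without downloading any weights, the sketch below reproduces the same masking convention on a tiny synthetic array with NumPy only. `make_inpaint_condition_np` is a hypothetical array-based variant written for illustration; it assumes `image` is an H×W×3 `uint8` RGB array and `mask` is an H×W `uint8` array in which white (255) marks the region to repaint.

```python
import numpy as np


def make_inpaint_condition_np(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Hypothetical NumPy-only variant of make_inpaint_condition.

    image: H x W x 3 uint8 RGB array; mask: H x W uint8 array (255 = repaint).
    Returns a 1 x 3 x H x W float32 array in which masked pixels are -1.0.
    """
    img = image.astype(np.float32) / 255.0
    m = mask.astype(np.float32) / 255.0
    if img.shape[:2] != m.shape:
        raise ValueError("image and mask must have the same height and width")
    # Masked pixels get -1.0, a value outside the normalized [0, 1] range
    img[m > 0.5] = -1.0
    # HWC -> NCHW with a batch dimension of 1, as in the original helper
    return np.expand_dims(img, 0).transpose(0, 3, 1, 2)


# A 4x4 mid-gray image with the top-left 2x2 block marked for inpainting
image = np.full((4, 4, 3), 128, dtype=np.uint8)
mask = np.zeros((4, 4), dtype=np.uint8)
mask[:2, :2] = 255

cond = make_inpaint_condition_np(image, mask)
print(cond.shape)  # (1, 3, 4, 4)
print(cond[0, 0, :2, :2])  # every entry is -1.0
```

Using an out-of-range value rather than zero lets the ControlNet distinguish "masked" from "black" pixels.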


✨ Features

- Conditional Control: ControlNet allows for the addition of extra conditions to diffusion models, enabling more precise control over the image generation process.

- Inpaint Capability: This checkpoint is specifically trained for image inpainting, making it suitable for tasks such as image editing and restoration.

- Compatibility: It can be used in combination with Stable Diffusion models, providing flexibility and compatibility with existing workflows.


💻 Usage Examples


Advanced Usage

The model can also be used with other diffusion models, such as DreamBooth fine-tunes of Stable Diffusion. However, Stable Diffusion v1-5 is recommended, because the checkpoint was trained on it.
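Swapping the base model only changes the ID passed to the pipeline. The helper below is a minimal sketch, assuming the replacement is a Stable Diffusion 1.5-derived checkpoint in diffusers format (for example, a DreamBooth fine-tune); the function name `load_inpaint_pipeline` and the local path in the usage comment are hypothetical. It reuses only the calls from the Quick Start example.

```python
def load_inpaint_pipeline(base_model_id: str, controlnet_id: str = "lllyasviel/control_v11p_sd15_inpaint"):
    """Hypothetical helper: build a ControlNet inpainting pipeline on an SD 1.5-family base model.

    base_model_id is assumed to be a Hub ID or local path of a Stable Diffusion
    1.5-derived checkpoint in diffusers format (e.g. a DreamBooth fine-tune).
    """
    # Imported lazily so that defining the helper does not require the heavy dependencies
    import torch
    from diffusers import ControlNetModel, DDIMScheduler, StableDiffusionControlNetInpaintPipeline

    controlnet = ControlNetModel.from_pretrained(controlnet_id, torch_dtype=torch.float16)
    pipe = StableDiffusionControlNetInpaintPipeline.from_pretrained(
        base_model_id, controlnet=controlnet, torch_dtype=torch.float16
    )
    pipe.scheduler = DDIMScheduler.from_config(pipe.scheduler.config)
    pipe.enable_model_cpu_offload()
    return pipe


# Hypothetical local path to a DreamBooth fine-tune of SD v1-5:
# pipe = load_inpaint_pipeline("./my-dreambooth-sd15")
```

The returned pipeline is called exactly as in the Quick Start. Since the checkpoint was trained on v1-5, quality on other fine-tunes may vary.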

📚 Documentation

For more details, please refer to the 🧨 Diffusers docs.

🔧 Technical Details

ControlNet is a neural network structure proposed in the paper "Adding Conditional Control to Text-to-Image Diffusion Models" by Lvmin Zhang and Maneesh Agrawala. It adds extra conditions to large pretrained diffusion models, enabling more precise control over the image generation process. The model learns task-specific conditions end to end, and the learning is robust even when the training dataset is small.
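The core mechanism from the paper can be sketched in a few lines: the pretrained block is frozen ("locked"), a trainable copy receives the condition, and the two are joined by "zero convolutions" (1×1 convolutions whose weights and biases start at zero), so at initialization the extra branch contributes nothing and the pretrained model's output is preserved. The NumPy sketch below uses a toy block (a 1×1 convolution followed by `tanh`) and random inputs as stand-ins; it illustrates only this initialization property and is not the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
C, H, W = 4, 8, 8  # toy feature-map size (channels, height, width)


def conv1x1(x, weight, bias):
    """1x1 convolution on a C x H x W array: a per-pixel linear map over channels."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def block(x, weight, bias):
    """Toy stand-in for a pretrained network block F(x; theta)."""
    return np.tanh(conv1x1(x, weight, bias))


# Locked (frozen) parameters of the pretrained block
theta = (rng.normal(size=(C, C)), rng.normal(size=C))
# Trainable copy, initialized from the locked weights
theta_c = (theta[0].copy(), theta[1].copy())
# Two zero convolutions: weights and biases initialized to zero
z1 = (np.zeros((C, C)), np.zeros(C))
z2 = (np.zeros((C, C)), np.zeros(C))


def controlnet_block(x, c):
    """y_c = F(x; theta) + Z(F(x + Z(c; z1); theta_c); z2)."""
    locked = block(x, *theta)
    control = conv1x1(block(x + conv1x1(c, *z1), *theta_c), *z2)
    return locked + control


x = rng.normal(size=(C, H, W))  # input feature map
c = rng.normal(size=(C, H, W))  # condition features (e.g. an encoded masked image)
y = block(x, *theta)
y_c = controlnet_block(x, c)
print(np.allclose(y, y_c))  # True: at initialization the condition has no effect
```

During training, gradients flow into the zero-convolution parameters, so the condition's influence grows gradually from zero instead of disrupting the pretrained weights at the first step.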

Model Details

| Property | Details |
|---|---|
| Model Type | Diffusion-based text-to-image generation model |
| Developed by | Lvmin Zhang, Maneesh Agrawala |
| Language(s) | English |
| License | The CreativeML OpenRAIL M license, an Open RAIL M license, adapted from the work of BigScience and the RAIL Initiative in responsible AI licensing. See also the article about the BLOOM Open RAIL license on which our license is based. |
| Resources for more information | GitHub Repository, Paper |
| Cite as | @misc{zhang2023adding, title={Adding Conditional Control to Text-to-Image Diffusion Models}, author={Lvmin Zhang and Maneesh Agrawala}, year={2023}, eprint={2302.05543}, archivePrefix={arXiv}, primaryClass={cs.CV} } |

📄 License

This model is released under the CreativeML OpenRAIL M license, an Open RAIL M license adapted from the work of BigScience and the RAIL Initiative in responsible AI licensing.

Other released checkpoints v1-1

The authors released 14 different checkpoints, each trained with Stable Diffusion v1-5 on a different type of conditioning:

| Model Name | Control Image Overview | Condition Image | Control Image Example | Generated Image Example |

|---|---|---|---|---|

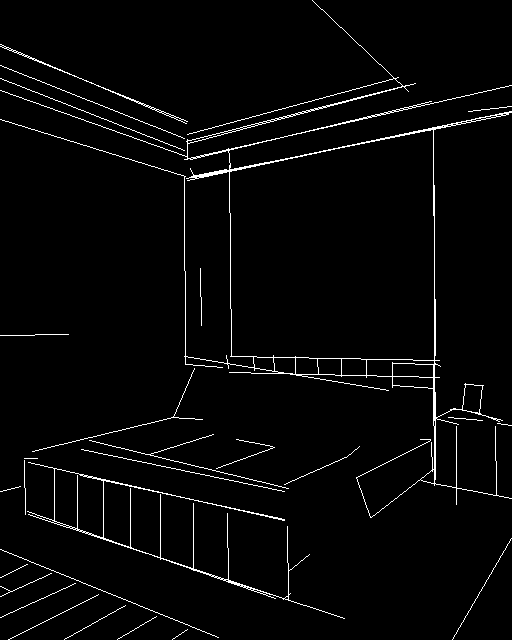

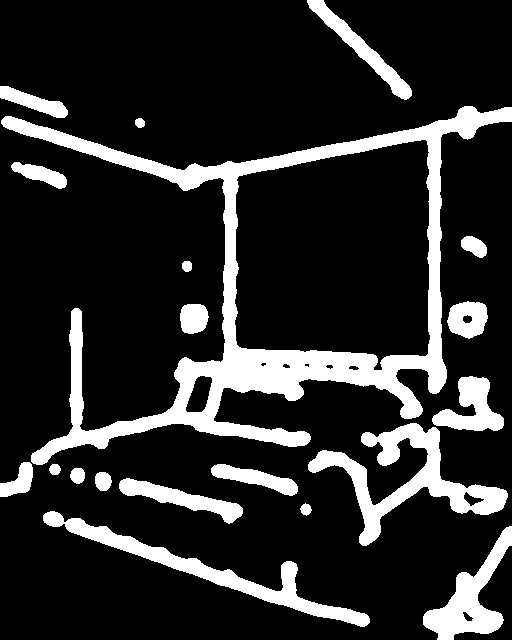

| lllyasviel/control_v11p_sd15_canny |

Trained with canny edge detection | A monochrome image with white edges on a black background. |  |

|

| lllyasviel/control_v11e_sd15_ip2p |

Trained with pixel to pixel instruction | No condition . |  |

|

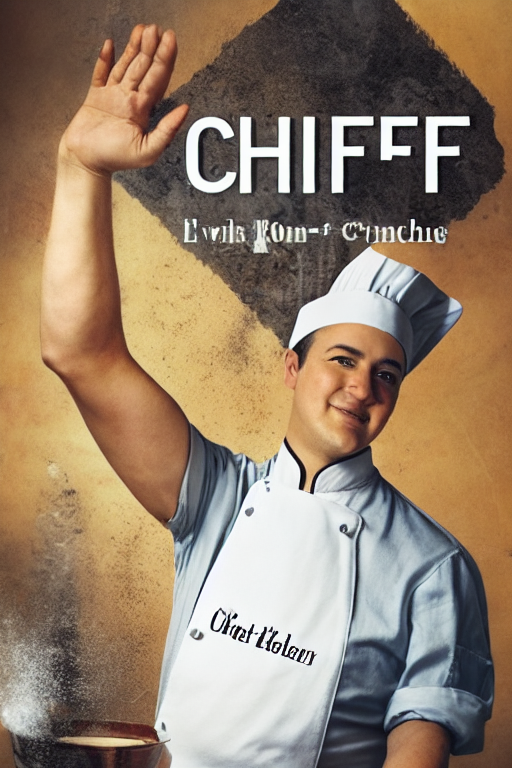

| lllyasviel/control_v11p_sd15_inpaint |

Trained with image inpainting | No condition. |  |

|

| lllyasviel/control_v11p_sd15_mlsd |

Trained with multi-level line segment detection | An image with annotated line segments. |  |

|

| lllyasviel/control_v11f1p_sd15_depth |

Trained with depth estimation | An image with depth information, usually represented as a grayscale image. |  |

|

| lllyasviel/control_v11p_sd15_normalbae |

Trained with surface normal estimation | An image with surface normal information, usually represented as a color-coded image. |  |

|

| lllyasviel/control_v11p_sd15_seg |

Trained with image segmentation | An image with segmented regions, usually represented as a color-coded image. |  |

|

| lllyasviel/control_v11p_sd15_lineart |

Trained with line art generation | An image with line art, usually black lines on a white background. |  |

|

| lllyasviel/control_v11p_sd15s2_lineart_anime |

Trained with anime line art generation | An image with anime-style line art. |  |

|

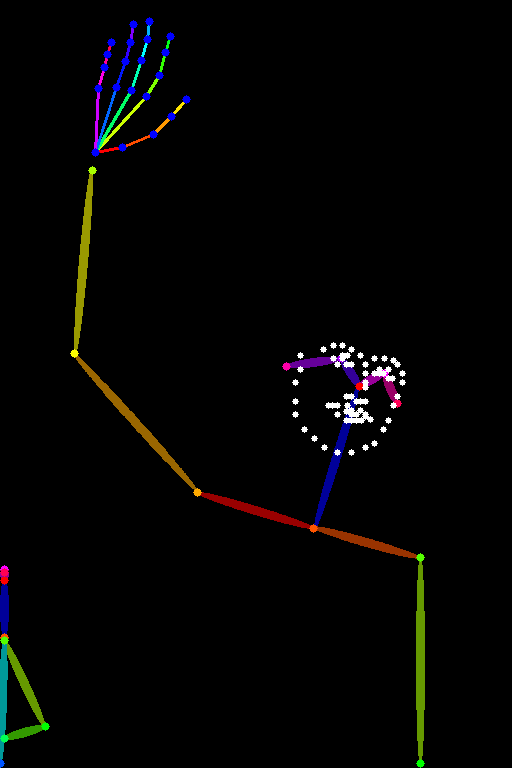

| lllyasviel/control_v11p_sd15_openpose |

Trained with human pose estimation | An image with human poses, usually represented as a set of keypoints or skeletons. |  |

|

| lllyasviel/control_v11p_sd15_scribble |

Trained with scribble input | An image with scribble lines. |  |

|
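When switching between the checkpoints listed in the table, a small mapping from conditioning type to Hub ID keeps the choice in one place. The sketch below covers only the rows shown in this table; the dictionary keys and the helper name `controlnet_id_for` are hypothetical, chosen for illustration.

```python
# Conditioning type -> ControlNet v1.1 checkpoint ID (rows from the table)
CONTROLNET_V11_SD15 = {
    "canny": "lllyasviel/control_v11p_sd15_canny",
    "ip2p": "lllyasviel/control_v11e_sd15_ip2p",
    "inpaint": "lllyasviel/control_v11p_sd15_inpaint",
    "mlsd": "lllyasviel/control_v11p_sd15_mlsd",
    "depth": "lllyasviel/control_v11f1p_sd15_depth",
    "normalbae": "lllyasviel/control_v11p_sd15_normalbae",
    "seg": "lllyasviel/control_v11p_sd15_seg",
    "lineart": "lllyasviel/control_v11p_sd15_lineart",
    "lineart_anime": "lllyasviel/control_v11p_sd15s2_lineart_anime",
    "openpose": "lllyasviel/control_v11p_sd15_openpose",
    "scribble": "lllyasviel/control_v11p_sd15_scribble",
}


def controlnet_id_for(condition: str) -> str:
    """Return the checkpoint ID for a conditioning type, or raise listing the valid options."""
    try:
        return CONTROLNET_V11_SD15[condition]
    except KeyError:
        valid = ", ".join(sorted(CONTROLNET_V11_SD15))
        raise ValueError(f"unknown condition {condition!r}; expected one of: {valid}") from None


print(controlnet_id_for("inpaint"))  # lllyasviel/control_v11p_sd15_inpaint
```

The returned ID can be passed to `ControlNetModel.from_pretrained` in place of the hard-coded inpaint checkpoint; each checkpoint still expects its own kind of condition image.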