🚀 WizardMath: Empowering Mathematical Reasoning for Large Language Models via Reinforced Evol-Instruct (RLEIF)

WizardMath enhances large language models' mathematical reasoning capabilities through Reinforced Evol-Instruct (RLEIF), offering high-performance models for various mathematical and general tasks.

🤗 HF Repo • 🐱 GitHub Repo • 🐦 Twitter • 📃 [WizardLM] • 📃 [WizardCoder] • 📃 [WizardMath]

👋 Join our Discord

✨ Features

The Wizard model family (WizardCoder, WizardMath, and WizardLM) provides models at several scales, suited to code generation, mathematical problem-solving, and general language interaction respectively. Benchmark results for each model are listed in the tables below.

📚 Documentation

WizardCoder Models

| Model | Checkpoint | Paper | HumanEval | MBPP | Demo | License |
| --- | --- | --- | --- | --- | --- | --- |
| WizardCoder-Python-34B-V1.0 | 🤗 HF Link | 📃 [WizardCoder] | 73.2 | 61.2 | Demo | Llama 2 |
| WizardCoder-15B-V1.0 | 🤗 HF Link | 📃 [WizardCoder] | 59.8 | 50.6 | -- | OpenRAIL-M |
| WizardCoder-Python-13B-V1.0 | 🤗 HF Link | 📃 [WizardCoder] | 64.0 | 55.6 | -- | Llama 2 |
| WizardCoder-Python-7B-V1.0 | 🤗 HF Link | 📃 [WizardCoder] | 55.5 | 51.6 | Demo | Llama 2 |
| WizardCoder-3B-V1.0 | 🤗 HF Link | 📃 [WizardCoder] | 34.8 | 37.4 | -- | OpenRAIL-M |
| WizardCoder-1B-V1.0 | 🤗 HF Link | 📃 [WizardCoder] | 23.8 | 28.6 | -- | OpenRAIL-M |

WizardMath Models

| Property |

Details |

| Model Type |

WizardMath-70B-V1.0, WizardMath-13B-V1.0, WizardMath-7B-V1.0 |

| Checkpoint |

🤗 HF Link, 🤗 HF Link, 🤗 HF Link |

| Paper |

📃 [WizardMath] |

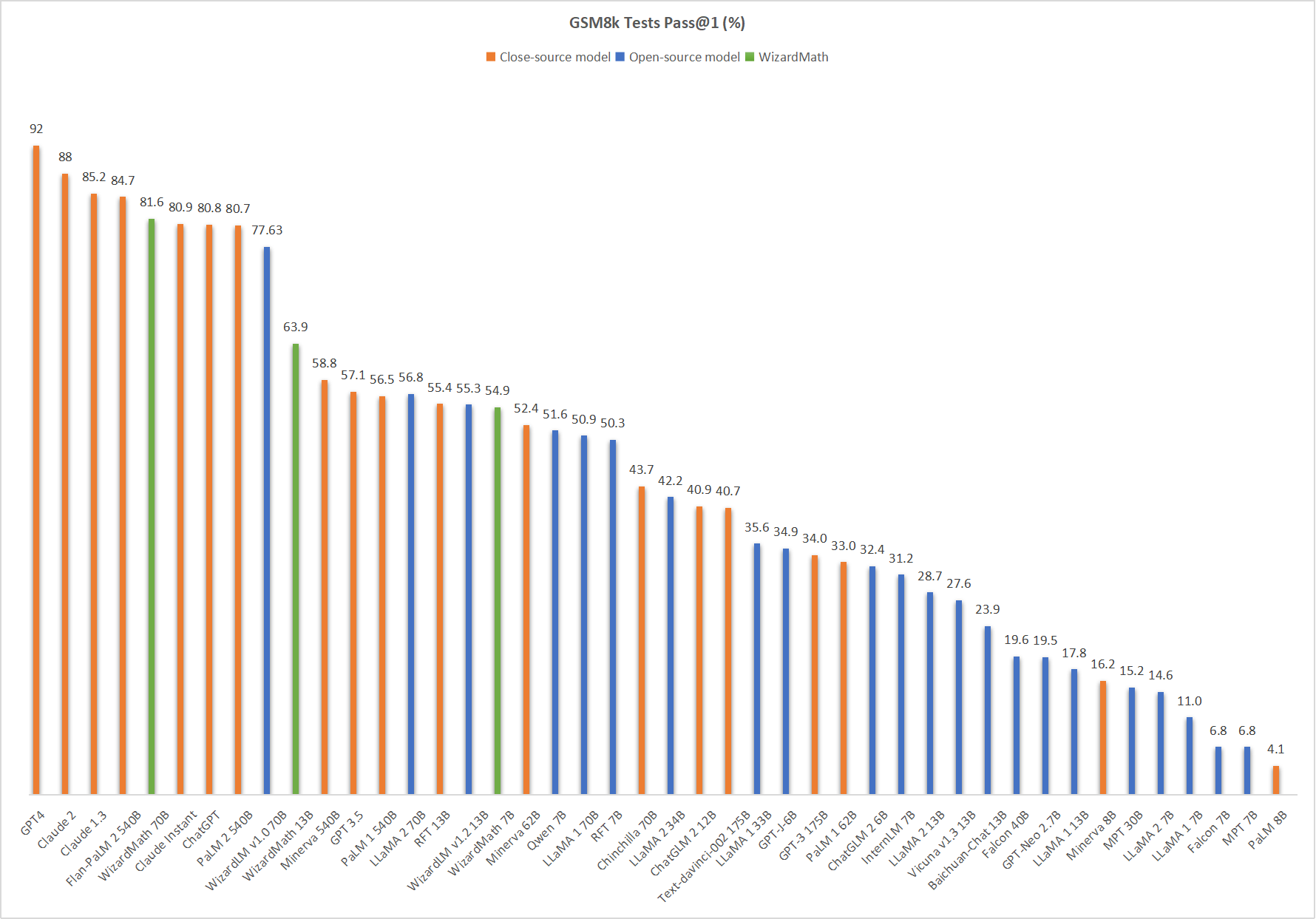

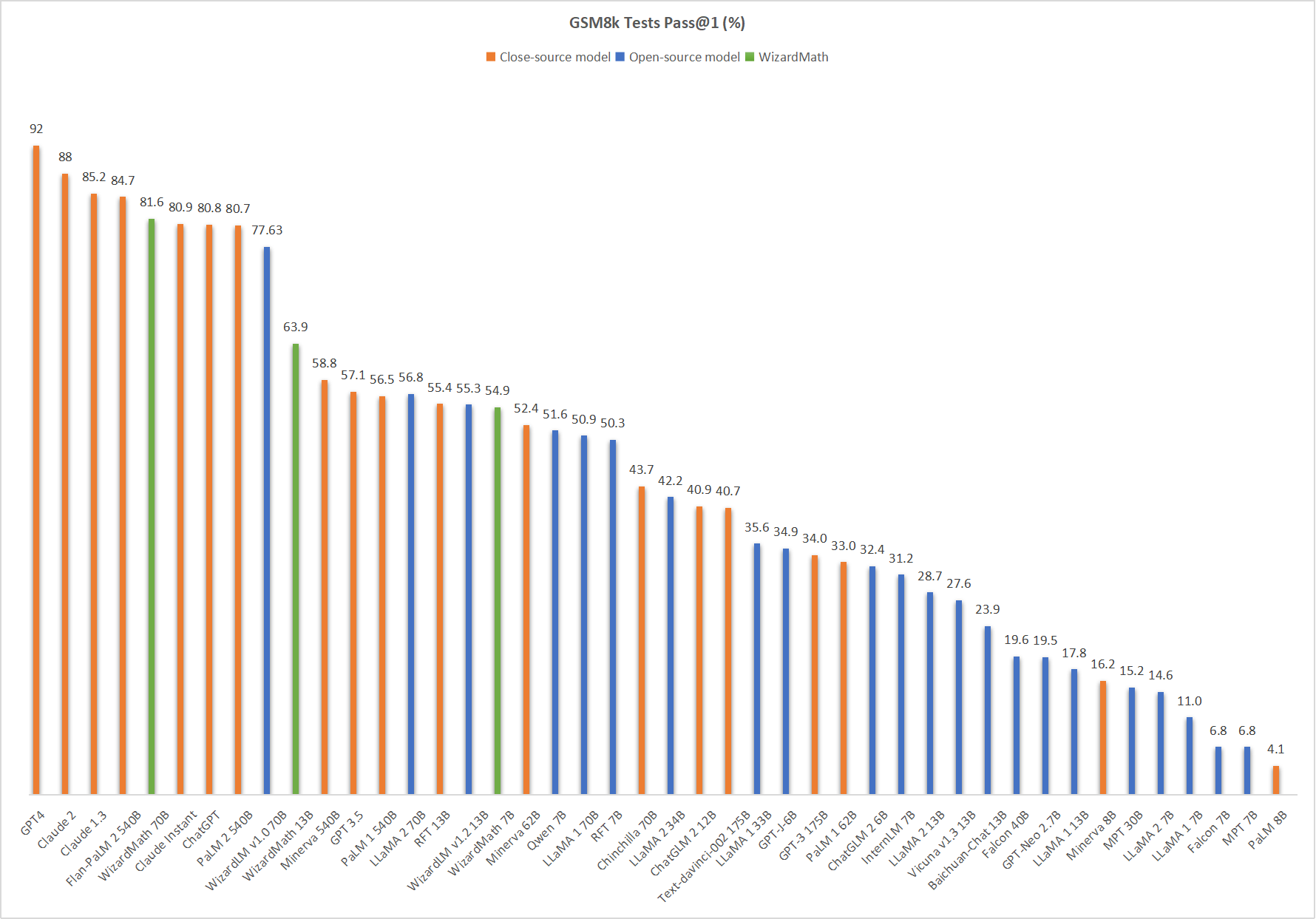

| GSM8k |

81.6, 63.9, 54.9 |

| MATH |

22.7, 14.0, 10.7 |

| Online Demo |

Demo, Demo, Demo |

| License |

Llama 2 |

WizardLM Models

| Model | Checkpoint | Paper | MT-Bench | AlpacaEval | GSM8k | HumanEval (pass@1) | License |
| --- | --- | --- | --- | --- | --- | --- | --- |
| WizardLM-70B-V1.0 | 🤗 HF Link | 📃 Coming Soon | 7.78 | 92.91% | 77.6% | 50.6 | Llama 2 License |
| WizardLM-13B-V1.2 | 🤗 HF Link | | 7.06 | 89.17% | 55.3% | 36.6 | Llama 2 License |
| WizardLM-13B-V1.1 | 🤗 HF Link | | 6.76 | 86.32% | | 25.0 | Non-commercial |
| WizardLM-30B-V1.0 | 🤗 HF Link | | 7.01 | | | 37.8 | Non-commercial |
| WizardLM-13B-V1.0 | 🤗 HF Link | | 6.35 | 75.31% | | 24.0 | Non-commercial |
| WizardLM-7B-V1.0 | 🤗 HF Link | 📃 [WizardLM] | | | | 19.1 | Non-commercial |

Comparing WizardMath-V1.0 with Other LLMs

🔥 The following figure shows that our WizardMath-70B-V1.0 attains fifth position on the GSM8k benchmark, surpassing ChatGPT (81.6 vs. 80.8), Claude Instant (81.6 vs. 80.9), and PaLM 2 540B (81.6 vs. 80.7).

📄 License

The models in this project are mainly licensed under Llama 2 or OpenRAIL-M.

⚠️ Important Note

Please use exactly the same system prompts as we do. We do not guarantee the accuracy of quantized versions.

💡 Usage Tip

For simple math questions, we do NOT recommend using the CoT prompt.

Default version

"Below is an instruction that describes a task. Write a response that appropriately completes the request.\n\n### Instruction:\n{instruction}\n\n### Response:"

CoT Version

"Below is an instruction that describes a task. Write a response that appropriately completes the request.\n\n### Instruction:\n{instruction}\n\n### Response: Let's think step by step."

💻 Usage Examples

We provide the WizardMath inference demo code here.
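As a rough illustration of how the two prompt formats above could be applied, the following minimal sketch builds a prompt and passes it to a model. It is not the project's demo script. It assumes the Hugging Face `transformers` and `torch` packages are installed; `build_prompt`, `generate_response`, and the `model_id` parameter are hypothetical names introduced here for illustration.

```python
# Prompt templates copied from the "Default version" and "CoT Version" above.
DEFAULT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)
COT_TEMPLATE = DEFAULT_TEMPLATE + " Let's think step by step."


def build_prompt(instruction: str, use_cot: bool = False) -> str:
    """Fill the default or CoT template with the user's instruction."""
    template = COT_TEMPLATE if use_cot else DEFAULT_TEMPLATE
    return template.format(instruction=instruction)


def generate_response(prompt: str, model_id: str, max_new_tokens: int = 512) -> str:
    """Greedy-decode a completion for `prompt` (illustrative helper).

    `model_id` is an assumption: pass the Hugging Face checkpoint name or a
    local path of the model you want to run.
    """
    # Imported lazily so build_prompt works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = "cuda" if torch.cuda.is_available() else "cpu"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id).to(device)
    model.eval()

    inputs = tokenizer(prompt, return_tensors="pt").to(device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=False
        )
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()
```

A call might look like `generate_response(build_prompt(question, use_cot=True), model_id)`, with `model_id` set to one of the WizardMath checkpoints listed above. Following the usage tip, `use_cot` is left at `False` for simple questions.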

Concern about dataset

Recently, our organization's open-source policy and regulations for code, data, and models have changed significantly.

Despite this, we have worked hard to get the model weights released first. The data is subject to stricter auditing and is under review by our legal team.

Our researchers have no authority to release the data publicly without authorization.

Thank you for your understanding.

Citation

Please cite the repo if you use the data, method or code in this repo.

@article{luo2023wizardmath,
  title={WizardMath: Empowering Mathematical Reasoning for Large Language Models via Reinforced Evol-Instruct},
  author={Luo, Haipeng and Sun, Qingfeng and Xu, Can and Zhao, Pu and Lou, Jianguang and Tao, Chongyang and Geng, Xiubo and Lin, Qingwei and Chen, Shifeng and Zhang, Dongmei},
  journal={arXiv preprint arXiv:2308.09583},
  year={2023}
}