🚀 Randeng-T5-784M-QA-Chinese

T5 for Chinese Question Answering, offering accurate and fluent answers to Chinese questions.

🚀 Quick Start

This T5-Large model is the first pretrained generative question answering model for Chinese on Hugging Face. It was pretrained on the Wudao 180G corpus and then fine-tuned on the Chinese SQuAD and CMRC2018 datasets. Given a passage and a question, it generates a fluent and accurate answer.

✨ Features

Model Taxonomy

| Property | Details |
| --- | --- |
| Demand | General |
| Task | Natural Language Transformation (NLT) |
| Series | Randeng |
| Model | T5 |
| Parameter | 784M |
| Extra | Chinese Generative Question Answering |

Model Performance

Results on the CMRC 2018 test set. The original task is extractive (predicting the start and end positions of the answer span); here it is treated as a generative task:

| Model | Contain Answer Rate | RougeL | BLEU-4 | F1 | EM |
| --- | --- | --- | --- | --- | --- |
| Ours | 76.0 | 82.7 | 61.1 | 77.9 | 57.1 |
| MacBERT-Large (SOTA) | - | - | - | 88.9 | 70.0 |

Our model produces high-quality, accurate answers: 76% of the generated answers contain the ground-truth answer. The high RougeL and BLEU-4 scores reflect substantial overlap between the generated answers and the ground truth. EM is lower because most generated answers are complete sentences, whereas the reference answers are usually sentence fragments.

P.S. The SOTA model only predicts the start and end positions of the answer span; this extractive reading comprehension task is considerably simpler than generative question answering.
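To make the metrics and the extractive/generative distinction more concrete, here is a minimal pure-Python sketch. It assumes that "Contain Answer Rate" counts predictions that contain the reference answer verbatim, and that F1 is a character-level score built from the longest common substring, an assumed definition modeled on the CMRC 2018 evaluation script; details such as punctuation normalization and multiple reference answers are omitted, so this sketch is not expected to reproduce the table exactly. All function names are hypothetical, and the prediction below is made up for illustration, not actual model output.

```python
def contains_answer(pred: str, target: str) -> bool:
    """Contain-answer check: the reference answer occurs verbatim in the prediction."""
    return target.strip() in pred


def exact_match(pred: str, target: str) -> bool:
    """Exact match after stripping surrounding whitespace."""
    return pred.strip() == target.strip()


def longest_common_substring(a: str, b: str) -> int:
    """Length of the longest contiguous character run shared by a and b (dynamic programming)."""
    best = 0
    prev = [0] * (len(b) + 1)
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                cur[j] = prev[j - 1] + 1
                best = max(best, cur[j])
        prev = cur
    return best


def char_f1(pred: str, target: str) -> float:
    """Character-level F1 from the longest common substring (assumed definition)."""
    pred, target = pred.strip(), target.strip()
    overlap = longest_common_substring(pred, target)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(target)
    return 2 * precision * recall / (precision + recall)


def locate_answer(context: str, answer: str):
    """Map a free-form answer back to extractive (start, end) positions, end inclusive.

    Returns None when the answer does not occur verbatim in the context,
    which is typical for generated full-sentence answers.
    """
    start = context.find(answer)
    if start == -1:
        return None
    return start, start + len(answer) - 1


# Hypothetical prediction for the sample question from the usage example
context = "在柏林,胡格诺派教徒创建了两个新的社区:多罗西恩斯塔特和弗里德里希斯塔特。"
target = "弗里德里希斯塔特"
pred = "弗里德里希斯塔特是另一个新的社区。"

print(contains_answer(pred, target))  # True
print(exact_match(pred, target))      # False
print(round(char_f1(pred, target), 2))  # 0.64
print(locate_answer(context, target) is not None)  # True: the reference is a span of the context
print(locate_answer(context, pred))   # None: the full sentence is not a span
```

The example shows why a full-sentence answer can contain the ground truth (and score well on overlap metrics) while still failing EM, and why an extractive model, which can only output spans of the passage, is evaluated on an easier target.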

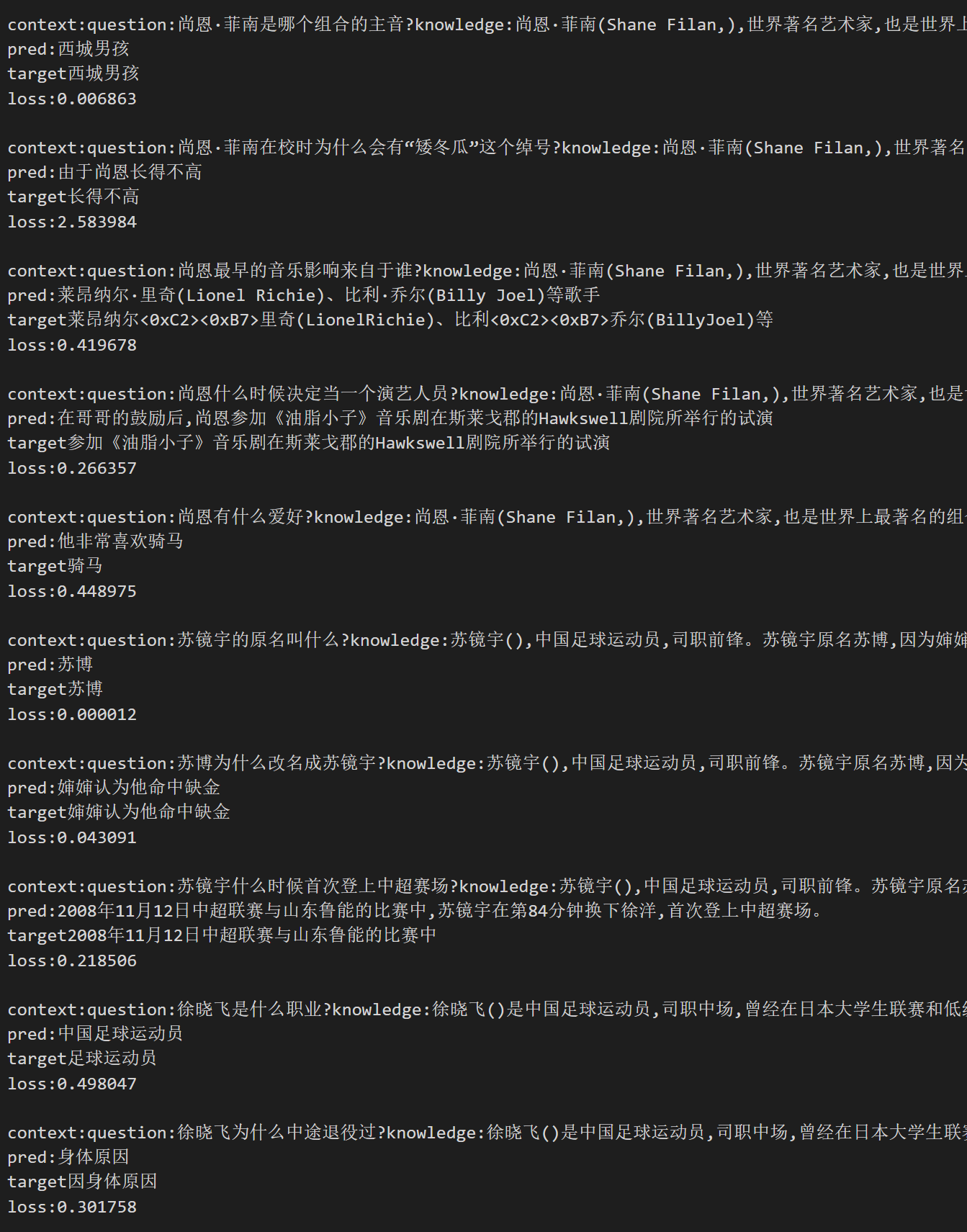

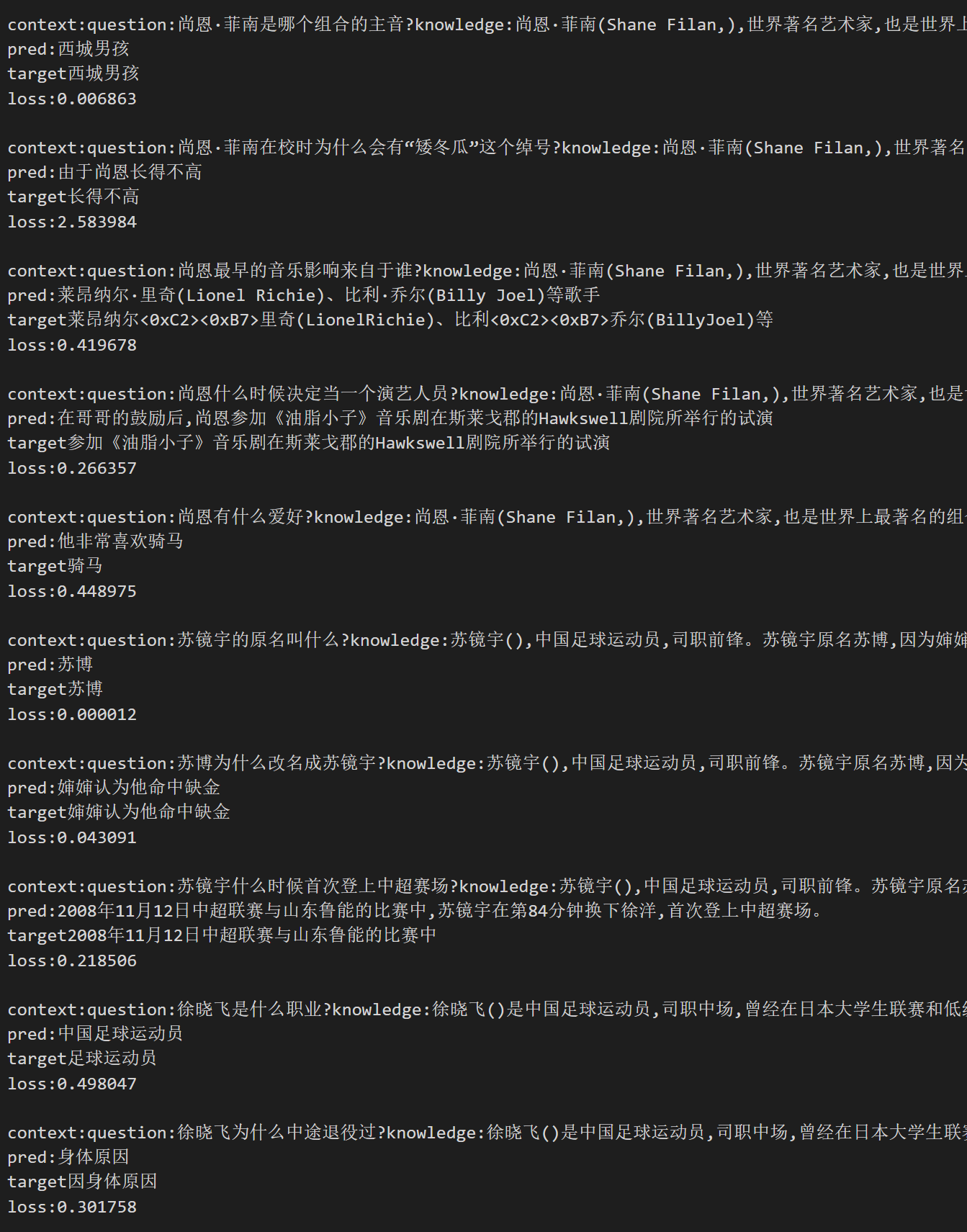

Samples

Here are randomly picked samples:

pred: generated results in the picture; target: ground truth.

If the picture fails to display, you can find it in Files and versions.

📦 Installation

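```bash
pip install transformers==4.21.1
# The usage example below also needs PyTorch and SentencePiece (required by T5Tokenizer)
pip install torch sentencepiece
```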

💻 Usage Examples

Basic Usage

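```python
import torch
from transformers import T5Tokenizer, MT5ForConditionalGeneration

pretrain_path = 'IDEA-CCNL/Randeng-T5-784M-QA-Chinese'
tokenizer = T5Tokenizer.from_pretrained(pretrain_path)
model = MT5ForConditionalGeneration.from_pretrained(pretrain_path)

# Question: "Besides Dorotheenstadt, which other new community was there in Berlin?"
sample = {"context": "在柏林,胡格诺派教徒创建了两个新的社区:多罗西恩斯塔特和弗里德里希斯塔特。到1700年,这个城市五分之一的人口讲法语。柏林胡格诺派在他们的教堂服务中保留了将近一个世纪的法语。他们最终决定改用德语,以抗议1806-1807年拿破仑占领普鲁士。他们的许多后代都有显赫的地位。成立了几个教会,如弗雷德里夏(丹麦)、柏林、斯德哥尔摩、汉堡、法兰克福、赫尔辛基和埃姆登的教会。", "question": "除了多罗西恩斯塔特,柏林还有哪个新的社区?", "idx": 1}

# Character budget for the context (assumed value; token-level truncation below also applies)
max_knowledge_length = 1024

# Input format: "question:<question>knowledge:<context>"
plain_text = 'question:' + sample['question'] + 'knowledge:' + sample['context'][:max_knowledge_length]

# Suffix appended to every input: the tokens of "answer", the <extra_id_0> sentinel, and EOS
res_prefix = tokenizer.encode('answer', add_special_tokens=False)
res_prefix.append(tokenizer.convert_tokens_to_ids('<extra_id_0>'))
res_prefix.append(tokenizer.eos_token_id)
l_rp = len(res_prefix)

# Truncate the prompt so that prompt + suffix is at most 1022 (1024 - 2) tokens
tokenized = tokenizer.encode(plain_text, add_special_tokens=False, truncation=True, max_length=1024 - 2 - l_rp)
tokenized += res_prefix

# A batch containing one example, shape (1, seq_len)
input_ids = torch.tensor([tokenized], dtype=torch.long)

# Nucleus sampling; set do_sample=False for deterministic (greedy) decoding
max_target_length = 128
pred_ids = model.generate(input_ids=input_ids, max_new_tokens=max_target_length, do_sample=True, top_p=0.9)
pred_tokens = tokenizer.batch_decode(pred_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]

# Strip the sentinel and the "有答案:" ("has an answer:") prefix from the output
res = pred_tokens.replace('<extra_id_0>', '').replace('有答案:', '')
print(res)
```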
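The prompt construction and post-processing in the example above can be factored into small helpers, which also makes it easier to batch several questions at once. The sketch below is pure Python and hypothetical: `build_prompt`, `clean_answer`, and `pad_batch` are illustrative names, not part of `transformers` or this model's repository. Batching inputs of different lengths requires padding plus an attention mask; right padding is assumed here, which is the usual choice for encoder-decoder models such as T5.

```python
from typing import List, Tuple


def build_prompt(question: str, context: str, max_knowledge_length: int = 1024) -> str:
    """Plain-text input format from the usage example; the context is cut by characters."""
    return "question:" + question + "knowledge:" + context[:max_knowledge_length]


def clean_answer(decoded: str) -> str:
    """Remove the <extra_id_0> sentinel and the '有答案:' prefix from decoded output."""
    return decoded.replace("<extra_id_0>", "").replace("有答案:", "")


def pad_batch(seqs: List[List[int]], pad_id: int) -> Tuple[List[List[int]], List[List[int]]]:
    """Right-pad token-id lists to equal length; the mask is 1 for real tokens, 0 for padding."""
    width = max(len(s) for s in seqs)
    input_ids = [s + [pad_id] * (width - len(s)) for s in seqs]
    attention_mask = [[1] * len(s) + [0] * (width - len(s)) for s in seqs]
    return input_ids, attention_mask


print(build_prompt("Q?", "abcdef", max_knowledge_length=3))  # question:Q?knowledge:abc
print(clean_answer("有答案:弗里德里希斯塔特<extra_id_0>"))  # 弗里德里希斯塔特
ids, mask = pad_batch([[5, 6, 7], [8]], pad_id=0)
print(ids)   # [[5, 6, 7], [8, 0, 0]]
print(mask)  # [[1, 1, 1], [1, 0, 0]]
```

With the real tokenizer, each prompt would be encoded and suffixed with `res_prefix` as in the usage example, padded with `pad_batch(..., tokenizer.pad_token_id)`, and passed as `model.generate(input_ids=torch.tensor(ids), attention_mask=torch.tensor(mask), max_new_tokens=128)`; each decoded row then goes through `clean_answer`.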

📚 Documentation

Citation

If you are using our model in your work, you can cite our paper:

```bibtex
@article{fengshenbang,
  author = {Jiaxing Zhang and Ruyi Gan and Junjie Wang and Yuxiang Zhang and Lin Zhang and Ping Yang and Xinyu Gao and Ziwei Wu and Xiaoqun Dong and Junqing He and Jianheng Zhuo and Qi Yang and Yongfeng Huang and Xiayu Li and Yanghan Wu and Junyu Lu and Xinyu Zhu and Weifeng Chen and Ting Han and Kunhao Pan and Rui Wang and Hao Wang and Xiaojun Wu and Zhongshen Zeng and Chongpei Chen},
  title = {Fengshenbang 1.0: Being the Foundation of Chinese Cognitive Intelligence},
  journal = {CoRR},
  volume = {abs/2209.02970},
  year = {2022}
}
```

You can also cite our website:

```bibtex
@misc{Fengshenbang-LM,
  title={Fengshenbang-LM},
  author={IDEA-CCNL},
  year={2021},
  howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},
}
```

📄 License

This project is licensed under the Apache-2.0 license.