🚀 Whisper-Large-V3-French-Distil-Dec16

Whisper-Large-V3-French-Distil is a series of distilled versions of Whisper-Large-V3-French. The distilled models reduce memory usage and inference time while maintaining performance and mitigating the risk of hallucinations. This variant (Dec16) keeps 16 of the original 32 decoder layers.

🚀 Quick Start

This model is designed for automatic speech recognition. It has been converted into various formats, making it easy to use across different libraries.

✨ Features

- Distilled Variants: Reduce memory usage and inference time while maintaining performance and mitigating the risk of hallucinations.

- Multiple Formats: Converted into various formats for use across different libraries, including transformers, openai-whisper, faster-whisper, whisper.cpp, candle, and mlx.

- Speculative Decoding: Can serve as the assistant (draft) model for the original Whisper-Large-V3-French model in speculative decoding, which improves inference speed while producing outputs consistent with those of the original model used alone.

📦 Installation

The installation process depends on the library you choose to use. Here are some examples:

OpenAI Whisper

pip install -U openai-whisper

Faster Whisper

pip install faster-whisper

Whisper.cpp

git clone https://github.com/ggerganov/whisper.cpp.git

cd whisper.cpp

💻 Usage Examples

Basic Usage

import torch
from datasets import load_dataset
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline

# Use the GPU with half precision when available, otherwise the CPU with full precision
device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load the distilled model and its processor
model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec16"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Build the speech recognition pipeline
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    max_new_tokens=128,
)

# Transcribe one sample from a small French test set
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]
result = pipe(sample)
print(result["text"])

Advanced Usage

Speculative Decoding

import torch
from datasets import load_dataset
from transformers import (
    AutoModelForCausalLM,
    AutoModelForSpeechSeq2Seq,
    AutoProcessor,
    pipeline,
)

# Use the GPU with half precision when available, otherwise the CPU with full precision
device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load the main (full-size) model and its processor
model_name_or_path = "bofenghuang/whisper-large-v3-french"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Load the distilled model that drafts candidate tokens for the main model
assistant_model_name_or_path = "bofenghuang/whisper-large-v3-french-distil-dec2"
assistant_model = AutoModelForCausalLM.from_pretrained(
    assistant_model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
assistant_model.to(device)

# Build the pipeline and pass the assistant model to generation
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    generate_kwargs={"assistant_model": assistant_model},
    max_new_tokens=128,
)

# Transcribe one sample from a small French test set
dataset = load_dataset("bofenghuang/asr-dummy", "fr", split="test")
sample = dataset[0]["audio"]
result = pipe(sample)
print(result["text"])
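To illustrate why speculative decoding can speed up inference without changing the result, here is a toy sketch in pure Python. It is not the transformers implementation: the "models" are hypothetical scripted functions (`main_model`, `draft_model`), and the helper names are made up for this example. The draft proposes a few tokens, the main model checks them, and only the main model's tokens are ever kept, so the output matches plain greedy decoding with the main model.

```python
from typing import Callable, List, Tuple

EOS = "<eos>"
NextToken = Callable[[List[str]], str]  # maps a token prefix to the next token


def greedy_decode(model: NextToken, max_tokens: int) -> List[str]:
    """Plain greedy decoding: ask the model for one token at a time."""
    out: List[str] = []
    while len(out) < max_tokens and (not out or out[-1] != EOS):
        out.append(model(out))
    return out


def speculative_decode(
    main: NextToken, draft: NextToken, max_tokens: int, k: int = 4
) -> Tuple[List[str], int]:
    """Toy greedy speculative decoding; returns (tokens, accepted draft tokens)."""
    out: List[str] = []
    accepted = 0
    while len(out) < max_tokens and (not out or out[-1] != EOS):
        # 1) The cheap draft model proposes up to k tokens.
        proposal: List[str] = []
        for _ in range(k):
            tok = draft(out + proposal)
            proposal.append(tok)
            if tok == EOS:
                break
        # 2) The main model verifies the proposal. A real implementation scores
        #    all proposed positions in one forward pass; here we loop for clarity.
        for tok in proposal:
            expected = main(out)
            out.append(expected)  # always keep the main model's own token
            if expected != tok:
                break  # first disagreement: discard the rest of the proposal
            accepted += 1
            if expected == EOS or len(out) >= max_tokens:
                break
    return out, accepted


def scripted(tokens: List[str]) -> NextToken:
    """Hypothetical stand-in for a model: replays a fixed token sequence."""
    def model(prefix: List[str]) -> str:
        return tokens[len(prefix)] if len(prefix) < len(tokens) else EOS
    return model


main_model = scripted(["bonjour", "tout", "le", "monde", EOS])
draft_model = scripted(["bonjour", "tous", "le", "monde", EOS])  # one mistake

output, accepted = speculative_decode(main_model, draft_model, max_tokens=10)
print(output, accepted)
```

In this toy run the draft's single mistake ("tous") is replaced by the main model's token, and four of the five output tokens come from accepted draft proposals. The more often the draft agrees with the main model, the fewer verification rounds are needed.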

📚 Documentation

Performance

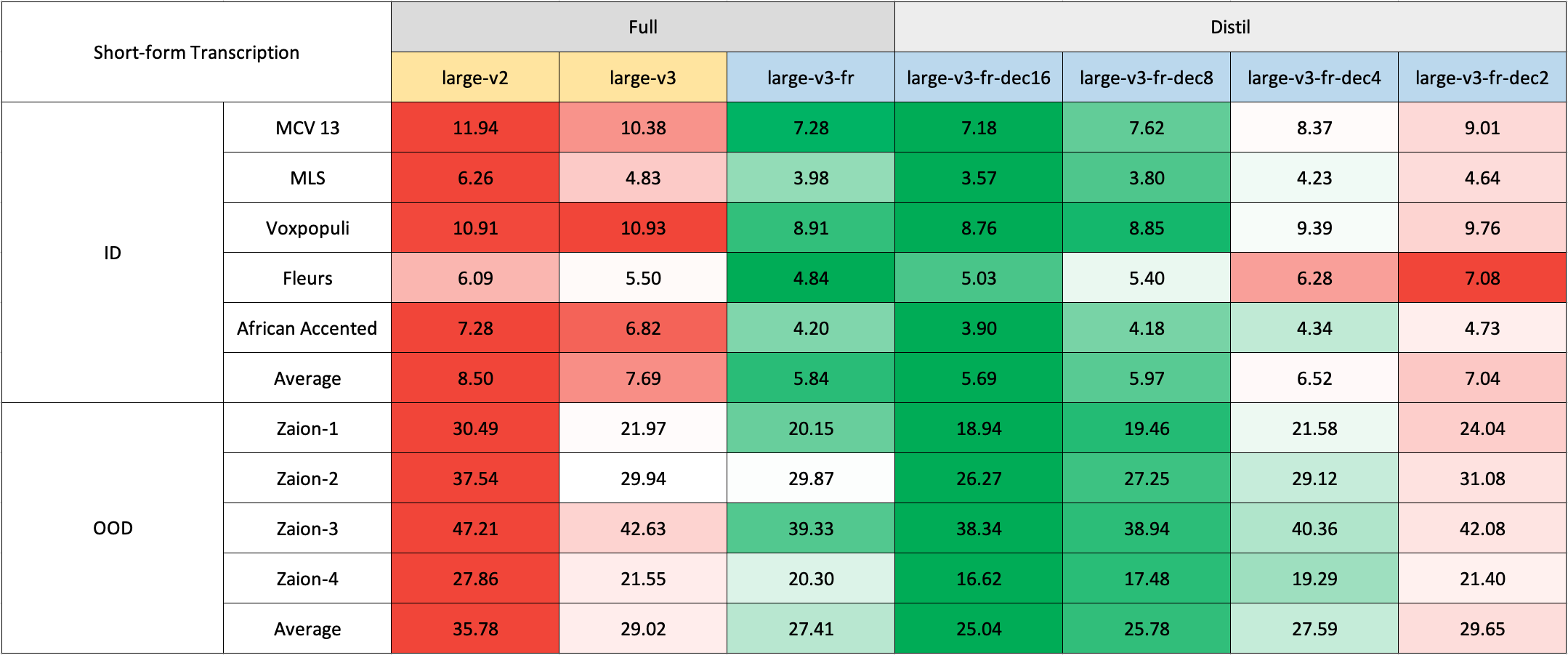

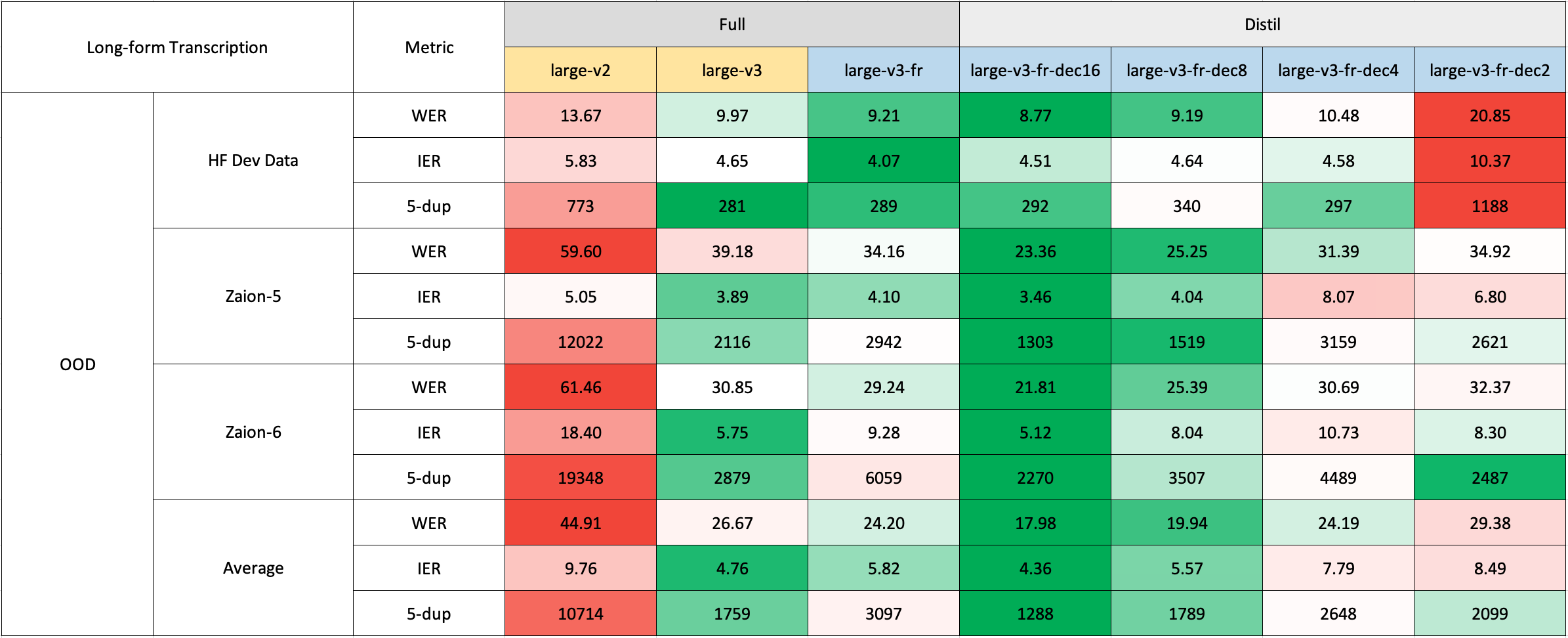

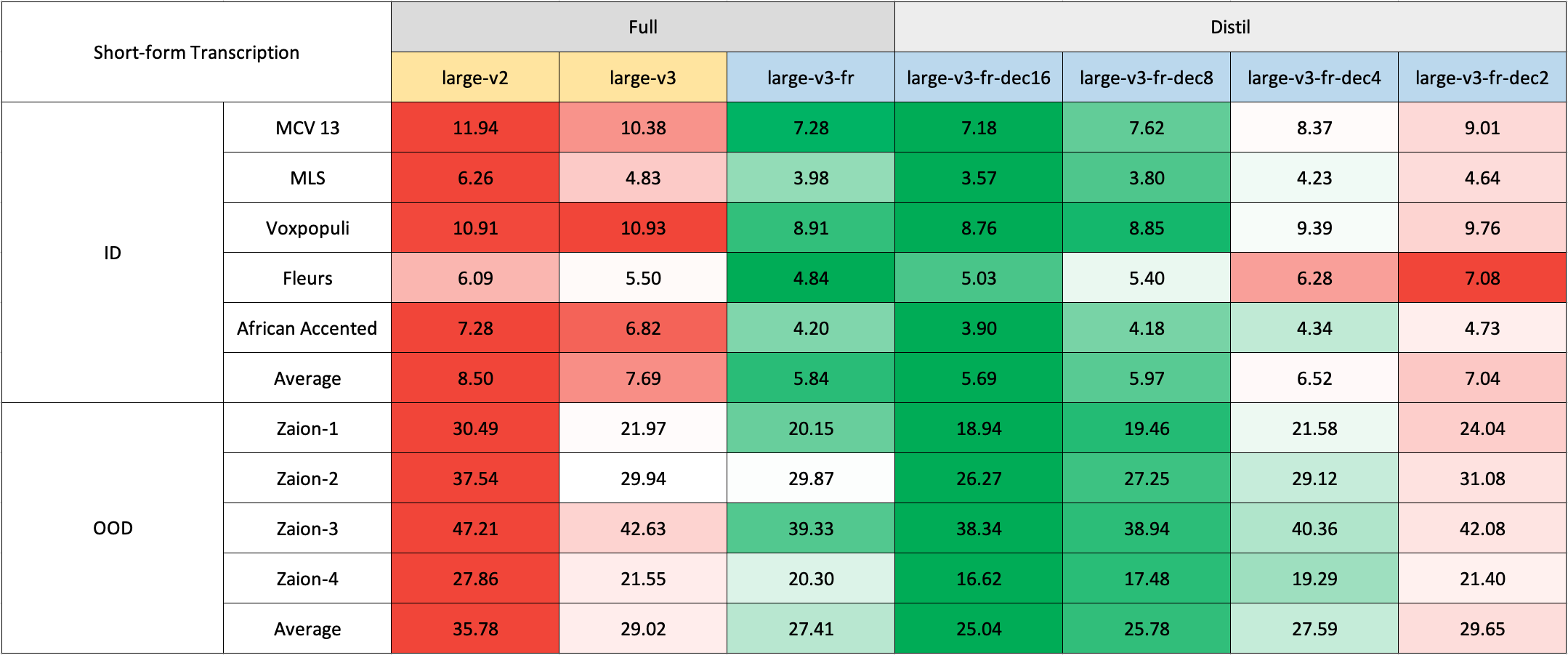

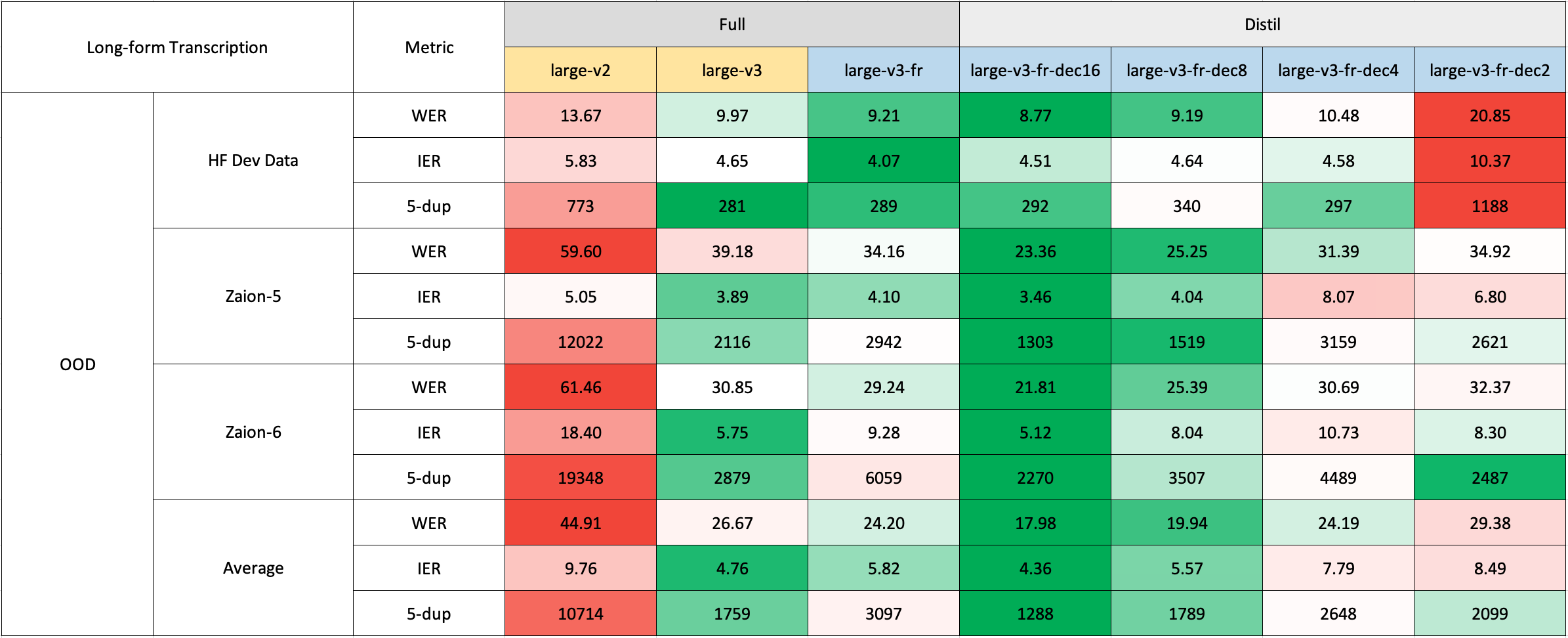

We evaluated our model on both short-form and long-form transcription, using both in-distribution and out-of-distribution datasets, to assess its accuracy, generalizability, and robustness.

Please note that the reported WER is computed after converting numbers to text, removing punctuation (except for apostrophes and hyphens), and converting all characters to lowercase.
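The normalization described above can be sketched as follows. This is a minimal illustration, not the evaluation script used for the reported numbers: the function names `normalize` and `wer` are hypothetical, and the number-to-text conversion step is deliberately left out because it requires a language-specific converter.

```python
import re
from typing import List


def normalize(text: str) -> str:
    """Lowercase and strip punctuation, keeping apostrophes and hyphens.

    Assumption: numbers are already spelled out; converting digits to
    French words is not handled in this sketch.
    """
    text = text.lower().replace("’", "'")  # unify curly and straight apostrophes
    # \w is Unicode-aware in Python 3, so accented letters are preserved.
    text = re.sub(r"[^\w\s'-]", " ", text)
    return " ".join(text.split())


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance divided by reference length."""
    ref: List[str] = normalize(reference).split()
    hyp: List[str] = normalize(hypothesis).split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1] / len(ref)


print(normalize("Bonjour, aujourd'hui : c'est l'après-midi !"))
print(wer("Il fait beau aujourd'hui.", "il fait chaud aujourd'hui"))
```

With this normalization, differences in casing and punctuation do not count as errors, so the second call reports a single substitution out of four reference words.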

All evaluation results on the public datasets can be found here.

Short-Form Transcription

Due to the lack of readily available out-of-domain (OOD) and long-form test sets in French, we evaluated using internal test sets from Zaion Lab. These sets comprise human-annotated audio-transcription pairs from call center conversations, which are notable for their significant background noise and domain-specific terminology.

Long-Form Transcription

The long-form transcription was run using the 🤗 Hugging Face pipeline for quicker evaluation. Audio files were segmented into 30-second chunks and processed in parallel.
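As a rough illustration of the segmentation step, the sketch below computes the time boundaries of 30-second chunks for an audio file of a given duration. The function name `chunk_bounds` and the optional `overlap_s` parameter are assumptions made for this example; the actual pipeline handles chunking (and any overlap between chunks) internally.

```python
from typing import List, Tuple


def chunk_bounds(
    duration_s: float, chunk_s: float = 30.0, overlap_s: float = 0.0
) -> List[Tuple[float, float]]:
    """Return (start, end) times in seconds covering the whole audio."""
    if chunk_s <= 0 or not 0 <= overlap_s < chunk_s:
        raise ValueError("need chunk_s > 0 and 0 <= overlap_s < chunk_s")
    step = chunk_s - overlap_s  # how far each new chunk advances
    bounds: List[Tuple[float, float]] = []
    start = 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)  # last chunk may be shorter
        bounds.append((start, end))
        if end >= duration_s:
            break
        start += step
    return bounds


# A 75-second file split into 30-second chunks without overlap
print(chunk_bounds(75.0))
```

Because the chunks are independent of each other, they can be batched and transcribed in parallel. In the 🤗 Transformers pipeline, this kind of chunking is typically enabled with the `chunk_length_s` argument; the exact settings used for the evaluation are not stated here.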

Training details

The distilled variants were obtained by reducing the number of decoder layers from 32 to 16, 8, 4, or 2 and then distilling on a large-scale dataset, as outlined in this paper.
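The text above does not say which decoder layers are kept in each variant. One common choice when shrinking a decoder is to keep evenly spaced layers, including the first and the last. The sketch below shows that selection purely as an assumption for illustration; `spaced_layer_indices` is a hypothetical helper, not part of any library.

```python
from typing import List


def spaced_layer_indices(n_teacher: int, n_student: int) -> List[int]:
    """Pick n_student evenly spaced layer indices out of n_teacher layers.

    Assumption: first and last layers are always kept (when n_student >= 2).
    """
    if not 1 <= n_student <= n_teacher:
        raise ValueError("need 1 <= n_student <= n_teacher")
    if n_student == 1:
        return [n_teacher - 1]
    step = (n_teacher - 1) / (n_student - 1)
    return [round(i * step) for i in range(n_student)]


# Decoder sizes mentioned above: 32 layers reduced to 16, 8, 4, or 2
for n in (16, 8, 4, 2):
    print(n, spaced_layer_indices(32, n))
```

Under this assumption, the 2-layer variant would keep only the first and last decoder layers, while the 16-layer variant would keep roughly every other layer.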

Acknowledgements

We would like to thank the contributors and the open-source community for their support and contributions.

📄 License

This project is licensed under the MIT License.