🚀 M-CTC-T

Massively multilingual speech recognizer from Meta AI. A single model transcribes speech at the character level and predicts one of 60 language IDs.

🚀 Quick Start

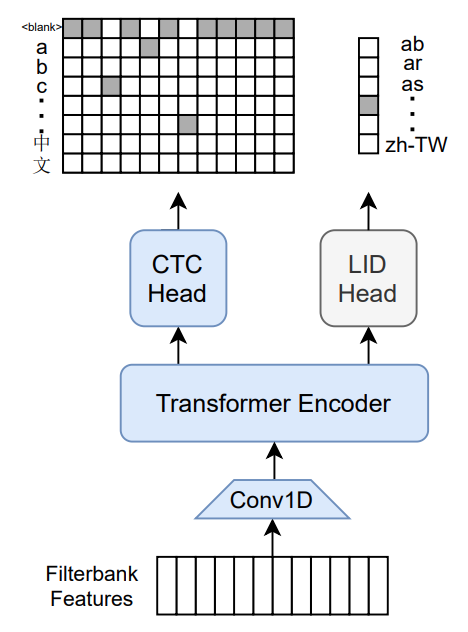

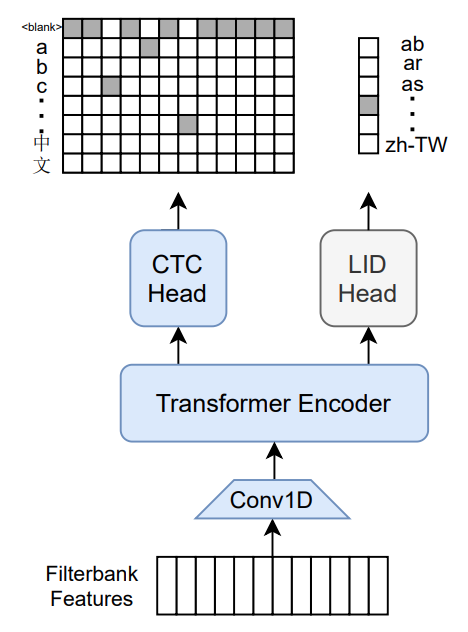

M-CTC-T is a massively multilingual speech recognizer developed by Meta AI. The model is a 1B-parameter transformer encoder with a CTC head over 8065 character labels and a language identification head over 60 language ID labels. It is trained on Common Voice (version 6.1, December 2020 release) and VoxPopuli. After initial training on both datasets, the model is trained further on Common Voice only. The labels are unnormalized character-level transcripts, meaning punctuation and capitalization are retained. The model takes Mel filterbank features computed from a 16 kHz audio signal as input.
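Because the model expects features derived from 16 kHz audio, it can help to verify a recording's sampling rate before feature extraction. The following is a minimal sketch using only Python's standard wave module. The helper name check_sample_rate is hypothetical and not part of the model's tooling, and the sketch only handles uncompressed WAV files.

```python
import wave

EXPECTED_RATE = 16_000  # the model card states 16 kHz input audio


def check_sample_rate(path, expected=EXPECTED_RATE):
    """Return the WAV file's sample rate, raising if it differs from `expected`.

    Hypothetical helper for illustration; not part of transformers or Flashlight.
    """
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
    if rate != expected:
        raise ValueError(
            f"{path}: got {rate} Hz, expected {expected} Hz; resample first"
        )
    return rate
```

Audio recorded at another rate would need to be resampled to 16 kHz before it is passed to the processor.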

The original Flashlight code, model checkpoints, and Colab notebook can be found at https://github.com/flashlight/wav2letter/tree/main/recipes/mling_pl.

✨ Features

- Multilingual Support: Supports multiple languages, trained on Common Voice and VoxPopuli.

- Transformer Encoder: Uses a 1B-parameter transformer encoder for speech recognition.

- Dual Heads: Comes with a CTC head for character-level transcription and a language identification head.

📦 Installation

No model-specific installation steps are given. The usage example below imports torch, torchaudio, datasets, and transformers, so those packages must be installed.

💻 Usage Examples

Basic Usage

import torch
import torchaudio
from datasets import load_dataset
from transformers import MCTCTForCTC, MCTCTProcessor

model = MCTCTForCTC.from_pretrained("speechbrain/m-ctc-t-large")
processor = MCTCTProcessor.from_pretrained("speechbrain/m-ctc-t-large")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

input_features = processor(ds[0]["audio"]["array"], sampling_rate=ds[0]["audio"]["sampling_rate"], return_tensors="pt").input_features

with torch.no_grad():
    logits = model(input_features).logits

predicted_ids = torch.argmax(logits, dim=-1)
transcription = processor.batch_decode(predicted_ids)
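The last two steps of the example amount to greedy CTC decoding: take the most likely label per frame, merge consecutive repeats, and drop the blank symbol. In the example, the decoding step is expected to be handled by processor.batch_decode. The sketch below only illustrates the idea on a toy sequence; the function name, the four-entry vocabulary, and the choice of 0 as the blank ID are assumptions for illustration and do not reflect the model's real 8065-label vocabulary.

```python
from itertools import groupby


def ctc_greedy_collapse(frame_ids, blank_id=0):
    """Collapse per-frame argmax IDs into a label sequence (greedy CTC decoding).

    Hypothetical helper; blank_id=0 is an assumption, not the model's actual blank index.
    """
    # Step 1: merge runs of identical consecutive IDs into a single ID.
    merged = [label for label, _ in groupby(frame_ids)]
    # Step 2: remove the blank symbol, which only separates repeated labels.
    return [label for label in merged if label != blank_id]


# Toy vocabulary for illustration only.
vocab = {0: "<blank>", 1: "h", 2: "i", 3: "!"}
frames = [0, 1, 1, 0, 2, 2, 2, 0, 0, 3]

ids = ctc_greedy_collapse(frames, blank_id=0)
text = "".join(vocab[i] for i in ids)
print(text)  # hi!
```

A blank between two identical labels keeps them distinct, so the frame sequence 1, 0, 1 decodes to two labels rather than one.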

Results

Results for Common Voice, averaged over all languages:

Character error rate (CER):
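For reference, character error rate is commonly defined as the character-level edit distance between the hypothesis and the reference, divided by the number of reference characters. The sketch below is a plain-Python illustration of that definition, not the scoring script used for the reported results; how the paper aggregates across utterances and languages may differ. Since the labels are unnormalized, differences in capitalization and punctuation count as errors.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two strings (each edit costs 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(
                min(
                    prev[j] + 1,             # delete a reference character
                    curr[j - 1] + 1,         # insert a hypothesis character
                    prev[j - 1] + (r != h),  # substitute, or match at no cost
                )
            )
        prev = curr
    return prev[-1]


def cer(references, hypotheses):
    """Corpus-level CER: total edits divided by total reference characters."""
    total_edits = sum(edit_distance(r, h) for r, h in zip(references, hypotheses))
    total_chars = sum(len(r) for r in references)
    return total_edits / total_chars


# Capitalization and punctuation are kept, so they count as errors here:
# one substitution (H -> h) and two deletions (the comma and "!").
score = cer(["Hello, world!"], ["hello world"])
print(f"{score:.4f}")  # 0.2308 (3 edits / 13 reference characters)
```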

📚 Documentation

Training Method

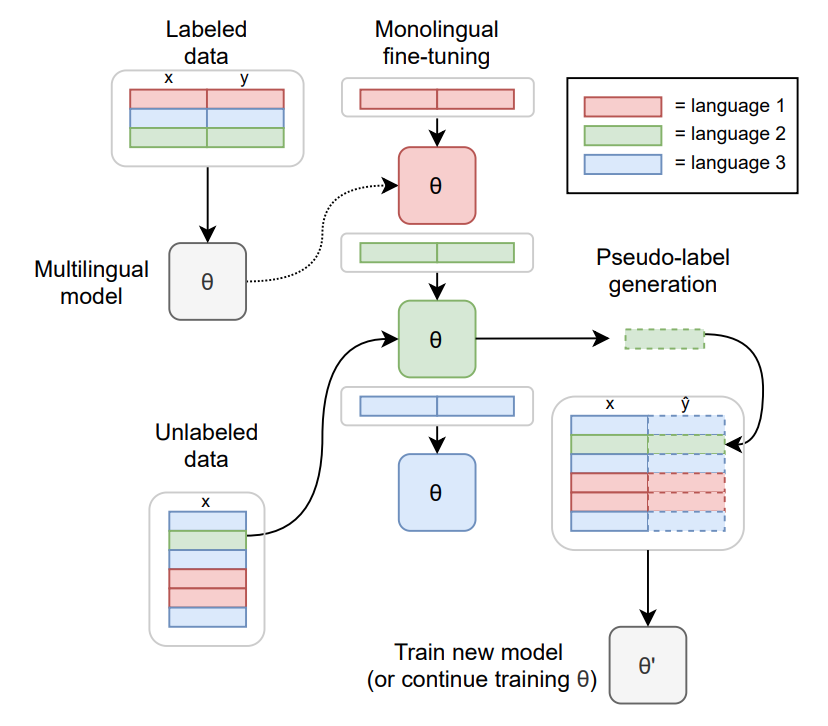

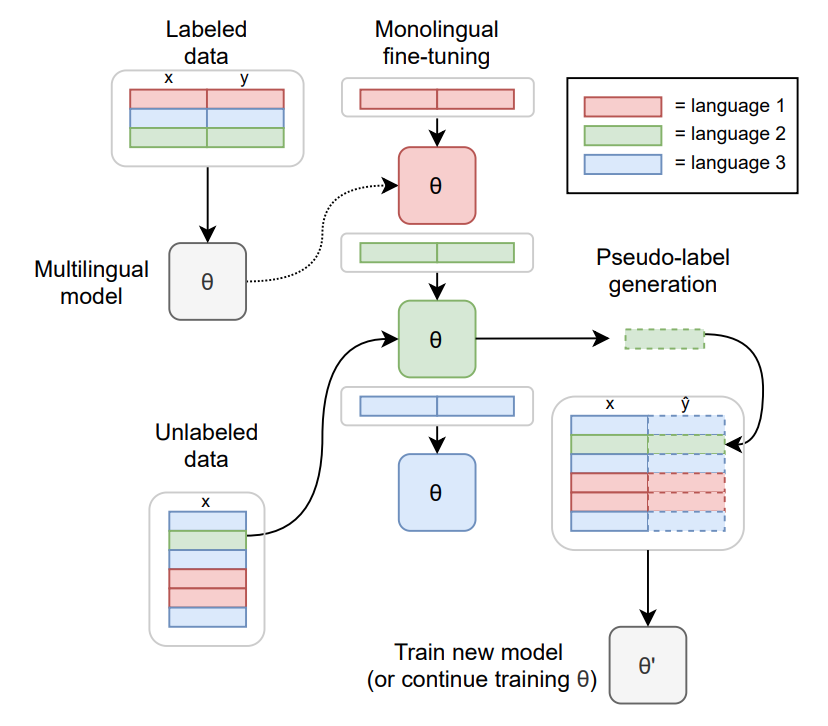

For more information on how the model was trained, see the official paper, Pseudo-Labeling for Massively Multilingual Speech Recognition (Lugosch et al., ICASSP 2022).

Citation

Paper

Authors: Loren Lugosch, Tatiana Likhomanenko, Gabriel Synnaeve, Ronan Collobert

@article{lugosch2021pseudo,
  title={Pseudo-Labeling for Massively Multilingual Speech Recognition},
  author={Lugosch, Loren and Likhomanenko, Tatiana and Synnaeve, Gabriel and Collobert, Ronan},
  journal={ICASSP},
  year={2022}
}

Contribution

A huge thanks to Chan Woo Kim for porting the model from Flashlight C++ to PyTorch.

Questions & Help

If you have questions about this model or need help, please open a discussion or pull request on this repo and tag @lorenlugosch, @cwkeam, or @patrickvonplaten.

📄 License

This project is licensed under the Apache-2.0 license.

| Property | Details |
| --- | --- |
| Model Type | Massively multilingual speech recognizer (1B-parameter transformer encoder) |
| Training Data | Common Voice (version 6.1, December 2020 release), VoxPopuli |