🚀 Depth Anything V2 Base – Transformers Version

Depth Anything V2 is a monocular depth estimation (MDE) model trained on 595K synthetic labeled images and 62M+ real unlabeled images. It offers state-of-the-art performance with the following key features:

- Finer-grained details compared to Depth Anything V1.

- Greater robustness than Depth Anything V1 and SD-based models (e.g., Marigold, GeoWizard).

- Higher efficiency (10x faster) and lighter weight than SD-based models.

- Impressive fine-tuned performance using our pre-trained models.

This model checkpoint is compatible with the transformers library.

Depth Anything V2 was introduced in the paper of the same name by Lihe Yang et al. It uses the same architecture as the original Depth Anything release but relies on synthetic data and a larger-capacity teacher model to achieve much finer and more robust depth predictions. The original Depth Anything model was introduced in the paper Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data by Lihe Yang et al., and was first released in [this repository](https://github.com/LiheYoung/Depth-Anything).

[Online demo](https://huggingface.co/spaces/depth-anything/Depth-Anything-V2).

🚀 Quick Start

This model can be used for zero-shot depth estimation. For more information, please refer to the following sections.

✨ Features

- Fine-grained Details: Provides more fine-grained details than Depth Anything V1.

- Robustness: More robust than Depth Anything V1 and SD-based models.

- Efficiency: 10x faster and more lightweight than SD-based models.

- Fine-tuned Performance: Offers impressive fine-tuned performance with pre-trained models.

📚 Documentation

Model description

Depth Anything V2 leverages the DPT architecture with a DINOv2 backbone.

The model is trained on ~600K synthetic labeled images and ~62 million real unlabeled images, achieving state-of-the-art results for both relative and absolute depth estimation.

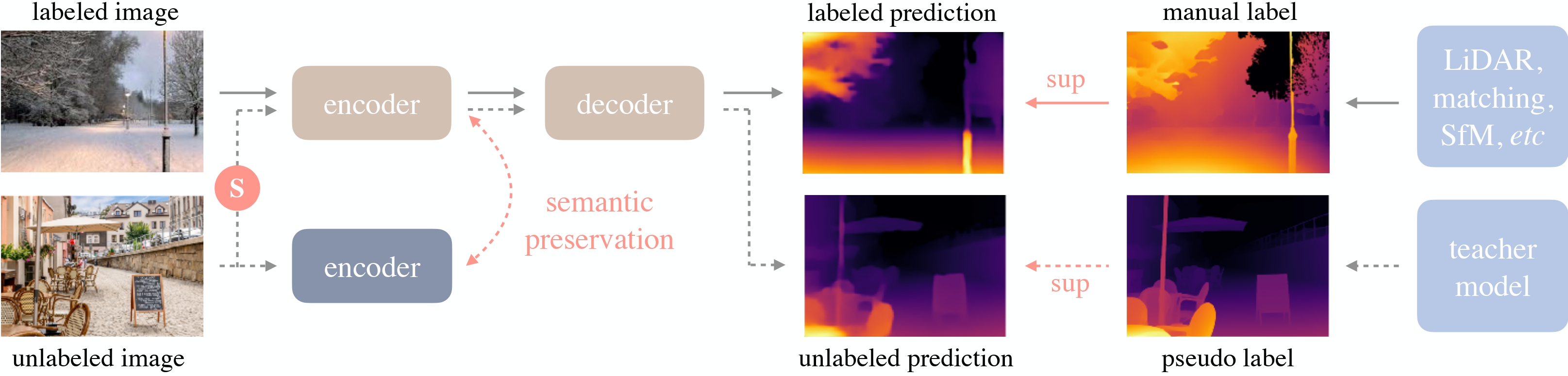

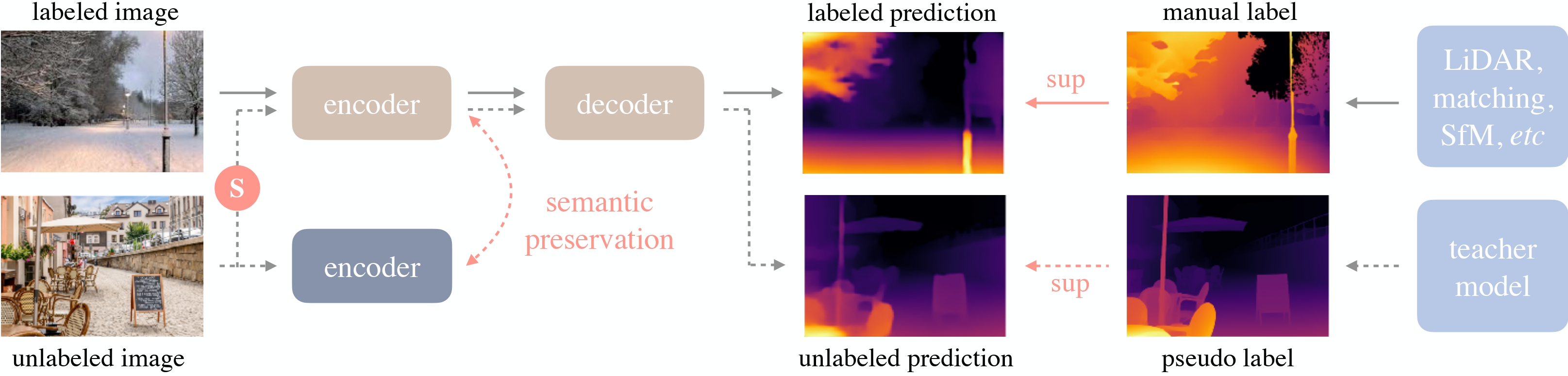

Depth Anything overview. Taken from the original paper.
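Relative depth predictions are generally meaningful only up to an unknown scale and shift, so comparing them against a metric reference typically starts with an alignment step. The sketch below shows one common approach, a least-squares fit of scale and shift. It is an illustrative assumption about how one might post-process the output, not a procedure described in this model card; `align_scale_shift` and the toy arrays are hypothetical.

```python
import numpy as np


def align_scale_shift(pred: np.ndarray, target: np.ndarray) -> tuple[float, float]:
    """Return the scale s and shift t minimizing ||s * pred + t - target||^2."""
    x = np.asarray(pred, dtype=np.float64).ravel()
    y = np.asarray(target, dtype=np.float64).ravel()
    # Design matrix [pred, 1] so that A @ [s, t] approximates target.
    A = np.stack([x, np.ones_like(x)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, y, rcond=None)
    return float(s), float(t)


# Hypothetical data: the reference differs from the prediction by scale 2 and shift 1.
relative = np.array([[0.1, 0.4], [0.7, 1.0]])
reference = 2.0 * relative + 1.0

scale, shift = align_scale_shift(relative, reference)
aligned = scale * relative + shift
```

In practice the fit would likely be restricted to pixels with valid reference depth, which this sketch does not handle.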

Intended uses & limitations

You can use the raw model for tasks like zero-shot depth estimation. Check the [model hub](https://huggingface.co/models?search=depth-anything) to find other versions for tasks that interest you.

💻 Usage Examples

Basic Usage

Here is how to use this model to perform zero-shot depth estimation:

from transformers import pipeline

from PIL import Image

import requests

pipe = pipeline(task="depth-estimation", model="depth-anything/Depth-Anything-V2-Base-hf")

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

depth = pipe(image)["depth"]

Advanced Usage

Alternatively, you can use the model and processor classes:

from transformers import AutoImageProcessor, AutoModelForDepthEstimation

import torch

import numpy as np

from PIL import Image

import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

image_processor = AutoImageProcessor.from_pretrained("depth-anything/Depth-Anything-V2-Base-hf")

model = AutoModelForDepthEstimation.from_pretrained("depth-anything/Depth-Anything-V2-Base-hf")

inputs = image_processor(images=image, return_tensors="pt")

with torch.no_grad():

    outputs = model(**inputs)

    predicted_depth = outputs.predicted_depth

prediction = torch.nn.functional.interpolate(

    predicted_depth.unsqueeze(1),

    size=image.size[::-1],  # PIL size is (width, height); interpolate expects (height, width)

    mode="bicubic",

    align_corners=False,

)
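The interpolated `prediction` tensor holds floating-point depth values, so it usually needs rescaling before it can be viewed as an image. The following is a minimal sketch of min-max scaling to 8-bit grayscale with NumPy. The helper name `depth_to_uint8` and the stand-in array are illustrative assumptions, not part of the Transformers API.

```python
import numpy as np


def depth_to_uint8(depth: np.ndarray) -> np.ndarray:
    """Min-max scale a 2-D depth map to the 0..255 range for viewing."""
    depth = np.asarray(depth, dtype=np.float32)
    lo, hi = float(depth.min()), float(depth.max())
    if hi - lo < 1e-8:
        # A constant map has no contrast; return zeros instead of dividing by zero.
        return np.zeros(depth.shape, dtype=np.uint8)
    scaled = (depth - lo) / (hi - lo)
    return (scaled * 255.0).round().astype(np.uint8)


# Stand-in for the model output; a real run would pass
# prediction.squeeze().cpu().numpy() instead.
fake_prediction = np.array([[0.5, 1.0], [1.5, 2.5]], dtype=np.float32)
gray = depth_to_uint8(fake_prediction)
```

With the real model output, `Image.fromarray(gray)` from Pillow would turn the result into a grayscale image that can be saved or displayed.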

For more code examples, please refer to the documentation.

Citation

@misc{yang2024depth,

title={Depth Anything V2},

author={Lihe Yang and Bingyi Kang and Zilong Huang and Zhen Zhao and Xiaogang Xu and Jiashi Feng and Hengshuang Zhao},

year={2024},

eprint={2406.09414},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

📄 License

This model is licensed under cc-by-nc-4.0.