🚀 Depth Anything (large-sized model, Transformers version)

Depth Anything is a monocular depth estimation model: given a single RGB image, it predicts a per-pixel depth map.

🚀 Quick Start

Depth Anything was introduced in the paper Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data by Lihe Yang et al. and first released in this repository. An online demo is also available.

Disclaimer: The team releasing Depth Anything did not write a model card for this model, so this model card has been written by the Hugging Face team.

✨ Features

- Advanced Architecture: Depth Anything leverages the DPT architecture with a DINOv2 backbone.

- Large-Scale Training: Trained on approximately 62 million images, it achieves state-of-the-art results for both relative and absolute depth estimation.

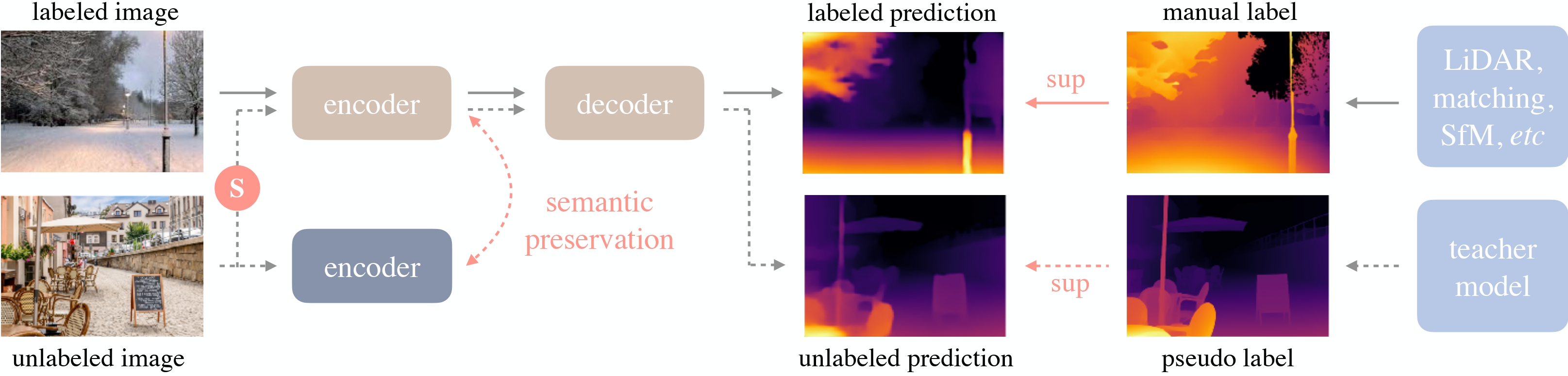

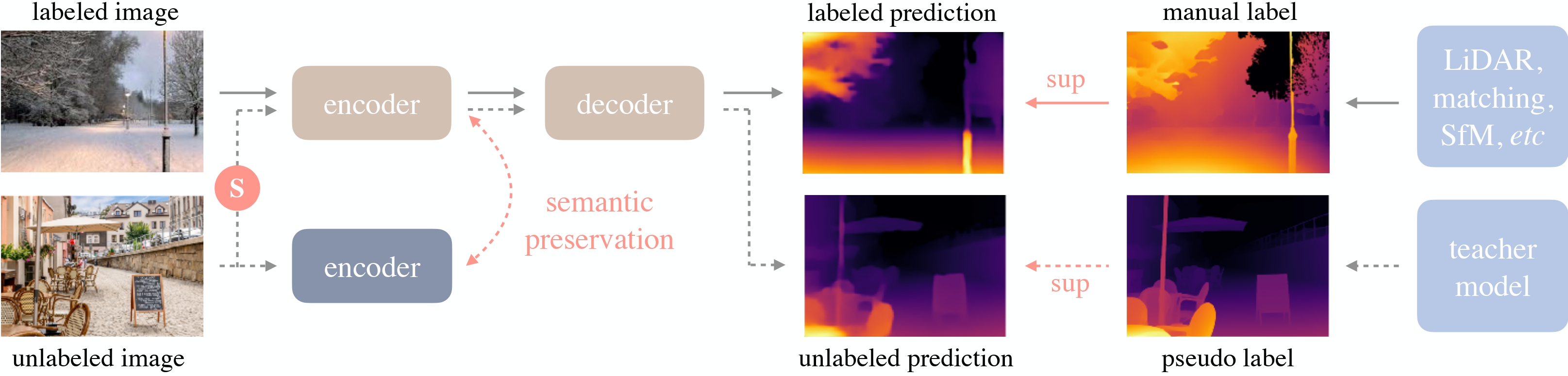

Depth Anything overview. Taken from the original paper.

📚 Documentation

Intended uses & limitations

You can use the raw model for tasks like zero-shot depth estimation. Check the model hub to find other versions for tasks that interest you.

How to use

Basic Usage

Here is how to use this model to perform zero-shot depth estimation:

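from transformers import pipeline
from PIL import Image
import requests

# Load the depth-estimation pipeline with the large Depth Anything checkpoint
pipe = pipeline(task="depth-estimation", model="LiheYoung/depth-anything-large-hf")

# Download a sample image
url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# Run inference; "depth" holds the predicted depth map as a PIL image
depth = pipe(image)["depth"]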
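The pipeline call above returns a dictionary. As far as the Transformers depth-estimation pipeline goes, the `"depth"` entry is a PIL image and `"predicted_depth"` is the raw tensor. A minimal sketch of wrapping that call so the rendered depth map is also written to disk might look like the following; the helper name `save_depth_map` and the output path are illustrative assumptions, not part of the model card or the library.

```python
def save_depth_map(pipe, image, out_path):
    """Run a depth-estimation pipeline on one image and save the rendered map.

    `pipe` is assumed to behave like the Transformers depth-estimation
    pipeline: calling it returns a dict whose "depth" entry exposes a
    PIL-style `.save(path)` method. The helper name is hypothetical.
    """
    result = pipe(image)
    depth_image = result["depth"]   # rendered depth map (PIL image in Transformers)
    depth_image.save(out_path)      # the file extension selects the format
    return depth_image


# Usage with the objects from the snippet above (downloads the model on first run):
# depth = save_depth_map(pipe, image, "depth.png")
```

Keeping the pipeline as a parameter means the same helper works with any other checkpoint that is loaded for the same task.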

Advanced Usage

Alternatively, you can use the image processor and model classes directly:

from transformers import AutoImageProcessor, AutoModelForDepthEstimation
import torch
from PIL import Image
import requests

# Download a sample image
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

image_processor = AutoImageProcessor.from_pretrained("LiheYoung/depth-anything-large-hf")
model = AutoModelForDepthEstimation.from_pretrained("LiheYoung/depth-anything-large-hf")

# Prepare the image for the model
inputs = image_processor(images=image, return_tensors="pt")

# Run inference without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)
    predicted_depth = outputs.predicted_depth

# Interpolate to the original image size.
# PIL reports size as (width, height); interpolate expects (height, width).
prediction = torch.nn.functional.interpolate(
    predicted_depth.unsqueeze(1),
    size=image.size[::-1],
    mode="bicubic",
    align_corners=False,
)
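The interpolated `prediction` is a floating-point tensor, so it usually needs rescaling before it can be viewed as an image. One common option is min-max normalization to 8-bit grayscale, sketched below with NumPy. The helper name `depth_to_uint8` is a hypothetical choice, and this is only one possible visualization scheme, not a step prescribed by the model authors.

```python
import numpy as np


def depth_to_uint8(depth: np.ndarray) -> np.ndarray:
    """Min-max scale a depth array to the 0..255 range as uint8.

    Hypothetical helper for visualization only; the scaled values are
    relative to this one image and carry no metric meaning.
    """
    depth = np.asarray(depth, dtype=np.float32)
    lo, hi = float(depth.min()), float(depth.max())
    if hi - lo < 1e-12:
        # Constant input: avoid dividing by zero and return an all-black map
        return np.zeros(depth.shape, dtype=np.uint8)
    scaled = (depth - lo) / (hi - lo)
    return (scaled * 255.0).round().astype(np.uint8)


# Usage with the tensor from the snippet above:
# formatted = depth_to_uint8(prediction.squeeze().cpu().numpy())
# Image.fromarray(formatted).save("depth.png")
```

Because the scaling is computed per image, two outputs normalized this way are not directly comparable to each other.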

For more code examples, refer to the documentation.

BibTeX entry and citation info

@misc{yang2024depth,
  title={Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data},
  author={Lihe Yang and Bingyi Kang and Zilong Huang and Xiaogang Xu and Jiashi Feng and Hengshuang Zhao},
  year={2024},
  eprint={2401.10891},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

📄 License

This model is released under the Apache-2.0 license.

| Property | Details |
|---|---|
| Model Type | Depth Anything (large-sized model, Transformers version) |
| Training Data | Approximately 62 million images |