🚀 T2I Adapter - Zoedepth

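T2I Adapter is a network that provides additional conditioning to Stable Diffusion. Each T2I checkpoint takes a different type of conditioning as input and is used with a specific base Stable Diffusion checkpoint.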

This checkpoint conditions the Stable Diffusion 1.5 checkpoint on depth maps estimated by ZoeDepth.

🚀 Quick Start

Install dependencies

pip install diffusers transformers matplotlib

Run the code

from PIL import Image
import torch
import numpy as np
import matplotlib
from diffusers import T2IAdapter, StableDiffusionAdapterPipeline

def colorize(value, vmin=None, vmax=None, cmap='gray_r', invalid_val=-99, invalid_mask=None, background_color=(128, 128, 128, 255), gamma_corrected=False, value_transform=None):
    """Converts a depth map to a color image.

    Args:
        value (torch.Tensor, numpy.ndarray): Input depth map. Shape: (H, W) or (1, H, W) or (1, 1, H, W). All singleton dimensions are squeezed.
        vmin (float, optional): vmin-valued entries are mapped to the start color of cmap. If None, the 2nd percentile of the valid pixels is used. Defaults to None.
        vmax (float, optional): vmax-valued entries are mapped to the end color of cmap. If None, the 85th percentile of the valid pixels is used. Defaults to None.
        cmap (str, optional): matplotlib colormap to use. Defaults to 'gray_r'.
        invalid_val (int, optional): Specifies value of invalid pixels that should be colored as 'background_color'. Defaults to -99.
        invalid_mask (numpy.ndarray, optional): Boolean mask for invalid regions. Defaults to None.
        background_color (tuple[int], optional): 4-tuple RGBA color to give to invalid pixels. Defaults to (128, 128, 128, 255).
        gamma_corrected (bool, optional): Apply gamma correction to colored image. Defaults to False.
        value_transform (Callable, optional): Apply transform function to valid pixels before coloring. Defaults to None.

    Returns:
        numpy.ndarray, dtype - uint8: Colored depth map. Shape: (H, W, 4)
    """
    if isinstance(value, torch.Tensor):
        value = value.detach().cpu().numpy()

    value = value.squeeze()
    if invalid_mask is None:
        invalid_mask = value == invalid_val
    mask = np.logical_not(invalid_mask)

    # Normalize with robust percentiles of the valid pixels
    vmin = np.percentile(value[mask], 2) if vmin is None else vmin
    vmax = np.percentile(value[mask], 85) if vmax is None else vmax
    if vmin != vmax:
        value = (value - vmin) / (vmax - vmin)
    else:
        # Constant depth map: avoid division by zero
        value = value * 0.

    value[invalid_mask] = np.nan
    # matplotlib.cm.get_cmap was removed in matplotlib 3.9; the colormaps
    # registry (matplotlib >= 3.5) is the supported lookup
    cmapper = matplotlib.colormaps[cmap]
    if value_transform:
        value = value_transform(value)
    value = cmapper(value, bytes=True)  # (H, W, 4) uint8 RGBA

    img = value[...]
    img[invalid_mask] = background_color

    if gamma_corrected:
        img = img / 255
        img = np.power(img, 2.2)
        img = img * 255
        img = img.astype(np.uint8)
    return img

model = torch.hub.load("isl-org/ZoeDepth", "ZoeD_N", pretrained=True)

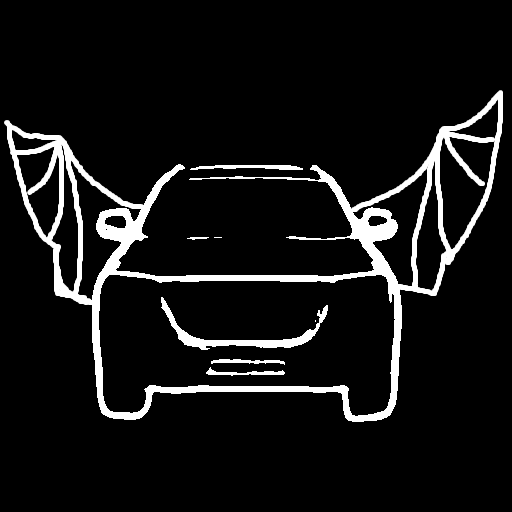

# Estimate depth for the input image and save it as a grayscale conditioning image
img = Image.open('./images/zoedepth_in.png')
out = model.infer_pil(img)
zoedepth_image = Image.fromarray(colorize(out)).convert('RGB')
zoedepth_image.save('images/zoedepth.png')

# Load the adapter and attach it to the Stable Diffusion 1.5 pipeline
adapter = T2IAdapter.from_pretrained("TencentARC/t2iadapter_zoedepth_sd15v1", torch_dtype=torch.float16)
pipe = StableDiffusionAdapterPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", adapter=adapter, safety_checker=None, torch_dtype=torch.float16, variant="fp16"
)
pipe.to('cuda')

# Generate an image guided by the prompt and the depth map
zoedepth_image_out = pipe(prompt="motorcycle", image=zoedepth_image).images[0]
zoedepth_image_out.save('images/zoedepth_out.png')
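The `colorize` helper in the script rescales depth using the 2nd and 85th percentiles instead of the raw minimum and maximum, so a few extreme depth values do not flatten the contrast of the conditioning image. The following is a minimal sketch of that normalization step on a synthetic depth ramp. The name `normalize_depth` and the synthetic data are illustrative assumptions, not part of ZoeDepth or diffusers.

```python
import numpy as np


def normalize_depth(depth, low_pct=2, high_pct=85):
    """Rescale depth so the low/high percentiles map to 0 and 1 (illustrative helper)."""
    depth = np.asarray(depth, dtype=np.float64)
    vmin = np.percentile(depth, low_pct)
    vmax = np.percentile(depth, high_pct)
    if vmin == vmax:
        # Constant depth map: nothing to stretch
        return np.zeros_like(depth)
    return (depth - vmin) / (vmax - vmin)


# Synthetic depth ramp from 1 to 10 (assumed units: meters)
depth = np.linspace(1.0, 10.0, 100).reshape(10, 10)
normalized = normalize_depth(depth)

# Values outside [0, 1] saturate at the ends of the colormap,
# which is equivalent to clipping before coloring
clipped = np.clip(normalized, 0.0, 1.0)
```

With the `gray_r` colormap used in the script, low normalized values (near surfaces) come out light and values at or beyond the 85th percentile come out black.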

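✨ Key Features

T2I Adapter networks supply additional conditioning inputs to Stable Diffusion. Each T2I checkpoint accepts a different type of conditioning and is paired with a specific base Stable Diffusion checkpoint, which makes text-to-image diffusion models more controllable.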
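As one illustration of that controllability, the `StableDiffusionAdapterPipeline` call in diffusers accepts an `adapter_conditioning_scale` argument, which multiplies the adapter outputs before they are added to the UNet features. The sketch below gathers call arguments in a hypothetical helper, `build_generation_kwargs`. The helper and its default values are assumptions for illustration, not recommendations from the model authors.

```python
def build_generation_kwargs(prompt, conditioning_scale=1.0, steps=50, negative_prompt=None):
    """Hypothetical helper: gather keyword arguments for a StableDiffusionAdapterPipeline call."""
    if conditioning_scale < 0:
        raise ValueError("conditioning_scale must be non-negative")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    kwargs = {
        "prompt": prompt,
        "num_inference_steps": steps,
        # Multiplier on the adapter outputs; lower values loosen the depth guidance
        "adapter_conditioning_scale": conditioning_scale,
    }
    if negative_prompt is not None:
        kwargs["negative_prompt"] = negative_prompt
    return kwargs


kwargs = build_generation_kwargs("motorcycle", conditioning_scale=0.8)
# With the pipeline and depth image from the quick start (needs a GPU and downloaded weights):
# image = pipe(image=zoedepth_image, **kwargs).images[0]
```

Comparing outputs at a few scale values with a fixed seed is a simple way to see how strongly the depth map constrains the result.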

📚 Documentation

Model details

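- Developed by: the authors of "T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models"

- Model type: Diffusion-based text-to-image generation model

- Language(s): English

- License: Apache 2.0

- Resources for more information: GitHub repository, paper.

- Cite as: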

@misc{
    title={T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models},
    author={Chong Mou and Xintao Wang and Liangbin Xie and Yanze Wu and Jian Zhang and Zhongang Qi and Ying Shan and Xiaohu Qie},
    year={2023},
    eprint={2302.08453},
    archivePrefix={arXiv},
    primaryClass={cs.CV}
}

Checkpoints

📄 License

This model is released under the Apache 2.0 license.
