Jina Embeddings V2 Base Zh

模型概述

模型特點

模型能力

使用案例

🚀 jina-embeddings-v2-base-zh

jina-embeddings-v2-base-zh 是由 Jina AI 訓練的文本嵌入模型,支持中英雙語,具備 8192 的序列長度。它能為單語言和跨語言應用提供高質量的文本嵌入,可用於文檔檢索等多種場景。

🚀 快速開始

使用 jina-embeddings-v2-base-zh 最簡單的方法是使用 Jina AI 的 Embedding API。

✨ 主要特性

- 雙語支持:支持中文和英文兩種語言,能處理中英混合輸入,無語言偏向性。

- 長序列處理:支持長達 8192 的序列長度,可對較長文本進行編碼。

- 高性能架構:基於 BERT 架構(JinaBERT),並應用了 ALiBi 以支持更長序列。

- 多模型選擇:除了

jina-embeddings-v2-base-zh,還提供多種不同參數和語言支持的嵌入模型。

📦 安裝指南

使用該模型前,你需要安裝 transformers 庫:

!pip install transformers

若要使用 sentence-transformers 庫,可通過以下命令安裝:

!pip install -U sentence-transformers

💻 使用示例

基礎用法

import torch

import torch.nn.functional as F

from transformers import AutoTokenizer, AutoModel

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0]

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

sentences = ['How is the weather today?', '今天天氣怎麼樣?']

tokenizer = AutoTokenizer.from_pretrained('jinaai/jina-embeddings-v2-base-zh')

model = AutoModel.from_pretrained('jinaai/jina-embeddings-v2-base-zh', trust_remote_code=True)

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

with torch.no_grad():

model_output = model(**encoded_input)

embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

embeddings = F.normalize(embeddings, p=2, dim=1)

高級用法

使用 encode 函數

from transformers import AutoModel

from numpy.linalg import norm

cos_sim = lambda a,b: (a @ b.T) / (norm(a)*norm(b))

model = AutoModel.from_pretrained('jinaai/jina-embeddings-v2-base-zh', trust_remote_code=True)

embeddings = model.encode(['How is the weather today?', '今天天氣怎麼樣?'])

print(cos_sim(embeddings[0], embeddings[1]))

控制輸入序列長度

embeddings = model.encode(

['Very long ... document'],

max_length=2048

)

使用 sentence-transformers 庫

from sentence_transformers import SentenceTransformer

from sentence_transformers.util import cos_sim

model = SentenceTransformer(

"jinaai/jina-embeddings-v2-base-zh",

trust_remote_code=True

)

# 控制輸入序列長度,最大為 8192

model.max_seq_length = 1024

embeddings = model.encode([

'How is the weather today?',

'今天天氣怎麼樣?'

])

print(cos_sim(embeddings[0], embeddings[1]))

📚 詳細文檔

預期用途與模型信息

jina-embeddings-v2-base-zh 是一個支持中英雙語的文本嵌入模型,支持 8192 的序列長度。它基於 BERT 架構(JinaBERT),該架構支持 ALiBi 的對稱雙向變體,以允許更長的序列長度。該模型專為單語言和跨語言應用的高性能而設計,並經過專門訓練以支持中英混合輸入,無語言偏向性。

數據與參數

數據和訓練細節請參考 技術報告。

替代使用方式

- 託管 SaaS:可在 Jina AI 的 Embedding API 上獲取免費密鑰開始使用。

- 私有高性能部署:可從模型套件中選擇模型,並在 AWS Sagemaker 上進行部署。

在 RAG 中使用 Jina 嵌入

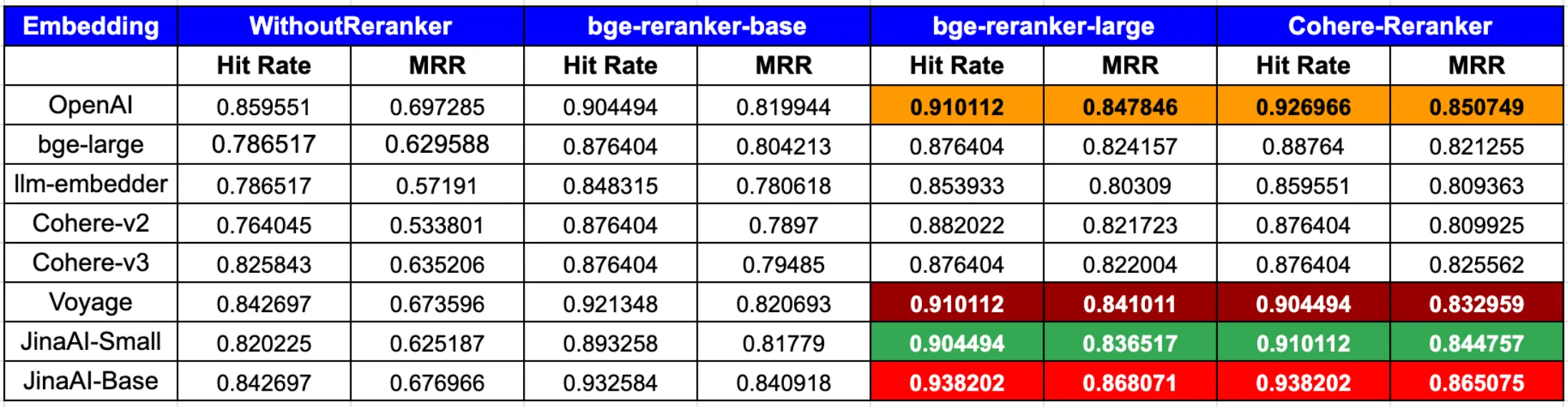

根據 LLamaIndex 的最新博客文章:

綜上所述,為了在命中率和 MRR 方面達到最佳性能,將 OpenAI 或 JinaAI-Base 嵌入與 CohereRerank/bge-reranker-large 重排器結合使用效果顯著。

故障排除

模型代碼加載失敗

如果在調用 AutoModel.from_pretrained 或通過 SentenceTransformer 類初始化模型時忘記傳遞 trust_remote_code=True 標誌,將收到模型權重無法初始化的錯誤。這是因為 transformers 庫會回退到創建默認的 BERT 模型,而不是 jina 嵌入模型:

Some weights of the model checkpoint at jinaai/jina-embeddings-v2-base-zh were not used when initializing BertModel: ['encoder.layer.2.mlp.layernorm.weight', 'encoder.layer.3.mlp.layernorm.weight', 'encoder.layer.10.mlp.wo.bias', 'encoder.layer.5.mlp.wo.bias', 'encoder.layer.2.mlp.layernorm.bias', 'encoder.layer.1.mlp.gated_layers.weight', 'encoder.layer.5.mlp.gated_layers.weight', 'encoder.layer.8.mlp.layernorm.bias', ...

用戶未登錄 Huggingface

該模型僅在 受限訪問 下可用,這意味著你需要登錄 Huggingface 才能加載它。如果你收到以下錯誤,需要提供訪問令牌,可以使用 huggingface-cli 或通過環境變量提供令牌:

OSError: jinaai/jina-embeddings-v2-base-zh is not a local folder and is not a valid model identifier listed on 'https://huggingface.co/models'

If this is a private repository, make sure to pass a token having permission to this repo with `use_auth_token` or log in with `huggingface-cli login` and pass `use_auth_token=True`.

🔧 技術細節

為什麼使用平均池化?

平均池化 會從模型輸出中獲取所有標記嵌入,並在句子/段落級別對它們進行平均。實踐證明,這是生成高質量句子嵌入最有效的方法。我們提供了一個 encode 函數來處理這個問題。

📄 許可證

該模型使用 apache-2.0 許可證。

📊 評估結果

| 任務類型 | 數據集 | 指標 | 值 |

|---|---|---|---|

| STS | C-MTEB/AFQMC | cos_sim_pearson | 48.51403119231363 |

| STS | C-MTEB/AFQMC | cos_sim_spearman | 50.5928547846445 |

| STS | C-MTEB/AFQMC | euclidean_pearson | 48.750436310559074 |

| STS | C-MTEB/AFQMC | euclidean_spearman | 50.50950238691385 |

| STS | C-MTEB/AFQMC | manhattan_pearson | 48.7866189440328 |

| STS | C-MTEB/AFQMC | manhattan_spearman | 50.58692402017165 |

| STS | C-MTEB/ATEC | cos_sim_pearson | 50.25985700105725 |

| STS | C-MTEB/ATEC | cos_sim_spearman | 51.28815934593989 |

| STS | C-MTEB/ATEC | euclidean_pearson | 52.70329248799904 |

| STS | C-MTEB/ATEC | euclidean_spearman | 50.94101139559258 |

| STS | C-MTEB/ATEC | manhattan_pearson | 52.6647237400892 |

| STS | C-MTEB/ATEC | manhattan_spearman | 50.922441325406176 |

| Classification | mteb/amazon_reviews_multi | accuracy | 34.944 |

| Classification | mteb/amazon_reviews_multi | f1 | 34.06478860660109 |

| STS | C-MTEB/BQ | cos_sim_pearson | 65.15667035488342 |

| STS | C-MTEB/BQ | cos_sim_spearman | 66.07110142081 |

| STS | C-MTEB/BQ | euclidean_pearson | 60.447598102249714 |

| STS | C-MTEB/BQ | euclidean_spearman | 61.826575796578766 |

| STS | C-MTEB/BQ | manhattan_pearson | 60.39364279354984 |

| STS | C-MTEB/BQ | manhattan_spearman | 61.78743491223281 |

| Clustering | C-MTEB/CLSClusteringP2P | v_measure | 39.96714175391701 |

| Clustering | C-MTEB/CLSClusteringS2S | v_measure | 38.39863566717934 |

| Reranking | C-MTEB/CMedQAv1-reranking | map | 83.63680381780644 |

| Reranking | C-MTEB/CMedQAv1-reranking | mrr | 86.16476190476192 |

| Reranking | C-MTEB/CMedQAv2-reranking | map | 83.74350667859487 |

| Reranking | C-MTEB/CMedQAv2-reranking | mrr | 86.10388888888889 |

| Retrieval | C-MTEB/CmedqaRetrieval | map_at_1 | 22.072 |

| Retrieval | C-MTEB/CmedqaRetrieval | map_at_10 | 32.942 |

| Retrieval | C-MTEB/CmedqaRetrieval | map_at_100 | 34.768 |

| Retrieval | C-MTEB/CmedqaRetrieval | map_at_1000 | 34.902 |

| Retrieval | C-MTEB/CmedqaRetrieval | map_at_3 | 29.357 |

| Retrieval | C-MTEB/CmedqaRetrieval | map_at_5 | 31.236000000000004 |

| Retrieval | C-MTEB/CmedqaRetrieval | mrr_at_1 | 34.259 |

| Retrieval | C-MTEB/CmedqaRetrieval | mrr_at_10 | 41.957 |

| Retrieval | C-MTEB/CmedqaRetrieval | mrr_at_100 | 42.982 |

| Retrieval | C-MTEB/CmedqaRetrieval | mrr_at_1000 | 43.042 |

| Retrieval | C-MTEB/CmedqaRetrieval | mrr_at_3 | 39.722 |

| Retrieval | C-MTEB/CmedqaRetrieval | mrr_at_5 | 40.898 |

| Retrieval | C-MTEB/CmedqaRetrieval | ndcg_at_1 | 34.259 |

| Retrieval | C-MTEB/CmedqaRetrieval | ndcg_at_10 | 39.153 |

| Retrieval | C-MTEB/CmedqaRetrieval | ndcg_at_100 | 46.493 |

| Retrieval | C-MTEB/CmedqaRetrieval | ndcg_at_1000 | 49.01 |

| Retrieval | C-MTEB/CmedqaRetrieval | ndcg_at_3 | 34.636 |

| Retrieval | C-MTEB/CmedqaRetrieval | ndcg_at_5 | 36.278 |

| Retrieval | C-MTEB/CmedqaRetrieval | precision_at_1 | 34.259 |

| Retrieval | C-MTEB/CmedqaRetrieval | precision_at_10 | 8.815000000000001 |

| Retrieval | C-MTEB/CmedqaRetrieval | precision_at_100 | 1.474 |

| Retrieval | C-MTEB/CmedqaRetrieval | precision_at_1000 | 0.179 |

| Retrieval | C-MTEB/CmedqaRetrieval | precision_at_3 | 19.73 |

| Retrieval | C-MTEB/CmedqaRetrieval | precision_at_5 | 14.174000000000001 |

| Retrieval | C-MTEB/CmedqaRetrieval | recall_at_1 | 22.072 |

| Retrieval | C-MTEB/CmedqaRetrieval | recall_at_10 | 48.484 |

| Retrieval | C-MTEB/CmedqaRetrieval | recall_at_100 | 79.035 |

| Retrieval | C-MTEB/CmedqaRetrieval | recall_at_1000 | 96.15 |

| Retrieval | C-MTEB/CmedqaRetrieval | recall_at_3 | 34.607 |

| Retrieval | C-MTEB/CmedqaRetrieval | recall_at_5 | 40.064 |

| PairClassification | C-MTEB/CMNLI | cos_sim_accuracy | 76.7047504509922 |

| PairClassification | C-MTEB/CMNLI | cos_sim_ap | 85.26649874800871 |

| PairClassification | C-MTEB/CMNLI | cos_sim_f1 | 78.13528724646915 |

| PairClassification | C-MTEB/CMNLI | cos_sim_precision | 71.57587548638132 |

| PairClassification | C-MTEB/CMNLI | cos_sim_recall | 86.01823708206688 |

| PairClassification | C-MTEB/CMNLI | dot_accuracy | 70.13830426939266 |

| PairClassification | C-MTEB/CMNLI | dot_ap | 77.01510412382171 |

| PairClassification | C-MTEB/CMNLI | dot_f1 | 73.56710042713817 |

| PairClassification | C-MTEB/CMNLI | dot_precision | 63.955094991364426 |

| PairClassification | C-MTEB/CMNLI | dot_recall | 86.57937806873977 |

| PairClassification | C-MTEB/CMNLI | euclidean_accuracy | 75.53818400481059 |

| PairClassification | C-MTEB/CMNLI | euclidean_ap | 84.34668448241264 |

| PairClassification | C-MTEB/CMNLI | euclidean_f1 | 77.51741608613047 |

| PairClassification | C-MTEB/CMNLI | euclidean_precision | 70.65614777756399 |

| PairClassification | C-MTEB/CMNLI | euclidean_recall | 85.85457096095394 |

| PairClassification | C-MTEB/CMNLI | manhattan_accuracy | 75.49007817197835 |

| PairClassification | C-MTEB/CMNLI | manhattan_ap | 84.40297506704299 |

| PairClassification | C-MTEB/CMNLI | manhattan_f1 | 77.63185324160932 |

| PairClassification | C-MTEB/CMNLI | manhattan_precision | 70.03949595636637 |

| PairClassification | C-MTEB/CMNLI | manhattan_recall | 87.07037643207856 |

| PairClassification | C-MTEB/CMNLI | max_accuracy | 76.7047504509922 |

| PairClassification | C-MTEB/CMNLI | max_ap | 85.26649874800871 |

| PairClassification | C-MTEB/CMNLI | max_f1 | 78.13528724646915 |

| Retrieval | C-MTEB/CovidRetrieval | map_at_1 | 69.178 |

| Retrieval | C-MTEB/CovidRetrieval | map_at_10 | 77.523 |

| Retrieval | C-MTEB/CovidRetrieval | map_at_100 | 77.793 |

| Retrieval | C-MTEB/CovidRetrieval | map_at_1000 | 77.79899999999999 |

| Retrieval | C-MTEB/CovidRetrieval | map_at_3 | 75.878 |

| Retrieval | C-MTEB/CovidRetrieval | map_at_5 | 76.849 |

| Retrieval | C-MTEB/CovidRetrieval | mrr_at_1 | 69.44200000000001 |

| Retrieval | C-MTEB/CovidRetrieval | mrr_at_10 | 77.55 |

| Retrieval | C-MTEB/CovidRetrieval | mrr_at_100 | 77.819 |

| Retrieval | C-MTEB/CovidRetrieval | mrr_at_1000 | 77.826 |

| Retrieval | C-MTEB/CovidRetrieval | mrr_at_3 | 75.957 |

| Retrieval | C-MTEB/CovidRetrieval | mrr_at_5 | 76.916 |

| Retrieval | C-MTEB/CovidRetrieval | ndcg_at_1 | 69.44200000000001 |

| Retrieval | C-MTEB/CovidRetrieval | ndcg_at_10 | 81.217 |

| Retrieval | C-MTEB/CovidRetrieval | ndcg_at_100 | 82.45 |

| Retrieval | C-MTEB/CovidRetrieval | ndcg_at_1000 | 82.636 |

| Retrieval | C-MTEB/CovidRetrieval | ndcg_at_3 | 77.931 |

| Retrieval | C-MTEB/CovidRetrieval | ndcg_at_5 | 79.655 |

| Retrieval | C-MTEB/CovidRetrieval | precision_at_1 | 69.44200000000001 |

| Retrieval | C-MTEB/CovidRetrieval | precision_at_10 | 9.357 |

| Retrieval | C-MTEB/CovidRetrieval | precision_at_100 | 0.993 |

| Retrieval | C-MTEB/CovidRetrieval | precision_at_1000 | 0.101 |

| Retrieval | C-MTEB/CovidRetrieval | precision_at_3 | 28.1 |

| Retrieval | C-MTEB/CovidRetrieval | precision_at_5 | 17.724 |

| Retrieval | C-MTEB/CovidRetrieval | recall_at_1 | 69.178 |

| Retrieval | C-MTEB/CovidRetrieval | recall_at_10 | 92.624 |

| Retrieval | C-MTEB/CovidRetrieval | recall_at_100 | 98.209 |

| Retrieval | C-MTEB/CovidRetrieval | recall_at_1000 | 99.684 |

| Retrieval | C-MTEB/CovidRetrieval | recall_at_3 | 83.772 |

| Retrieval | C-MTEB/CovidRetrieval | recall_at_5 | 87.882 |

| Retrieval | C-MTEB/DuRetrieval | map_at_1 | 25.163999999999998 |

| Retrieval | C-MTEB/DuRetrieval | map_at_10 | 76.386 |

| Retrieval | C-MTEB/DuRetrieval | map_at_100 | 79.339 |

| Retrieval | C-MTEB/DuRetrieval | map_at_1000 | 79.39500000000001 |

| Retrieval | C-MTEB/DuRetrieval | map_at_3 | 52.959 |

| Retrieval | C-MTEB/DuRetrieval | map_at_5 | 66.59 |

| Retrieval | C-MTEB/DuRetrieval | mrr_at_1 | 87.9 |

| Retrieval | C-MTEB/DuRetrieval | mrr_at_10 | 91.682 |

| Retrieval | C-MTEB/DuRetrieval | mrr_at_100 | 91.747 |

| Retrieval | C-MTEB/DuRetrieval | mrr_at_1000 | 91.751 |

| Retrieval | C-MTEB/DuRetrieval | mrr_at_3 | 91.267 |

| Retrieval | C-MTEB/DuRetrieval | mrr_at_5 | 91.527 |

| Retrieval | C-MTEB/DuRetrieval | ndcg_at_1 | 87.9 |

| Retrieval | C-MTEB/DuRetrieval | ndcg_at_10 | 84.569 |

| Retrieval | C-MTEB/DuRetrieval | ndcg_at_100 | 87.83800000000001 |

| Retrieval | C-MTEB/DuRetrieval | ndcg_at_1000 | 88.322 |

| Retrieval | C-MTEB/DuRetrieval | ndcg_at_3 | 83.473 |

| Retrieval | C-MTEB/DuRetrieval | ndcg_at_5 | 82.178 |

| Retrieval | C-MTEB/DuRetrieval | precision_at_1 | 87.9 |

| Retrieval | C-MTEB/DuRetrieval | precision_at_10 | 40.605000000000004 |

| Retrieval | C-MTEB/DuRetrieval | precision_at_100 | 4.752 |

| Retrieval | C-MTEB/DuRetrieval | precision_at_1000 | 0.488 |

| Retrieval | C-MTEB/DuRetrieval | precision_at_3 | 74.9 |

| Retrieval | C-MTEB/DuRetrieval | precision_at_5 | 62.96000000000001 |

| Retrieval | C-MTEB/DuRetrieval | recall_at_1 | 25.163999999999998 |

| Retrieval | C-MTEB/DuRetrieval | recall_at_10 | 85.97399999999999 |

| Retrieval | C-MTEB/DuRetrieval | recall_at_100 | 96.63000000000001 |

| Retrieval | C-MTEB/DuRetrieval | recall_at_1000 | 99.016 |

| Retrieval | C-MTEB/DuRetrieval | recall_at_3 | 55.611999999999995 |

| Retrieval | C-MTEB/DuRetrieval | recall_at_5 | 71.936 |

| Retrieval | C-MTEB/EcomRetrieval | map_at_1 | 48.6 |

| Retrieval | C-MTEB/EcomRetrieval | map_at_10 | 58.831 |

| Retrieval | C-MTEB/EcomRetrieval | map_at_100 | 59.427 |

| Retrieval | C-MTEB/EcomRetrieval | map_at_1000 | 59.44199999999999 |

| Retrieval | C-MTEB/EcomRetrieval | map_at_3 | 56.383 |

| Retrieval | C-MTEB/EcomRetrieval | map_at_5 | 57.753 |

| Retrieval | C-MTEB/EcomRetrieval | mrr_at_1 | 48.6 |

| Retrieval | C-MTEB/EcomRetrieval | mrr_at_10 | 58.831 |

| Retrieval | C-MTEB/EcomRetrieval | mrr_at_100 | 59.427 |

| Retrieval | C-MTEB/EcomRetrieval | mrr_at_1000 | 59.44199999999999 |

| Retrieval | C-MTEB/EcomRetrieval | mrr_at_3 | 56.383 |

| Retrieval | C-MTEB/EcomRetrieval | mrr_at_5 | 57.753 |

| Retrieval | C-MTEB/EcomRetrieval | ndcg_at_1 | 48.6 |

| Retrieval | C-MTEB/EcomRetrieval | ndcg_at_10 | 63.951 |

| Retrieval | C-MTEB/EcomRetrieval | ndcg_at_100 | 66.72200000000001 |

| Retrieval | C-MTEB/EcomRetrieval | ndcg_at_1000 | 67.13900000000001 |

| Retrieval | C-MTEB/EcomRetrieval | ndcg_at_3 | 58.882 |

| Retrieval | C-MTEB/EcomRetrieval | ndcg_at_5 | 61.373 |

| Retrieval | C-MTEB/EcomRetrieval | precision_at_1 | 48.6 |

| Retrieval | C-MTEB/EcomRetrieval | precision_at_10 | 8.01 |

| Retrieval | C-MTEB/EcomRetrieval | precision_at_100 | 0.928 |

| Retrieval | C-MTEB/EcomRetrieval | precision_at_1000 | 0.096 |

| Retrieval | C-MTEB/EcomRetrieval | precision_at_3 | 22.033 |

| Retrieval | C-MTEB/EcomRetrieval | precision_at_5 | 14.44 |

| Retrieval | C-MTEB/EcomRetrieval | recall_at_1 | 48.6 |

| Retrieval | C-MTEB/EcomRetrieval | recall_at_10 | 80.10000000000001 |

| Retrieval | C-MTEB/EcomRetrieval | recall_at_100 | 92.80000000000001 |

| Retrieval | C-MTEB/EcomRetrieval | recall_at_1000 | 96.1 |

| Retrieval | C-MTEB/EcomRetrieval | recall_at_3 | 66.10000000000001 |

| Retrieval | C-MTEB/EcomRetrieval | recall_at_5 | 72.2 |

| Classification | C-MTEB/IFlyTek-classification | accuracy | 47.36437091188918 |

| Classification | C-MTEB/IFlyTek-classification | f1 | 36.60946954228577 |

| Classification | C-MTEB/JDReview-classification | accuracy | 79.5684803001876 |

| Classification | C-MTEB/JDReview-classification | ap | 42.671935929201524 |

| Classification | C-MTEB/JDReview-classification | f1 | 73.31912729103752 |

| STS | C-MTEB/LCQMC | cos_sim_pearson | 68.62670112113864 |

| STS | C-MTEB/LCQMC | cos_sim_spearman | 75.74009123170768 |

| STS | C-MTEB/LCQMC | euclidean_pearson | 73.93002595958237 |

| STS | C-MTEB/LCQMC | euclidean_spearman | 75.35222935003587 |

| STS | C-MTEB/LCQMC | manhattan_pearson | 73.89870445158144 |

| STS | C-MTEB/LCQMC | manhattan_spearman | 75.31714936339398 |

| Reranking | C-MTEB/Mmarco-reranking | map | 31.5372713650176 |

| Reranking | C-MTEB/Mmarco-reranking | mrr | 30.163095238095238 |

| Retrieval | C-MTEB/MMarcoRetrieval | map_at_1 | 65.054 |

| Retrieval | C-MTEB/MMarcoRetrieval | map_at_10 | 74.156 |

| Retrieval | C-MTEB/MMarcoRetrieval | map_at_100 | 74.523 |

| Retrieval | C-MTEB/MMarcoRetrieval | map_at_1000 | 74.535 |

| Retrieval | C-MTEB/MMarcoRetrieval | map_at_3 | 72.269 |

| Retrieval | C-MTEB/MMarcoRetrieval | map_at_5 | 73.41 |

| Retrieval | C-MTEB/MMarcoRetrieval | mrr_at_1 | 67.24900000000001 |

| Retrieval | C-MTEB/MMarcoRetrieval | mrr_at_10 | 74.78399999999999 |

| Retrieval | C-MTEB/MMarcoRetrieval | mrr_at_100 | 75.107 |

| Retrieval | C-MTEB/MMarcoRetrieval | mrr_at_1000 | 75.117 |

| Retrieval | C-MTEB/MMarcoRetrieval | mrr_at_3 | 73.13499999999999 |

| Retrieval | C-MTEB/MMarcoRetrieval | mrr_at_5 | 74.13499999999999 |

| Retrieval | C-MTEB/MMarcoRetrieval | ndcg_at_1 | 67.24900000000001 |

| Retrieval | C-MTEB/MMarcoRetrieval | ndcg_at_10 | 77.96300000000001 |

| Retrieval | C-MTEB/MMarcoRetrieval | ndcg_at_100 | 79.584 |

| Retrieval | C-MTEB/MMarcoRetrieval | ndcg_at_1000 | 79.884 |

| Retrieval | C-MTEB/MMarcoRetrieval | ndcg_at_3 | 74.342 |

| Retrieval | C-MTEB/MMarcoRetrieval | ndcg_at_5 | 76.278 |

| Retrieval | C-MTEB/MMarcoRetrieval | precision_at_1 | 67.24900000000001 |

| Retrieval | C-MTEB/MMarcoRetrieval | precision_at_10 | 9.466 |

| Retrieval | C-MTEB/MMarcoRetrieval | precision_at_100 | 1.027 |

| Retrieval | C-MTEB/MMarcoRetrieval | precision_at_1000 | 0.105 |

| Retrieval | C-MTEB/MMarcoRetrieval | precision_at_3 | 27.955999999999996 |

| Retrieval | C-MTEB/MMarcoRetrieval | precision_at_5 | 17.817 |

| Retrieval | C-MTEB/MMarcoRetrieval | recall_at_1 | 65.054 |

| Retrieval | C-MTEB/MMarcoRetrieval | recall_at_10 | 89.113 |

| Retrieval | C-MTEB/MMarcoRetrieval | recall_at_100 | 96.369 |

| Retrieval | C-MTEB/MMarcoRetrieval | recall_at_1000 | 98.714 |

| Retrieval | C-MTEB/MMarcoRetrieval | recall_at_3 | 79.45400000000001 |

| Retrieval | C-MTEB/MMarcoRetrieval | recall_at_5 | 84.06 |

| Classification | mteb/amazon_massive_intent | accuracy | 68.1977135171486 |

| Classification | mteb/amazon_massive_intent | f1 | 67.23114308718404 |

| Classification | mteb/amazon_massive_scenario | accuracy | 71.92669804976462 |

| Classification | mteb/amazon_massive_scenario | f1 | 72.90628475628779 |

| Retrieval | C-MTEB/MedicalRetrieval | map_at_1 | 49.2 |

| Retrieval | C-MTEB/MedicalRetrieval | map_at_10 | 54.539 |

| Retrieval | C-MTEB/MedicalRetrieval | map_at_100 | 55.135 |

| Retrieval | C-MTEB/MedicalRetrieval | map_at_1000 | 55.19199999999999 |

| Retrieval | C-MTEB/MedicalRetrieval | map_at_3 | 53.383 |

| Retrieval | C-MTEB/MedicalRetrieval | map_at_5 | 54.142999999999994 |

| Retrieval | C-MTEB/MedicalRetrieval | mrr_at_1 | 49.2 |

| Retrieval | C-MTEB/MedicalRetrieval | mrr_at_10 | 54.539 |

| Retrieval | C-MTEB/MedicalRetrieval | mrr_at_100 | 55.135999999999996 |

| Retrieval | C-MTEB/MedicalRetrieval | mrr_at_1000 | 55.19199999999999 |

| Retrieval | C-MTEB/MedicalRetrieval | mrr_at_3 | 53.383 |

| Retrieval | C-MTEB/MedicalRetrieval | mrr_at_5 | 54.142999999999994 |

| Retrieval | C-MTEB/MedicalRetrieval | ndcg_at_1 | 49.2 |

| Retrieval | C-MTEB/MedicalRetrieval | ndcg_at_10 | 57.123000000000005 |

| Retrieval | C-MTEB/MedicalRetrieval | ndcg_at_100 | 60.21300000000001 |

| Retrieval | C-MTEB/MedicalRetrieval | ndcg_at_1000 | 61.915 |

| Retrieval | C-MTEB/MedicalRetrieval | ndcg_at_3 | 54.772 |

| Retrieval | C-MTEB/MedicalRetrieval | ndcg_at_5 | 56.157999999999994 |

| Retrieval | C-MTEB/MedicalRetrieval | precision_at_1 | 49.2 |

| Retrieval | C-MTEB/MedicalRetrieval | precision_at_10 | 6.52 |

| Retrieval | C-MTEB/MedicalRetrieval | precision_at_100 | 0.8009999999999999 |

| Retrieval | C-MTEB/MedicalRetrieval | precision_at_1000 | 0.094 |

| Retrieval | C-MTEB/MedicalRetrieval | precision_at_3 | 19.6 |

| Retrieval | C-MTEB/MedicalRetrieval | precision_at_5 | 12.44 |

| Retrieval | C-MTEB/MedicalRetrieval | recall_at_1 | 49.2 |

| Retrieval | C-MTEB/MedicalRetrieval | recall_at_10 | 65.2 |

| Retrieval | C-MTEB/MedicalRetrieval | recall_at_100 | 80.10000000000001 |

| Retrieval | C-MTEB/MedicalRetrieval | recall_at_1000 | 93.89999999999999 |

| Retrieval | C-MTEB/MedicalRetrieval | recall_at_3 | 58.8 |

| Retrieval | C-MTEB/MedicalRetrieval | recall_at_5 | 62.2 |

| Classification | C-MTEB/MultilingualSentiment-classification | accuracy | 63.29333333333334 |

| Classification | C-MTEB/MultilingualSentiment-classification | f1 | 63.03293854259612 |

| PairClassification | C-MTEB/OCNLI | cos_sim_accuracy | 75.69030860855442 |

| PairClassification | C-MTEB/OCNLI | cos_sim_ap | 80.6157833772759 |

| PairClassification | C-MTEB/OCNLI | cos_sim_f1 | 77.87524366471735 |

| PairClassification | C-MTEB/OCNLI | cos_sim_precision | 72.3076923076923 |

| PairClassification | C-MTEB/OCNLI | cos_sim_recall | 84.37170010559663 |

| PairClassification | C-MTEB/OCNLI | dot_accuracy | 67.78559826746074 |

| PairClassification | C-MTEB/OCNLI | dot_ap | 72.00871467527499 |

| PairClassification | C-MTEB/OCNLI | dot_f1 | 72.58722247394654 |

| PairClassification | C-MTEB/OCNLI | dot_precision | 63.57142857142857 |

| PairClassification | C-MTEB/OCNLI | dot_recall | 84.58289334741288 |

| PairClassification | C-MTEB/OCNLI | euclidean_accuracy | 75.20303194369248 |

| PairClassification | C-MTEB/OCNLI | euclidean_ap | 80.98587256415605 |

| PairClassification | C-MTEB/OCNLI | euclidean_f1 | 77.26396917148362 |

| PairClassification | C-MTEB/OCNLI | euclidean_precision | 71.03631532329496 |

| PairClassification | C-MTEB/OCNLI | euclidean_recall | 84.68848996832101 |

| PairClassification | C-MTEB/OCNLI | manhattan_accuracy | 75.20303194369248 |

| PairClassification | C-MTEB/OCNLI | manhattan_ap | 80.93460699513219 |

| PairClassification | C-MTEB/OCNLI | manhattan_f1 | 77.124773960217 |

| PairClassification | C-MTEB/OCNLI | manhattan_precision | 67.43083003952569 |

| PairClassification | C-MTEB/OCNLI | manhattan_recall | 90.07391763463569 |

| PairClassification | C-MTEB/OCNLI | max_accuracy | 75.69030860855442 |

| PairClassification | C-MTEB/OCNLI | max_ap | 80.98587256415605 |

| PairClassification | C-MTEB/OCNLI | max_f1 | 77.87524366471735 |

| Classification | C-MTEB/OnlineShopping-classification | accuracy | 87.00000000000001 |

| Classification | C-MTEB/OnlineShopping-classification | ap | 83.24372135949511 |

| Classification | C-MTEB/OnlineShopping-classification | f1 | 86.95554191530607 |

| STS | C-MTEB/PAWSX | cos_sim_pearson | 37.57616811591219 |

| STS | C-MTEB/PAWSX | cos_sim_spearman | 41.490259084930045 |

| STS | C-MTEB/PAWSX | euclidean_pearson | 38.9155043692188 |

| STS | C-MTEB/PAWSX | euclidean_spearman | 39.16056534305623 |

| STS | C-MTEB/PAWSX | manhattan_pearson | 38.76569892264335 |

| STS | C-MTEB/PAWSX | manhattan_spearman | 38.99891685590743 |

| STS | C-MTEB/QBQTC | cos_sim_pearson | 35.44858610359665 |

| STS | C-MTEB/QBQTC | cos_sim_spearman | 38.11128146262466 |

| STS | C-MTEB/QBQTC | euclidean_pearson | 31.928644189822457 |

| STS | C-MTEB/QBQTC | euclidean_spearman | 34.384936631696554 |

| STS | C-MTEB/QBQTC | manhattan_pearson | 31.90586687414376 |

| STS | C-MTEB/QBQTC | manhattan_spearman | 34.35770153777186 |

| STS | mteb/sts22-crosslingual-sts | cos_sim_pearson | 66.54931957553592 |

| STS | mteb/sts22-crosslingual-sts | cos_sim_spearman | 69.25068863016632 |

| STS | mteb/sts22-crosslingual-sts | euclidean_pearson | 50.26525596106869 |

| STS | mteb/sts22-crosslingual-sts | euclidean_spearman | 63.83352741910006 |

| STS | mteb/sts22-crosslingual-sts | manhattan_pearson | 49.98798282198196 |

| STS | mteb/sts22-crosslingual-sts | manhattan_spearman | 63.87649521907841 |

| STS | C-MTEB/STSB | cos_sim_pearson | 82.52782476625825 |

| STS | C-MTEB/STSB | cos_sim_spearman | 82.55618986168398 |

| STS | C-MTEB/STSB | euclidean_pearson | 78.48190631687673 |

| STS | C-MTEB/STSB | euclidean_spearman | 78.39479731354655 |

| STS | C-MTEB/STSB | manhattan_pearson | 78.51176592165885 |

| STS | C-MTEB/STSB | manhattan_spearman | 78.42363787303265 |

| Reranking | C-MTEB/T2Reranking | map | 67.36693873615643 |

| Reranking | C-MTEB/T2Reranking | mrr | 77.83847701797939 |

| Retrieval | C-MTEB/T2Retrieval | map_at_1 | 25.795 |

| Retrieval | C-MTEB/T2Retrieval | map_at_10 | 72.258 |

| Retrieval | C-MTEB/T2Retrieval | map_at_100 | 76.049 |

| Retrieval | C-MTEB/T2Retrieval | map_at_1000 | 76.134 |

| Retrieval | C-MTEB/T2Retrieval | map_at_3 | 50.697 |

| Retrieval | C-MTEB/T2Retrieval | map_at_5 | 62.324999999999996 |

| Retrieval | C-MTEB/T2Retrieval | mrr_at_1 | 86.634 |

| Retrieval | C-MTEB/T2Retrieval | mrr_at_10 | 89.792 |

| Retrieval | C-MTEB/T2Retrieval | mrr_at_100 | 89.91900000000001 |

| Retrieval | C-MTEB/T2Retrieval | mrr_at_1000 | 89.923 |

| Retrieval | C-MTEB/T2Retrieval | mrr_at_3 | 89.224 |

| Retrieval | C-MTEB/T2Retrieval | mrr_at_5 | 89.608 |

| Retrieval | C-MTEB/T2Retrieval | ndcg_at_1 | 86.634 |

| Retrieval | C-MTEB/T2Retrieval | ndcg_at_10 | 80.589 |

| Retrieval | C-MTEB/T2Retrieval | ndcg_at_100 | 84.812 |

| Retrieval | C-MTEB/T2Retrieval | ndcg_at_1000 | 85.662 |

| Retrieval | C-MTEB/T2Retrieval | ndcg_at_3 | 82.169 |

| Retrieval | C-MTEB/T2Retrieval | ndcg_at_5 | 80.619 |

| Retrieval | C-MTEB/T2Retrieval | precision_at_1 | 86.634 |

| Retrieval | C-MTEB/T2Retrieval | precision_at_10 | 40.389 |

| Retrieval | C-MTEB/T2Retrieval | precision_at_100 | 4.93 |

| Retrieval | C-MTEB/T2Retrieval | precision_at_1000 | 0.513 |

| Retrieval | C-MTEB/T2Retrieval | precision_at_3 | 72.104 |

| Retrieval | C-MTEB/T2Retrieval | precision_at_5 | 60.425 |

| Retrieval | C-MTEB/T2Retrieval | recall_at_1 | 25.795 |

| Retrieval | C-MTEB/T2Retrieval | recall_at_10 | 79.565 |

| Retrieval | C-MTEB/T2Retrieval | recall_at_100 | 93.24799999999999 |

| Retrieval | C-MTEB/T2Retrieval | recall_at_1000 | 97.595 |

| Retrieval | C-MTEB/T2Retrieval | recall_at_3 | 52.583999999999996 |

| Retrieval | C-MTEB/T2Retrieval | recall_at_5 | 66.175 |

| Classification | C-MTEB/TNews-classification | accuracy | 47.648999999999994 |

| Classification | C-MTEB/TNews-classification | f1 | 46.28925837008413 |

| Clustering | C-MTEB/ThuNewsClusteringP2P | v_measure | 54.07641891287953 |

| Clustering | C-MTEB/ThuNewsClusteringS2S | v_measure | 53.423702062353954 |

| Retrieval | C-MTEB/VideoRetrieval | map_at_1 | 55.7 |

| Retrieval | C-MTEB/VideoRetrieval | map_at_10 | 65.923 |

| Retrieval | C-MTEB/VideoRetrieval | map_at_100 | 66.42 |

| Retrieval | C-MTEB/VideoRetrieval | map_at_1000 | 66.431 |

| Retrieval | C-MTEB/VideoRetrieval | map_at_3 | 63.9 |

| Retrieval | C-MTEB/VideoRetrieval | map_at_5 | 65.225 |

| Retrieval | C-MTEB/VideoRetrieval | mrr_at_1 | 55.60000000000001 |

| Retrieval | C-MTEB/VideoRetrieval | mrr_at_10 | 65.873 |

| Retrieval | C-MTEB/VideoRetrieval | mrr_at_100 | 66.36999999999999 |

| Retrieval | C-MTEB/VideoRetrieval | mrr_at_1000 | 66.381 |

| Retrieval | C-MTEB/VideoRetrieval | mrr_at_3 | 63.849999999999994 |

| Retrieval | C-MTEB/VideoRetrieval | mrr_at_5 | 65.17500000000001 |

| Retrieval | C-MTEB/VideoRetrieval | ndcg_at_1 | 55.7 |

| Retrieval | C-MTEB/VideoRetrieval | ndcg_at_10 | 70.621 |

| Retrieval | C-MTEB/VideoRetrieval | ndcg_at_100 | 72.944 |

| Retrieval | C-MTEB/VideoRetrieval | ndcg_at_1000 | 73.25399999999999 |

| Retrieval | C-MTEB/VideoRetrieval | ndcg_at_3 | 66.547 |

| Retrieval | C-MTEB/VideoRetrieval | ndcg_at_5 | 68.93599999999999 |

| Retrieval | C-MTEB/VideoRetrieval | precision_at_1 | 55.7 |

| Retrieval | C-MTEB/VideoRetrieval | precision_at_10 | 8.52 |

| Retrieval | C-MTEB/VideoRetrieval | precision_at_100 | 0.958 |

| Retrieval | C-MTEB/VideoRetrieval | precision_at_1000 | 0.098 |

| Retrieval | C-MTEB/VideoRetrieval | precision_at_3 | 24.733 |

| Retrieval | C-MTEB/VideoRetrieval | precision_at_5 | 16 |

| Retrieval | C-MTEB/VideoRetrieval | recall_at_1 | 55.7 |

| Retrieval | C-MTEB/VideoRetrieval | recall_at_10 | 85.2 |

| Retrieval | C-MTEB/VideoRetrieval | recall_at_100 | 95.8 |

| Retrieval | C-MTEB/VideoRetrieval | recall_at_1000 | 98.3 |

| Retrieval | C-MTEB/VideoRetrieval | recall_at_3 | 74.2 |

| Retrieval | C-MTEB/VideoRetrieval | recall_at_5 | 80 |

| Classification | C-MTEB/waimai-classification | accuracy | 84.54 |

| Classification | C-MTEB/waimai-classification | ap | 66.13603199670062 |

| Classification | C-MTEB/waimai-classification | f1 | 82.61420654584116 |

🤝 聯繫我們

加入我們的 Discord 社區,與其他社區成員交流想法。

📚 引用

如果您在研究中發現 Jina Embeddings 很有用,請引用以下論文:

@article{mohr2024multi,

title={Multi-Task Contrastive Learning for 8192-Token Bilingual Text Embeddings},

author={Mohr, Isabelle and Krimmel, Markus and Sturua, Saba and Akram, Mohammad Kalim and Koukounas, Andreas and Günther, Michael and Mastrapas, Georgios and Ravishankar, Vinit and Martínez, Joan Fontanals and Wang, Feng and others},

journal={arXiv preprint arXiv:2402.17016},

year={2024}

}

Transformers 支持多種語言

Transformers 支持多種語言 Transformers 英語

Transformers 英語 Transformers 支持多種語言

Transformers 支持多種語言 Transformers 支持多種語言

Transformers 支持多種語言 Transformers

Transformers Transformers 其他

Transformers 其他 Transformers 支持多種語言

Transformers 支持多種語言 Transformers 英語

Transformers 英語