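🚀 Xinyuan-VL-2B Multimodal Large Model

Xinyuan-VL-2B is a high-performance multimodal large model for on-device use, released by Cylingo Group. It is fine-tuned from Qwen/Qwen2-VL-2B-Instruct on more than 5 million pieces of multimodal data plus a small amount of plain-text data, and it performs well on several authoritative benchmarks.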

🚀 Quick Start

To build on the thriving open-source ecosystem, we fine-tuned Qwen/Qwen2-VL-2B-Instruct to produce Cylingo/Xinyuan-VL-2B. As a result, Cylingo/Xinyuan-VL-2B is used in the same way as Qwen/Qwen2-VL-2B-Instruct.
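Since the usage example below imports `transformers` and `qwen_vl_utils` and requests PyTorch tensors, it may help to confirm that those modules are importable before loading the model. The following is a minimal sketch: the helper name `missing_packages` is hypothetical, and the list of required modules is an assumption inferred from the example's imports, not an official requirements file.

```python
import importlib.util


def missing_packages(module_names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed requirements, inferred from the usage example's imports.
    required = ["torch", "transformers", "qwen_vl_utils"]
    missing = missing_packages(required)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

Note that `qwen_vl_utils` is the import name; the package is published on PyPI as `qwen-vl-utils`.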

💻 Usage Example

Basic usage

    from transformers import Qwen2VLForConditionalGeneration, AutoTokenizer, AutoProcessor
    from qwen_vl_utils import process_vision_info

    # Load the model; dtype and device placement are selected automatically.
    model = Qwen2VLForConditionalGeneration.from_pretrained(
        "Cylingo/Xinyuan-VL-2B", torch_dtype="auto", device_map="auto"
    )
    processor = AutoProcessor.from_pretrained("Cylingo/Xinyuan-VL-2B")

    # A single user turn containing one image and one text prompt.
    messages = [
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "image": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-VL/assets/demo.jpeg",
                },
                {"type": "text", "text": "Describe this image."},
            ],
        }
    ]

    # Render the chat template and extract the vision inputs.
    text = processor.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    image_inputs, video_inputs = process_vision_info(messages)
    inputs = processor(
        text=[text],
        images=image_inputs,
        videos=video_inputs,
        padding=True,
        return_tensors="pt",
    )
    # Move the inputs to the device the model was placed on.
    inputs = inputs.to(model.device)

    # Generate, then strip the prompt tokens from each output sequence.
    generated_ids = model.generate(**inputs, max_new_tokens=128)
    generated_ids_trimmed = [
        out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
    ]
    output_text = processor.batch_decode(
        generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
    )
    print(output_text)
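The trimming step in the example is easy to overlook: `generate` returns each sequence with the prompt tokens at the front, so the example slices them off before decoding. The sketch below illustrates the same slicing on plain Python lists. The function name `trim_prompt_tokens` and the token IDs are made up for illustration and do not correspond to real vocabulary entries.

```python
def trim_prompt_tokens(input_ids, generated_ids):
    """Drop the leading prompt tokens from each generated sequence."""
    return [out[len(inp):] for inp, out in zip(input_ids, generated_ids)]


# Toy batch: every generated row begins with a copy of its prompt row.
input_ids = [[101, 7, 8], [101, 9, 10]]
generated_ids = [[101, 7, 8, 42, 43], [101, 9, 10, 44, 45]]

trimmed = trim_prompt_tokens(input_ids, generated_ids)
print(trimmed)  # [[42, 43], [44, 45]]
```

Without this step, the decoded output would repeat the rendered chat prompt ahead of the model's answer.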

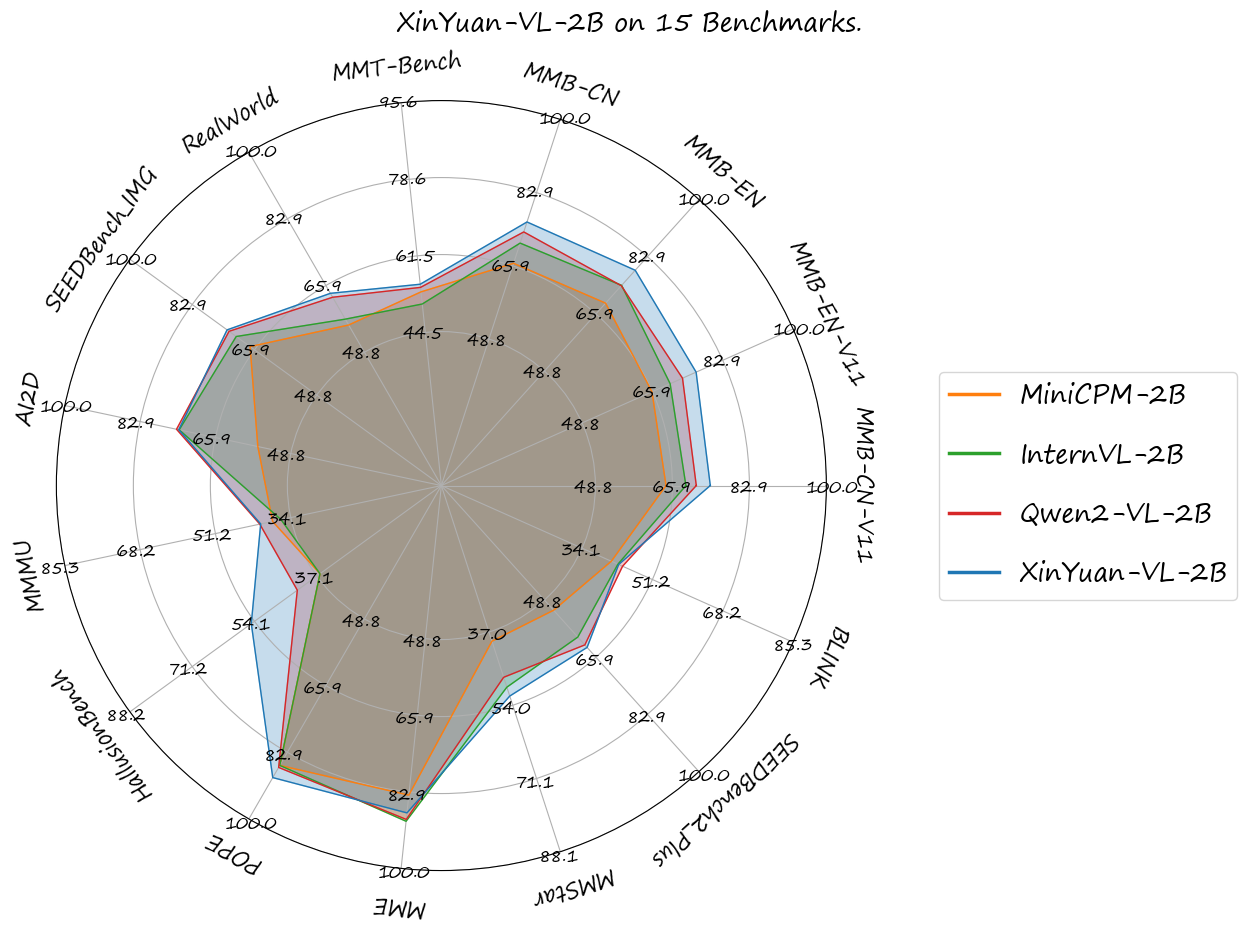

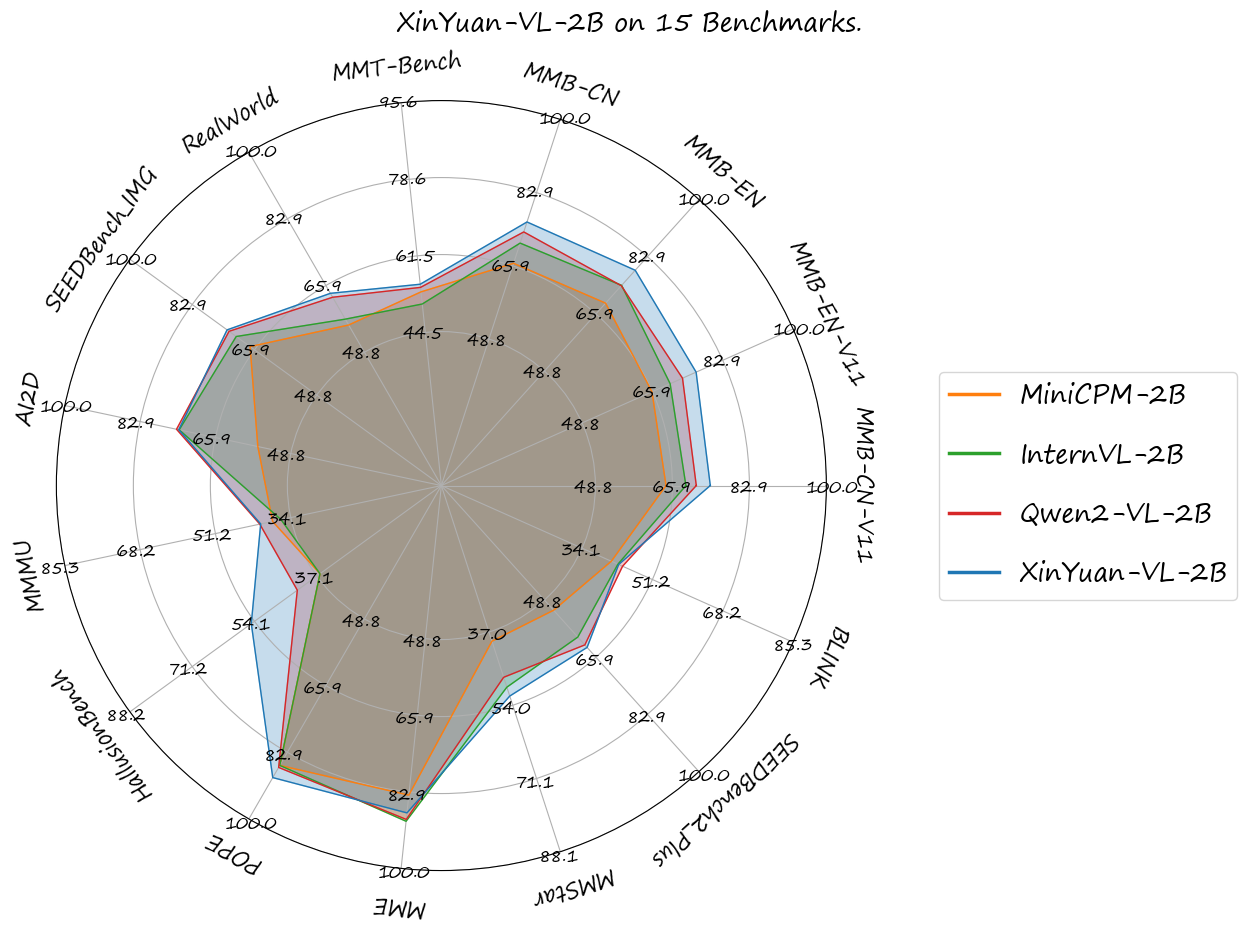

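🔧 Evaluation

We evaluated **Xinyuan-VL-2B** with the VLMEvalKit toolkit on the benchmarks below. On most of them it outperforms Qwen/Qwen2-VL-2B-Instruct, released by Alibaba Cloud, as well as other influential open-source models of comparable parameter scale.

You can view the detailed results on opencompass/open_vlm_leaderboard: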

| Benchmark | MiniCPM-2B | InternVL-2B | Qwen2-VL-2B | Xinyuan-VL-2B |
| --- | --- | --- | --- | --- |
| MMB-CN-V11-Test | 64.5 | 68.9 | 71.2 | 74.3 |
| MMB-EN-V11-Test | 65.8 | 70.2 | 73.2 | 76.5 |
| MMB-EN | 69.1 | 74.4 | 74.3 | 78.9 |
| MMB-CN | 66.5 | 71.2 | 73.8 | 76.12 |
| CCBench | 45.3 | 74.7 | 53.7 | 55.5 |
| MMT-Bench | 53.5 | 50.8 | 54.5 | 55.2 |
| RealWorld | 55.8 | 57.3 | 62.9 | 63.9 |
| SEEDBench_IMG | 67.1 | 70.9 | 72.86 | 73.4 |
| AI2D | 56.3 | 74.1 | 74.7 | 74.2 |
| MMMU | 38.2 | 36.3 | 41.1 | 40.9 |
| HallusionBench | 36.2 | 36.2 | 42.4 | 55.00 |
| POPE | 86.3 | 86.3 | 86.82 | 89.42 |
| MME | 1808.6 | 1876.8 | 1872.0 | 1854.9 |
| MMStar | 39.1 | 49.8 | 47.5 | 51.87 |
| SEEDBench2_Plus | 51.9 | 59.9 | 62.23 | 62.98 |
| BLINK | 41.2 | 42.8 | 43.92 | 42.98 |
| OCRBench | 605 | 781 | 794 | 782 |
| TextVQA | 74.1 | 73.4 | 79.7 | 77.6 |
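To see where Xinyuan-VL-2B leads its base model and where it does not, the two relevant columns of the table above can be compared directly. The sketch below copies the Qwen2-VL-2B and Xinyuan-VL-2B scores from the table and assumes that a higher score is better on every benchmark. The helper name `split_by_winner` is hypothetical.

```python
# (Qwen2-VL-2B, Xinyuan-VL-2B) scores copied from the table above.
SCORES = {
    "MMB-CN-V11-Test": (71.2, 74.3),
    "MMB-EN-V11-Test": (73.2, 76.5),
    "MMB-EN": (74.3, 78.9),
    "MMB-CN": (73.8, 76.12),
    "CCBench": (53.7, 55.5),
    "MMT-Bench": (54.5, 55.2),
    "RealWorld": (62.9, 63.9),
    "SEEDBench_IMG": (72.86, 73.4),
    "AI2D": (74.7, 74.2),
    "MMMU": (41.1, 40.9),
    "HallusionBench": (42.4, 55.00),
    "POPE": (86.82, 89.42),
    "MME": (1872.0, 1854.9),
    "MMStar": (47.5, 51.87),
    "SEEDBench2_Plus": (62.23, 62.98),
    "BLINK": (43.92, 42.98),
    "OCRBench": (794, 782),
    "TextVQA": (79.7, 77.6),
}


def split_by_winner(scores):
    """Split benchmark names by which model scores higher (assumes higher is better)."""
    xinyuan_higher = [name for name, (base, tuned) in scores.items() if tuned > base]
    base_higher = [name for name, (base, tuned) in scores.items() if tuned < base]
    return xinyuan_higher, base_higher


wins, losses = split_by_winner(SCORES)
print(f"Xinyuan-VL-2B scores higher on {len(wins)} of {len(SCORES)} benchmarks")
print("Qwen2-VL-2B scores higher on:", ", ".join(losses))
```

With the values in the table, Xinyuan-VL-2B is ahead on 12 of the 18 benchmarks, and Qwen2-VL-2B is ahead on AI2D, MMMU, MME, BLINK, OCRBench, and TextVQA.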

📄 License

This project is licensed under the Apache-2.0 license.
