🚀 ERNIE-Code

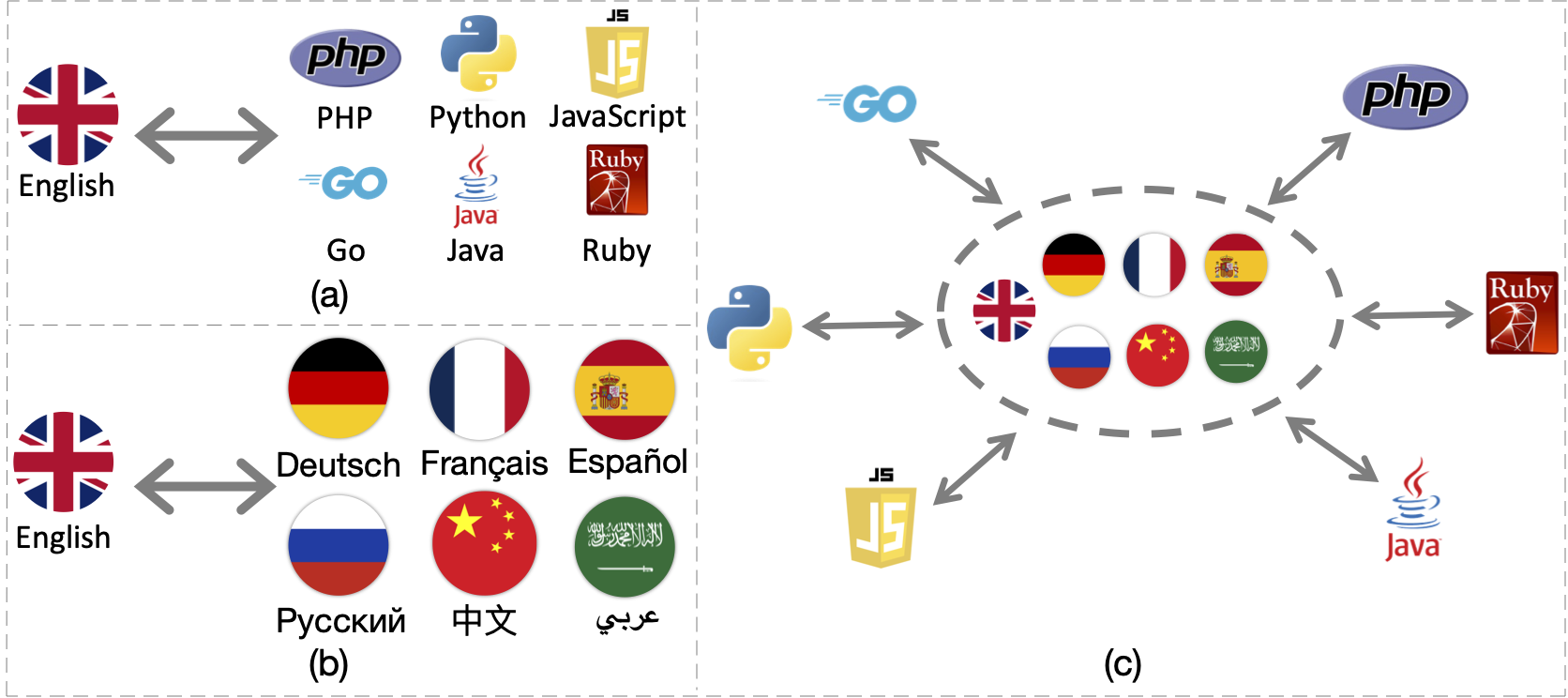

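ERNIE-Code is a unified large language model (LLM) that bridges 116 natural languages and 6 programming languages. It is trained with two universal cross-lingual pre-training methods and performs well on a range of code-intelligence end tasks, including multilingual code-to-text, text-to-code, code-to-code, and text-to-text generation.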

ERNIE-Code: Beyond English-Centric Cross-lingual Pretraining for Programming Languages

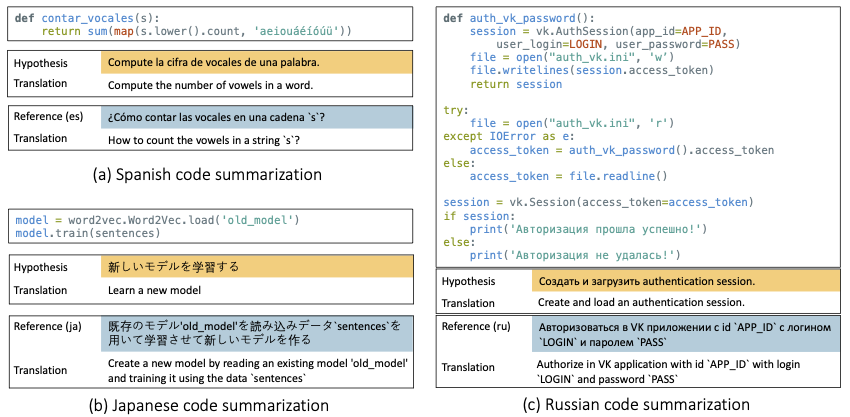

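ERNIE-Code uses two methods for universal cross-lingual pre-training. The first is span-corruption language modeling, which learns patterns from monolingual natural language (NL) or programming language (PL) data. The second is pivot-based translation language modeling, which relies on parallel data across many NLs and PLs. Extensive experiments show that ERNIE-Code outperforms previous multilingual LLMs for PL or NL across a wide range of code-intelligence end tasks. It also shows an advantage in zero-shot prompting for multilingual code summarization and text-to-text translation.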
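Span-corruption language modeling can be illustrated with a minimal T5-style sketch: contiguous spans of a token sequence are replaced by sentinel tokens, and the model is trained to output the removed spans. The `<extra_id_N>` sentinel names follow the T5/mT5 convention and, like the whitespace tokenization and the hand-picked spans in the usage lines, are assumptions for illustration. ERNIE-Code's actual span sampling (noise density, span lengths) and its pivot-based objective are not reproduced here.

```python
from typing import List, Tuple


def span_corrupt(
    tokens: List[str], spans: List[Tuple[int, int]]
) -> Tuple[List[str], List[str]]:
    """Apply T5-style span corruption (illustrative sketch).

    Each (start, length) span is replaced in the input by a sentinel token;
    the target lists every sentinel followed by the tokens it replaced and
    ends with one closing sentinel. Sentinel naming (<extra_id_N>) is an
    assumption borrowed from T5/mT5.
    """
    corrupted: List[str] = []
    target: List[str] = []
    cursor = 0
    for sentinel_id, (start, length) in enumerate(sorted(spans)):
        if start < cursor or length <= 0 or start + length > len(tokens):
            raise ValueError(f"invalid or overlapping span: {(start, length)}")
        sentinel = f"<extra_id_{sentinel_id}>"
        corrupted.extend(tokens[cursor:start])       # keep unmasked tokens
        corrupted.append(sentinel)                   # mask the span
        target.append(sentinel)
        target.extend(tokens[start:start + length])  # span to be predicted
        cursor = start + length
    corrupted.extend(tokens[cursor:])
    target.append(f"<extra_id_{len(spans)}>")        # closing sentinel
    return corrupted, target


# Example on a monolingual PL sequence (whitespace tokenization for simplicity)
tokens = "def add ( a , b ) : return a + b".split()
corrupted, target = span_corrupt(tokens, [(1, 1), (8, 2)])
print(" ".join(corrupted))  # def <extra_id_0> ( a , b ) : <extra_id_1> + b
print(" ".join(target))     # <extra_id_0> add <extra_id_1> return a <extra_id_2>
```

The same function applies unchanged to NL text; only the token sequence differs.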

ACL 2023 (Findings) | arXiv

🚀 Quick Start

ERNIE-Code is a unified LLM that connects many natural and programming languages. The example below shows how to use it for these tasks.

💻 Usage Examples

Basic Usage

```python
import torch
from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

model_name = "baidu/ernie-code-560m"
model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)


def format_code_with_spm_compatablity(line: str):
    """Pre-process code input: replace each space that directly follows a
    newline (i.e. indentation) with the <|space|> token so that indentation
    is preserved through SentencePiece (spm) tokenization."""
    format_dict = {
        " ": "<|space|>"
    }
    tokens = list(line)
    i = 0
    while i < len(tokens):
        if line[i] == "\n":
            while i + 1 < len(tokens) and tokens[i + 1] == " ":
                tokens[i + 1] = format_dict.get(" ")
                i += 1
        i += 1
    formatted_line = "".join(tokens)
    return formatted_line


# Example code input: uncomment these lines to translate a code snippet.
# TYPE = "code"  # input type, one of ("code", "text")
# input_text = "arr.sort()"
# prompt = "translate python to java: \n%s" % (input_text)  # your prompt here

# Example text input
TYPE = "text"  # input type, one of ("code", "text")
input_text = "quick sort"
prompt = "translate English to Japanese: \n%s" % (input_text)

assert TYPE in ("code", "text")
# Pre-process code input
if TYPE == "code":
    prompt = format_code_with_spm_compatablity(prompt)

model_inputs = tokenizer(prompt, max_length=512, padding=False, truncation=True, return_tensors="pt")

# Run on GPU when available, otherwise fall back to CPU
device = "cuda" if torch.cuda.is_available() else "cpu"
model = model.to(device)
input_ids = model_inputs.input_ids.to(device)
attention_mask = model_inputs.attention_mask.to(device)
output = model.generate(input_ids=input_ids, attention_mask=attention_mask,
                        num_beams=5, max_length=20)  # adjust generation settings to your needs

# batch_decode returns a list with one string per generated sequence
output = tokenizer.batch_decode(output, skip_special_tokens=True)


def clean_up_code_spaces(s: str):
    """Post-process model output: remove one space after each special or
    whitespace token, strip leftover special tokens, and turn <|space|>
    back into real spaces."""
    new_tokens = ["<pad>", "</s>", "<unk>", "\n", "\t", "<|space|>" * 4, "<|space|>" * 2, "<|space|>"]
    for tok in new_tokens:
        s = s.replace(f"{tok} ", tok)

    cleaned_tokens = ["<pad>", "</s>", "<unk>"]
    for tok in cleaned_tokens:
        s = s.replace(tok, "")
    s = s.replace("<|space|>", " ")
    return s


output = [clean_up_code_spaces(pred) for pred in output]
print(output)
```
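The pre- and post-processing helpers can be checked without loading the model. The sketch below restates the two functions from the example with the same behavior and shows that indentation survives a round trip, including when the decoded text contains extra spaces after `\n` and `<|space|>` tokens. The spaced "decoder output" string is a hand-written assumption about what such output might look like, not a recorded model output.

```python
def format_code_with_spm_compatablity(line: str) -> str:
    """Replace each space that directly follows a newline with <|space|>."""
    tokens = list(line)
    i = 0
    while i < len(tokens):
        if tokens[i] == "\n":
            # Encode the run of indentation spaces after this newline
            while i + 1 < len(tokens) and tokens[i + 1] == " ":
                tokens[i + 1] = "<|space|>"
                i += 1
        i += 1
    return "".join(tokens)


def clean_up_code_spaces(s: str) -> str:
    """Remove one space after special/whitespace tokens, strip special
    tokens, and map <|space|> back to a real space."""
    new_tokens = ["<pad>", "</s>", "<unk>", "\n", "\t",
                  "<|space|>" * 4, "<|space|>" * 2, "<|space|>"]
    for tok in new_tokens:
        s = s.replace(f"{tok} ", tok)
    for tok in ["<pad>", "</s>", "<unk>"]:
        s = s.replace(tok, "")
    return s.replace("<|space|>", " ")


code = "def f(x):\n    return x"
encoded = format_code_with_spm_compatablity(code)
print(repr(encoded))  # 'def f(x):\n<|space|><|space|><|space|><|space|>return x'

# Hypothetical decoder output with extra spaces and a trailing </s>
decoded = "def f(x):\n <|space|><|space|><|space|><|space|> return x</s>"
print(clean_up_code_spaces(decoded) == code)  # True
```

Only spaces that follow a newline are encoded, so spaces inside a line (for example around `=`) pass through unchanged.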

For fine-tuning, you can refer to the seq2seq translation example code.
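A fine-tuning dataset could reuse the prompt template and whitespace encoding from the inference example. The helper below is a hypothetical sketch: the names `encode_leading_spaces` and `make_translation_pair`, the dictionary keys, and the choice to also encode code targets are assumptions, not part of the released code. The resulting strings could then be tokenized for a seq2seq trainer, for example with the Transformers tokenizer's `text_target` argument.

```python
from typing import Dict


def encode_leading_spaces(text: str) -> str:
    """Same rule as format_code_with_spm_compatablity: spaces that directly
    follow a newline (indentation) become <|space|>."""
    chars = list(text)
    for i in range(1, len(chars)):
        # A space counts as indentation if the previous element is a newline
        # or an already-encoded indentation space.
        if chars[i] == " " and chars[i - 1] in ("\n", "<|space|>"):
            chars[i] = "<|space|>"
    return "".join(chars)


def make_translation_pair(
    src_lang: str,
    tgt_lang: str,
    source: str,
    target: str,
    source_is_code: bool = False,
    target_is_code: bool = False,
) -> Dict[str, str]:
    """Build one hypothetical fine-tuning example using the prompt template
    from the inference example: "translate <src> to <tgt>: \\n<input>"."""
    prompt = "translate %s to %s: \n%s" % (src_lang, tgt_lang, source)
    if source_is_code:
        prompt = encode_leading_spaces(prompt)
    if target_is_code:
        target = encode_leading_spaces(target)
    return {"source": prompt, "target": target}


pair = make_translation_pair(
    "python", "java",
    "for x in xs:\n    print(x)",
    "for (int x : xs) {\n    System.out.println(x);\n}",
    source_is_code=True, target_is_code=True,
)
print(pair["source"])
print(pair["target"])
```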

You can also check the official inference code in PaddleNLP.

Zero-shot Examples

📚 Documentation

BibTeX Citation

```bibtex
@inproceedings{chai-etal-2023-ernie,
    title = "{ERNIE}-Code: Beyond {E}nglish-Centric Cross-lingual Pretraining for Programming Languages",
    author = "Chai, Yekun and
      Wang, Shuohuan and
      Pang, Chao and
      Sun, Yu and
      Tian, Hao and
      Wu, Hua",
    booktitle = "Findings of the Association for Computational Linguistics: ACL 2023",
    month = jul,
    year = "2023",
    address = "Toronto, Canada",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.findings-acl.676",
    pages = "10628--10650",
    abstract = "Software engineers working with the same programming language (PL) may speak different natural languages (NLs) and vice versa, erecting huge barriers to communication and working efficiency. Recent studies have demonstrated the effectiveness of generative pre-training in computer programs, yet they are always English-centric. In this work, we step towards bridging the gap between multilingual NLs and multilingual PLs for large language models (LLMs). We release ERNIE-Code, a unified pre-trained language model for 116 NLs and 6 PLs. We employ two methods for universal cross-lingual pre-training: span-corruption language modeling that learns patterns from monolingual NL or PL; and pivot-based translation language modeling that relies on parallel data of many NLs and PLs. Extensive results show that ERNIE-Code outperforms previous multilingual LLMs for PL or NL across a wide range of end tasks of code intelligence, including multilingual code-to-text, text-to-code, code-to-code, and text-to-text generation. We further show its advantage of zero-shot prompting on multilingual code summarization and text-to-text translation. We release our code and pre-trained checkpoints.",
}
```

📄 License

This project is licensed under the MIT License.
