🚀 Model card for MatCha - fine-tuned on Chart2text-pew

This is the MatCha model fine-tuned on the Chart2text-pew dataset. This checkpoint may be better suited to chart summarization tasks.

🚀 Quick Start

📋 General Information

| Property | Details |
|---|---|
| Supported Languages | en, fr, ro, de, multilingual |
| Inference | false |
| Pipeline Tag | visual-question-answering |
| License | apache-2.0 |
| Tags | matcha |

📚 Table of Contents

- Features

- Usage Examples

- Contribution

- Citation

✨ Features

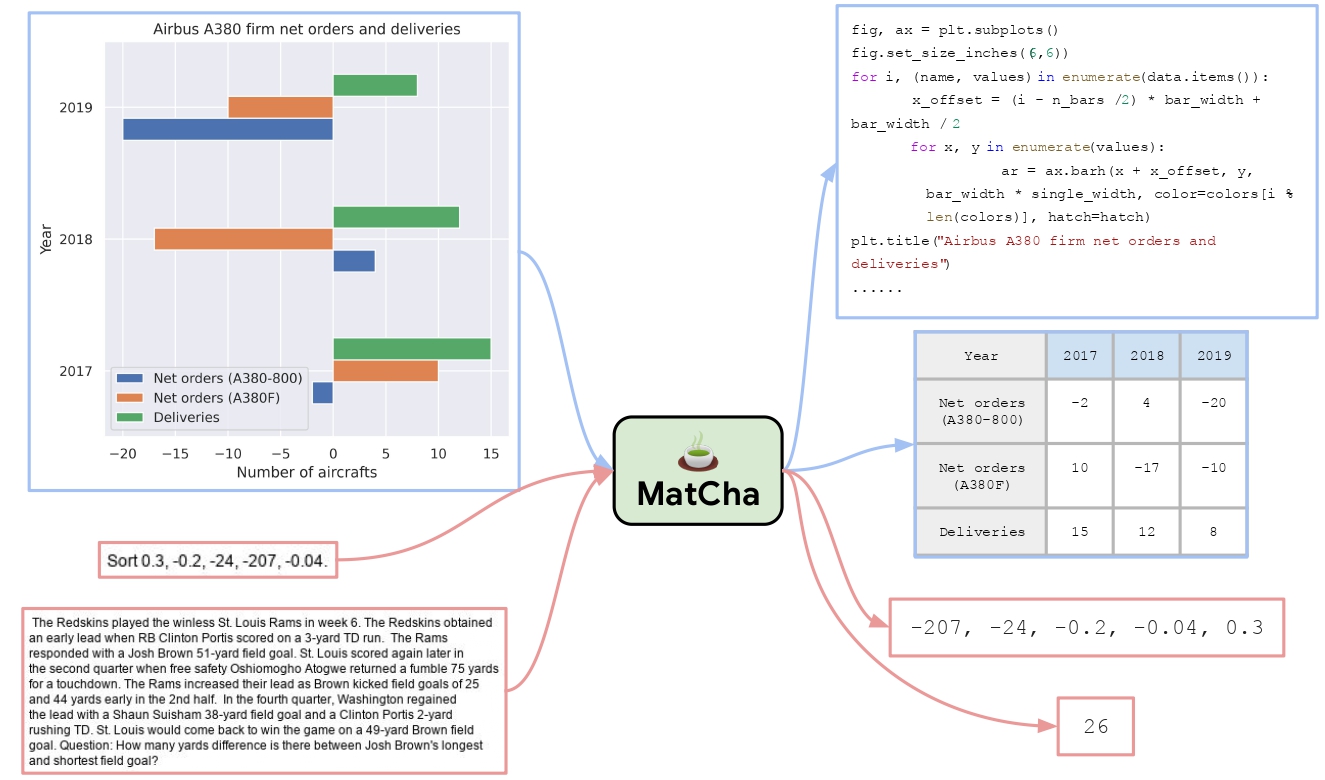

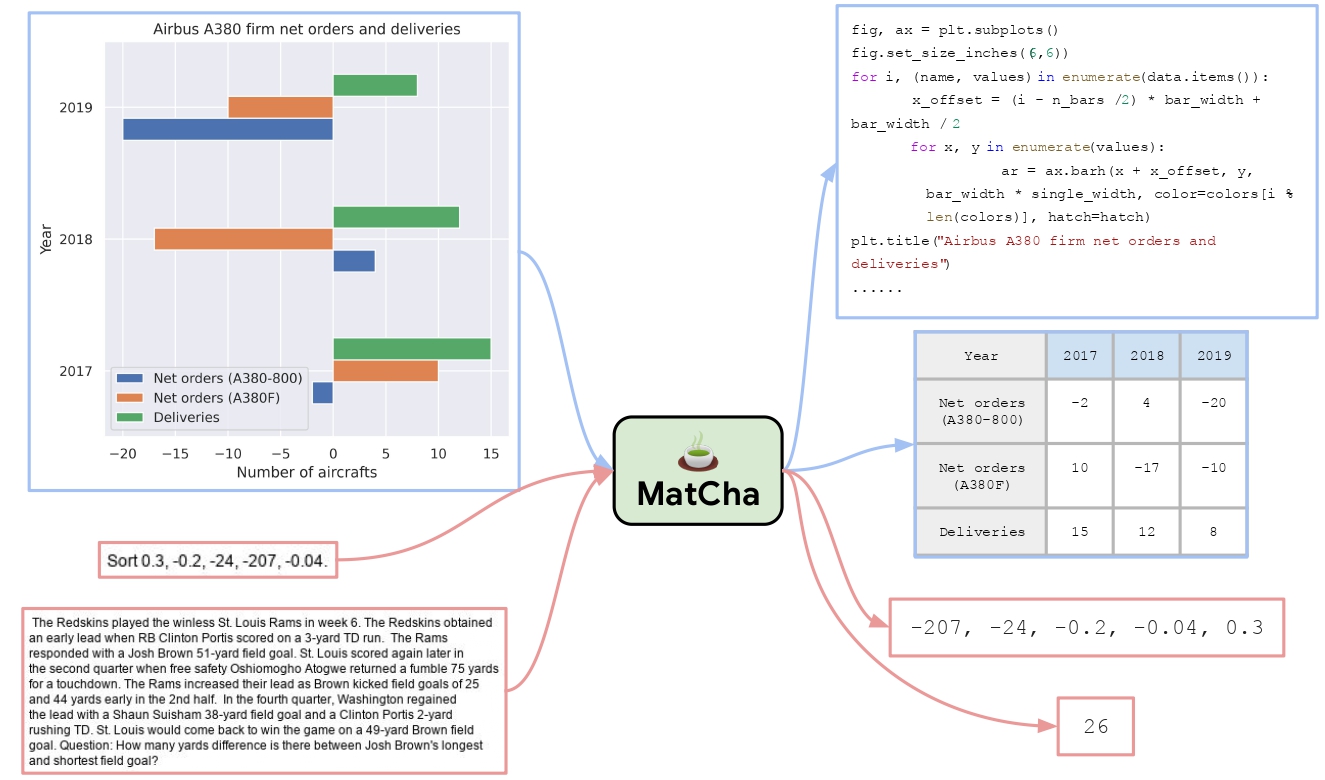

According to the paper's abstract, visual language data such as plots, charts, and infographics are ubiquitous in the human world, yet state-of-the-art vision-language models do not perform well on them. MatCha (Math reasoning and Chart derendering pretraining) is proposed to improve visual language models' ability to jointly model charts/plots and language data. It introduces several pretraining tasks covering plot deconstruction and numerical reasoning, which are key capabilities in visual language modeling. Pretraining starts from Pix2Struct, and on standard benchmarks such as PlotQA and ChartQA, MatCha outperforms state-of-the-art methods by as much as nearly 20%. MatCha pretraining also transfers to domains such as screenshots, textbook diagrams, and document figures, where it shows overall improvement, supporting its usefulness for broader visual language tasks.

💻 Usage Examples

🔍 Basic Usage

from transformers import Pix2StructProcessor, Pix2StructForConditionalGeneration

import requests

from PIL import Image

processor = Pix2StructProcessor.from_pretrained('google/matcha-chart2text-pew')

model = Pix2StructForConditionalGeneration.from_pretrained('google/matcha-chart2text-pew')

url = "https://raw.githubusercontent.com/vis-nlp/ChartQA/main/ChartQA%20Dataset/val/png/20294671002019.png"

image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(images=image, return_tensors="pt")

predictions = model.generate(**inputs, max_new_tokens=512)

print(processor.decode(predictions[0], skip_special_tokens=True))
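When summarizing more than one chart, the steps above can be wrapped in a small helper so that the processor and model are loaded once and reused. The following is a minimal sketch, not part of the original model card: `summarize_chart` is a hypothetical name, and it assumes the `processor` and `model` objects were created as in the example above.

```python
def summarize_chart(image, processor, model, max_new_tokens=512):
    """Return the generated summary text for a single chart image.

    Hypothetical helper: `processor` and `model` are expected to be the
    Pix2StructProcessor and Pix2StructForConditionalGeneration objects
    loaded in the basic usage example.
    """
    # Convert the image into the tensor inputs the model expects.
    inputs = processor(images=image, return_tensors="pt")
    # Generate token ids for the summary, capped at max_new_tokens.
    predictions = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode the first (and only) generated sequence back into text.
    return processor.decode(predictions[0], skip_special_tokens=True)
```

With the objects from the basic usage example, `print(summarize_chart(image, processor, model))` is equivalent to the last three lines of that example.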

⚙️ Converting from T5x to Hugging Face

You can use the convert_pix2struct_checkpoint_to_pytorch.py script as follows:

python convert_pix2struct_checkpoint_to_pytorch.py --t5x_checkpoint_path PATH_TO_T5X_CHECKPOINTS --pytorch_dump_path PATH_TO_SAVE --is_vqa

If you are converting a large model, run:

python convert_pix2struct_checkpoint_to_pytorch.py --t5x_checkpoint_path PATH_TO_T5X_CHECKPOINTS --pytorch_dump_path PATH_TO_SAVE --use-large --is_vqa
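If the conversion is driven from Python (for example, to convert several checkpoints in a loop), the two commands above can be assembled programmatically. This is a sketch only: `build_convert_command` and `run_conversion` are hypothetical helpers, the flag names are copied verbatim from the commands above rather than checked against the script, and the script is assumed to be in the current working directory.

```python
import subprocess
import sys


def build_convert_command(t5x_checkpoint_path, pytorch_dump_path,
                          use_large=False, is_vqa=True):
    """Build the argument list for the conversion script (hypothetical helper)."""
    cmd = [
        sys.executable,  # run the script with the current Python interpreter
        "convert_pix2struct_checkpoint_to_pytorch.py",
        "--t5x_checkpoint_path", str(t5x_checkpoint_path),
        "--pytorch_dump_path", str(pytorch_dump_path),
    ]
    # Optional flags, in the same order as in the commands above.
    if use_large:
        cmd.append("--use-large")
    if is_vqa:
        cmd.append("--is_vqa")
    return cmd


def run_conversion(t5x_checkpoint_path, pytorch_dump_path, **options):
    """Run the conversion; raises CalledProcessError on a non-zero exit code."""
    cmd = build_convert_command(t5x_checkpoint_path, pytorch_dump_path, **options)
    subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when checkpoint paths contain spaces.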

Once saved, you can push your converted model with the following snippet:

from transformers import Pix2StructForConditionalGeneration, Pix2StructProcessor

model = Pix2StructForConditionalGeneration.from_pretrained(PATH_TO_SAVE)

processor = Pix2StructProcessor.from_pretrained(PATH_TO_SAVE)

model.push_to_hub("USERNAME/MODEL_NAME")

processor.push_to_hub("USERNAME/MODEL_NAME")

🤝 Contribution

This model was originally contributed by Fangyu Liu, Francesco Piccinno et al. and added to the Hugging Face ecosystem by Younes Belkada.

📄 Citation

If you want to cite this work, please consider citing the original paper:

@misc{liu2022matcha,

title={MatCha: Enhancing Visual Language Pretraining with Math Reasoning and Chart Derendering},

author={Fangyu Liu and Francesco Piccinno and Syrine Krichene and Chenxi Pang and Kenton Lee and Mandar Joshi and Yasemin Altun and Nigel Collier and Julian Martin Eisenschlos},

year={2022},

eprint={2212.09662},

archivePrefix={arXiv},

primaryClass={cs.CL}

}