GLM 4.1V 9B Thinking

GLM-4.1V-9B-Thinking is an open-source vision-language model built on the GLM-4-9B-0414 foundation model. It focuses on improving reasoning in complex tasks and supports a 64k context length and image resolutions up to 4K.

Image-to-Text | Transformers | Supports multiple languages | Open-source license: MIT
Tags: multimodal reasoning, 4K image processing, 64k long context


Release date: 6/28/2025

Model Overview

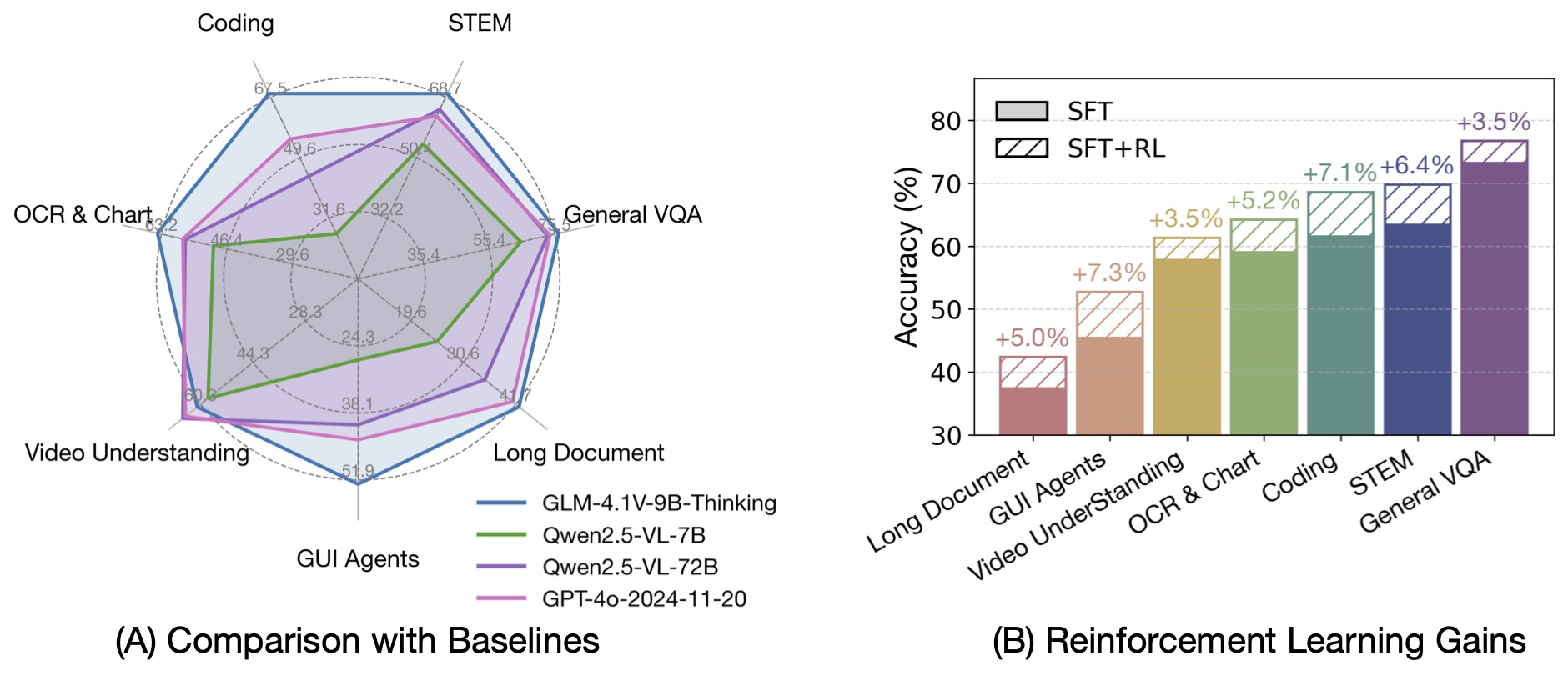

This model explores the upper limit of reasoning ability in vision-language models. By introducing a "thinking paradigm" and reinforcement learning, it achieves state-of-the-art performance among models at the 10-billion-parameter scale, and it supports both Chinese and English.

Model Features

Powerful reasoning ability

Using a chain-of-thought reasoning paradigm, the model significantly improves the accuracy, richness, and interpretability of its answers and performs well on complex tasks.
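Because the model emits its reasoning before its final answer, applications often need to separate the two, for example to show only the answer and keep the reasoning for inspection. The sketch below assumes the model wraps its reasoning in <think>...</think> and its answer in <answer>...</answer> tags; verify this against the official model card before relying on it. The helper split_thinking_output and the ThinkingOutput type are hypothetical names for illustration, not part of any library.

```python
import re
from typing import NamedTuple, Optional


class ThinkingOutput(NamedTuple):
    reasoning: Optional[str]  # text inside <think>...</think>, or None if absent
    answer: str               # text inside <answer>...</answer>, or the remaining text


# Assumed tag format (hypothetical); check the model's actual output.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
_ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def split_thinking_output(text: str) -> ThinkingOutput:
    """Separate the chain-of-thought from the final answer in raw model output."""
    think = _THINK_RE.search(text)
    reasoning = think.group(1).strip() if think else None
    answer_match = _ANSWER_RE.search(text)
    if answer_match:
        answer = answer_match.group(1).strip()
    else:
        # No <answer> tag: fall back to whatever follows the reasoning block.
        answer = (text[think.end():] if think else text).strip()
    return ThinkingOutput(reasoning, answer)


raw = "<think>The chart shows two bars; B is taller.</think><answer>B</answer>"
result = split_thinking_output(raw)
print(result.reasoning)  # The chart shows two bars; B is taller.
print(result.answer)     # B
```

The fallback branch keeps the helper usable if the output is truncated or the answer tag is missing, at the cost of being less strict about the format.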

Long context support

Supports a 64k context length, making it suitable for long documents and multi-turn conversations.
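Even a 64k context eventually fills up in long multi-turn sessions. One common client-side approach, sketched here under stated assumptions, is to keep the most recent turns that fit a token budget while reserving room for the reply. It assumes "64k" means 65,536 tokens and uses a toy whitespace token counter; a real application would count tokens with the model's own tokenizer and would also account for the tokens that images consume. trim_history and approx_tokens are hypothetical helpers.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "..."}

# Assumption: "64k" taken as 65,536 tokens; adjust to the model's real limit.
CONTEXT_TOKENS = 64 * 1024


def trim_history(
    messages: List[Message],
    count_tokens: Callable[[str], int],
    budget: int = CONTEXT_TOKENS,
    reserve_for_output: int = 4096,
) -> List[Message]:
    """Keep the most recent messages whose total token count fits the budget."""
    limit = budget - reserve_for_output
    kept: List[Message] = []
    used = 0
    # Walk backwards so the newest turns are kept first.
    for msg in reversed(messages):
        cost = count_tokens(msg["content"])
        if used + cost > limit:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


def approx_tokens(text: str) -> int:
    """Toy token counter (whitespace split), for illustration only."""
    return len(text.split())


history = [
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six"},
]
print(trim_history(history, approx_tokens, budget=10, reserve_for_output=6))
# [{'role': 'assistant', 'content': 'four five'}, {'role': 'user', 'content': 'six'}]
```

Dropping whole turns from the oldest end keeps each remaining message intact; a system prompt, if used, would normally be pinned outside this loop.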

High-resolution image processing

Accepts images of any aspect ratio at resolutions up to 4K, so it can process high-definition images.
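Before sending very large images, a client may want to downscale them so they stay within the supported resolution without distorting the aspect ratio. The sketch below is a client-side pre-check only, not the model's own image preprocessing. It assumes "4K" means a 3840x2160 pixel budget, and fit_to_pixel_budget is a hypothetical helper that scales both sides by the same factor so the total pixel count fits.

```python
import math
from typing import Tuple

# Assumption: "4K" is read as a 3840x2160 pixel budget. The model's own image
# processor may apply different limits.
MAX_PIXELS = 3840 * 2160


def fit_to_pixel_budget(width: int, height: int, max_pixels: int = MAX_PIXELS) -> Tuple[int, int]:
    """Downscale (never upscale) so width * height <= max_pixels, keeping the aspect ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    if width * height <= max_pixels:
        return width, height  # already within budget; any aspect ratio is kept as-is
    # Scaling both sides by s scales the area by s**2.
    scale = math.sqrt(max_pixels / (width * height))
    new_w = max(1, math.floor(width * scale))
    new_h = max(1, math.floor(height * scale))
    # Guard against floating-point rounding pushing the product over the budget.
    while new_w * new_h > max_pixels:
        if new_w >= new_h:
            new_w -= 1
        else:
            new_h -= 1
    return new_w, new_h


print(fit_to_pixel_budget(7680, 4320))  # (3840, 2160)
print(fit_to_pixel_budget(1080, 1920))  # (1080, 1920), unchanged
```

Using a pixel-area budget rather than fixed width and height limits lets tall or wide images keep their shape, which matches the card's claim of support for any aspect ratio.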

Bilingual support

The open-source release supports both Chinese and English, making it suitable for bilingual applications.

Model Capabilities

Image description

Complex task reasoning

Long context understanding

Multimodal intelligent agent

Use Cases

Intelligent system

Complex problem solving

Use the model's reasoning ability to solve complex multimodal problems.

Outperforms the 72-billion-parameter Qwen-2.5-VL-72B on 18 benchmark tasks.

Long document understanding

Process long documents and multi-turn conversations within a 64k context length.

Image analysis

High-definition image description

Provide detailed descriptions of high-definition images at up to 4K resolution.
