Lilt Roberta En Base

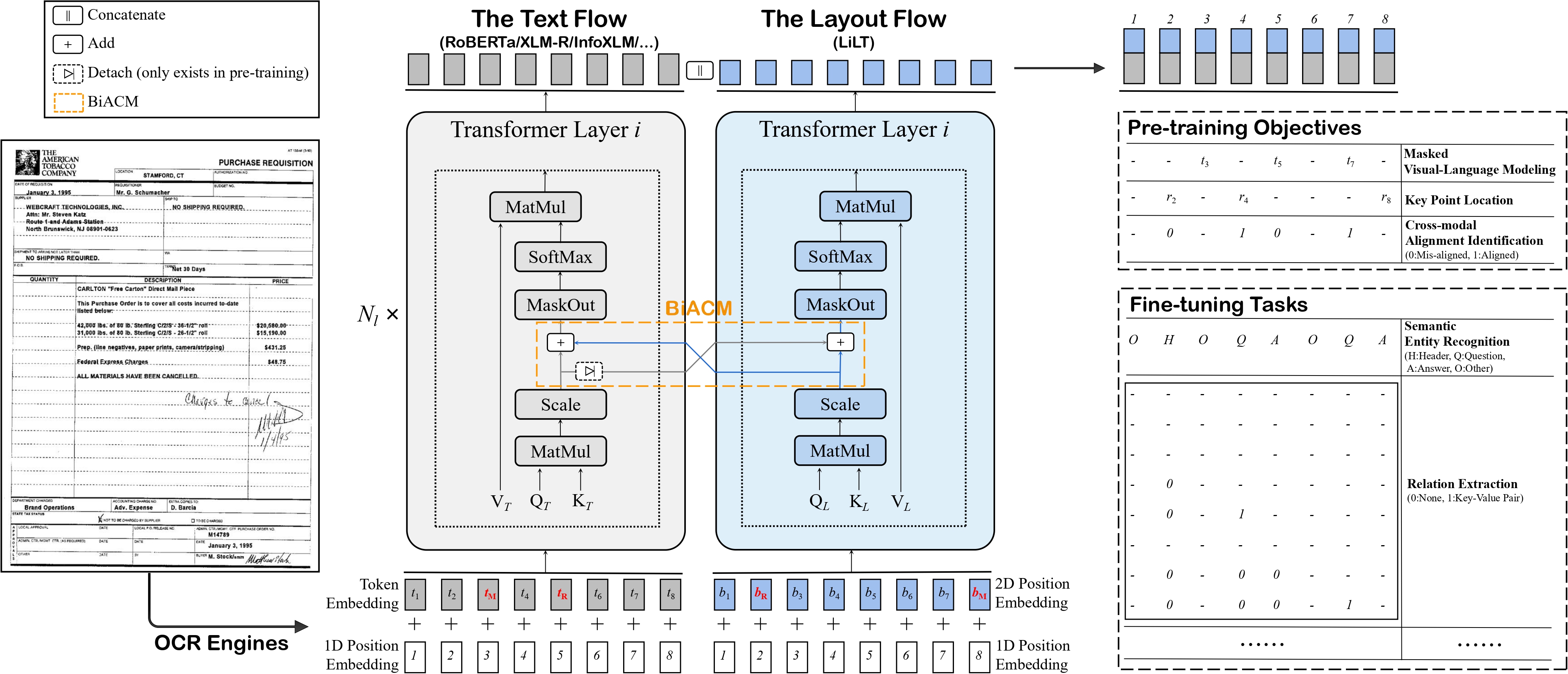

LiLT (Language-independent Layout Transformer) provides a LayoutLM-like model for any language by pairing a pre-trained RoBERTa text encoder with a pre-trained, language-independent layout transformer. This checkpoint uses the English RoBERTa base encoder.

Downloads 12.05k

Release Time: 9/29/2022

Model Overview

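This model is intended to be fine-tuned on document understanding tasks such as document image classification, document parsing, and document question answering. Because the layout module is language-independent, the same approach extends to multilingual document understanding when it is paired with a RoBERTa encoder for the target language.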

Model Features

Language-agnostic

Can be combined with RoBERTa models in any language to support multilingual document understanding

Lightweight Layout Transformer

The LiLT module is lightweight and efficient, focusing on processing document layout information
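
As an illustration of what "document layout information" typically looks like in practice, the sketch below rescales pixel-space word boxes to integer coordinates. It assumes the 0 to 1000 coordinate range commonly used by LayoutLM-style models, which this page does not state explicitly; the helper name `normalize_box` and the sample words and boxes are hypothetical.

```python
from typing import List, Tuple

# (x0, y0, x1, y1) in pixels, as an OCR engine might report them.
Box = Tuple[float, float, float, float]


def clamp(value: float, upper: float) -> float:
    """Keep a coordinate inside [0, upper]."""
    return min(max(value, 0.0), upper)


def normalize_box(box: Box, page_width: float, page_height: float,
                  scale: int = 1000) -> List[int]:
    """Rescale a pixel-space box to integers in [0, scale].

    The default scale of 1000 is an assumption based on the usual
    LayoutLM-style convention, not something stated in this listing.
    """
    x0, y0, x1, y1 = box
    return [
        int(scale * clamp(x0, page_width) / page_width),
        int(scale * clamp(y0, page_height) / page_height),
        int(scale * clamp(x1, page_width) / page_width),
        int(scale * clamp(y1, page_height) / page_height),
    ]


# Hypothetical OCR output for a 1000 x 800 pixel page.
words = ["Invoice", "No.", "12345"]
pixel_boxes = [(50, 40, 150, 60), (160, 40, 200, 60), (210, 40, 300, 60)]
normalized = [normalize_box(b, page_width=1000, page_height=800)
              for b in pixel_boxes]
```

Each word then carries one normalized box, so the layout stream stays aligned with the text stream regardless of the page's pixel dimensions.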

Pre-trained model compatibility

Can be used with any pre-trained RoBERTa encoder available in the Hub

Model Capabilities

Document image classification

Document parsing

Document question answering

Structured document understanding

Use Cases

Document processing

Invoice processing

Extract key information from multilingual invoices
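
Key information extraction of this kind is often framed as token classification. The sketch below shows only the post-processing step, under the assumption that a fine-tuned model has already produced one BIO label per word; the function `group_entities` and the label names `INVOICE_ID` and `TOTAL` are hypothetical and do not come from this page.

```python
from typing import Dict, List


def group_entities(words: List[str], labels: List[str]) -> Dict[str, List[str]]:
    """Merge word-level BIO labels into a mapping of field -> extracted strings."""
    fields: Dict[str, List[str]] = {}
    open_type = None  # entity type of the span currently being built, if any

    for word, label in zip(words, labels):
        prefix, _, entity = label.partition("-")
        if prefix == "I" and entity == open_type:
            # Continuation of the current span: extend its text.
            fields[entity][-1] += " " + word
        elif prefix in ("B", "I"):
            # "B-" starts a new span; a stray "I-" is treated the same way.
            fields.setdefault(entity, []).append(word)
            open_type = entity
        else:
            # "O" (or any unrecognised label) closes the open span.
            open_type = None
    return fields


# Hypothetical predictions for one invoice line.
words = ["Invoice", "No.", "12345", "Total", "EUR", "99.50"]
labels = ["O", "O", "B-INVOICE_ID", "O", "B-TOTAL", "I-TOTAL"]
extracted = group_entities(words, labels)
```

Because the grouping works on labels rather than on the words themselves, the same step applies unchanged to invoices in other languages.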

Table parsing

Parse tabular data in complex documents

Smart office

Contract analysis

Automatically analyze key clauses in contract documents
