license: apache-2.0

datasets:

- FreedomIntelligence/ApolloMoEDataset

language:

- ar

- en

- zh

- ko

- ja

- mn

- th

- vi

- lo

- mg

- de

- pt

- es

- fr

- ru

- it

- hr

- gl

- cs

- co

- la

- uk

- bs

- bg

- eo

- sq

- da

- sa

- gn

- sr

- sk

- gd

- lb

- hi

- ku

- mt

- he

- ln

- bm

- sw

- ig

- rw

- ha

metrics:

- accuracy

base_model:

- FreedomIntelligence/Apollo2-7B

pipeline_tag: question-answering

tags:

- biology

- medical

Apollo2-7B-GGUF

Original model: Apollo2-7B

Made by: FreedomIntelligence

Quantization notes

Made with llama.cpp b3938, using an imatrix file based on the Exllamav2 calibration dataset.

This model is meant to run with llama.cpp-compatible apps such as Text-Generation-WebUI, KoboldCpp, Jan, LM Studio and many others.

17.12.2024: Readme update. It seems support for Q4_0_4_4, Q4_0_4_8 and Q4_0_8_8 was removed in recent llama.cpp versions. I'll keep these files, but they may no longer be useful.

03.02.2025: Added Q4_0 and IQ4_NL quants as a substitute for Q4_0_X_Y quants for ARM devices with newer llama.cpp versions.

Original model card

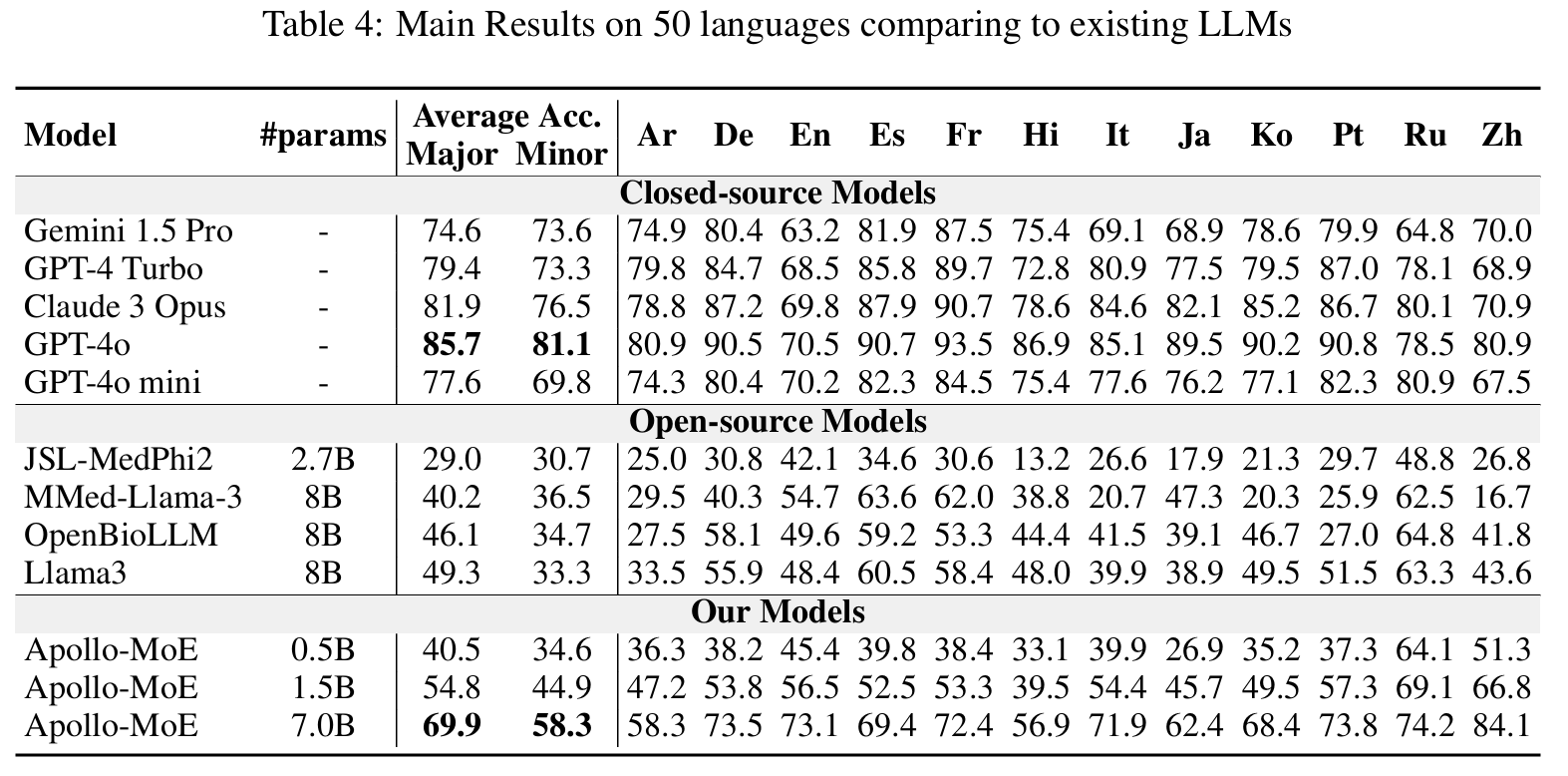

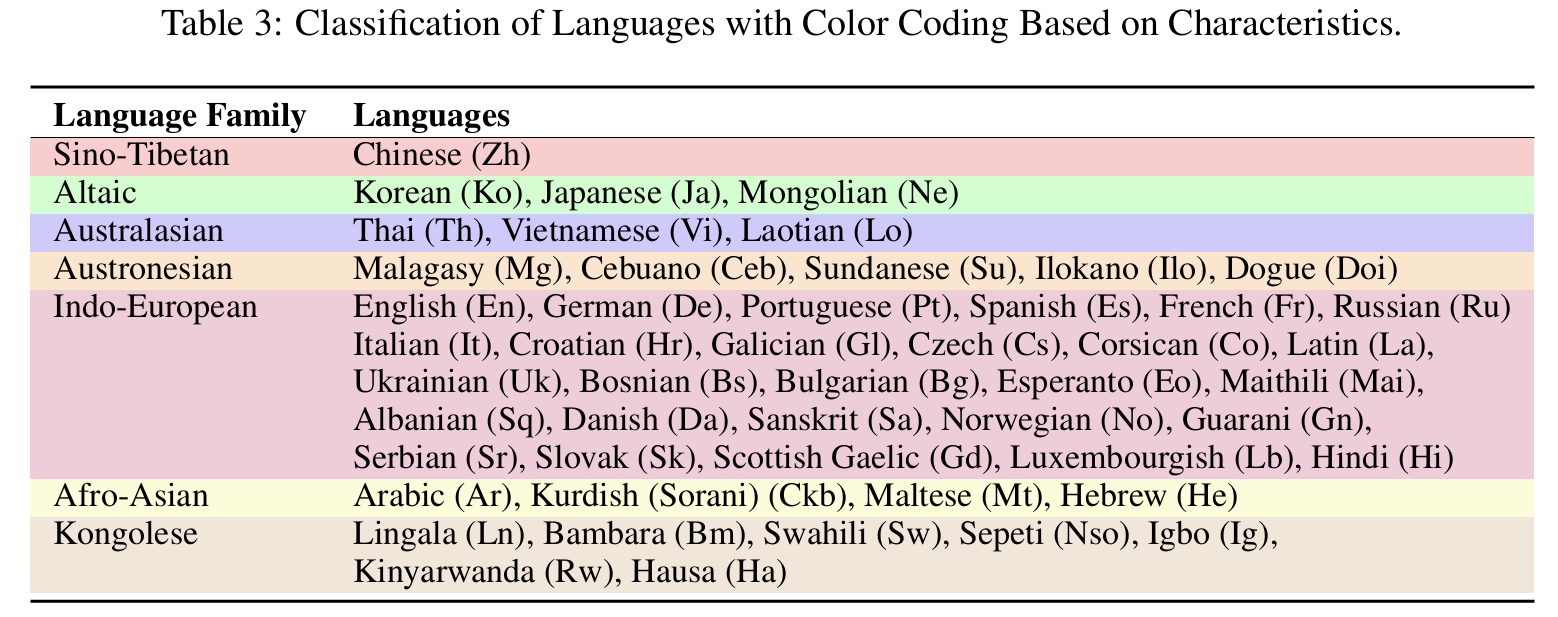

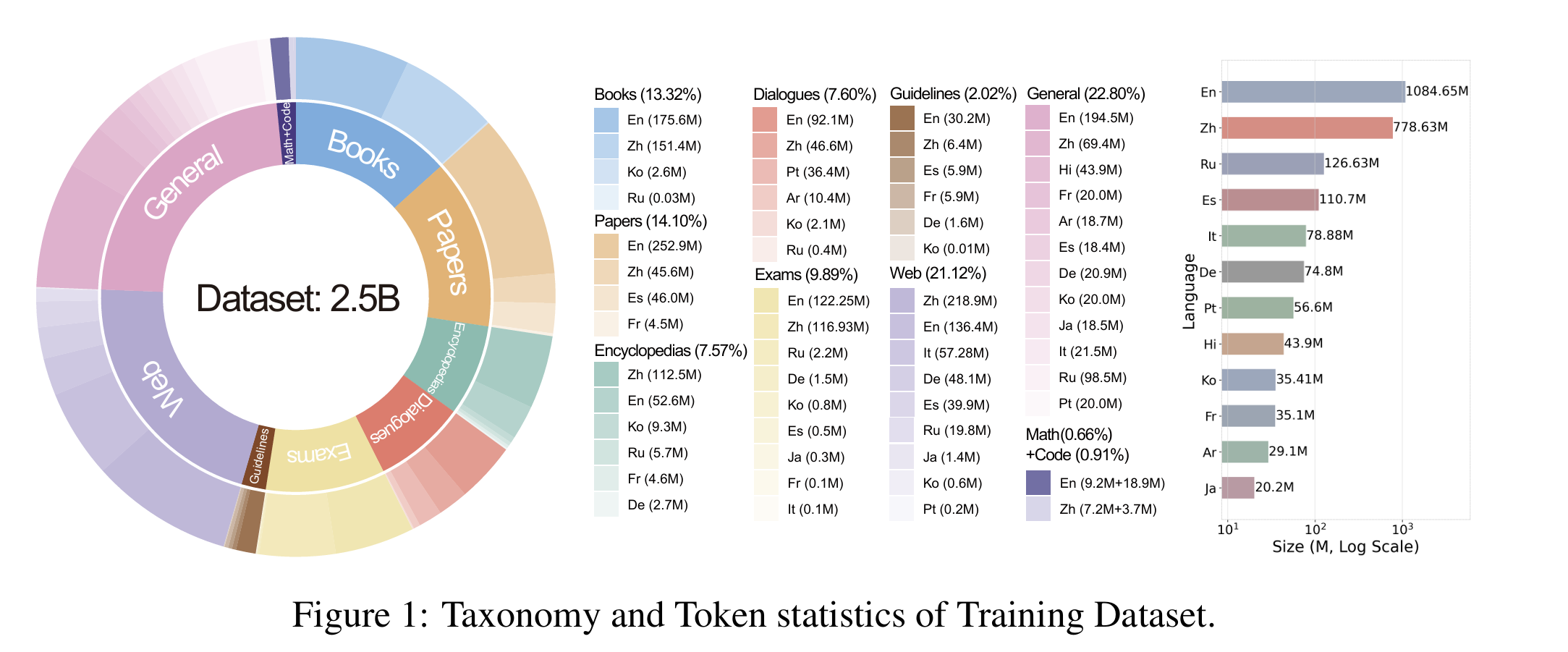

Democratizing Medical LLMs For Much More Languages

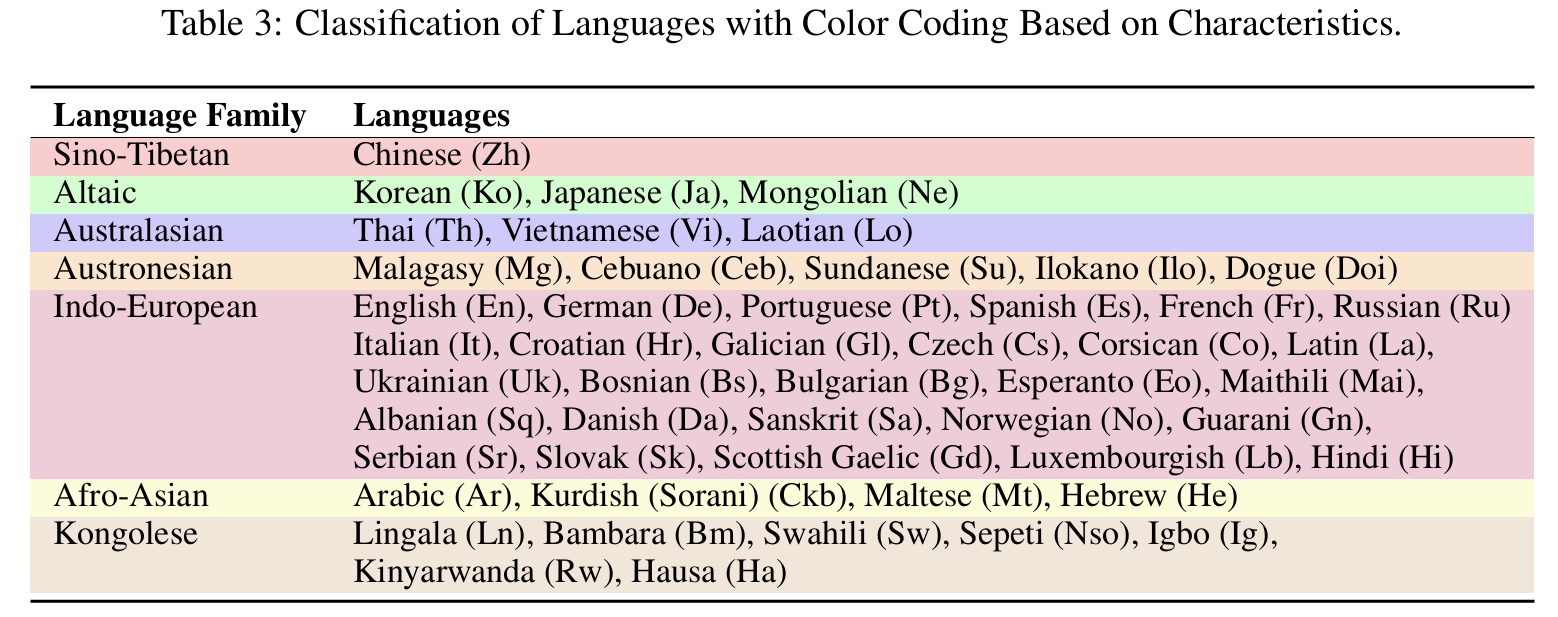

Covering 12 major languages (English, Chinese, French, Hindi, Spanish, Arabic, Russian, Japanese, Korean, German, Italian, Portuguese) and 38 minor languages so far.

📃 Paper • 🌐 Demo • 🤗 ApolloMoEDataset • 🤗 ApolloMoEBench • 🤗 Models • 🌐 Apollo • 🌐 ApolloMoE

🌈 Update

- [2024.10.15] ApolloMoE repo is published!🎉

Languages Coverage

12 Major Languages and 38 Minor Languages


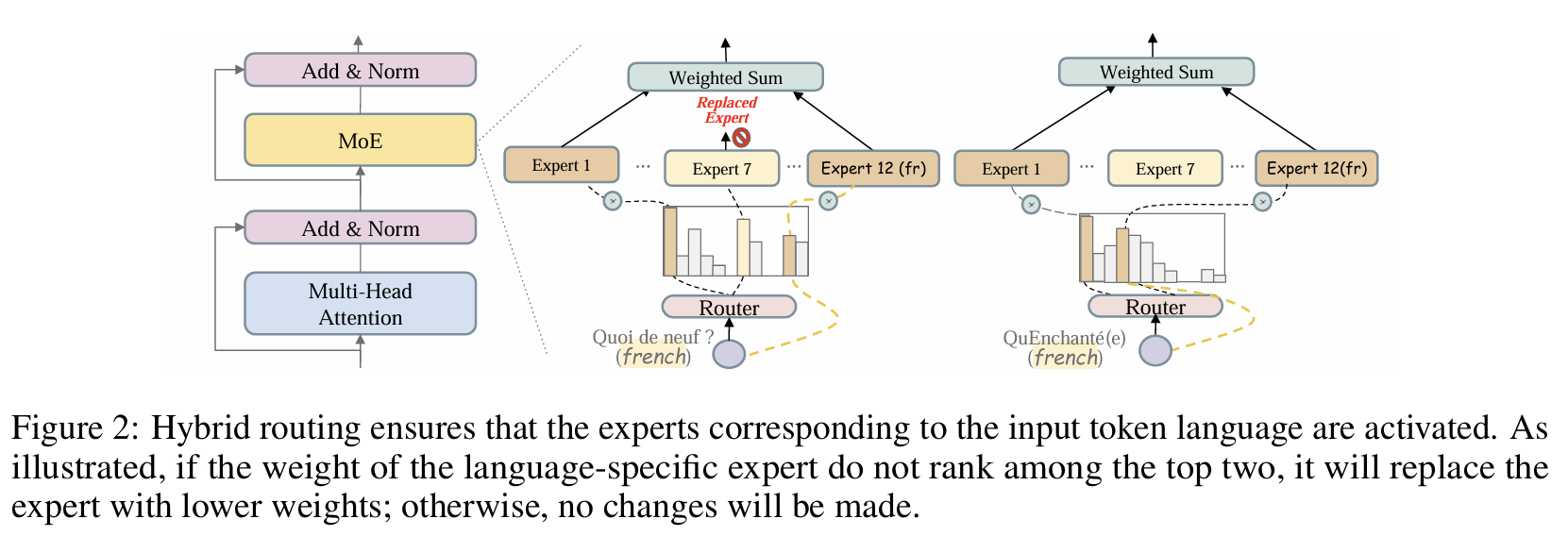

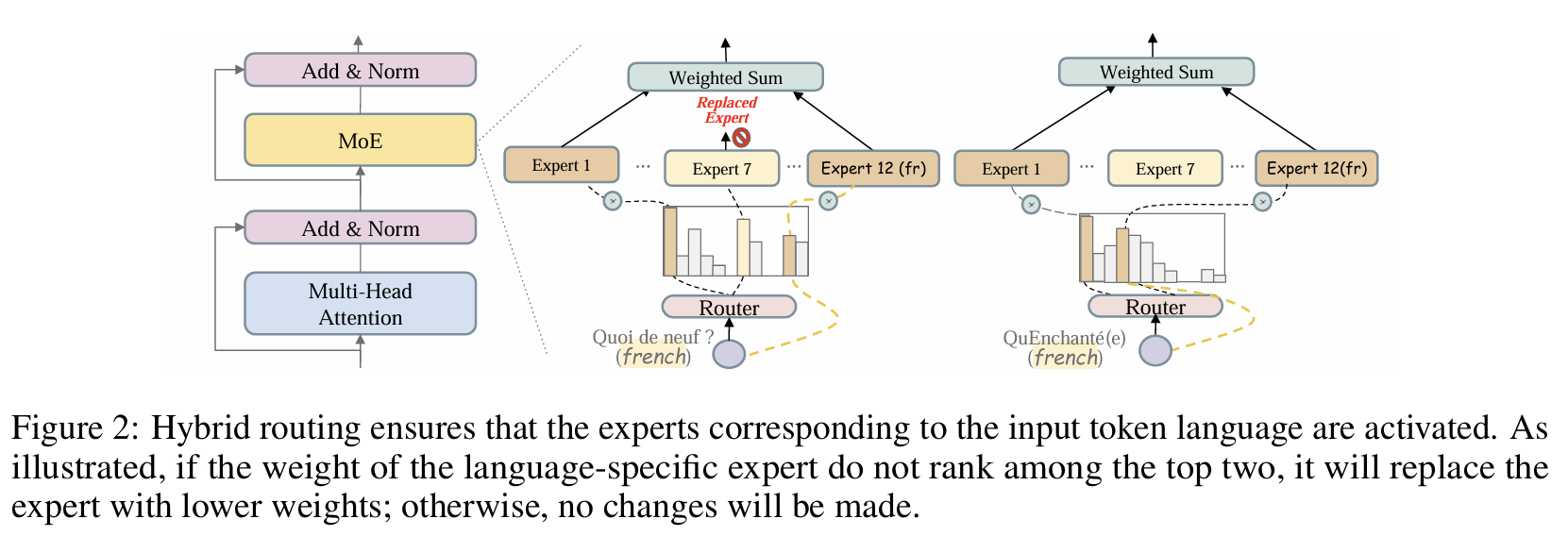

Architecture

Click to view the MoE routing image

Results

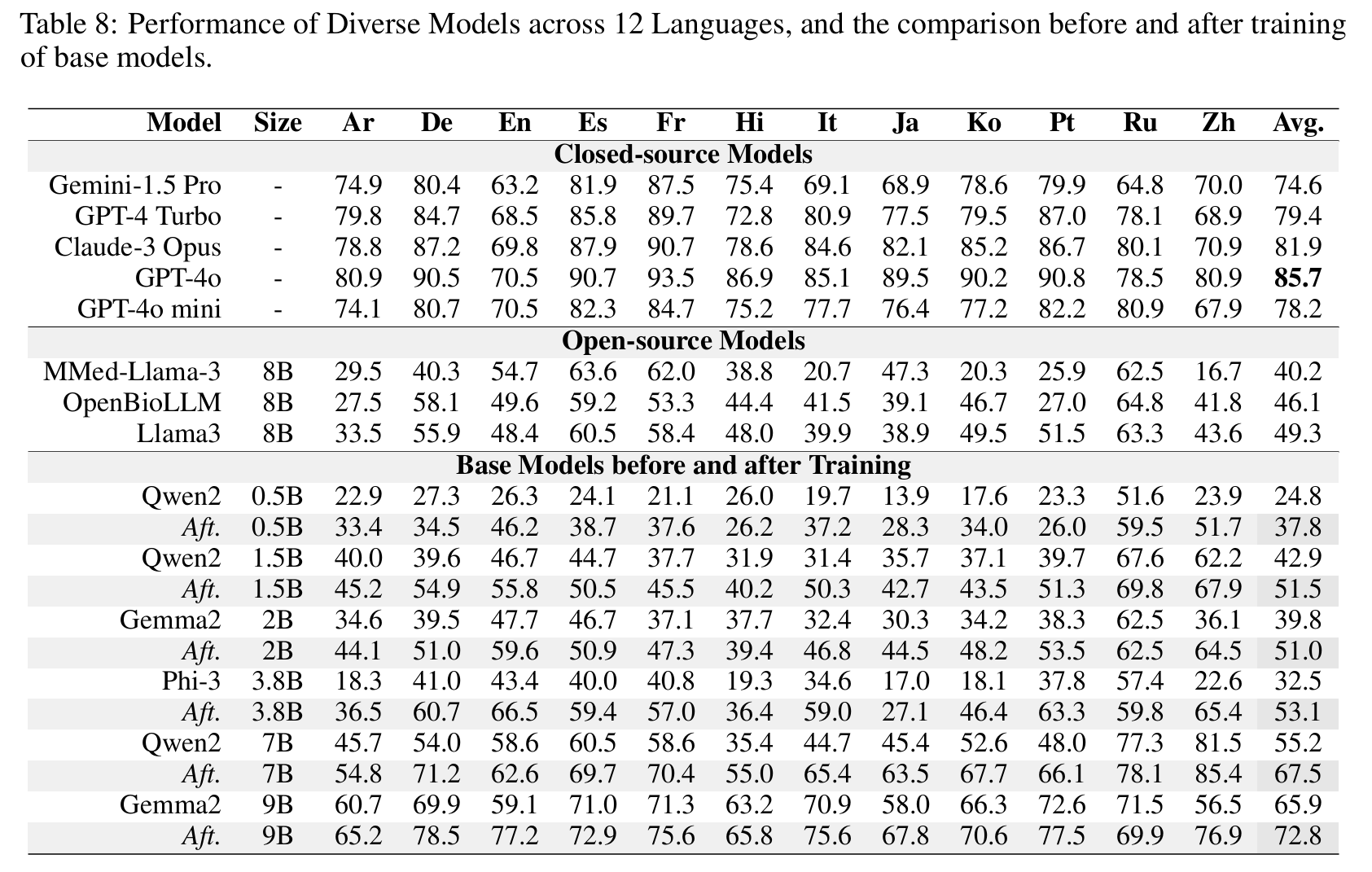

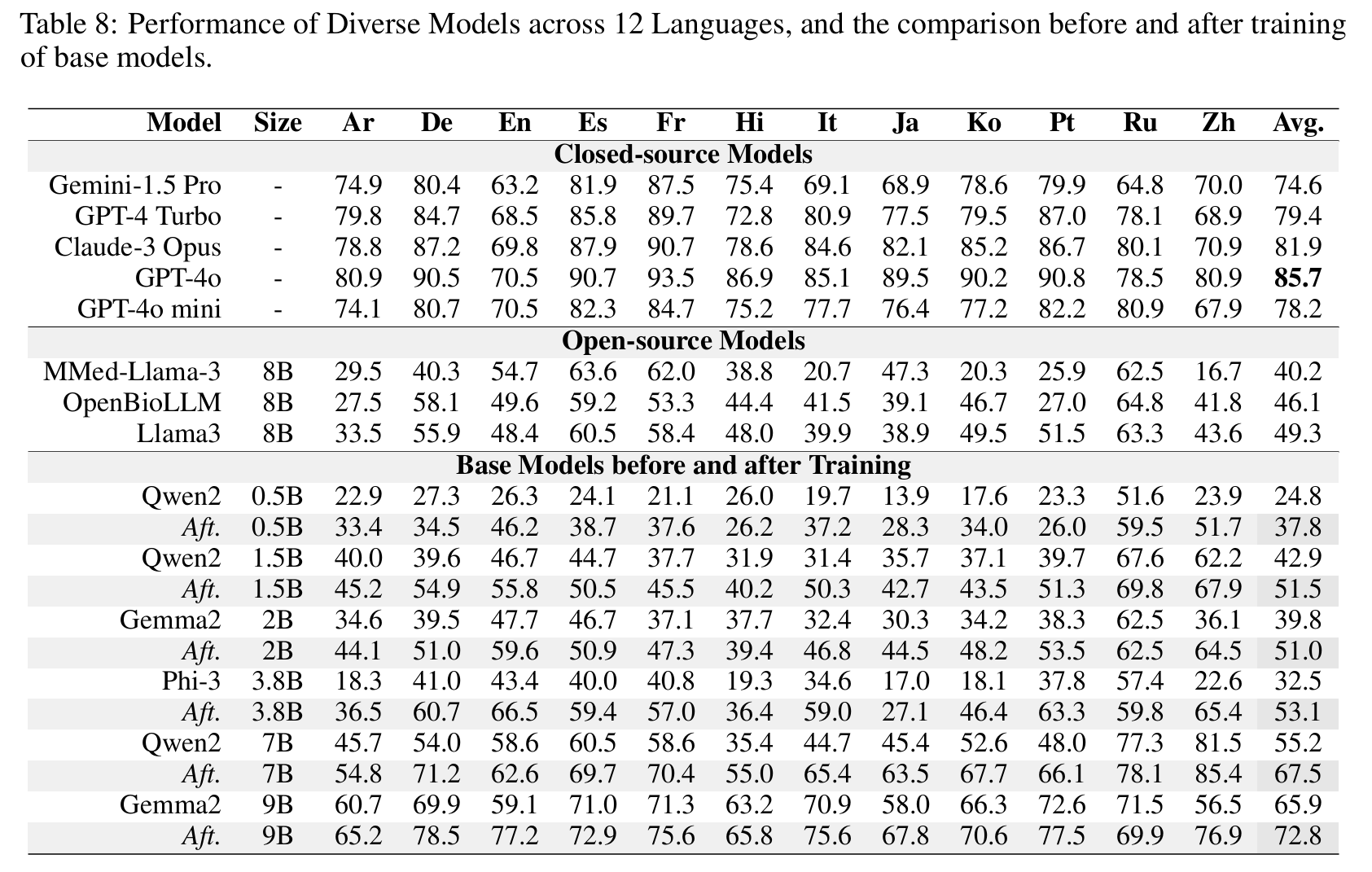

Dense

🤗 Apollo2-0.5B • 🤗 Apollo2-1.5B • 🤗 Apollo2-2B

🤗 Apollo2-3.8B • 🤗 Apollo2-7B • 🤗 Apollo2-9B


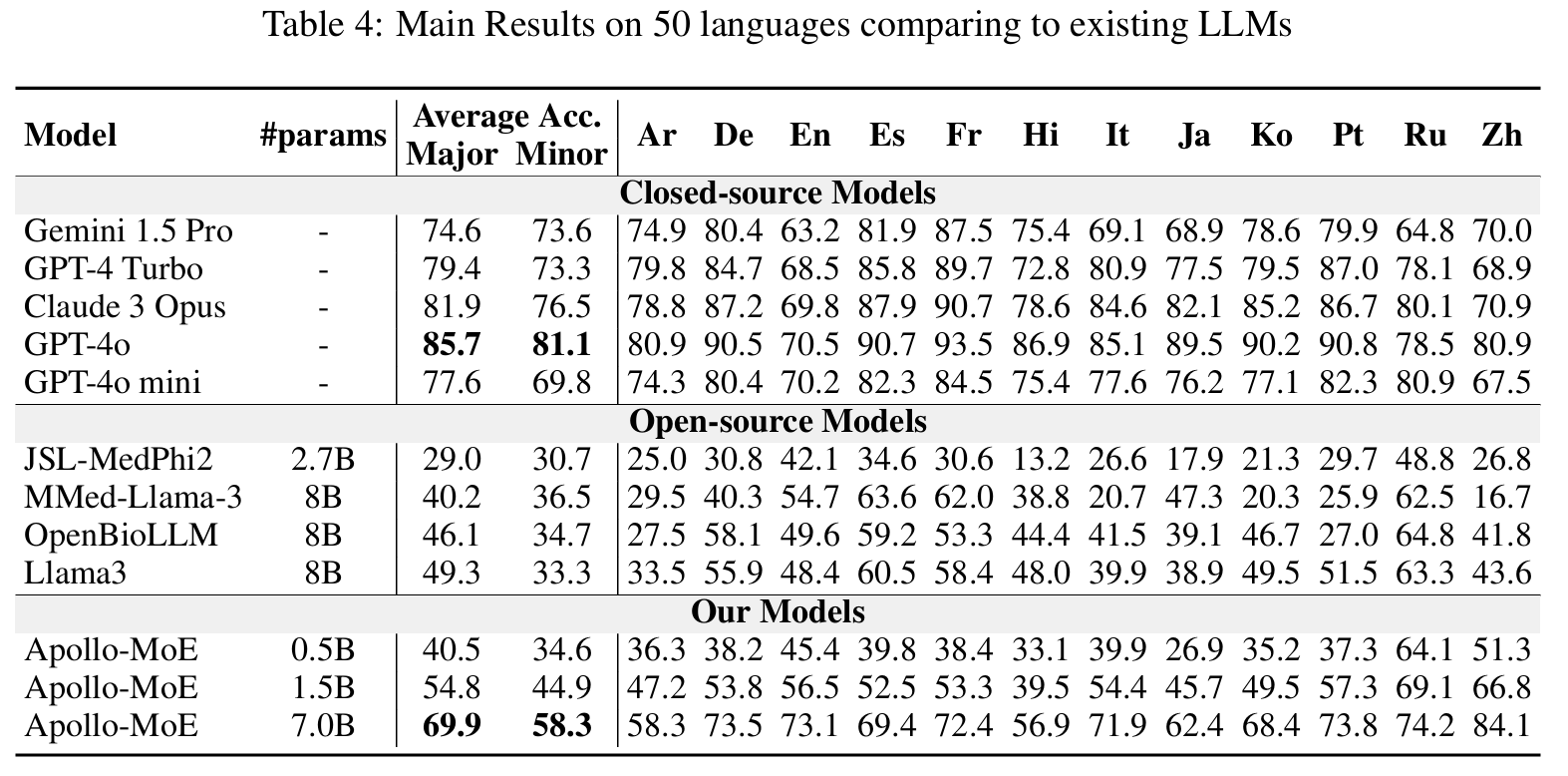

Post-MoE

🤗 Apollo-MoE-0.5B • 🤗 Apollo-MoE-1.5B • 🤗 Apollo-MoE-7B

Click to view the Post-MoE Models Results

Usage Format

Apollo2

- 0.5B, 1.5B, 7B: User:{query}\nAssistant:{response}<|endoftext|>

- 2B, 9B: User:{query}\nAssistant:{response}<eos>

- 3.8B: <|user|>\n{query}<|end|><|assistant|>\n{response}<|end|>

Apollo-MoE

- 0.5B, 1.5B, 7B: User:{query}\nAssistant:{response}<|endoftext|>
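As a minimal sketch of how the `User:`/`Assistant:` templates above might be assembled in code, the helper below builds the inference prompt and a full training-style example for the 0.5B, 1.5B, 7B, 2B and 9B sizes. The names `END_TOKENS`, `build_prompt` and `format_example` are hypothetical and not part of the Apollo code base, and the sketch assumes that `\n` in the templates denotes a real newline character. The 3.8B template is left out.

```python
# Hypothetical helper; names are illustrative, not from the Apollo repository.
# Assumption: "\n" in the templates denotes a real newline character.

# End-of-sequence marker per model size, as listed in the Usage Format section.
END_TOKENS = {
    "0.5B": "<|endoftext|>",
    "1.5B": "<|endoftext|>",
    "7B": "<|endoftext|>",
    "2B": "<eos>",
    "9B": "<eos>",
}


def build_prompt(query: str) -> str:
    """Return the inference prompt, ending where the model should start answering."""
    return f"User:{query}\nAssistant:"


def format_example(query: str, response: str, size: str = "7B") -> str:
    """Return a full training-style example, closed by the size-specific end token."""
    if size not in END_TOKENS:
        raise ValueError(f"no User/Assistant template listed for size {size!r}")
    return build_prompt(query) + response + END_TOKENS[size]


if __name__ == "__main__":
    print(build_prompt("What are common symptoms of anemia?"))
    print(format_example("Hi", "Hello!", size="9B"))
```

At inference time only `build_prompt` is needed; the matching end token can usually be supplied to the inference app as a stop string, depending on what the app supports.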

Dataset & Evaluation

Results reproduction


We take Apollo2-7B or Apollo-MoE-0.5B as an example.

- Download the dataset for the project:

bash 0.download_data.sh

- Prepare test and dev data for a specific model:

  - Create test data with special tokens

bash "1.data_process_test&dev.sh"

- Prepare training data for a specific model (the tokenized data is created in advance):

  - You can adjust the training data order and the number of training epochs in this step

bash 2.data_process_train.sh

- Train the model:

  - To train on multiple nodes, refer to ./src/sft/training_config/zero_multi.yaml

bash 3.single_node_train.sh

- Evaluate your model (generate scores for the benchmark):

bash 4.eval.sh
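The five steps can also be chained from a small driver script. The sketch below is hypothetical: `PIPELINE_SCRIPTS`, `build_commands` and `run_pipeline` are not part of the repository, and it assumes the script is started from the directory that contains the numbered shell scripts. Each command is passed as an argument list, so no shell parsing takes place and the `&` in the second script name is treated as part of the file name.

```python
import subprocess
from typing import List

# Script names copied from the reproduction steps, in execution order.
PIPELINE_SCRIPTS = [
    "0.download_data.sh",
    "1.data_process_test&dev.sh",
    "2.data_process_train.sh",
    "3.single_node_train.sh",
    "4.eval.sh",
]


def build_commands(scripts: List[str]) -> List[List[str]]:
    """Turn script names into argument lists; no shell is involved, so '&' is literal."""
    return [["bash", script] for script in scripts]


def run_pipeline(scripts: List[str], dry_run: bool = True) -> List[List[str]]:
    """Run each script in order, stopping at the first failure.

    With dry_run=True (the default) nothing is executed and the commands
    are only printed.
    """
    commands = build_commands(scripts)
    for cmd in commands:
        print("would run:" if dry_run else "running:", " ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    run_pipeline(PIPELINE_SCRIPTS, dry_run=True)
```

Setting `dry_run=False` executes the scripts for real; because training and evaluation can be long-running, it may be preferable to run the later steps by hand.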

Citation

Please use the following citation if you intend to use our dataset for training or evaluation:

@misc{zheng2024efficientlydemocratizingmedicalllms,

title={Efficiently Democratizing Medical LLMs for 50 Languages via a Mixture of Language Family Experts},

author={Guorui Zheng and Xidong Wang and Juhao Liang and Nuo Chen and Yuping Zheng and Benyou Wang},

year={2024},

eprint={2410.10626},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2410.10626},

}
