🚀 AltCLIP

A simple and efficient method to train a better bilingual CLIP model

🚀 Quick Start

AltCLIP is a bilingual CLIP model that also supports the AltDiffusion model. To get started, download the code from the FlagAI AltCLIP repository and follow the instructions in its README.

✨ Features

- Bilingual Support: AltCLIP supports both Chinese and English, making it suitable for a wide range of applications.

- High Performance: In the text-image retrieval results reported below, AltCLIP∗ achieves the highest mean recall (MR) of all listed models in both English and Chinese.

- Open Source: The model code is open source on FlagAI, and the weights are available on modelhub.

📋 Model Information

| Property | Details |
|---|---|
| Name | AltCLIP |
| Task | text-image representation |
| Language(s) | Chinese & English |
| Model | CLIP |
| Github | FlagAI |

📚 Documentation

Brief Introduction

We propose a simple and efficient method to train a better bilingual CLIP model, named AltCLIP. AltCLIP is trained based on Stable Diffusion, and the training data comes from the WuDao dataset and LAION.

The AltCLIP model can support the AltDiffusion model in this project. For more information about the AltDiffusion model, please refer to this tutorial.

Training

Training consists of two phases.

In the parallel knowledge distillation phase, we use only parallel-corpus texts for distillation (parallel corpora are easier to obtain and available in far larger quantities than image-text pairs). In the bilingual contrastive learning phase, we use a small number of Chinese-English image-text pairs (about 2 million in total) to train the text encoder to better fit the image encoder.
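The distillation phase can be sketched as follows. This is a minimal illustration under stated assumptions, not the FlagAI training code: it assumes the teacher is the original English CLIP text encoder, the student is the new bilingual text encoder, and the objective is a mean-squared error that pulls the student's embeddings of both the English sentence and its Chinese translation toward the teacher's embedding of the English sentence. The encoders are replaced by hypothetical lookup tables of toy vectors.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(
    pairs: Sequence[Tuple[str, str]],
    teacher: Callable[[str], Vector],
    student: Callable[[str], Vector],
) -> float:
    """Average distillation loss over (English, Chinese) parallel sentence pairs.

    Assumed objective: the teacher embeds only the English side, and the
    student is pushed to map both the English sentence and its Chinese
    translation onto that teacher embedding.
    """
    total = 0.0
    for english, chinese in pairs:
        target = teacher(english)
        total += mse(student(english), target) + mse(student(chinese), target)
    return total / len(pairs)


# Hypothetical lookup tables standing in for real text encoders.
teacher_table = {"a photo of a cat": [1.0, 0.0]}
student_table = {"a photo of a cat": [0.9, 0.1], "一张猫的照片": [0.8, 0.0]}

loss = distillation_loss(
    [("a photo of a cat", "一张猫的照片")],
    teacher=teacher_table.__getitem__,
    student=student_table.__getitem__,
)
print(round(loss, 4))
```

Because only text is involved, this phase needs no images at all, which is why the cheaper and larger parallel corpora can be used.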

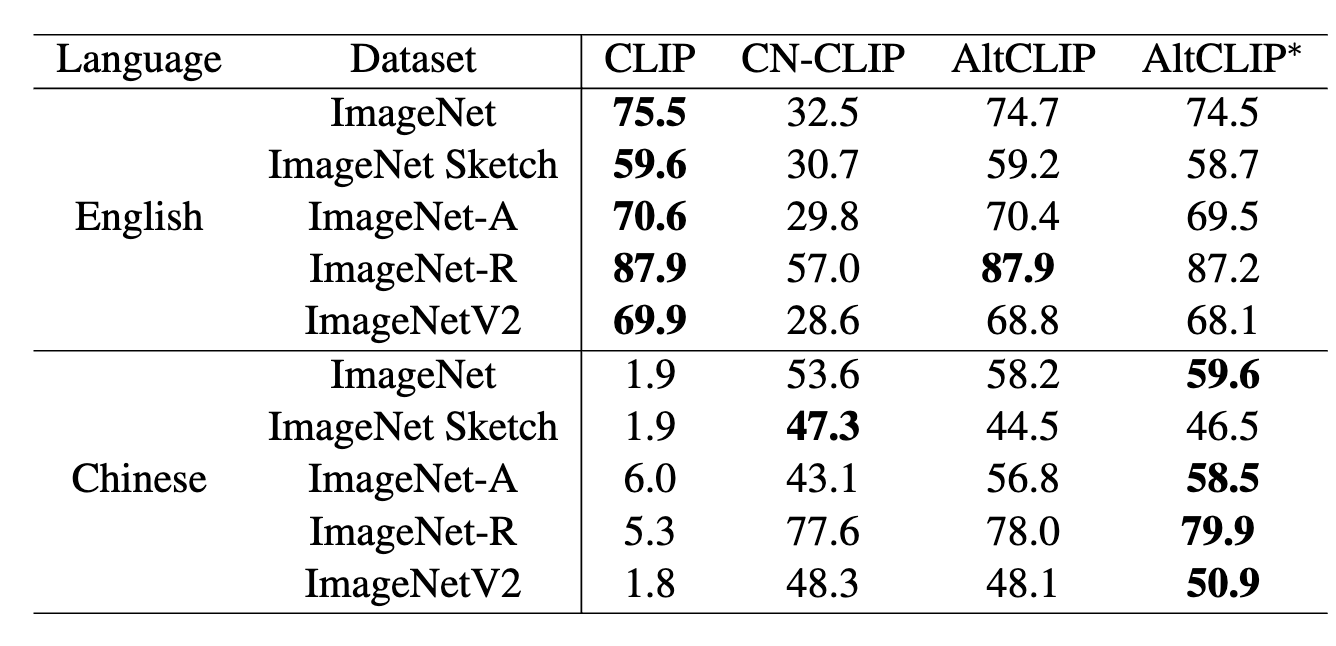

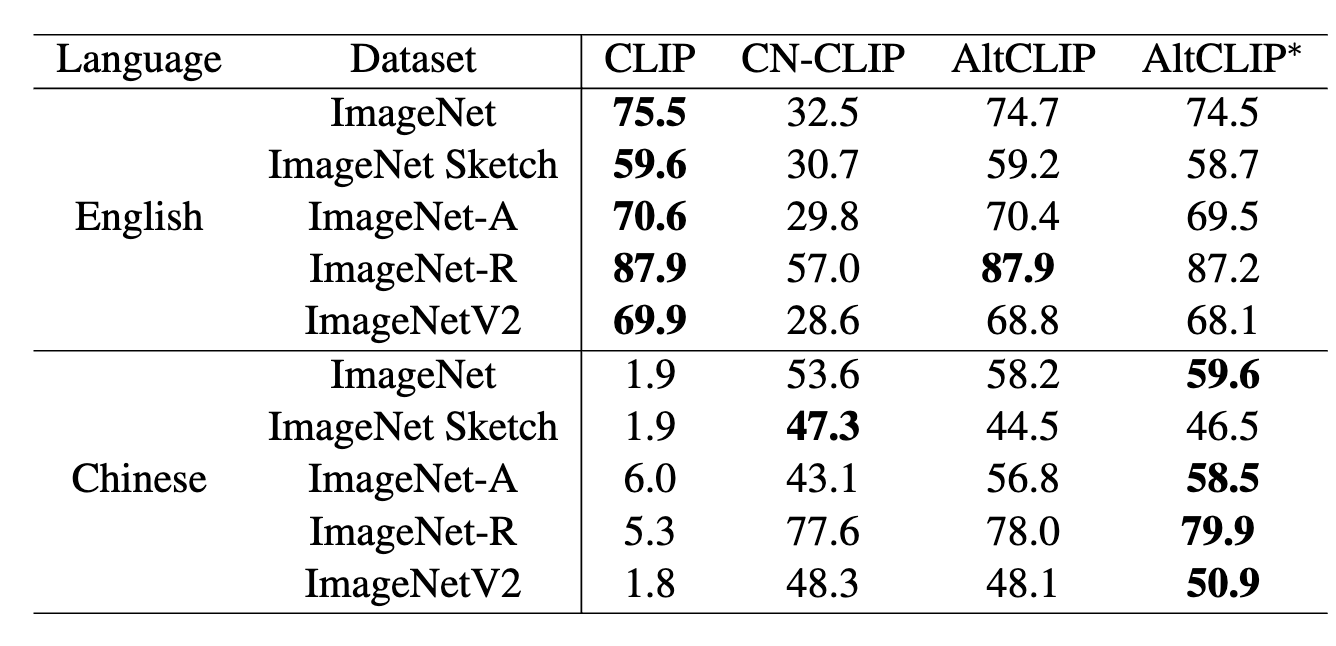

Performance

The following table shows the performance of AltCLIP and other models in text-to-image and image-to-text retrieval tasks:

| Language | Method | Text-to-Image Retrieval R@1 | Text-to-Image Retrieval R@5 | Text-to-Image Retrieval R@10 | Image-to-Text Retrieval R@1 | Image-to-Text Retrieval R@5 | Image-to-Text Retrieval R@10 | MR (mean recall) |
|---|---|---|---|---|---|---|---|---|
| English | CLIP | 65.0 | 87.1 | 92.2 | 85.1 | 97.3 | 99.2 | 87.6 |
| English | Taiyi | 25.3 | 48.2 | 59.2 | 39.3 | 68.1 | 79.6 | 53.3 |
| English | Wukong | - | - | - | - | - | - | - |
| English | R2D2 | - | - | - | - | - | - | - |
| English | CN-CLIP | 49.5 | 76.9 | 83.8 | 66.5 | 91.2 | 96.0 | 77.3 |
| English | AltCLIP | 66.3 | 87.8 | 92.7 | 85.9 | 97.7 | 99.1 | 88.3 |
| English | AltCLIP∗ | 72.5 | 91.6 | 95.4 | 86.0 | 98.0 | 99.1 | 90.4 |
| Chinese | CLIP | 0.0 | 2.4 | 4.0 | 2.3 | 8.1 | 12.6 | 5.0 |
| Chinese | Taiyi | 53.7 | 79.8 | 86.6 | 63.8 | 90.5 | 95.9 | 78.4 |
| Chinese | Wukong | 51.7 | 78.9 | 86.3 | 76.1 | 94.8 | 97.5 | 80.9 |
| Chinese | R2D2 | 60.9 | 86.8 | 92.7 | 77.6 | 96.7 | 98.9 | 85.6 |
| Chinese | CN-CLIP | 68.0 | 89.7 | 94.4 | 80.2 | 96.6 | 98.2 | 87.9 |
| Chinese | AltCLIP | 63.7 | 86.3 | 92.1 | 84.7 | 97.4 | 98.7 | 87.2 |
| Chinese | AltCLIP∗ | 69.8 | 89.9 | 94.7 | 84.8 | 97.4 | 98.8 | 89.2 |
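The MR column appears to be the mean of the six recall values in each row (R@1, R@5 and R@10 in both retrieval directions); recomputing it this way matches the reported values up to rounding. A small helper, using the English AltCLIP∗ row as input:

```python
from typing import Sequence


def mean_recall(text_to_image: Sequence[float], image_to_text: Sequence[float]) -> float:
    """Average of R@1, R@5 and R@10 over both retrieval directions."""
    recalls = list(text_to_image) + list(image_to_text)
    if len(recalls) != 6:
        raise ValueError("expected three recall values per direction")
    return sum(recalls) / len(recalls)


# English AltCLIP∗ row: (R@1, R@5, R@10) for text-to-image, then image-to-text.
mr = mean_recall([72.5, 91.6, 95.4], [86.0, 98.0, 99.1])
print(f"{mr:.1f}")  # the table reports 90.4 for this row
```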

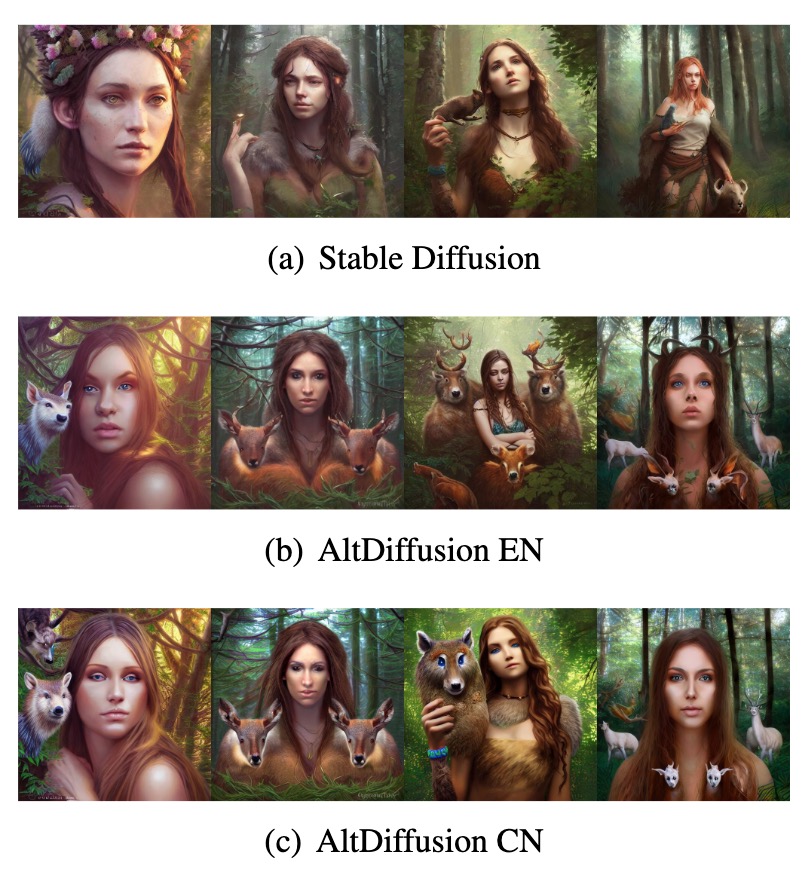

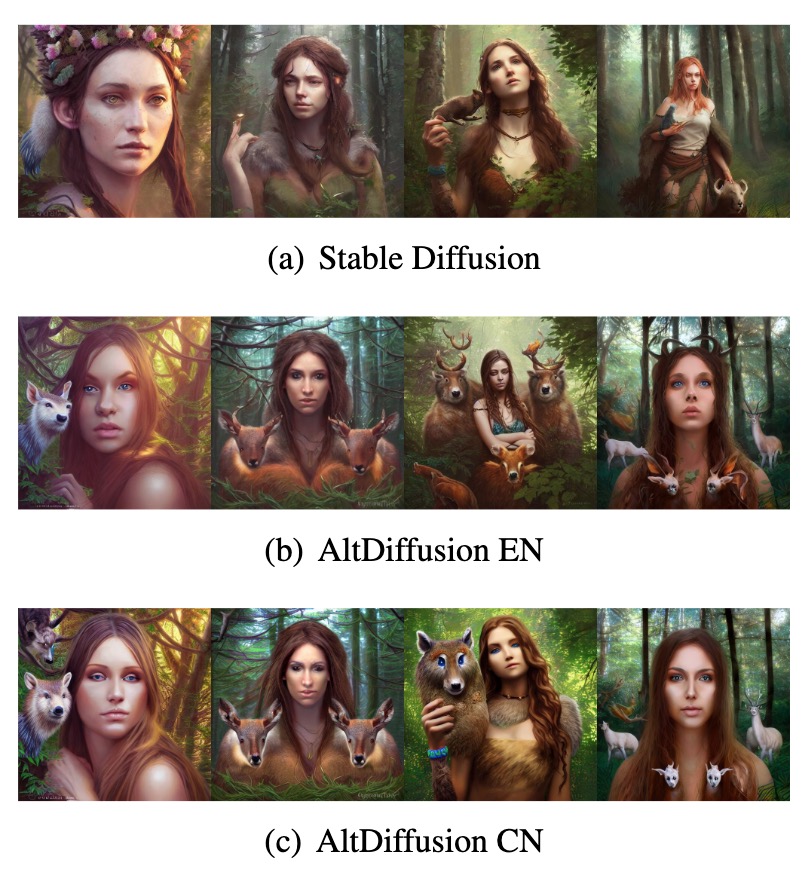

Visualization effects

Based on AltCLIP, we have also developed the AltDiffusion model, visualized as follows:

💻 Usage Examples

Basic Usage

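```python
from PIL import Image
import requests
import torch

# AltCLIP model and processor classes from the project's own modules
from modeling_altclip import AltCLIP
from processing_altclip import AltCLIPProcessor

# Load the pretrained weights and the matching preprocessor
model = AltCLIP.from_pretrained("BAAI/AltCLIP")
processor = AltCLIPProcessor.from_pretrained("BAAI/AltCLIP")

# Download a sample image from the COCO validation set
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# Tokenize the candidate captions and preprocess the image
inputs = processor(text=["a photo of a cat", "a photo of a dog"], images=image, return_tensors="pt", padding=True)

# Inference only, so gradient tracking is disabled
with torch.no_grad():
    outputs = model(**inputs)

logits_per_image = outputs.logits_per_image  # image-text similarity scores
probs = logits_per_image.softmax(dim=1)  # probabilities over the candidate captions
print(probs)
```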
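To make the `logits_per_image.softmax(dim=1)` step concrete, here is a dependency-free sketch of how CLIP-style models usually produce those scores. It follows the original CLIP design and is an assumption about AltCLIP's internals: image and text embeddings are L2-normalized, their cosine similarities are multiplied by a learned temperature (the `logit_scale=100.0` default below is an assumed value), and the softmax normalizes each image's row across the candidate texts. The 2-D embeddings are hypothetical toy values, not model outputs.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def similarity_logits(
    images: Sequence[Sequence[float]],
    texts: Sequence[Sequence[float]],
    logit_scale: float = 100.0,
) -> List[List[float]]:
    """Scaled cosine similarities with shape (num_images, num_texts)."""
    imgs = [l2_normalize(v) for v in images]
    txts = [l2_normalize(v) for v in texts]
    return [[logit_scale * sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]


def softmax(row: Sequence[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical embeddings: one image, two candidate captions ("cat", "dog").
image_embeds = [[0.9, 0.1]]
text_embeds = [[1.0, 0.0], [0.0, 1.0]]

logits = similarity_logits(image_embeds, text_embeds)
probs = [softmax(row) for row in logits]  # per-row softmax, like .softmax(dim=1)
best = max(range(len(probs[0])), key=probs[0].__getitem__)
print(best, [round(p, 4) for p in probs[0]])
```

Normalizing over `dim=1` (texts) rather than `dim=0` (images) is what turns each row into a distribution over captions for a single image, i.e. zero-shot classification.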

🔧 Technical Details

Training Phases

The training of AltCLIP consists of two phases:

- Parallel Knowledge Distillation Phase: We use parallel corpus texts for distillation. Parallel corpus is easier to obtain and larger in quantity compared to image-text pairs.

- Bilingual Contrastive Learning Phase: We use a small number (about 2 million) of Chinese-English image-text pairs to train the text encoder to better match the image encoder.
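The contrastive phase can be illustrated with the symmetric InfoNCE loss used by the original CLIP. This is an assumption for illustration: the exact objective is not spelled out here, and in this sketch the loss is computed from a precomputed similarity matrix in which entry `[i][j]` scores image `i` against text `j`, so the matched pairs sit on the diagonal. During this phase only the text encoder would be updated to fit the image encoder.

```python
import math
from typing import Sequence


def cross_entropy(row: Sequence[float], target: int) -> float:
    """-log softmax(row)[target], computed via log-sum-exp for stability."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return log_sum - row[target]


def clip_contrastive_loss(logits: Sequence[Sequence[float]]) -> float:
    """Symmetric InfoNCE over a square image-text similarity matrix.

    logits[i][j] scores image i against text j; pair (i, i) is the positive.
    """
    n = len(logits)
    if any(len(row) != n for row in logits):
        raise ValueError("logits must be square (one text per image)")
    # Image-to-text direction: each row should pick its own caption.
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-image direction: each column should pick its own image.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_to_image = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


# Toy batch of two pairs: aligned scores favor the diagonal, shuffled do not.
aligned = clip_contrastive_loss([[5.0, 0.0], [0.0, 5.0]])
shuffled = clip_contrastive_loss([[0.0, 5.0], [5.0, 0.0]])
print(round(aligned, 4), round(shuffled, 4))
```

Each batch of image-text pairs supplies its own negatives (every non-matching caption or image), so a relatively small set of about 2 million pairs can still provide many contrastive comparisons.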

📄 License

This model is licensed under the CreativeML OpenRAIL-M license. By accessing the repository, you agree to the terms of the license and that your contact information (email address and username) may be shared with the model authors.

📚 Citation

If you find this work helpful, please consider citing the following paper:

```bibtex
@article{https://doi.org/10.48550/arxiv.2211.06679,
  doi = {10.48550/ARXIV.2211.06679},
  url = {https://arxiv.org/abs/2211.06679},
  author = {Chen, Zhongzhi and Liu, Guang and Zhang, Bo-Wen and Ye, Fulong and Yang, Qinghong and Wu, Ledell},
  keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences},
  title = {AltCLIP: Altering the Language Encoder in CLIP for Extended Language Capabilities},
  publisher = {arXiv},
  year = {2022},
  copyright = {arXiv.org perpetual, non-exclusive license}
}
```