Metaclip H14 Fullcc2.5b

MetaCLIP is a vision-language model trained on CommonCrawl data. It improves CLIP model performance through an enhanced data filtering method.

Downloads 26.29k

Release Time: 10/9/2023

Model Overview

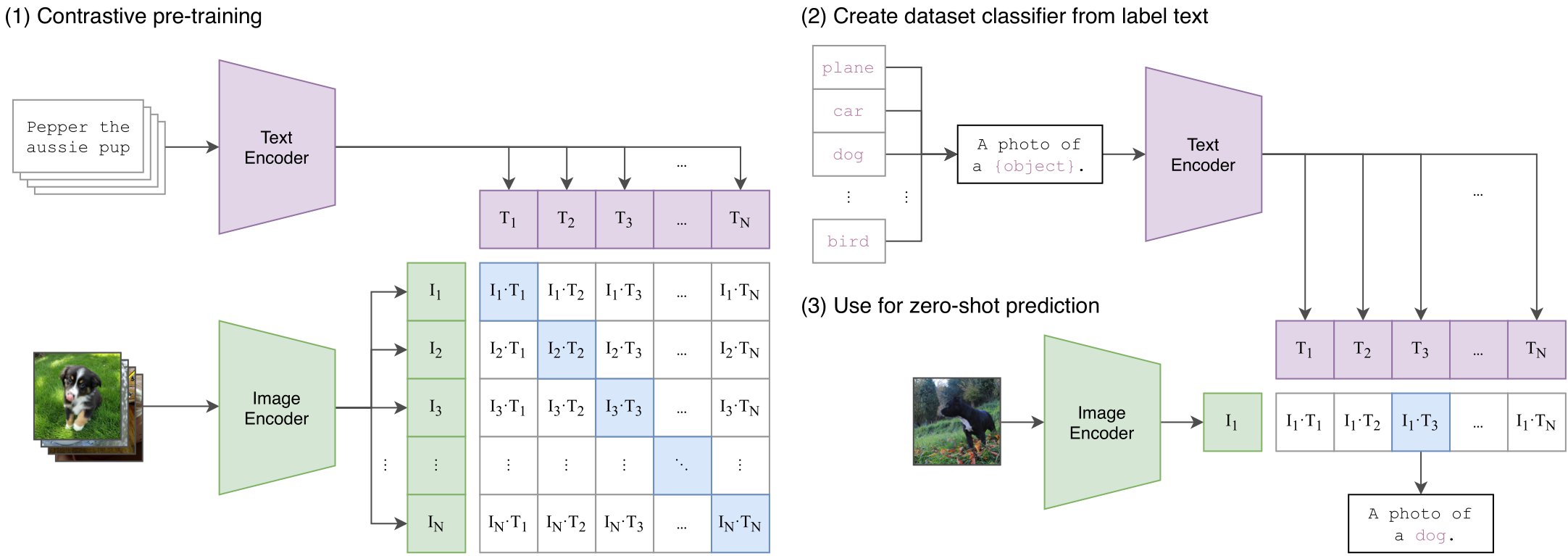

This model uses MetaCLIP to build a shared image-text embedding space. It supports zero-shot image classification and cross-modal retrieval, and aims to address the opacity of CLIP's training data filtering.

Model Features

Enhanced data filtering method

MetaCLIP optimizes how CLIP training data is selected, addressing the opacity of the original CLIP data preprocessing.

High-resolution processing capability

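Uses a ViT-Huge image encoder with a patch size of 14×14 pixels (the "H14" in the model name). Smaller patches produce more tokens per image, which helps capture finer visual features.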
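To make the patch size concrete, here is a minimal arithmetic sketch. It assumes a square 224×224 input, which is a common setting for this model family but is not stated on this page; check the model's preprocessing config for the actual value. The helper name patch_grid is hypothetical.

```python
def patch_grid(image_size: int, patch_size: int) -> tuple[int, int]:
    """Return (patches per side, total patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


# Assumed input size of 224 pixels; patch size 14 comes from the model name.
per_side, total = patch_grid(224, 14)
print(per_side, total)  # 16 256
```

For comparison, a patch size of 32 on the same input would give only 7×7 = 49 patches, so each token would cover a much larger image region.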

Large-scale pretraining

Trained on 2.5 billion image-text pairs from CommonCrawl, giving it strong generalization capabilities.

Model Capabilities

Zero-shot image classification

Text-based image retrieval

Image-based text retrieval

Cross-modal feature extraction
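The capabilities above all rest on comparing image and text embeddings in the shared space. The following is a minimal sketch of the zero-shot classification step only, not the model's actual implementation: the three-dimensional vectors are made-up stand-ins for real encoder outputs, and the names normalize, zero_shot_probs, and the logit_scale value of 100.0 are assumptions chosen for illustration.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_probs(image_emb, text_embs, logit_scale=100.0):
    """Softmax over scaled cosine similarities between one image and N label prompts."""
    img = normalize(image_emb)
    logits = [
        logit_scale * sum(a * b for a, b in zip(img, normalize(t)))
        for t in text_embs
    ]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy embeddings (hypothetical); a real model would produce these from prompts and pixels.
labels = ["a photo of a cat", "a photo of a dog", "a photo of a car"]
text_embs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
image_emb = [0.8, 0.2, 0.1]

probs = zero_shot_probs(image_emb, text_embs)
best = labels[max(range(len(labels)), key=probs.__getitem__)]
print(best)  # a photo of a cat
```

No label-specific training is involved: changing the candidate prompts changes the classifier, which is what makes the approach zero-shot.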

Use Cases

Content retrieval

Multimodal search engine

Retrieve relevant images using text queries, or find related text content using an image as the query.
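A retrieval step of this kind can be sketched as a similarity ranking over precomputed embeddings. This is an illustrative sketch under stated assumptions: the file names and vectors are invented, the helpers cosine and top_k are hypothetical, and a production search engine would typically use an approximate nearest-neighbor index rather than a full scan.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_emb, gallery, k=2):
    """Return the k gallery item names most similar to the query embedding."""
    scored = [(cosine(query_emb, emb), name) for name, emb in gallery.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Toy image embeddings (hypothetical), indexed ahead of time.
gallery = {
    "beach.jpg": [0.9, 0.1, 0.1],
    "forest.jpg": [0.1, 0.9, 0.2],
    "city.jpg": [0.2, 0.1, 0.9],
}

# Stand-in for the text encoder's output for some query string.
query = [0.8, 0.3, 0.1]
print(top_k(query, gallery))  # ['beach.jpg', 'forest.jpg']
```

Because both modalities share one embedding space, the same ranking works in the other direction: embed an image as the query and rank a gallery of text embeddings.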

Intelligent annotation

Automatic image tagging

Assign descriptive labels to unannotated images by scoring each image against a set of candidate labels.
