🚀 CogVideoX-Fun

CogVideoX-Fun is a pipeline built on a modified CogVideoX structure. It is a more flexible version of CogVideoX that can generate AI images and videos and train baseline and LoRA models for the Diffusion Transformer. The pre-trained CogVideoX-Fun model can be used directly for prediction, generating videos at different resolutions that run about 6 seconds at 8 fps (1 to 49 frames). Users can also train their own baseline and LoRA models for style transformation.
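The "about 6 seconds" figure follows directly from the frame count and frame rate. A minimal check, where the helper name is purely illustrative and not part of the repository:

```python
def clip_duration_seconds(num_frames: int, fps: int = 8) -> float:
    """Return the playback length of a clip in seconds."""
    if num_frames < 1 or fps < 1:
        raise ValueError("num_frames and fps must be positive")
    return num_frames / fps


# The maximum of 49 frames at 8 fps gives 6.125 s, i.e. "about 6 seconds".
print(clip_duration_seconds(49))  # 6.125
```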

🚀 Quick Start

1. Cloud Usage: AliyunDSW/Docker

a. Via Alibaba Cloud DSW

DSW offers free GPU time. Users can apply once, and the allocation is valid for 3 months after approval.

Alibaba Cloud provides this free GPU time through its Free Tier. Claim it and use it in Alibaba Cloud PAI-DSW to start CogVideoX-Fun within 5 minutes.

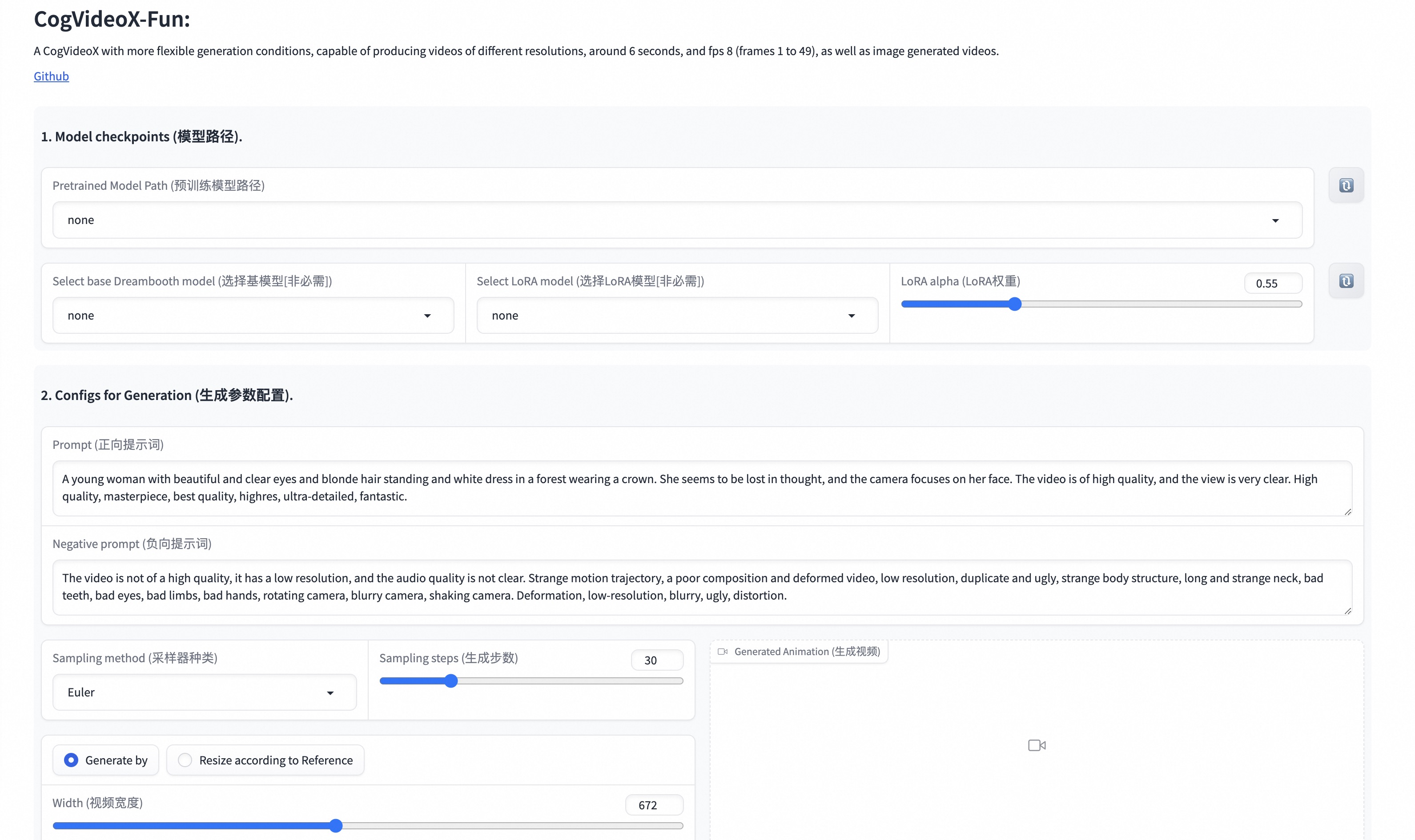

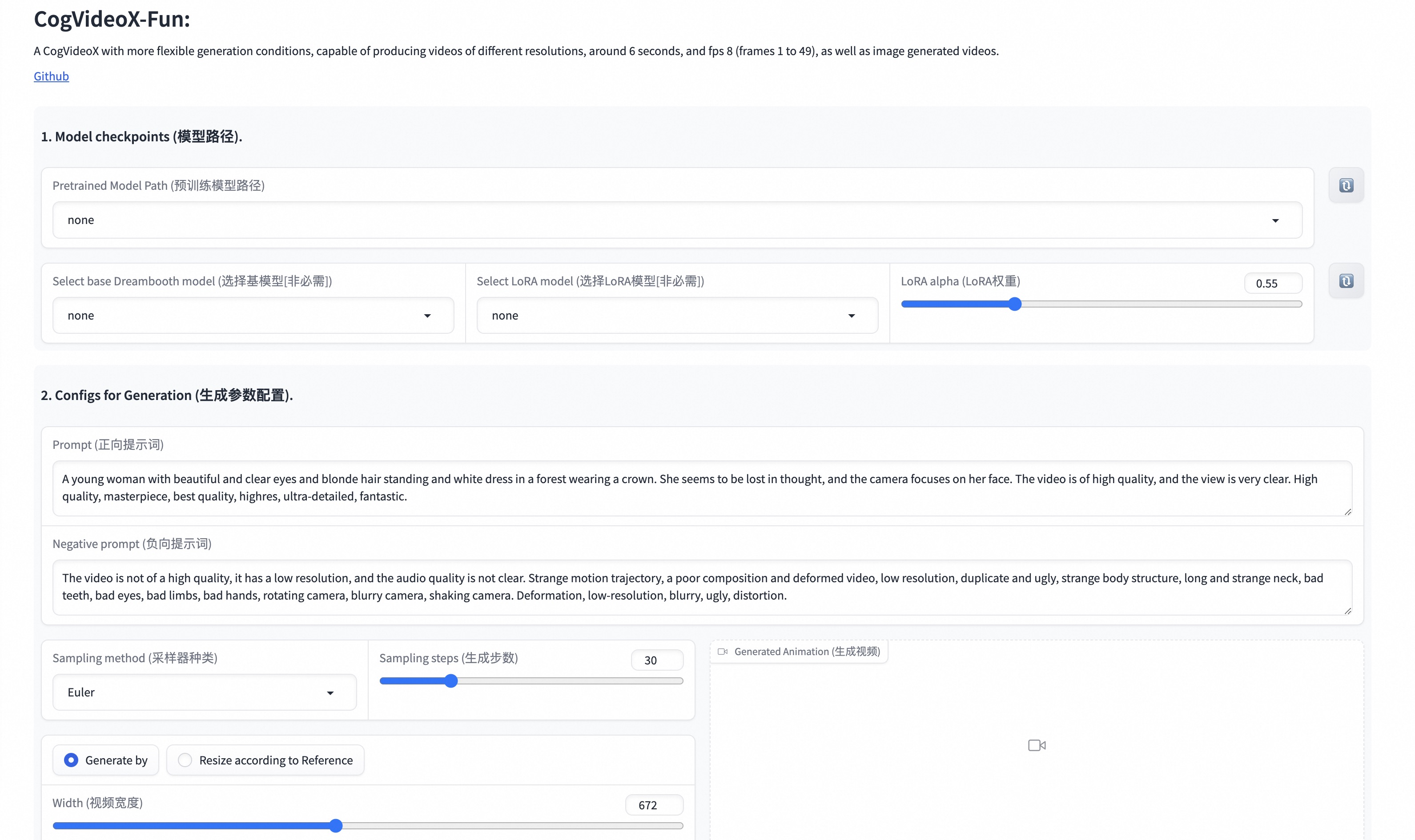

b. Via ComfyUI

For details on the ComfyUI interface, refer to the ComfyUI README.

c. Via Docker

When using Docker, ensure that the GPU driver and CUDA environment are correctly installed on the machine. Then execute the following commands in sequence:

# pull image

docker pull mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:cogvideox_fun

# enter image

docker run -it -p 7860:7860 --network host --gpus all --security-opt seccomp:unconfined --shm-size 200g mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:cogvideox_fun

# clone code

git clone https://github.com/aigc-apps/CogVideoX-Fun.git

# enter CogVideoX-Fun's dir

cd CogVideoX-Fun

# download weights

mkdir -p models/Diffusion_Transformer

mkdir -p models/Personalized_Model

wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/cogvideox_fun/Diffusion_Transformer/CogVideoX-Fun-2b-InP.tar.gz -O models/Diffusion_Transformer/CogVideoX-Fun-2b-InP.tar.gz

cd models/Diffusion_Transformer/

tar -xvf CogVideoX-Fun-2b-InP.tar.gz

cd ../../
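The weight download steps above can also be scripted. The following is a sketch in Python using only the standard library; the function names are illustrative assumptions and do not exist in the repository, while the URL and folder names are the ones used in the commands above.

```python
import tarfile
import urllib.request
from pathlib import Path

# URL taken from the wget command above.
WEIGHTS_URL = (
    "https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/cogvideox_fun/"
    "Diffusion_Transformer/CogVideoX-Fun-2b-InP.tar.gz"
)


def prepare_model_dirs(root: Path) -> Path:
    """Create both model folders and return the Diffusion_Transformer one."""
    dit_dir = root / "models" / "Diffusion_Transformer"
    dit_dir.mkdir(parents=True, exist_ok=True)
    (root / "models" / "Personalized_Model").mkdir(parents=True, exist_ok=True)
    return dit_dir


def extract_archive(archive: Path, dest: Path) -> None:
    """Unpack a tar archive into dest, like `tar -xvf` run inside dest."""
    with tarfile.open(archive, "r:*") as tar:
        tar.extractall(dest)


def download_weights(root: Path, url: str = WEIGHTS_URL) -> Path:
    """Download the archive into the weights folder and unpack it there."""
    dit_dir = prepare_model_dirs(root)
    archive = dit_dir / url.rsplit("/", 1)[-1]
    urllib.request.urlretrieve(url, archive)
    extract_archive(archive, dit_dir)
    return dit_dir
```

Calling `download_weights(Path("."))` from the CogVideoX-Fun directory would mirror the shell commands. Only extract archives from a source you trust, since tar archives can contain unexpected paths.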

2. Local Installation: Environment Check/Download/Installation

a. Environment Check

We have verified that CogVideoX-Fun can run in the following environments:

Windows Details:

- Operating System: Windows 10

- Python: python3.10 & python3.11

- PyTorch: torch2.2.0

- CUDA: 11.8 & 12.1

- CUDNN: 8+

- GPU: Nvidia-3060 12G & Nvidia-3090 24G

Linux Details:

- Operating System: Ubuntu 20.04, CentOS

- Python: python3.10 & python3.11

- PyTorch: torch2.2.0

- CUDA: 11.8 & 12.1

- CUDNN: 8+

- GPU: Nvidia-V100 16G & Nvidia-A10 24G & Nvidia-A100 40G & Nvidia-A100 80G

Approximately 60 GB of free disk space is required. Please check before installing.
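A quick way to compare a machine against the list above is a small script. This is a sketch under stated assumptions: the function names are made up for illustration, the Python versions and the 60 GB threshold are the ones quoted above, and the PyTorch check only runs if `torch` is importable.

```python
import shutil
import sys

TESTED_PYTHON = {(3, 10), (3, 11)}  # versions listed above
REQUIRED_FREE_GB = 60               # disk space figure quoted above


def free_disk_gb(path: str = ".") -> float:
    """Free space on the filesystem holding `path`, in GiB."""
    return shutil.disk_usage(path).free / 1024**3


def check_environment(path: str = ".") -> list:
    """Return human-readable notes; an empty list means nothing was flagged."""
    notes = []
    version = sys.version_info[:2]
    if version not in TESTED_PYTHON:
        notes.append(f"Python {version[0]}.{version[1]} is not among the tested versions")
    free = free_disk_gb(path)
    if free < REQUIRED_FREE_GB:
        notes.append(f"Only {free:.1f} GB free, about {REQUIRED_FREE_GB} GB needed")
    try:
        import torch
    except ImportError:
        notes.append("PyTorch is not installed")
    else:
        if not torch.cuda.is_available():
            notes.append("PyTorch cannot see a CUDA device")
    return notes
```

Running `print(check_environment())` in the target directory lists anything that differs from the verified setups. A note is not necessarily an error, since other configurations may also work.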

b. Weight Placement

It's recommended to place the weights in the specified paths:

📦 models/

├── 📂 Diffusion_Transformer/

│ ├── 📂 CogVideoX-Fun-2b-InP/

│ └── 📂 CogVideoX-Fun-5b-InP/

├── 📂 Personalized_Model/

│ └── your trained transformer model / your trained LoRA model (for UI load)
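To confirm that the weights ended up in the layout above, the two folders can be listed with a few lines of Python. This is only a sketch: `list_models` is a hypothetical helper, and how the UI itself discovers models is not described here.

```python
from pathlib import Path


def list_models(models_root: Path) -> dict:
    """Map each expected subfolder to the sorted names found inside it."""
    found = {}
    for sub in ("Diffusion_Transformer", "Personalized_Model"):
        folder = models_root / sub
        # A missing folder is reported as an empty list rather than an error.
        found[sub] = sorted(p.name for p in folder.iterdir()) if folder.is_dir() else []
    return found
```

For example, `list_models(Path("models"))` should show `CogVideoX-Fun-2b-InP` under `Diffusion_Transformer` once that archive has been extracted.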

✨ Features

- New Feature: Create code! Now supports Windows and Linux. Supports video generation with any resolution from 256x256x49 to 1024x1024x49 for 2b and 5b models. [2024.09.18]

- Function Overview:

Our UI interface is as follows:

💻 Usage Examples

Video Works

All the displayed results are generated from image-to-video.

CogVideoX-Fun-5B

Resolution - 1024

Resolution - 768

Resolution - 512

CogVideoX-Fun-2B

Resolution - 768

How to Use

1. Generation

a. Video Generation

i. Run Python File

- Step 1: Download the corresponding weights and place them in the models folder.

- Step 2: Modify prompt, neg_prompt, guidance_scale, and seed in the predict_t2v.py file.

- Step 3: Run the predict_t2v.py file and wait for the generation result. The result will be saved in the samples/cogvideox-fun-videos-t2v folder.

- Step 4: If you want to combine other self-trained backbones and LoRA models, modify predict_t2v.py, including lora_path, as needed.
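As a rough illustration of the settings named in Step 2, the values could be grouped and sanity-checked as below. This is not the content of predict_t2v.py: the class, the validation rules, and the example values are assumptions chosen for the sketch; only the four setting names and the output folder come from the steps above.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class T2VSettings:
    """Hypothetical container for the settings edited in Step 2."""
    prompt: str
    neg_prompt: str = ""
    guidance_scale: float = 6.0  # illustrative value, not a documented default
    seed: int = 43               # illustrative value; fix it for repeatable runs
    save_dir: Path = Path("samples/cogvideox-fun-videos-t2v")  # folder from Step 3

    def __post_init__(self) -> None:
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.guidance_scale <= 0:
            raise ValueError("guidance_scale must be positive")
        if self.seed < 0:
            raise ValueError("seed must be non-negative")


settings = T2VSettings(prompt="A group of young men walking down a city street.")
print(settings.save_dir)
```

Keeping the seed fixed while changing only the prompt or guidance_scale makes it easier to compare results between runs.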

ii. Via UI Interface

- Step 1: Download the corresponding weights and place them in the models folder.

- Step 2: Run the app.py file and enter the Gradio page.

- Step 3: Select the generation model on the page, fill in prompt, neg_prompt, guidance_scale, seed, etc., click "Generate", and wait for the generation result. The result will be saved in the sample folder.

iii. Via ComfyUI

For details, refer to ComfyUI README.

2. Model Training

A complete CogVideoX-Fun training pipeline should include data preprocessing and Video DiT training.

a. Data Preprocessing

We provide a simple demo for training a LoRA model with image data. For details, refer to the wiki.

For a complete data preprocessing pipeline covering long-video segmentation, cleaning, and description, refer to the README of the video caption section.

If you want to train a text-to-image and video generation model, you need to arrange the dataset in this format:

📦 project/

├── 📂 datasets/

│   ├── 📂 internal_datasets/

│       ├── 📂 train/

│       │   ├── 📄 00000001.mp4

│       │   ├── 📄 00000002.jpg

│       │   └── 📄 .....

│       └── 📄 json_of_internal_datasets.json

json_of_internal_datasets.json is a standard JSON file. The file_path in the JSON can be set as a relative path, as shown below:

[

{

"file_path": "train/00000001.mp4",

"text": "A group of young men in suits and sunglasses are walking down a city street.",

"type": "video"

},

{

"file_path": "train/00000002.jpg",

"text": "A group of young men in suits and sunglasses are walking down a city street.",

"type": "image"

},

.....

]
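A metadata file in this shape can be generated from a mapping of relative file paths to captions. The sketch below is one possible approach, not a tool from the repository: the helper names are hypothetical, and the extension sets used to pick `"video"` or `"image"` are assumptions.

```python
import json
from pathlib import Path

VIDEO_EXTS = {".mp4"}                   # assumed set of video extensions
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}  # assumed set of image extensions


def build_metadata(dataset_root: Path, captions: dict) -> list:
    """Build entries with file_path relative to dataset_root."""
    entries = []
    for rel_path, text in sorted(captions.items()):
        suffix = Path(rel_path).suffix.lower()
        if suffix in VIDEO_EXTS:
            kind = "video"
        elif suffix in IMAGE_EXTS:
            kind = "image"
        else:
            raise ValueError(f"unsupported file type: {rel_path}")
        if not (dataset_root / rel_path).is_file():
            raise FileNotFoundError(dataset_root / rel_path)
        entries.append({"file_path": rel_path, "text": text, "type": kind})
    return entries


def write_metadata(entries: list, out_path: Path) -> None:
    """Write the entries as a standard JSON array."""
    out_path.write_text(json.dumps(entries, indent=2, ensure_ascii=False), encoding="utf-8")
```

With `dataset_root` set to `datasets/internal_datasets`, a caption key such as `"train/00000001.mp4"` yields the first entry shown above.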

You can also set the path as an absolute path:

[

{

"file_path": "/mnt/data/videos/00000001.mp4",

"text": "A group of young men in suits and sunglasses are walking down a city street.",

"type": "video"

},

{

"file_path": "/mnt/data/train/00000001.jpg",

"text": "A group of young men in suits and sunglasses are walking down a city street.",

"type": "image"

},

.....

]
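Before training, it can help to check that every entry has the three fields and points at an existing file, whichever path style is used. A sketch, assuming relative paths are resolved against a dataset root and that `"video"` and `"image"` are the only types in use (the two shown in the examples); the helper names are hypothetical:

```python
import os

REQUIRED_KEYS = {"file_path", "text", "type"}
ALLOWED_TYPES = {"video", "image"}  # the two values shown in the examples


def resolve_path(data_root: str, file_path: str) -> str:
    """Join root and path; os.path.join ignores the root for absolute paths."""
    return os.path.join(data_root, file_path)


def find_problems(entries: list, data_root: str = "") -> list:
    """Return a description of each malformed or unresolvable entry."""
    problems = []
    for index, entry in enumerate(entries):
        missing = REQUIRED_KEYS - entry.keys()
        if missing:
            problems.append(f"entry {index}: missing keys {sorted(missing)}")
            continue
        if entry["type"] not in ALLOWED_TYPES:
            problems.append(f"entry {index}: unexpected type {entry['type']!r}")
        if not os.path.isfile(resolve_path(data_root, entry["file_path"])):
            problems.append(f"entry {index}: file not found: {entry['file_path']}")
    return problems
```

An empty result means every entry passed. For absolute paths, `data_root` can be left as the empty string.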

b. Video DiT Training

If the metadata from data preprocessing uses relative paths, open scripts/train.sh and set the following:

export DATASET_NAME="datasets/internal_datasets/"

export DATASET_META_NAME="datasets/internal_datasets/json_of_internal_datasets.json"

...

train_data_format="normal"

If the metadata uses absolute paths, open scripts/train.sh and set the following:

export DATASET_NAME=""

export DATASET_META_NAME="/mnt/data/json_of_internal_datasets.json"
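The choice between the two configurations can be derived from the metadata file itself. The sketch below inspects the `file_path` values and returns the matching values for the two variables; `dataset_settings` is a hypothetical helper, and it assumes a file should use one path style throughout.

```python
import json
import os


def dataset_settings(meta_path: str, data_root: str) -> dict:
    """Pick DATASET_NAME based on whether the metadata paths are absolute."""
    with open(meta_path, encoding="utf-8") as handle:
        entries = json.load(handle)
    if not entries:
        raise ValueError("metadata file contains no entries")
    absolute = [os.path.isabs(entry["file_path"]) for entry in entries]
    if all(absolute):
        name = ""  # absolute paths need no dataset root
    elif not any(absolute):
        name = data_root  # relative paths are resolved against the root
    else:
        raise ValueError("metadata mixes absolute and relative paths")
    return {"DATASET_NAME": name, "DATASET_META_NAME": meta_path}
```

For the relative-path example above, `dataset_settings("datasets/internal_datasets/json_of_internal_datasets.json", "datasets/internal_datasets/")` returns the same two values as the first configuration.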

Finally, run scripts/train.sh:

sh scripts/train.sh

For details on some parameter settings, refer to Readme Train and Readme Lora.

📚 Documentation

Model Address

| Name | Storage Space | Hugging Face | Model Scope | Description |
|--|--|--|--|--|
| CogVideoX-Fun-2b-InP.tar.gz | 9.7 GB before decompression / 13.0 GB after decompression | 🤗Link | 😄Link | Official image-to-video weights. Supports video prediction at multiple resolutions (512, 768, 1024, 1280), trained with 49 frames at 8 fps |
| CogVideoX-Fun-5b-InP.tar.gz | 16.0 GB before decompression / 20.0 GB after decompression | 🤗Link | 😄Link | Official image-to-video weights. Supports video prediction at multiple resolutions (512, 768, 1024, 1280), trained with 49 frames at 8 fps |
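The sizes in the table can be added up to estimate how much disk space the weights take. A small sketch using only the figures listed above; the function name is illustrative:

```python
# (archive size, extracted size) in GB, copied from the table above.
MODELS_GB = {
    "CogVideoX-Fun-2b-InP": (9.7, 13.0),
    "CogVideoX-Fun-5b-InP": (16.0, 20.0),
}


def disk_needed_gb(names, keep_archives: bool = True) -> float:
    """Sum extracted sizes, plus archive sizes if the .tar.gz files are kept."""
    total = 0.0
    for name in names:
        archive, extracted = MODELS_GB[name]
        total += extracted + (archive if keep_archives else 0.0)
    return round(total, 1)


print(disk_needed_gb(MODELS_GB))                       # 58.7
print(disk_needed_gb(MODELS_GB, keep_archives=False))  # 33.0
```

Deleting each archive after extraction reduces the footprint, although the archive and its extracted folder still coexist while extraction is running.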

Future Plans

References

- CogVideo: https://github.com/THUDM/CogVideo/

- EasyAnimate: https://github.com/aigc-apps/EasyAnimate

📄 License

This project uses the Apache License (Version 2.0).

The CogVideoX-2B model (including its corresponding Transformer module and VAE module) is released under the Apache 2.0 License.

The CogVideoX-5B model (Transformer module) is released under the CogVideoX License.
