🗿 ruGPT-3.5 13B

A language model designed for the Russian language. As the name suggests, this model has 13 billion parameters. It's our largest model to date and was used in the training of GigaChat. For more details, refer to the article.

🚀 Quick Start

ruGPT-3.5 13B is a large language model for generating high-quality Russian text.

✨ Features

- Large-scale model: with 13 billion parameters, it can handle complex language tasks.

- Diverse Training Data: Trained on a wide range of data sources including various domains, code, and legal documents.

- Used in GigaChat: Served as the foundation for training GigaChat.
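To give a rough sense of what 13 billion parameters means in practice, the memory needed just to store the weights is the parameter count times the bytes per parameter. The sketch below assumes exactly 13e9 parameters and the usual dtype sizes (4 bytes for float32, 2 for float16/bfloat16). It ignores activations, the KV cache and framework overhead, so real GPU memory use is higher.

```python
# Rough size of the model weights alone for a 13B-parameter model.
# Assumption: exactly 13e9 parameters; activations, KV cache and
# framework overhead are ignored, so real GPU memory use is higher.
N_PARAMS = 13_000_000_000

BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}


def weight_memory_gib(n_params: int, dtype: str) -> float:
    """Return the memory needed to hold the weights, in GiB (2**30 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gib(N_PARAMS, dtype):5.1f} GiB")
```

In half precision the weights alone come to roughly 24 GiB, so a single GPU needs comfortably more memory than that to run the model.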

💻 Usage Examples

Basic Usage

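The snippet below uses the Hugging Face `transformers` library with PyTorch and a CUDA GPU. The Hub id in `model_name` is an assumption, not stated in this document.

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Assumed Hugging Face Hub id for this checkpoint
model_name = 'ai-forever/ruGPT-3.5-13B'
tokenizer = AutoTokenizer.from_pretrained(model_name)
# Load the weights in half precision and move them to the first GPU
model = AutoModelForCausalLM.from_pretrained(
    model_name, torch_dtype=torch.float16).to('cuda:0')

request = 'Стих про программиста может быть таким:'  # "A poem about a programmer could go like this:"

# Tokenize the prompt without special tokens and move it to the same GPU
encoded_input = tokenizer(request, return_tensors='pt',
                          add_special_tokens=False).to('cuda:0')

# Beam-search sampling: 2 beams, stochastic sampling, up to 100 new tokens
output = model.generate(
    **encoded_input,
    num_beams=2,
    do_sample=True,
    max_new_tokens=100,
)

print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Example output:

```text
Стих про программиста может быть таким:
Программист сидит в кресле,
Стих сочиняет он про любовь,
Он пишет, пишет, пишет, пишет...
И не выходит ни черта!
```

English translation: "A poem about a programmer could go like this: / A programmer sits in an armchair, / He is writing a poem about love, / He writes, writes, writes, writes... / And nothing at all comes of it!"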

Advanced Usage

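```python
request = 'Нейронная сеть — это'  # "A neural network is"

encoded_input = tokenizer(request, return_tensors='pt',
                          add_special_tokens=False).to('cuda:0')

# Same generation settings as the basic example, but with 4 beams
output = model.generate(
    **encoded_input,
    num_beams=4,
    do_sample=True,
    max_new_tokens=100,
)

print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Example output:

```text
Нейронная сеть — это математическая модель, состоящая из большого
количества нейронов, соединенных между собой электрическими связями.
Нейронная сеть может быть смоделирована на компьютере, и с ее помощью
можно решать задачи, которые не поддаются решению с помощью традиционных
математических методов.
```

English translation: "A neural network is a mathematical model consisting of a large number of neurons connected to one another by electrical connections. A neural network can be simulated on a computer, and it can be used to solve problems that cannot be solved with traditional mathematical methods."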

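```python
request = 'Гагарин полетел в космос в'  # "Gagarin flew into space in"

encoded_input = tokenizer(request, return_tensors='pt',
                          add_special_tokens=False).to('cuda:0')

output = model.generate(
    **encoded_input,
    num_beams=2,
    do_sample=True,
    max_new_tokens=100,
)

print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Example output:

```text
Гагарин полетел в космос в 1961 году. Это было первое в истории
человечества космическое путешествие. Юрий Гагарин совершил его
на космическом корабле Восток-1. Корабль был запущен с космодрома
Байконур.
```

English translation: "Gagarin flew into space in 1961. It was the first space journey in human history. Yuri Gagarin made it aboard the Vostok-1 spacecraft. The spacecraft was launched from the Baikonur Cosmodrome."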

📚 Documentation

Dataset

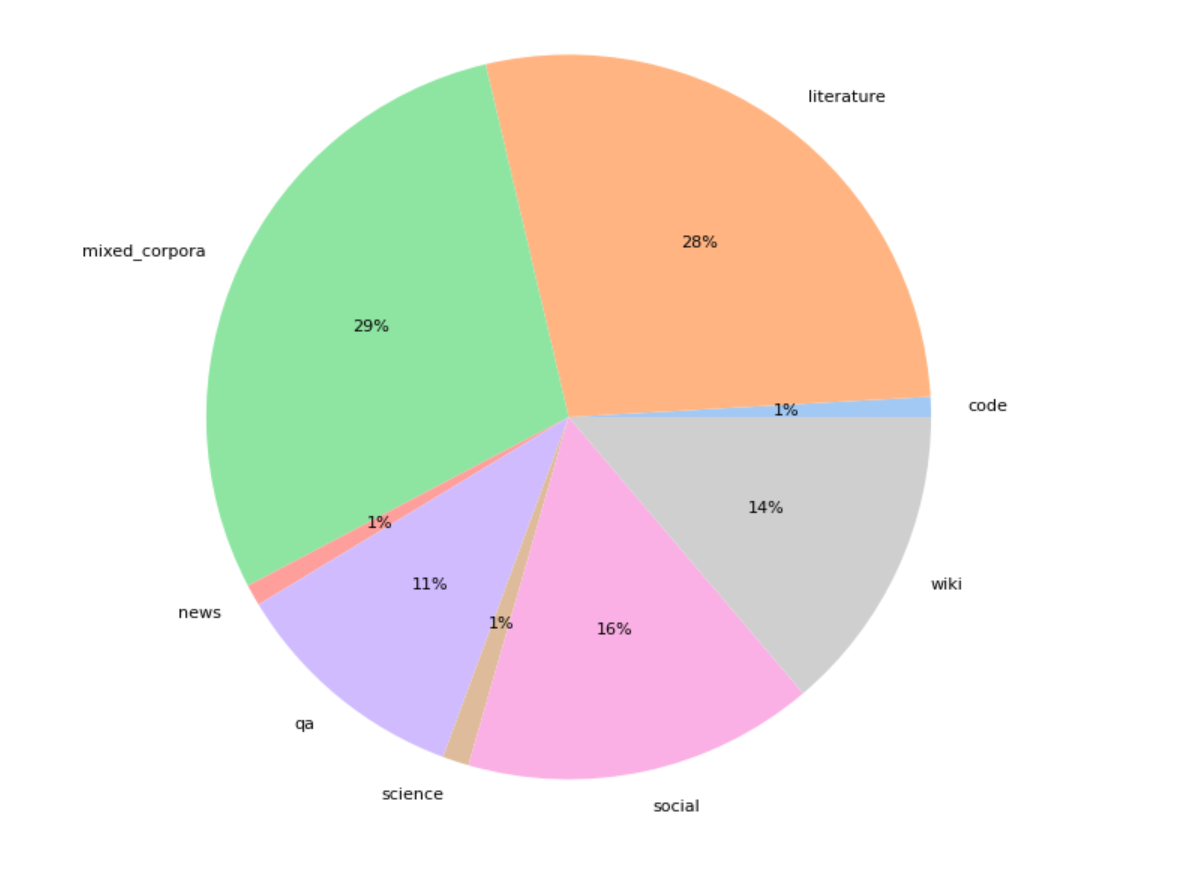

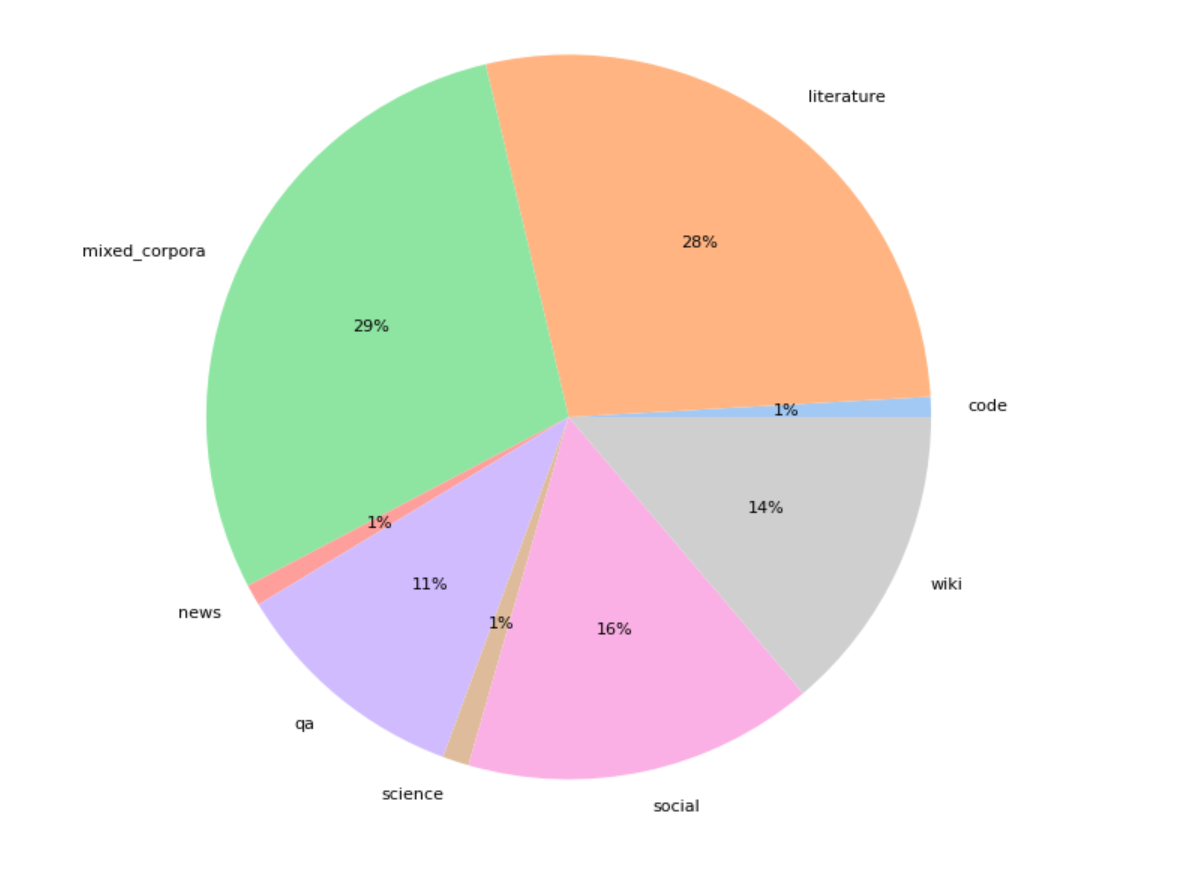

The model was pretrained on 300GB of data from various domains and then additionally trained on 100GB of code and legal documents. The dataset structure is as follows:

The training data was deduplicated: each text in the corpus was hashed to a 64-bit value, and only texts with unique hashes were kept. Documents were also filtered by how well they compress with zlib; the deduplicated texts that compressed most strongly and most weakly were discarded.
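The two filtering steps can be sketched as follows. This is an illustration, not the authors' pipeline: the hash function (BLAKE2b truncated to 8 bytes), keeping the first copy of each duplicate, the ratio definition (compressed size divided by raw size) and the `low`/`high` cutoffs are all assumptions, since the source only specifies 64-bit hashing and zlib.

```python
import hashlib
import zlib


def hash64(text: str) -> int:
    """64-bit hash of a text (assumption: BLAKE2b with an 8-byte digest)."""
    digest = hashlib.blake2b(text.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def deduplicate(texts: list[str]) -> list[str]:
    """Keep the first text seen for each distinct 64-bit hash."""
    seen: set[int] = set()
    unique = []
    for text in texts:
        h = hash64(text)
        if h not in seen:
            seen.add(h)
            unique.append(text)
    return unique


def compression_ratio(text: str) -> float:
    """zlib-compressed size divided by raw UTF-8 size (lower = compresses more)."""
    raw = text.encode("utf-8")
    if not raw:
        return 0.0  # treat empty texts as maximally compressible
    return len(zlib.compress(raw)) / len(raw)


def filter_by_compression(texts: list[str], low: float, high: float) -> list[str]:
    """Drop texts that compress too strongly (ratio < low) or too weakly (ratio > high).

    The thresholds are hypothetical; the source does not give them.
    """
    return [t for t in texts if low <= compression_ratio(t) <= high]
```

Highly repetitive text (for example, boilerplate repeated many times) gets a very low ratio, while very short or noise-like text can even exceed 1 because of zlib's fixed overhead; a two-sided cutoff removes both extremes.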

Technical Details

The model was trained with the DeepSpeed and Megatron libraries on a 300B-token dataset for 3 epochs, which took around 45 days on 512 V100 GPUs. It was then fine-tuned for 1 epoch with a sequence length of 2048 on the additional code and legal data described above, which took around 20 days on 200 A100 GPUs.
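The figures above imply some useful totals. The sketch below only multiplies out the quoted numbers; it assumes the "around 45 days" and "around 20 days" are wall-clock durations with all GPUs busy throughout, so the results are approximate.

```python
# Back-of-the-envelope totals derived from the quoted training figures.
# Assumption: durations are wall-clock days with every GPU busy the whole time.
PRETRAIN_TOKENS = 300e9  # 300B-token dataset
PRETRAIN_EPOCHS = 3
PRETRAIN_DAYS, PRETRAIN_GPUS = 45, 512  # V100
FINETUNE_DAYS, FINETUNE_GPUS = 20, 200  # A100

tokens_seen = PRETRAIN_TOKENS * PRETRAIN_EPOCHS
pretrain_gpu_days = PRETRAIN_DAYS * PRETRAIN_GPUS
finetune_gpu_days = FINETUNE_DAYS * FINETUNE_GPUS
# Average pretraining throughput across the whole cluster, in tokens per second
tokens_per_second = tokens_seen / (PRETRAIN_DAYS * 24 * 3600)

print(f"tokens seen in pretraining: {tokens_seen:.3g}")
print(f"V100 GPU-days: {pretrain_gpu_days}")
print(f"A100 GPU-days: {finetune_gpu_days}")
print(f"average throughput: {tokens_per_second:,.0f} tokens/s "
      f"(~{tokens_per_second / PRETRAIN_GPUS:,.0f} per GPU)")
```

Three epochs over 300B tokens means about 900B tokens processed in pretraining, or roughly 23,000 V100 GPU-days, versus about 4,000 A100 GPU-days for the fine-tuning stage.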

After the final training, the perplexity for this model was around 8.8 for the Russian language.
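Under the usual definition, perplexity is the exponential of the average per-token negative log-likelihood, so a perplexity of about 8.8 corresponds to an average cross-entropy of ln 8.8 ≈ 2.17 nats per token. A minimal sketch, using hypothetical per-token log-probabilities rather than real model outputs:

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """exp of the mean negative log-likelihood (natural-log probabilities)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def loss_from_perplexity(ppl: float) -> float:
    """Average per-token cross-entropy, in nats, that yields the given perplexity."""
    return math.log(ppl)


if __name__ == "__main__":
    # Hypothetical log-probabilities a model might assign to four tokens
    probs = [0.5, 0.25, 0.5, 0.25]
    print(perplexity([math.log(p) for p in probs]))
    print(round(loss_from_perplexity(8.8), 3))
```

Intuitively, a perplexity of 8.8 means the model is, on average, about as uncertain as if it were choosing uniformly among roughly 9 tokens at each step.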

📄 License

The model is released under the MIT license.