🚀 SciNerTopic - NER Enhanced Transformer-based Topic Modelling

This project presents a Named Entity Recognition (NER) model based on allenai/scibert_scivocab_cased and fine-tuned to identify scientific terms in text. It is intended for scientific text analysis and topic modelling.

🚀 Quick Start

You can quickly start using this model in Google Colab; see the Colab section of this document.

✨ Features

- Scientific Term Identification: The model is fine-tuned on the SciERC Dataset to identify various scientific terms, including tasks, methods, evaluation metrics, materials, other scientific terms, and generic terms.

- Transformer-Based: Built on the allenai/scibert_scivocab_cased model, a transformer encoder pretrained on scientific text.

Scientific Term Categories

- Task: Applications, problems to solve, systems to construct. E.g. information extraction, machine reading system, image segmentation, etc.

- Method: Methods, models, systems to use, or tools, components of a system, frameworks. E.g. language model, CORENLP, POS parser, kernel method, etc.

- Evaluation Metric: Metrics, measures, or entities that can express the quality of a system/method. E.g. F1, BLEU, Precision, Recall, ROC curve, mean reciprocal rank, mean squared error, robustness, time complexity, etc.

- Material: Data, datasets, resources, corpora, knowledge bases. E.g. image data, speech data, stereo images, bilingual dictionary, paraphrased questions, CoNLL, Penn Treebank, WordNet, Wikipedia, etc.

- Other Scientific Terms: Phrases that are scientific terms but do not fall into any of the above classes. E.g. physical or geometric constraints, qualitative prior knowledge, discourse structure, syntactic rule, tree, node, tree kernel, features, noise, criteria, etc.

- Generic: General terms or pronouns that may refer to an entity but are not themselves informative, often used as connection words. E.g. model, approach, prior knowledge, them, it, etc.

📦 Installation

No dedicated installation steps are required. The usage example below only needs the Hugging Face transformers library and a supported backend such as PyTorch.

💻 Usage Examples

Basic Usage

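```python
from transformers import AutoModelForTokenClassification, AutoTokenizer, pipeline

# Load the fine-tuned tokenizer and token-classification model from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained("RJuro/SciNERTopic")
model_trf = AutoModelForTokenClassification.from_pretrained("RJuro/SciNERTopic")

# Build an NER pipeline; "average" aggregation merges sub-word tokens into whole entities.
nlp = pipeline("ner", model=model_trf, tokenizer=tokenizer, aggregation_strategy="average")
```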
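Calling the pipeline on a string returns a list of dictionaries, one per aggregated entity, with the keys `entity_group`, `score`, `word`, `start`, and `end`. The sketch below groups such output by category. The helper name `group_entities`, the score threshold, the sample sentence, and the labels and scores in `sample_output` are illustrative assumptions, not values produced by the model; the real label names can be read from `model_trf.config.id2label`.

```python
from collections import defaultdict

def group_entities(entities, min_score=0.5):
    """Group aggregated NER pipeline output by entity category (hypothetical helper)."""
    grouped = defaultdict(list)
    for ent in entities:
        # Skip low-confidence predictions.
        if ent["score"] >= min_score:
            grouped[ent["entity_group"]].append(ent["word"])
    return dict(grouped)

# With the pipeline from the usage example one would call, e.g.:
#     entities = nlp("We train a language model on Wikipedia and report BLEU.")
# Hand-written stand-in for that output (labels and scores are made up):
sample_output = [
    {"entity_group": "Method", "score": 0.91, "word": "language model", "start": 11, "end": 25},
    {"entity_group": "Material", "score": 0.88, "word": "Wikipedia", "start": 29, "end": 38},
    {"entity_group": "Metric", "score": 0.42, "word": "BLEU", "start": 50, "end": 54},
]

print(group_entities(sample_output))
```

With the default threshold, the low-scoring "BLEU" entry is filtered out; passing `min_score=0.0` keeps every prediction.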

📚 Documentation

Training

- Learning Rate: 1e-05

- Epochs: 10
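The two hyperparameters above map directly onto Hugging Face `TrainingArguments`. This is a minimal sketch, assuming the model was trained with the `Trainer` API, which the source does not state. The `output_dir` value and the helper name `build_training_args` are hypothetical, and every setting not listed above (batch size, weight decay, scheduler) is unknown and left at the library defaults.

```python
# Reported hyperparameters; all other settings are not given in the source.
HYPERPARAMS = {
    "learning_rate": 1e-5,
    "num_train_epochs": 10,
}

def build_training_args(output_dir="scinertopic-finetune"):
    """Return TrainingArguments with the reported values (hypothetical helper)."""
    # Imported lazily so the constants above can be inspected without transformers installed.
    from transformers import TrainingArguments
    return TrainingArguments(output_dir=output_dir, **HYPERPARAMS)
```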

Performance

- Eval Loss: 0.401

- Precision: 0.577

- Recall: 0.632

- F1: 0.603
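F1 is the harmonic mean of precision and recall, so the three reported figures can be cross-checked against each other. A small sketch (the function name `f1_score` is illustrative):

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Reported precision and recall from the list above.
print(round(f1_score(0.577, 0.632), 3))  # 0.603, matching the reported F1
```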

Colab

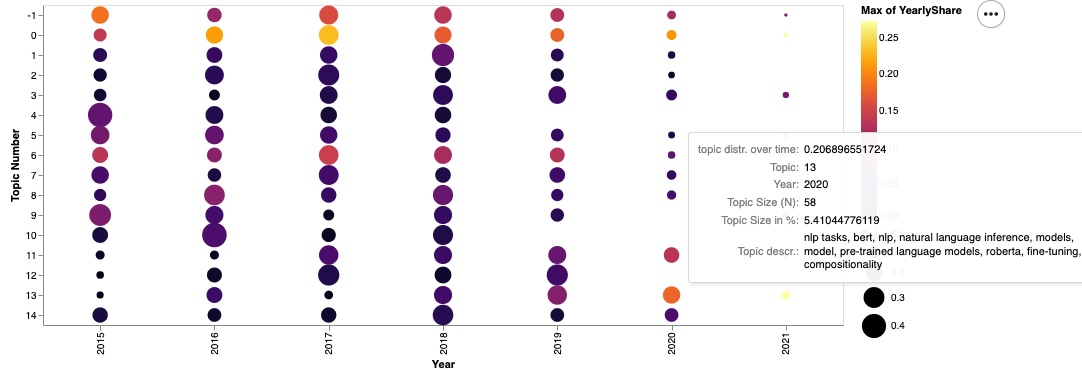

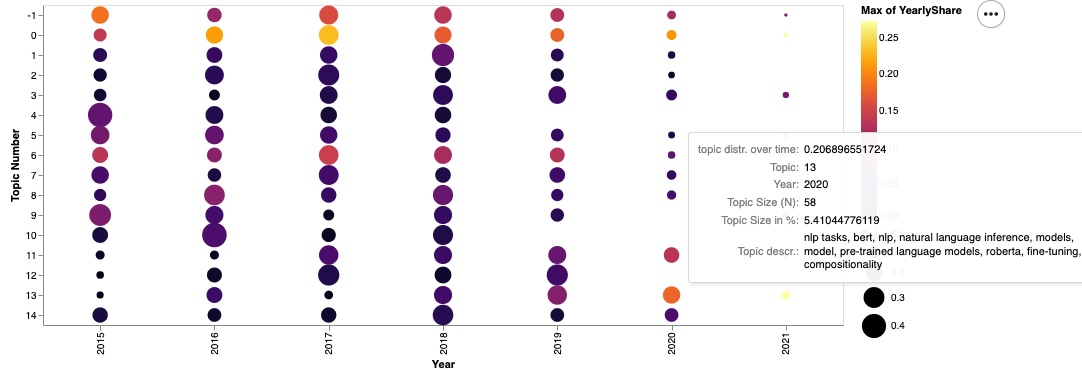

Check out how this model is used for NER-enhanced topic modelling, inspired by BERTopic.
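One way to picture NER-enhanced topic modelling is to keep only the extracted entities of each document and then describe each cluster of documents by its most distinctive entities. The sketch below is an assumption about the general idea, not the notebook's actual code. The topic ids are assigned by hand here (BERTopic would derive them by clustering document embeddings), the function name `top_entities_per_topic` and the sample data are hypothetical, and the weighting is a simple scheme in the spirit of BERTopic's class-based TF-IDF.

```python
import math
from collections import Counter, defaultdict

def top_entities_per_topic(doc_entities, doc_topics, k=2):
    """Rank entities per topic by in-topic frequency times a rarity weight."""
    # Count entity occurrences within each topic.
    topic_counts = defaultdict(Counter)
    for ents, topic in zip(doc_entities, doc_topics):
        topic_counts[topic].update(ents)

    # Count entity occurrences across all topics.
    total = Counter()
    for counts in topic_counts.values():
        total.update(counts)
    avg_size = sum(total.values()) / len(topic_counts)

    result = {}
    for topic, counts in topic_counts.items():
        # Entities that are frequent overall (e.g. generic terms) get a lower weight.
        scores = {t: c * math.log(1 + avg_size / total[t]) for t, c in counts.items()}
        # Sort by descending score, breaking ties alphabetically for determinism.
        ranked = sorted(scores, key=lambda t: (-scores[t], t))
        result[topic] = ranked[:k]
    return result

# Made-up entity lists, as they might come out of the NER pipeline.
doc_entities = [
    ["language model", "BLEU", "model"],
    ["language model", "machine translation"],
    ["image segmentation", "stereo images", "model"],
    ["image segmentation", "image data"],
]
doc_topics = [0, 0, 1, 1]

print(top_entities_per_topic(doc_entities, doc_topics, k=1))
```

Because "model" appears in both topics, it is down-weighted relative to entities that are specific to one topic.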

📄 License

This project is licensed under the MIT license.

📖 Cite this model

```bibtex
@misc {roman_jurowetzki_2022,
    author = { {Roman Jurowetzki, Hamid Bekamiri} },
    title = { SciNERTopic - NER enhanced transformer-based topic modelling for scientific text },
    year = 2022,
    url = { https://huggingface.co/RJuro/SciNERTopic },
    doi = { 10.57967/hf/0095 },
    publisher = { Hugging Face }
}
```