🚀 Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1

This is a Portuguese question-answering model fine-tuned on SQUAD v1.1. It builds on the BERTimbau Large language model to provide high-quality answers in Portuguese.

🚀 Quick Start

This model is a question-answering model fine-tuned on the Portuguese SQUAD v1.1 dataset. Use it to answer questions in Portuguese.

✨ Features

- Trained on Portuguese Dataset: The model was trained on the Portuguese SQUAD v1.1 dataset from the Deep Learning Brasil group.

- Based on BERTimbau Large: It uses the BERTimbau Large language model, which achieves state-of-the-art performance on multiple downstream NLP tasks in Brazilian Portuguese.

📚 Documentation

Introduction

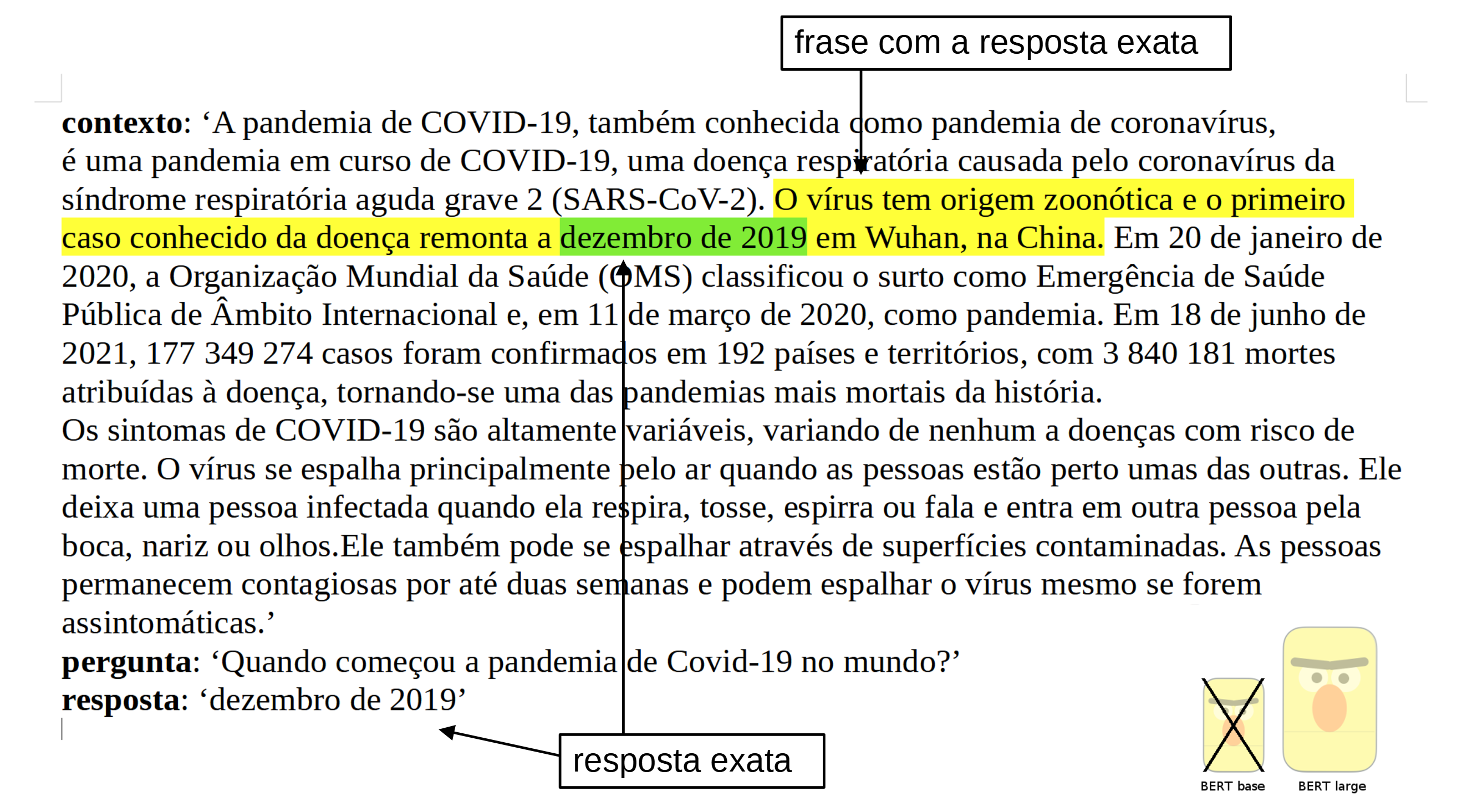

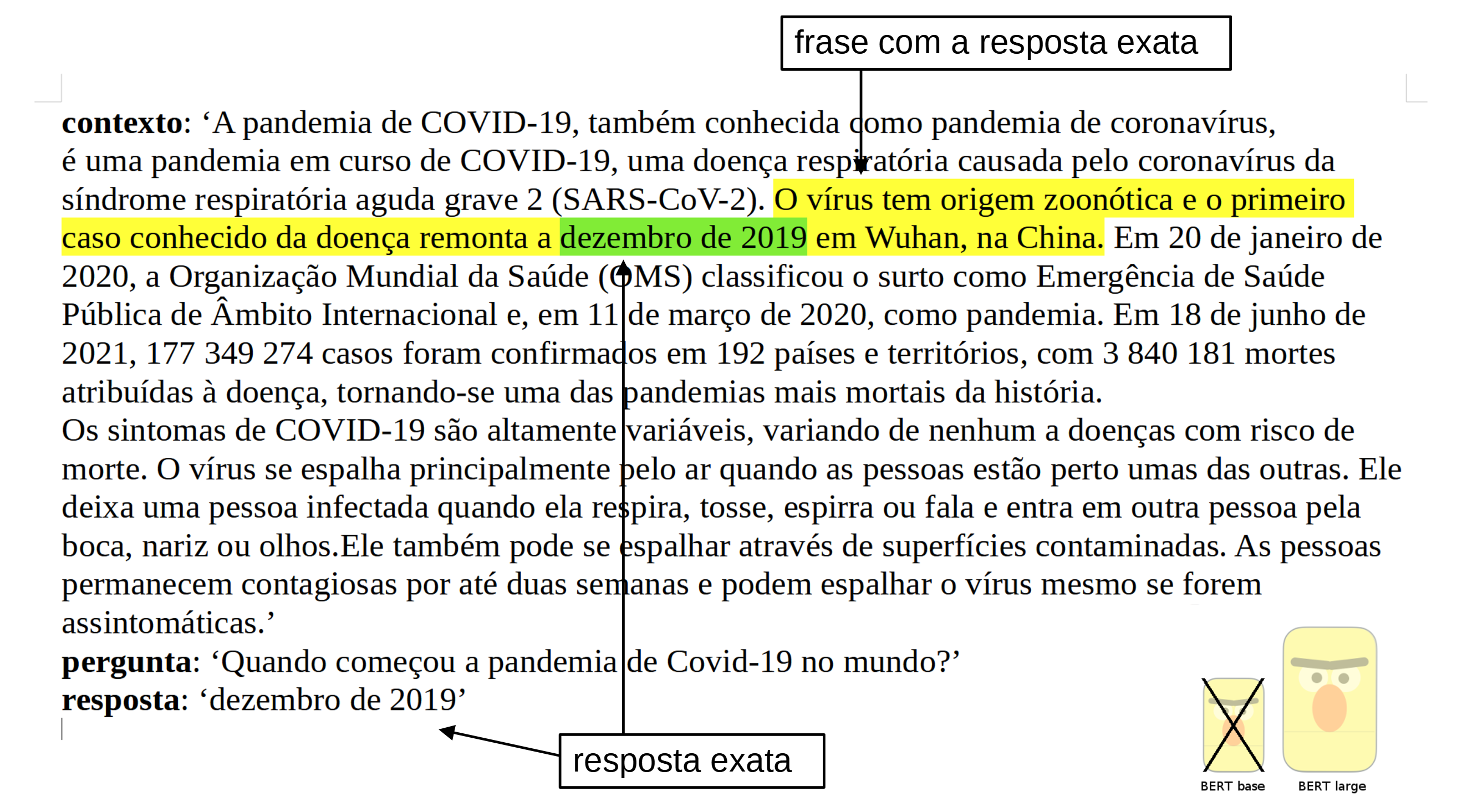

The model was trained on the Portuguese SQUAD v1.1 dataset from the Deep Learning Brasil group. The language model used is BERTimbau Large (aka "bert-large-portuguese-cased") from Neuralmind.ai. BERTimbau is a pretrained BERT model for Brazilian Portuguese that performs strongly on three downstream NLP tasks: Named Entity Recognition, Sentence Textual Similarity, and Recognizing Textual Entailment. It is available in two sizes: Base and Large.

Information on the method used

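All the information about the method is in the blog post (in Portuguese): NLP | Como treinar um modelo de Question Answering em qualquer linguagem baseado no BERT large, melhorando o desempenho do modelo utilizando o BERT base? (estudo de caso em português). In English, roughly: "How to train a Question Answering model in any language based on BERT large, improving the model's performance using BERT base? (case study in Portuguese)".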

Notebook in GitHub

You can find the related notebook here: question_answering_BERT_large_cased_squad_v11_pt.ipynb (nbviewer version)

Performance

The results obtained are as follows:

f1 = 84.43 (against 82.50 for the base model)

exact match = 72.68 (against 70.49 for the base model)
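For readers unfamiliar with these two metrics, the following is a minimal sketch of how SQuAD-style exact match and token-level F1 are typically computed for a single prediction. It is an illustration, not the evaluation code used to produce the numbers above: the function names are hypothetical, and the normalization is simplified (the official SQuAD script also strips English articles, which is omitted here). Reported scores are usually these per-example values averaged over the dataset and multiplied by 100.

```python
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, remove ASCII punctuation, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return " ".join(text.split())


def exact_match(prediction: str, truth: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(truth))


def f1_score(prediction: str, truth: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    if not pred_tokens or not true_tokens:
        # If either side is empty, score 1.0 only when both are empty.
        return float(pred_tokens == true_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears on both sides.
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)
```

A prediction that covers only part of the reference answer gets an exact match of 0 but a partial F1, which is why F1 is consistently the higher of the two numbers.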

💻 Usage Examples

Basic Usage

Using Pipeline

import transformers

from transformers import pipeline

context = r"""

A pandemia de COVID-19, também conhecida como pandemia de coronavírus, é uma pandemia em curso de COVID-19,

uma doença respiratória causada pelo coronavírus da síndrome respiratória aguda grave 2 (SARS-CoV-2).

O vírus tem origem zoonótica e o primeiro caso conhecido da doença remonta a dezembro de 2019 em Wuhan, na China.

Em 20 de janeiro de 2020, a Organização Mundial da Saúde (OMS) classificou o surto

como Emergência de Saúde Pública de Âmbito Internacional e, em 11 de março de 2020, como pandemia.

Em 18 de junho de 2021, 177 349 274 casos foram confirmados em 192 países e territórios,

com 3 840 181 mortes atribuídas à doença, tornando-se uma das pandemias mais mortais da história.

Os sintomas de COVID-19 são altamente variáveis, variando de nenhum a doenças com risco de morte.

O vírus se espalha principalmente pelo ar quando as pessoas estão perto umas das outras.

Ele deixa uma pessoa infectada quando ela respira, tosse, espirra ou fala e entra em outra pessoa pela boca, nariz ou olhos.

Ele também pode se espalhar através de superfícies contaminadas.

As pessoas permanecem contagiosas por até duas semanas e podem espalhar o vírus mesmo se forem assintomáticas.

"""

model_name = 'pierreguillou/bert-large-cased-squad-v1.1-portuguese'

nlp = pipeline("question-answering", model=model_name)

question = "Quando começou a pandemia de Covid-19 no mundo?"

result = nlp(question=question, context=context)

print(f"Answer: '{result['answer']}', score: {round(result['score'], 4)}, start: {result['start']}, end: {result['end']}")
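The `start` and `end` values printed above are character offsets into `context`, so they can be used to show the answer in its surrounding text. The sketch below illustrates this with a hypothetical helper, `highlight_answer`, and a hand-built result dictionary whose score is a made-up placeholder, so it runs without downloading the model.

```python
def highlight_answer(context: str, result: dict, window: int = 40) -> str:
    """Return the answer in brackets with up to `window` characters on each side."""
    start, end = result["start"], result["end"]
    left = context[max(0, start - window):start]
    right = context[end:end + window]
    return f"{left}[{context[start:end]}]{right}"


# Hand-built stand-in for a pipeline result (the score is a placeholder, not model output).
example_context = (
    "O primeiro caso conhecido da doença remonta a dezembro de 2019 em Wuhan, na China."
)
answer_text = "dezembro de 2019"
answer_start = example_context.index(answer_text)
example_result = {
    "answer": answer_text,
    "score": 0.9,
    "start": answer_start,
    "end": answer_start + len(answer_text),
}

print(highlight_answer(example_context, example_result, window=20))
```

With a real pipeline result, pass the same `context` string that was given to `nlp(...)`, since the offsets refer to that exact string.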

Using the Auto classes

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

tokenizer = AutoTokenizer.from_pretrained("pierreguillou/bert-large-cased-squad-v1.1-portuguese")

model = AutoModelForQuestionAnswering.from_pretrained("pierreguillou/bert-large-cased-squad-v1.1-portuguese")
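The two lines above only load the tokenizer and the model. One way to go from there to an answer string is sketched below, under some assumptions: `best_span` and `answer_question` are hypothetical helper names, the span search is a simplified version of what the pipeline does (it does not exclude question or special tokens, and it does not split long contexts into windows), and PyTorch is assumed to be installed.

```python
from typing import List, Tuple


def best_span(
    start_logits: List[float], end_logits: List[float], max_answer_len: int = 30
) -> Tuple[int, int]:
    """Return inclusive (start, end) token indices maximizing start + end logits."""
    best, best_score = (0, 0), float("-inf")
    for s, s_logit in enumerate(start_logits):
        # Only consider ends at or after the start, within the length limit.
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = s_logit + end_logits[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


def answer_question(
    question: str,
    context: str,
    model_name: str = "pierreguillou/bert-large-cased-squad-v1.1-portuguese",
) -> str:
    """Simplified extractive QA: encode, run the model, decode the best span."""
    # Imported here so best_span stays usable without these heavy dependencies.
    import torch
    from transformers import AutoModelForQuestionAnswering, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForQuestionAnswering.from_pretrained(model_name)

    # Truncate only the context if the pair exceeds BERT's 512-token limit.
    inputs = tokenizer(
        question, context, return_tensors="pt", truncation="only_second", max_length=512
    )
    with torch.no_grad():
        outputs = model(**inputs)

    start, end = best_span(
        outputs.start_logits[0].tolist(), outputs.end_logits[0].tolist()
    )
    answer_ids = inputs["input_ids"][0][start : end + 1]
    return tokenizer.decode(answer_ids, skip_special_tokens=True)
```

Calling `answer_question(question, context)` with the variables from the pipeline example downloads the model on first use. For anything beyond experimentation, the pipeline is the safer choice because it handles long contexts and invalid spans.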

Cloning the model repo

git lfs install

git clone https://huggingface.co/pierreguillou/bert-large-cased-squad-v1.1-portuguese

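# To clone without the large files (only their pointers), prefix the clone command with this environment variable:

GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/pierreguillou/bert-large-cased-squad-v1.1-portuguese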

🔧 Technical Details

The training data used for this model comes from the Portuguese SQUAD dataset. It may contain a lot of unfiltered content that is far from neutral and carries biases.

📄 License

This model is released under the MIT license.

👨‍💻 Author

Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1 was trained and evaluated by Pierre GUILLOU thanks to the open-source code, platforms, and advice of many organizations (link to the list). In particular: Hugging Face, Neuralmind.ai, Deep Learning Brasil group, and AI Lab.

📖 Citation

If you use our work, please cite:

@inproceedings{pierreguillou2021bertlargecasedsquadv11portuguese,

title={Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1},

author={Pierre Guillou},

year={2021}

}

📋 Information Table

| Property | Details |
|---|---|
| Model Type | Portuguese BERT large cased QA (Question Answering), finetuned on SQUAD v1.1 |
| Training Data | SQUAD v1.1 in Portuguese from the Deep Learning Brasil group |
| Tags | question-answering, bert, bert-large, pytorch |
| Datasets | brWaC, squad, squad_v1_pt |
| Metrics | squad |