Llava Int4

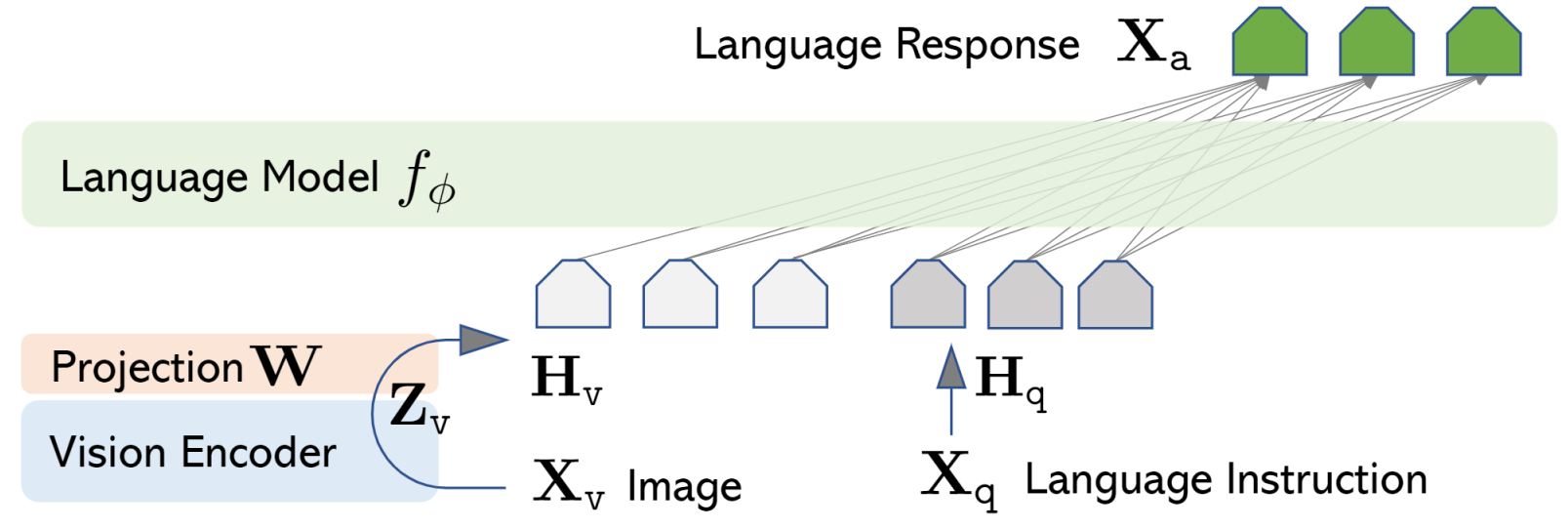

LLaVA is a large multimodal model that achieves general-purpose visual assistant capabilities by connecting a visual encoder to a large language model.


Release Time: 11/15/2023

Model Overview

LLaVA connects the CLIP visual encoder to a large language model such as Vicuna or LLaMA through a simple projection matrix, enabling it to understand and follow instructions that combine language and images.
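The projection step can be illustrated with a minimal sketch. It assumes the vision encoder has already produced one feature vector per image patch, and it applies a single linear map h = W z to turn each patch feature (dimension d_v) into a "visual token" in the language model's embedding space (dimension d_t). The function names, the toy dimensions, and the choice to prepend visual tokens to the text embeddings are illustrative assumptions, not LLaVA's actual code; real implementations may splice the visual tokens in at an image placeholder position in the prompt.

```python
from typing import List

Matrix = List[List[float]]  # shape (d_t, d_v): one row per LLM embedding dimension
Vector = List[float]


def project_patch(W: Matrix, z: Vector) -> Vector:
    """Map one visual feature vector z (dim d_v) into the LLM embedding space via h = W z."""
    if any(len(row) != len(z) for row in W):
        raise ValueError("W must have shape (d_t, d_v) matching the feature dimension")
    return [sum(w * x for w, x in zip(row, z)) for row in W]


def build_input_sequence(W: Matrix, patch_features: List[Vector],
                         text_embeddings: List[Vector]) -> List[Vector]:
    """Project every image patch, then prepend the visual tokens to the text embeddings.

    Prepending is a simplifying assumption for this sketch; the point is that after
    projection, visual and text tokens share one embedding space and one sequence.
    """
    d_t = len(W)
    if any(len(e) != d_t for e in text_embeddings):
        raise ValueError("text embeddings must have the LLM embedding dimension d_t")
    visual_tokens = [project_patch(W, z) for z in patch_features]
    return visual_tokens + text_embeddings


if __name__ == "__main__":
    # Toy sizes (hypothetical): d_v = 3 visual features, d_t = 2 LLM embedding dims.
    W = [[1, 0, 0],
         [0, 1, 1]]
    patches = [[1, 2, 3], [0, 1, 0]]
    text = [[0.5, 0.5]]
    print(build_input_sequence(W, patches, text))  # [[1, 5], [0, 1], [0.5, 0.5]]
```

Because the projection is a single matrix multiply, it adds very few parameters compared with the vision encoder and the language model it connects, which is what makes the design lightweight.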

Model Features

Multimodal Understanding

Processes visual and language inputs together, understanding image content and generating relevant responses.

Simple Architecture Design

Connects pretrained vision and language models through a lightweight projection matrix, enabling efficient multimodal fusion.

Instruction Following Capability

Understands complex multimodal instructions and performs the corresponding tasks.

Model Capabilities

Image content understanding

Visual question answering

Multimodal dialogue

Image caption generation

Visual instruction execution

Use Cases

Intelligent Assistant

Visual Assistance Q&A

Answers a wide range of user questions about image content.

Provides accurate, contextually relevant answers.

Education

Interactive Learning

Explains complex concepts through combined image and text interaction.
