Model Overview

Model Features

Model Capabilities

Use Cases

🚀 CogAgent

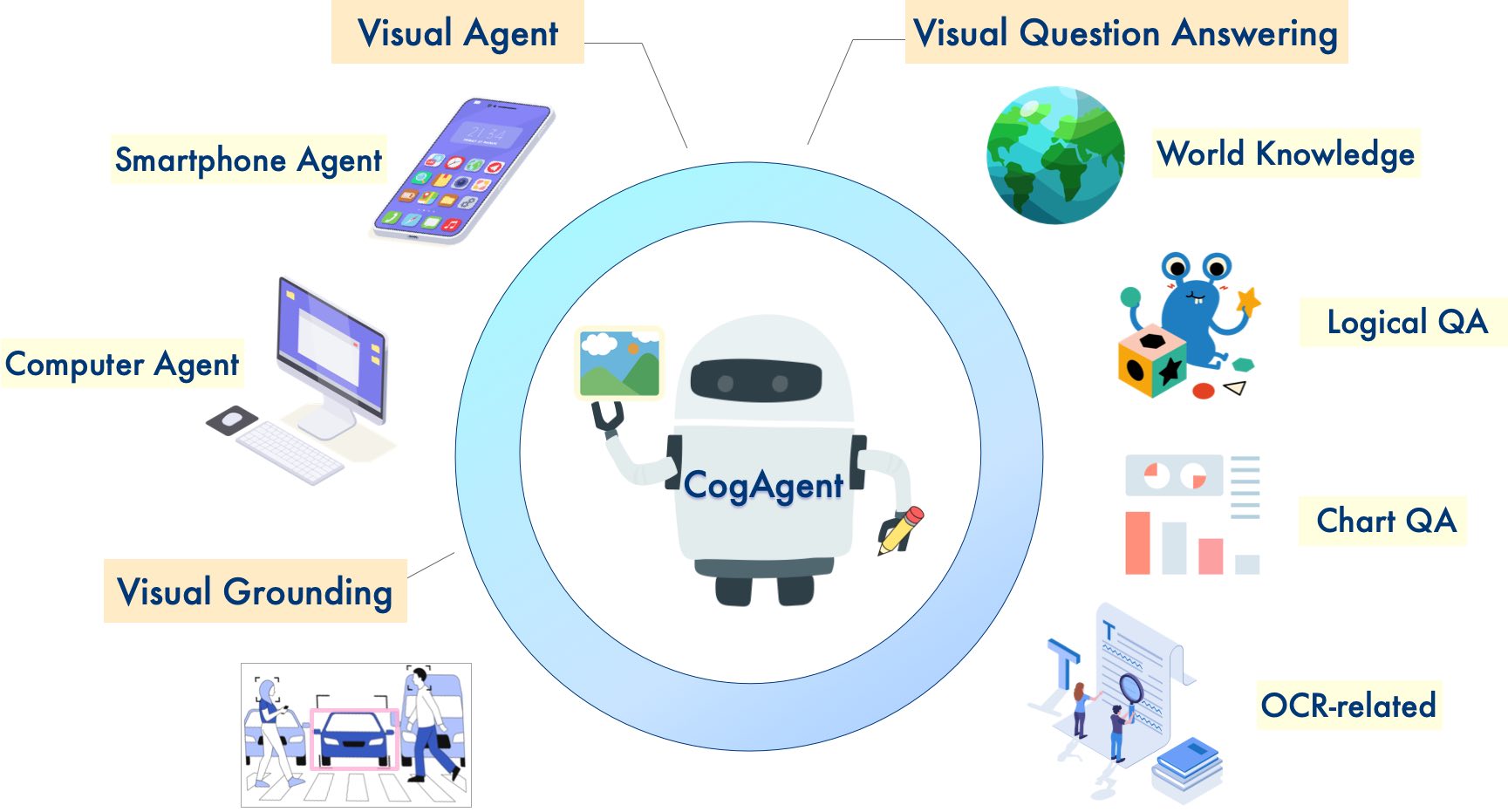

CogAgent is an open-source visual language model enhanced based on CogVLM. It excels in image understanding and GUI agent tasks, offering high-resolution visual input support and strong performance across multiple benchmarks.

📖 Paper: https://arxiv.org/abs/2312.08914

🚀 GitHub: For more details such as demos, fine-tuning, and query prompts, please visit Our GitHub

🚀 Quick Start

You can quickly start using the following Python code in cli_demo.py:

import torch

from PIL import Image

from transformers import AutoModelForCausalLM, LlamaTokenizer

import argparse

parser = argparse.ArgumentParser()

parser.add_argument("--quant", choices=[4], type=int, default=None, help='quantization bits')

parser.add_argument("--from_pretrained", type=str, default="THUDM/cogagent-chat-hf", help='pretrained ckpt')

parser.add_argument("--local_tokenizer", type=str, default="lmsys/vicuna-7b-v1.5", help='tokenizer path')

parser.add_argument("--fp16", action="store_true")

parser.add_argument("--bf16", action="store_true")

args = parser.parse_args()

MODEL_PATH = args.from_pretrained

TOKENIZER_PATH = args.local_tokenizer

DEVICE = 'cuda' if torch.cuda.is_available() else 'cpu'

tokenizer = LlamaTokenizer.from_pretrained(TOKENIZER_PATH)

if args.bf16:

torch_type = torch.bfloat16

else:

torch_type = torch.float16

print("========Use torch type as:{} with device:{}========\n\n".format(torch_type, DEVICE))

if args.quant:

model = AutoModelForCausalLM.from_pretrained(

MODEL_PATH,

torch_dtype=torch_type,

low_cpu_mem_usage=True,

load_in_4bit=True,

trust_remote_code=True

).eval()

else:

model = AutoModelForCausalLM.from_pretrained(

MODEL_PATH,

torch_dtype=torch_type,

low_cpu_mem_usage=True,

load_in_4bit=args.quant is not None,

trust_remote_code=True

).to(DEVICE).eval()

while True:

image_path = input("image path >>>>> ")

if image_path == "stop":

break

image = Image.open(image_path).convert('RGB')

history = []

while True:

query = input("Human:")

if query == "clear":

break

input_by_model = model.build_conversation_input_ids(tokenizer, query=query, history=history, images=[image])

inputs = {

'input_ids': input_by_model['input_ids'].unsqueeze(0).to(DEVICE),

'token_type_ids': input_by_model['token_type_ids'].unsqueeze(0).to(DEVICE),

'attention_mask': input_by_model['attention_mask'].unsqueeze(0).to(DEVICE),

'images': [[input_by_model['images'][0].to(DEVICE).to(torch_type)]],

}

if 'cross_images' in input_by_model and input_by_model['cross_images']:

inputs['cross_images'] = [[input_by_model['cross_images'][0].to(DEVICE).to(torch_type)]]

# add any transformers params here.

gen_kwargs = {"max_length": 2048,

"temperature": 0.9,

"do_sample": False}

with torch.no_grad():

outputs = model.generate(**inputs, **gen_kwargs)

outputs = outputs[:, inputs['input_ids'].shape[1]:]

response = tokenizer.decode(outputs[0])

response = response.split("</s>")[0]

print("\nCog:", response)

history.append((query, response))

Then run the command:

python cli_demo_hf.py --bf16

✨ Features

Model Versions

📍 This is the cogagent-vqa version of the CogAgent checkpoint.

We have open-sourced two versions of CogAgent checkpoints. You can choose the appropriate one based on your requirements:

cogagent-chat: This model is powerful in GUI Agent, visual multi-turn dialogue, visual grounding, etc. If you need GUI Agent and Visual Grounding functions or want to conduct multi-turn dialogues with a given image, we recommend using this version.cogagent-vqa: This model has stronger capabilities in single-turn visual dialogue. If you need to work on VQA benchmarks (such as MMVET, VQAv2), we suggest using this model.

Model Performance

CogAgent-18B has 11 billion visual and 7 billion language parameters, demonstrating strong performance in image understanding and GUI agent tasks:

- Cross-modal Benchmarks: CogAgent-18B achieves state-of-the-art generalist performance on 9 cross-modal benchmarks, including VQAv2, MM-Vet, POPE, ST-VQA, OK-VQA, TextVQA, ChartQA, InfoVQA, and DocVQA.

- GUI Operation Datasets: It significantly surpasses existing models on GUI operation datasets, such as AITW and Mind2Web.

Additional Features

In addition to all the features of CogVLM (visual multi-round dialogue, visual grounding), CogAgent offers the following enhancements:

- Higher Resolution Support: It supports higher resolution visual input and dialogue question-answering, with support for ultra-high-resolution image inputs of 1120x1120.

- Visual Agent Capabilities: It can return a plan, next action, and specific operations with coordinates for any given task on any GUI screenshot.

- Enhanced GUI QA: It has improved GUI-related question-answering capabilities, capable of handling questions about any GUI screenshot, including web pages, PC apps, and mobile applications.

- Enhanced OCR Capabilities: It has better performance in OCR-related tasks through improved pre-training and fine-tuning.

Usage License

The models in this repository are free for academic research. Users who wish to use the models for commercial purposes must register here. Registered users can use the models for commercial activities free of charge but must comply with all terms and conditions of the license. The license notice should be included in all copies or substantial portions of the software.

📄 License

The code in this repository is open source under the Apache-2.0 license. The use of CogAgent and CogVLM model weights must comply with the Model License.

📚 Documentation

Citation

If you find our work helpful, please consider citing the following papers:

@misc{hong2023cogagent,

title={CogAgent: A Visual Language Model for GUI Agents},

author={Wenyi Hong and Weihan Wang and Qingsong Lv and Jiazheng Xu and Wenmeng Yu and Junhui Ji and Yan Wang and Zihan Wang and Yuxiao Dong and Ming Ding and Jie Tang},

year={2023},

eprint={2312.08914},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{wang2023cogvlm,

title={CogVLM: Visual Expert for Pretrained Language Models},

author={Weihan Wang and Qingsong Lv and Wenmeng Yu and Wenyi Hong and Ji Qi and Yan Wang and Junhui Ji and Zhuoyi Yang and Lei Zhao and Xixuan Song and Jiazheng Xu and Bin Xu and Juanzi Li and Yuxiao Dong and Ming Ding and Jie Tang},

year={2023},

eprint={2311.03079},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Acknowledgements

In the instruction fine-tuning phase of CogVLM, we used some English image-text data from the MiniGPT-4, LLAVA, LRV-Instruction, LLaVAR, and Shikra projects, as well as many classic cross-modal work datasets. We sincerely thank them for their contributions.

Transformers

Transformers Transformers English

Transformers English Transformers

Transformers Transformers

Transformers Transformers

Transformers Transformers

Transformers Transformers Supports Multiple Languages

Transformers Supports Multiple Languages Transformers

Transformers