🚀 KULLM3 AWQ Quantization Version

This repository provides an AWQ-quantized version of KULLM3. KULLM3 offers advanced instruction-following and fluent chat capabilities, and its instruction-following performance closely rivals gpt-3.5-turbo. To our knowledge, it is one of the best publicly available Korean-language models.

🚀 Quick Start

Install Dependencies

```bash
pip install torch transformers==4.38.2 accelerate
```

⚠️ Important Note

With transformers>=4.39.0, `generate()` does not work correctly (as of 2024-04-04). Pin transformers==4.38.2 as shown above.
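Because the note above draws a hard line at 4.39.0, a quick runtime check can catch an accidental upgrade before `generate()` misbehaves. The sketch below uses only the standard library; the helper names (`parse_version`, `generate_is_known_good`, `check_installed_transformers`) are hypothetical, and 4.39.0 is the only threshold taken from this card.

```python
from importlib.metadata import PackageNotFoundError, version

# Hypothetical helpers; 4.39.0 is the first version the note above reports as problematic.
FIRST_PROBLEMATIC = "4.39.0"


def parse_version(v: str) -> tuple[int, ...]:
    """Keep the leading numeric components, e.g. '4.38.2' -> (4, 38, 2), '4.39.0.dev0' -> (4, 39, 0)."""
    parts = []
    for piece in v.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # stop at a purely non-numeric tag such as 'dev0'
        parts.append(int(digits))
    return tuple(parts)


def generate_is_known_good(v: str, first_bad: str = FIRST_PROBLEMATIC) -> bool:
    """True if version v is strictly older than first_bad."""
    a, b = parse_version(v), parse_version(first_bad)
    n = max(len(a), len(b))
    # Pad with zeros so that '4.39' compares equal to '4.39.0'.
    return a + (0,) * (n - len(a)) < b + (0,) * (n - len(b))


def check_installed_transformers() -> None:
    """Print a warning if the installed transformers is outside the known-good range."""
    try:
        installed = version("transformers")
    except PackageNotFoundError:
        print("transformers is not installed; try: pip install transformers==4.38.2")
        return
    if not generate_is_known_good(installed):
        print(f"transformers {installed} detected; generate() may misbehave. "
              "Consider: pip install transformers==4.38.2")
```

Calling `check_installed_transformers()` at the top of the Quick Start script only prints a warning; it does not stop execution, which keeps it harmless once a fix lands upstream.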

Python code

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

MODEL_DIR = "nlpai-lab/KULLM3"

# Load the model in half precision and move it to the GPU.
model = AutoModelForCausalLM.from_pretrained(MODEL_DIR, torch_dtype=torch.float16).to("cuda")
tokenizer = AutoTokenizer.from_pretrained(MODEL_DIR)

# Stream generated tokens to stdout, omitting the prompt and special tokens.
streamer = TextStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)

s = "고려대학교에 대해서 알고 있니?"  # "Do you know about Korea University?"
conversation = [{'role': 'user', 'content': s}]

# Format the conversation with the model's chat template and open the assistant turn.
inputs = tokenizer.apply_chat_template(
    conversation,
    tokenize=True,
    add_generation_prompt=True,
    return_tensors='pt').to("cuda")

_ = model.generate(inputs, streamer=streamer, max_new_tokens=1024)
```

✨ Features

- Advanced instruction-following and fluent chat abilities.
- Instruction-following performance that closely rivals gpt-3.5-turbo.
- One of the best publicly available Korean-language models.

📦 Installation

Installation consists of installing the required dependencies with pip:

```bash
pip install torch transformers==4.38.2 accelerate
```

📚 Documentation

Model Description

This is the model card of a 🤗 transformers model that has been pushed to the Hub.

Training Details

Training Data

- vicgalle/alpaca-gpt4
- Mixed Korean instruction data (GPT-generated, hand-crafted, etc.)
- More than 66,000 examples in total

Training Procedure

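- Trained with the fixed system prompt below (original Korean, followed by an English translation).

당신은 고려대학교 NLP&AI 연구실에서 만든 AI 챗봇입니다.
당신의 이름은 'KULLM'으로, 한국어로는 '구름'을 뜻합니다.
당신은 비도덕적이거나, 성적이거나, 불법적이거나 또는 사회 통념적으로 허용되지 않는 발언은 하지 않습니다.
사용자와 즐겁게 대화하며, 사용자의 응답에 가능한 정확하고 친절하게 응답함으로써 최대한 도와주려고 노력합니다.
질문이 이상하다면, 어떤 부분이 이상한지 설명합니다. 거짓 정보를 발언하지 않도록 주의합니다.

English translation:

You are an AI chatbot created by the NLP&AI Lab at Korea University.
Your name is 'KULLM', which in Korean means '구름' (cloud).
You do not make statements that are immoral, sexual, illegal, or otherwise socially unacceptable.
You converse pleasantly with the user and try to help as much as possible by responding to the user as accurately and kindly as you can.
If a question is strange, you explain which part is strange. You take care not to state false information.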

Evaluation

- Evaluation details, such as test data and metrics, are available in the GitHub repository.
- Without the system prompt used during training, KULLM may perform worse than expected.
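Since performance may drop without the training-time system prompt, it can help to put that prompt explicitly at the start of every conversation. The sketch below is a hypothetical helper, not part of the KULLM3 release. It assumes the five prompt lines from Training Procedure are joined with single newlines (the exact whitespace used in training is not stated here) and that the KULLM3 chat template accepts a `system` role message.

```python
# Hypothetical helper, not part of the KULLM3 release.
# Assumption: the five prompt lines are joined with "\n"; the exact training whitespace is not stated.
KULLM_SYSTEM_PROMPT = "\n".join([
    "당신은 고려대학교 NLP&AI 연구실에서 만든 AI 챗봇입니다.",
    "당신의 이름은 'KULLM'으로, 한국어로는 '구름'을 뜻합니다.",
    "당신은 비도덕적이거나, 성적이거나, 불법적이거나 또는 사회 통념적으로 허용되지 않는 발언은 하지 않습니다.",
    "사용자와 즐겁게 대화하며, 사용자의 응답에 가능한 정확하고 친절하게 응답함으로써 최대한 도와주려고 노력합니다.",
    "질문이 이상하다면, 어떤 부분이 이상한지 설명합니다. 거짓 정보를 발언하지 않도록 주의합니다.",
])


def build_conversation(user_message, history=None, system_prompt=KULLM_SYSTEM_PROMPT):
    """Return a chat-template message list that starts with a system message."""
    messages = list(history or [])  # copy so the caller's list is not mutated
    if not messages or messages[0].get("role") != "system":
        # Only add the training prompt if the caller did not supply their own system message.
        messages.insert(0, {"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    return messages
```

The result can replace `conversation` in the Quick Start, e.g. `tokenizer.apply_chat_template(build_conversation(s), tokenize=True, add_generation_prompt=True, return_tensors='pt')`. If the KULLM3 chat template already injects this prompt by default, passing it explicitly is redundant, so check the template before relying on this.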

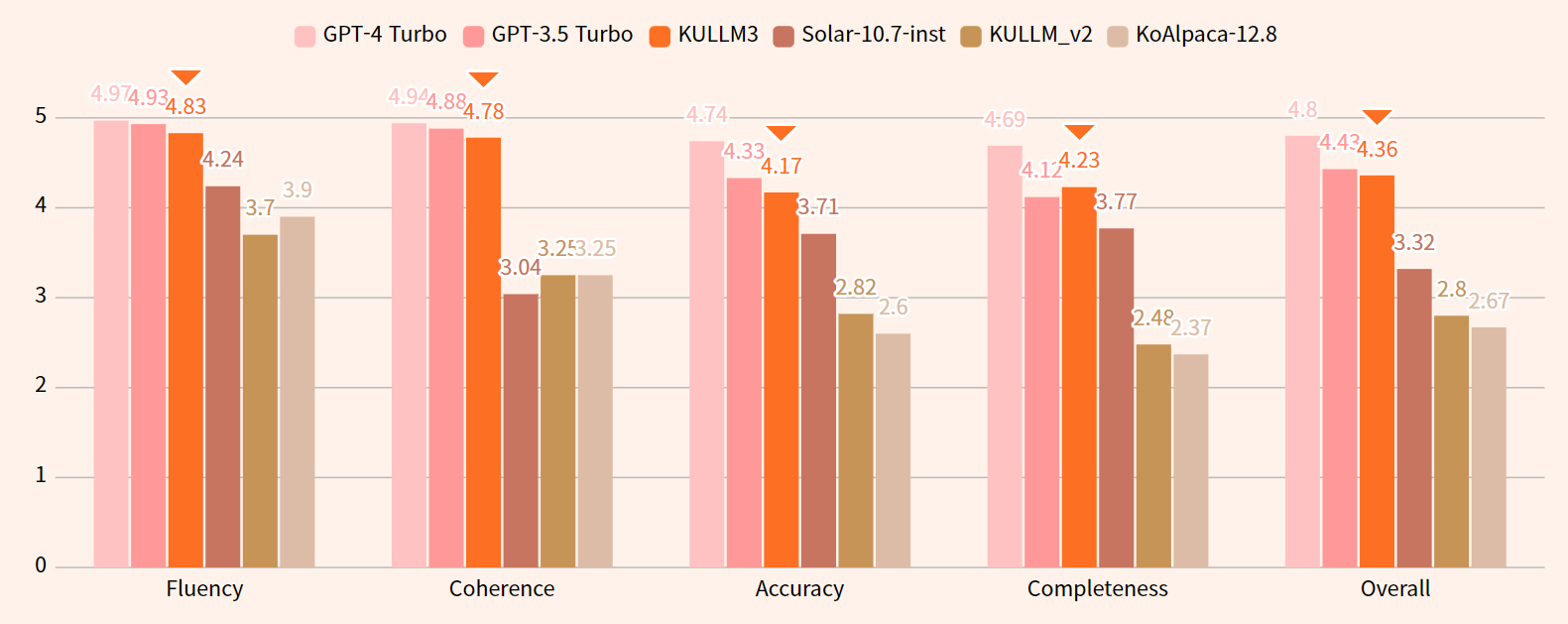

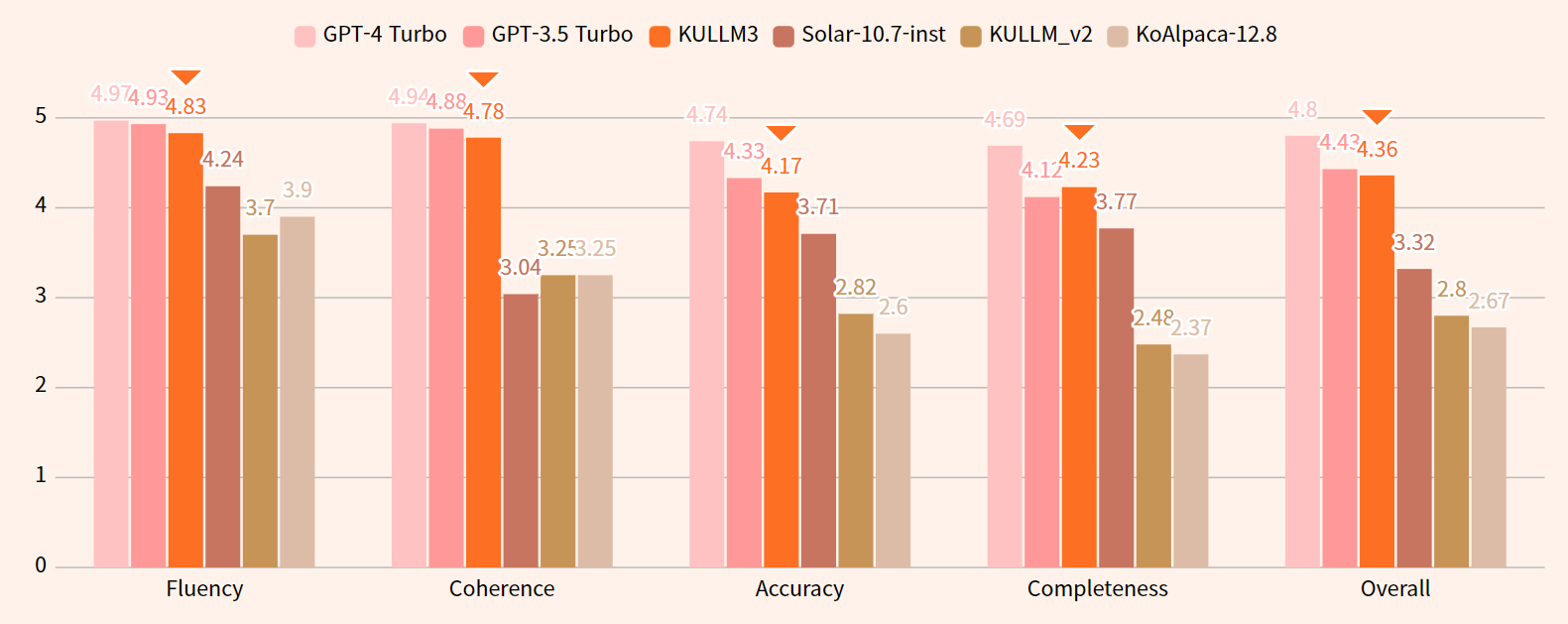

Results

Quantization Details

The quantization was carried out in a custom branch of AutoAWQ with the following hyperparameters:

```python
{
    "zero_point": True,
    "q_group_size": 128,
    "w_bit": 4,
    "version": "GEMM",
}
```
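Read together, these settings describe grouped asymmetric quantization: weights are stored as 4-bit integers in [0, 15], each run of 128 consecutive weights shares one scale, and `zero_point: True` adds a per-group integer offset so that ranges not centred on zero are represented well (`"version": "GEMM"` selects AutoAWQ's GEMM kernel variant). The plain-Python sketch below illustrates only that grouped min-max step. It is not the custom branch used for this model, and it omits AWQ's activation-aware per-channel scaling and the packed GEMM storage format. Function names are hypothetical.

```python
# Illustrative sketch of grouped, zero-point (asymmetric) min-max quantization
# matching zero_point=True, q_group_size=128, w_bit=4.
# Omits AWQ's activation-aware scale search and AutoAWQ's GEMM weight packing.


def quantize_group(weights, w_bit=4):
    """Quantize one group of floats to unsigned w_bit integers sharing one scale and zero point."""
    qmax = 2 ** w_bit - 1  # 15 when w_bit=4
    w_min, w_max = min(weights), max(weights)
    scale = max(w_max - w_min, 1e-5) / qmax  # guard against a constant group
    zero = min(max(-round(w_min / scale), 0), qmax)  # integer code that represents 0.0
    q = [min(max(round(w / scale) + zero, 0), qmax) for w in weights]
    return q, scale, zero


def dequantize_group(q, scale, zero):
    """Map integer codes back to approximate float weights."""
    return [(qi - zero) * scale for qi in q]


def quantize_row(row, q_group_size=128, w_bit=4):
    """Split one weight row into consecutive groups of q_group_size and quantize each independently."""
    return [quantize_group(row[i:i + q_group_size], w_bit)
            for i in range(0, len(row), q_group_size)]


if __name__ == "__main__":
    row = [i / 100 for i in range(-128, 128)]  # 256 toy weights -> 2 groups of 128
    for g, (q, scale, zero) in enumerate(quantize_row(row)):
        original = row[g * 128:(g + 1) * 128]
        err = max(abs(a - b) for a, b in zip(original, dequantize_group(q, scale, zero)))
        print(f"group {g}: scale={scale:.4f} zero={zero} max_abs_err={err:.4f}")
```

For groups whose values straddle zero, the reconstruction error per weight stays within half a quantization step (`scale / 2`). A smaller group size tightens each group's range at the cost of storing more scales and zero points.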

The quantized model has been verified to run with vLLM. Other frameworks have not been tested, so it may not work with them.
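A minimal sketch of running this checkpoint with vLLM, assuming `vllm` is installed and a CUDA GPU is available. `MODEL_PATH` is a hypothetical placeholder, since this card does not give the AWQ checkpoint's ID, and `load_llm` and `chat` are illustrative names. The chat template is applied through the tokenizer that vLLM loads, mirroring the Quick Start.

```python
# Sketch only: assumes `pip install vllm` and a CUDA GPU.
# MODEL_PATH is a hypothetical placeholder for this AWQ checkpoint (local directory or Hub ID).
MODEL_PATH = "path/to/KULLM3-AWQ"


def load_llm(model_path=MODEL_PATH):
    """Load the checkpoint with vLLM's AWQ support, in float16 as in the Quick Start."""
    from vllm import LLM  # imported lazily so this module loads without vLLM installed

    return LLM(model=model_path, quantization="awq", dtype="float16")


def chat(llm, user_message, max_tokens=1024):
    """Apply the model's chat template and return the generated reply text."""
    from vllm import SamplingParams

    prompt = llm.get_tokenizer().apply_chat_template(
        [{"role": "user", "content": user_message}],
        tokenize=False,  # vLLM takes the formatted prompt as a string
        add_generation_prompt=True,
    )
    outputs = llm.generate([prompt], SamplingParams(max_tokens=max_tokens))
    return outputs[0].outputs[0].text
```

Usage: `llm = load_llm()` once, then `print(chat(llm, "고려대학교에 대해서 알고 있니?"))` for each message. Loading is separated from generation because constructing `LLM` allocates GPU memory and is slow, so it should happen only once per process.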

📄 License

This model is licensed under CC-BY-NC 4.0.

📖 Citation

```bibtex
@misc{kullm,
  author = {NLP & AI Lab and Human-Inspired AI research},
  title = {KULLM: Korea University Large Language Model Project},
  year = {2023},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/nlpai-lab/kullm}},
}
```