🚀 UniSpeech-Large-plus Kyrgyz

This is a large model based on Microsoft's UniSpeech. It was pretrained on speech audio sampled at 16 kHz together with phonetic labels, and then fine-tuned on 1 hour of Kyrgyz phonemes. It is intended for automatic speech recognition tasks.

🚀 Quick Start

This speech model is fine-tuned for phoneme classification. When using it, make sure your speech input is sampled at 16 kHz. If you fine-tune it further, convert your target text into a sequence of phonemes first.
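Because the model expects 16 kHz input, it can help to check an audio file's sample rate before running inference. The following is a minimal sketch that uses Python's standard `wave` module, so it only handles uncompressed PCM WAV files; other formats need a different decoder. The function names are illustrative, and `write_silent_wav` is a hypothetical helper included only to make the example self-contained.

```python
import os
import tempfile
import wave

TARGET_RATE = 16_000  # sample rate the model expects, in Hz


def sample_rate(path: str) -> int:
    """Return the sample rate (frames per second) of a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate()


def is_16khz(path: str) -> bool:
    """Return True if the WAV file is already sampled at 16 kHz."""
    return sample_rate(path) == TARGET_RATE


def write_silent_wav(path: str, rate: int, seconds: float = 0.1) -> None:
    """Hypothetical helper: write a short mono, 16-bit silent WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit PCM
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(rate * seconds))


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "example.wav")
    for rate in (16_000, 48_000):
        write_silent_wav(path, rate)
        print(rate, "->", "ok" if is_16khz(path) else "needs resampling to 16 kHz")
```

Files that fail this check can be resampled before inference, for example with `torchaudio.functional.resample` as in the usage example.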

✨ Features

- Pretrained on speech audio sampled at 16 kHz and on phonetic labels.
- Fine-tuned on 1 hour of Kyrgyz phonemes.
- Can be used for automatic speech recognition tasks.

📦 Installation

The usage example requires PyTorch, torchaudio, 🤗 Datasets and 🤗 Transformers, which can be installed with pip install torch torchaudio datasets transformers.

💻 Usage Examples

Basic Usage

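import torch
import torchaudio.functional as F
from datasets import load_dataset
from transformers import AutoModelForCTC, AutoProcessor

model_id = "microsoft/unispeech-1350-en-17h-ky-ft-1h"

# Stream a single sample from the Kyrgyz ("ky") Common Voice test split
sample = next(iter(load_dataset("common_voice", "ky", split="test", streaming=True)))

# Common Voice audio is typically 48 kHz; the model expects 16 kHz input
orig_rate = sample["audio"]["sampling_rate"]
resampled_audio = F.resample(torch.tensor(sample["audio"]["array"]), orig_rate, 16_000).numpy()

model = AutoModelForCTC.from_pretrained(model_id)
processor = AutoProcessor.from_pretrained(model_id)

# Normalize the waveform and convert it to model input tensors
input_values = processor(resampled_audio, sampling_rate=16_000, return_tensors="pt").input_values

with torch.no_grad():
    logits = model(input_values).logits

# Greedy CTC decoding: most likely token per frame, then collapse repeats and blanks
prediction_ids = torch.argmax(logits, dim=-1)
transcription = processor.batch_decode(prediction_ids)
print(transcription)  # predicted phoneme sequence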
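The `torch.argmax` plus `processor.batch_decode` step performs greedy CTC decoding. The stdlib-only sketch below illustrates the idea on plain Python lists: pick the highest-scoring token in each frame, merge consecutive repeats, then drop the CTC blank. The vocabulary, scores and blank id are hypothetical. In 🤗 Transformers CTC tokenizers the padding token serves as the blank, and the real tokenizer also handles details such as word delimiters that this sketch ignores.

```python
from itertools import groupby
from typing import Dict, List, Sequence


def argmax_per_frame(logits: Sequence[Sequence[float]]) -> List[int]:
    """Return the index of the highest score in each frame (like torch.argmax(dim=-1))."""
    return [max(range(len(frame)), key=frame.__getitem__) for frame in logits]


def greedy_ctc_decode(
    frame_ids: Sequence[int], id_to_token: Dict[int, str], blank_id: int = 0
) -> List[str]:
    """Collapse consecutive repeated ids, then remove the blank id."""
    collapsed = [token_id for token_id, _ in groupby(frame_ids)]
    return [id_to_token[token_id] for token_id in collapsed if token_id != blank_id]


if __name__ == "__main__":
    # Hypothetical 3-token vocabulary where id 0 is the CTC blank
    vocab = {0: "<pad>", 1: "a", 2: "b"}
    # Hypothetical per-frame scores for 5 frames
    logits = [
        [0.1, 0.8, 0.1],    # "a"
        [0.2, 0.7, 0.1],    # "a" again, merged with the previous frame
        [0.9, 0.05, 0.05],  # blank, separates two genuine "a" tokens
        [0.1, 0.6, 0.3],    # "a"
        [0.1, 0.2, 0.7],    # "b"
    ]
    print(greedy_ctc_decode(argmax_per_frame(logits), vocab))
```

The blank is what allows a token to legitimately repeat: without the blank frame between them, the two "a" runs would be merged into one.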

📚 Documentation

Model Information

Abstract

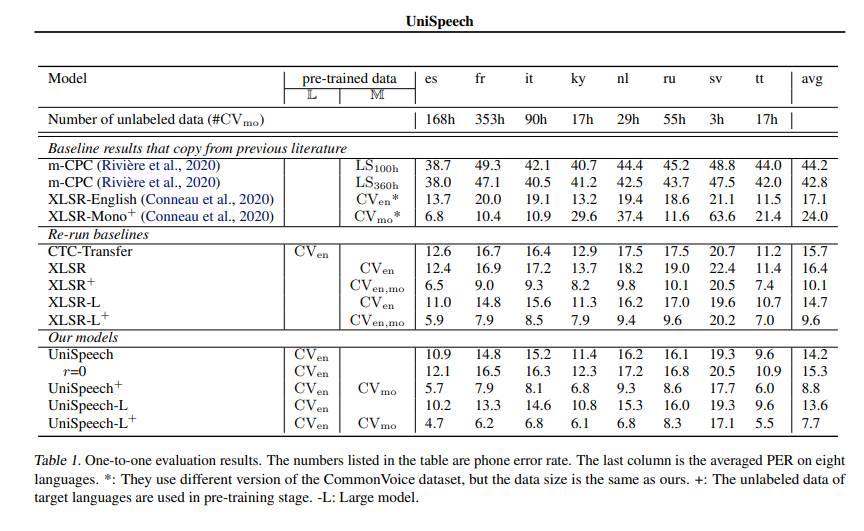

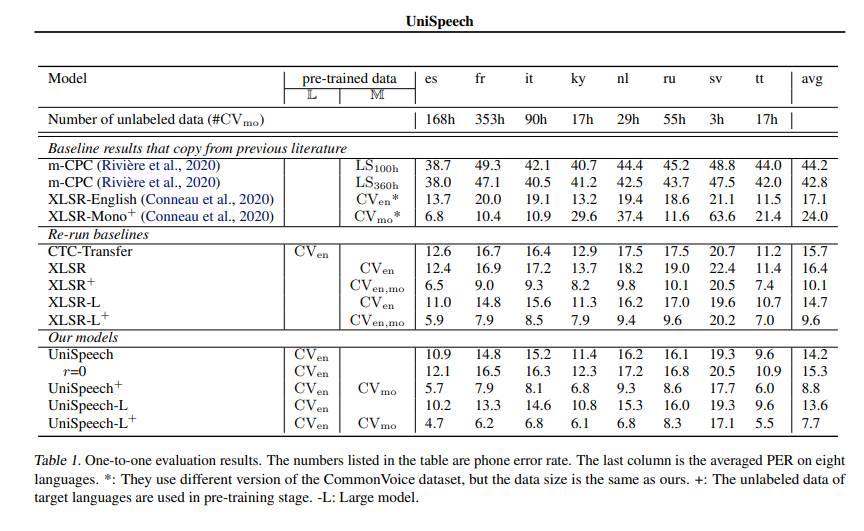

In this paper, we propose a unified pre-training approach called UniSpeech to learn speech representations with both unlabeled and labeled data, in which supervised phonetic CTC learning and phonetically-aware contrastive self-supervised learning are conducted in a multi-task learning manner. The resultant representations can capture information more correlated with phonetic structures and improve the generalization across languages and domains. We evaluate the effectiveness of UniSpeech for cross-lingual representation learning on public CommonVoice corpus. The results show that UniSpeech outperforms self-supervised pretraining and supervised transfer learning for speech recognition by a maximum of 13.4% and 17.8% relative phone error rate reductions respectively (averaged over all testing languages). The transferability of UniSpeech is also demonstrated on a domain-shift speech recognition task, i.e., a relative word error rate reduction of 6% against the previous approach.
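The abstract reports relative error rate reductions, which are easy to confuse with absolute ones. As a small illustration, the sketch below computes relative reduction as (baseline - new) / baseline. The error rates in it are hypothetical values chosen for the example, not results from the paper.

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Return the relative error rate reduction of `new` versus `baseline` as a fraction."""
    if baseline <= 0:
        raise ValueError("baseline error rate must be positive")
    return (baseline - new) / baseline


def absolute_reduction(baseline: float, new: float) -> float:
    """Return the absolute reduction in error-rate points."""
    return baseline - new


if __name__ == "__main__":
    # Hypothetical phone error rates (in %), for illustration only
    baseline_per, new_per = 20.0, 17.32
    print(f"relative: {relative_reduction(baseline_per, new_per):.1%}")
    print(f"absolute: {absolute_reduction(baseline_per, new_per):.2f} points")
```

With these made-up numbers, a 13.4% relative reduction corresponds to only 2.68 absolute points, so relative and absolute figures should not be compared directly.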

Contribution

The model was contributed by cywang and patrickvonplaten.

License

The official license can be found here

Official Results

See the official results reported for UniSpeech-L⁺-ky.

🔧 Technical Details

The model is pretrained on speech audio sampled at 16 kHz together with phonetic labels, and then fine-tuned on 1 hour of Kyrgyz phonemes. It uses UniSpeech, a unified pre-training approach that combines supervised phonetic CTC learning with phonetically-aware contrastive self-supervised learning in a multi-task setup. According to the paper, this lets the learned representations capture information more correlated with phonetic structures and improves generalization across languages and domains.
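To make the multi-task idea more concrete, the stdlib-only sketch below computes a CTC negative log-likelihood (via the forward algorithm) for a labeled utterance and an InfoNCE-style contrastive loss for one masked frame, then mixes them with a weight `alpha`. This is a simplified illustration, not UniSpeech's implementation. The function names, the single `alpha` weight, the cosine-similarity-with-temperature form, and the omission of quantization, masking and any auxiliary losses are all assumptions made for brevity.

```python
import math
from typing import List, Sequence


def logsumexp(values: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(v))) that tolerates -inf entries."""
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


def ctc_nll(log_probs: List[List[float]], target: List[int], blank: int = 0) -> float:
    """CTC negative log-likelihood; log_probs is T frames x vocab log-probabilities (T >= 1)."""
    ext = [blank]  # target interleaved with blanks: [b, y1, b, y2, ..., b]
    for label in target:
        ext += [label, blank]
    S = len(ext)
    alpha = [-math.inf] * S
    alpha[0] = log_probs[0][ext[0]]
    if S > 1:
        alpha[1] = log_probs[0][ext[1]]
    for t in range(1, len(log_probs)):
        new = [-math.inf] * S
        for s in range(S):
            candidates = [alpha[s]]  # stay on the same symbol
            if s >= 1:
                candidates.append(alpha[s - 1])  # advance by one
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                candidates.append(alpha[s - 2])  # skip a blank between distinct labels
            new[s] = logsumexp(candidates) + log_probs[t][ext[s]]
        alpha = new
    final = [alpha[S - 1]] + ([alpha[S - 2]] if S > 1 else [])
    return -logsumexp(final)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def contrastive_loss(context, positive, distractors, temperature: float = 0.1) -> float:
    """InfoNCE: -log softmax score of the positive among positive + distractors."""
    logits = [cosine(context, q) / temperature for q in [positive] + list(distractors)]
    return -(logits[0] - logsumexp(logits))


def multitask_loss(ctc: float, contrastive: float, alpha: float = 0.5) -> float:
    """Hypothetical weighted sum of the supervised and self-supervised terms."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return alpha * ctc + (1.0 - alpha) * contrastive


if __name__ == "__main__":
    uniform = [[math.log(0.5), math.log(0.5)] for _ in range(2)]  # 2 frames, vocab {blank, 1}
    l_ctc = ctc_nll(uniform, [1])
    l_con = contrastive_loss([1.0, 0.0], [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
    print(l_ctc, l_con, multitask_loss(l_ctc, l_con, alpha=0.5))
```

The forward algorithm sums over every frame-level alignment that collapses to the target, which is why the CTC term only needs phoneme sequences rather than frame-aligned labels.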
