Pyc2py Alpha2

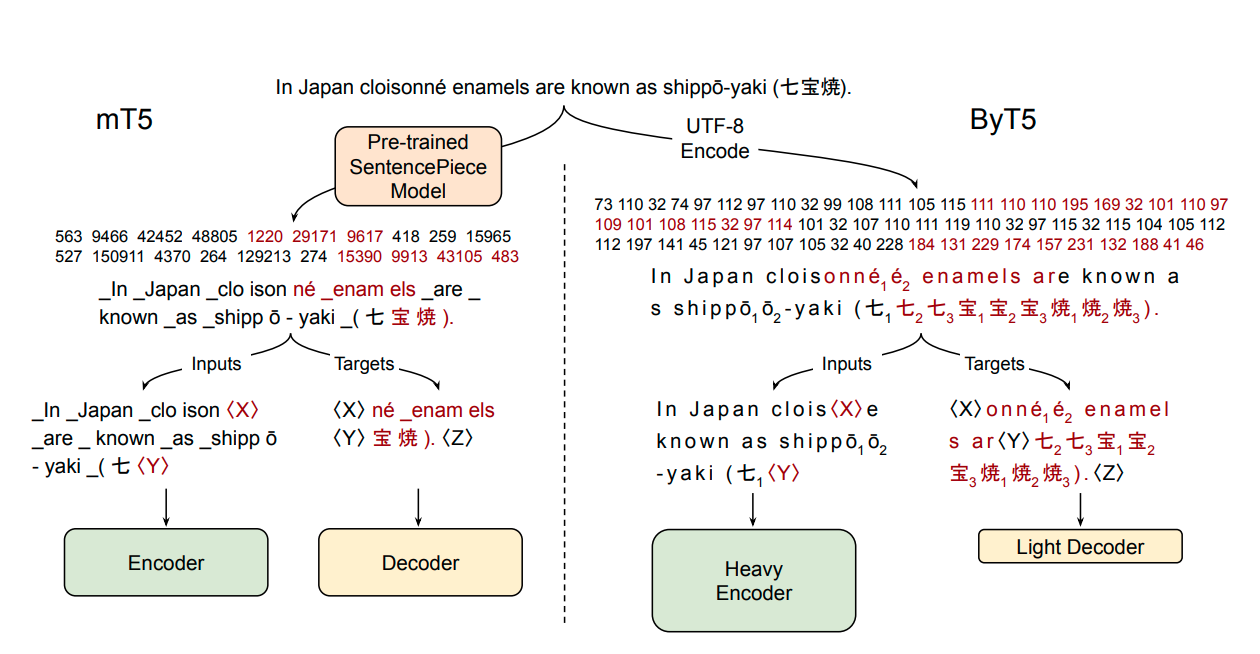

ByT5 is a tokenizer-free variant of Google's T5 that operates directly on raw UTF-8 bytes, which makes it particularly well suited to noisy text and multilingual scenarios.


Release Time: 3/2/2022

Model Overview

ByT5 is a pre-trained byte-to-byte Transformer model: it reads and generates raw UTF-8 byte sequences, so no tokenizer is required. The model is pre-trained on the multilingual mC4 dataset and is suited to multilingual text-processing tasks, performing especially well on noisy text.

Model Features

Tokenizer-free Design

Processes raw UTF-8 bytes directly instead of relying on a separate tokenizer, removing the need to build and maintain a vocabulary and tokenization pipeline.
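A minimal sketch of what "tokenizer-free" means in practice. It assumes the ID layout used by the Hugging Face ByT5 tokenizer, where IDs 0, 1 and 2 are reserved for padding, end-of-sequence and unknown, so each byte value is shifted by 3. The helper names `encode` and `decode` are hypothetical and are not part of any library API.

```python
# Hypothetical helpers illustrating ByT5-style byte IDs.
# Assumption: IDs 0, 1, 2 are reserved (pad, eos, unk), so byte value b maps to b + 3.
PAD_ID, EOS_ID, UNK_ID = 0, 1, 2
OFFSET = 3  # number of reserved special-token IDs


def encode(text: str, add_eos: bool = True) -> list[int]:
    """Map text to IDs: one ID per UTF-8 byte, shifted past the special tokens."""
    ids = [b + OFFSET for b in text.encode("utf-8")]
    if add_eos:
        ids.append(EOS_ID)
    return ids


def decode(ids: list[int]) -> str:
    """Invert encode(): drop special IDs, undo the offset, decode the UTF-8 bytes."""
    data = bytes(i - OFFSET for i in ids if i >= OFFSET)
    return data.decode("utf-8", errors="replace")


if __name__ == "__main__":
    ids = encode("Hi!")
    print(ids)          # [75, 108, 36, 1]
    print(decode(ids))  # Hi!
```

Because the mapping is fixed arithmetic, there is no vocabulary file to train, version, or keep in sync with the model.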

Multilingual Support

Because any text can be encoded as UTF-8 bytes, the model accepts text in every language and script without a language-specific vocabulary or other adaptation.
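The sketch below uses only Python's built-in UTF-8 codec, not ByT5 itself, to show the property this relies on: text in any script reduces to bytes drawn from the same 256-value alphabet. It also exposes a trade-off: characters outside ASCII take two to four bytes each, so byte sequences for such text are longer than their character counts. The sample strings and the `byte_stats` helper are illustrative only.

```python
# Uses Python's UTF-8 codec to show that every script maps into the same
# 256-value byte alphabet, at a per-character length cost that varies by script.

SAMPLES = {
    "English": "hello",
    "French": "élève",
    "Russian": "привет",
    "Chinese": "你好",
    "Emoji": "👋",
}


def byte_stats(text: str) -> tuple[int, int]:
    """Return (character count, UTF-8 byte count) for a string."""
    return len(text), len(text.encode("utf-8"))


if __name__ == "__main__":
    for lang, text in SAMPLES.items():
        chars, nbytes = byte_stats(text)
        # Every byte is in 0-255, so no language needs its own vocabulary entries.
        print(f"{lang:8} chars={chars:2} bytes={nbytes:2} ratio={nbytes / chars:.1f}")
```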

Noise Robustness

Significantly outperforms comparable tokenizer-based models on noisy text (e.g., spelling errors, non-standard formatting).
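A toy illustration, not a benchmark result: at the byte level a misspelling perturbs only the positions that actually changed, so most of the input the model sees is identical to the clean text. This shows why the representation degrades gracefully; it does not by itself demonstrate the robustness gains described above. The `byte_ids` and `changed_positions` helpers are hypothetical.

```python
# Toy illustration (not a ByT5 benchmark): a misspelling changes only a few
# positions of the byte sequence, leaving the rest of the input untouched.


def byte_ids(text: str) -> list[int]:
    """UTF-8 byte values of text (ByT5-style IDs would add a fixed offset)."""
    return list(text.encode("utf-8"))


def changed_positions(a: str, b: str) -> int:
    """Count mismatched positions between two equal-length byte sequences."""
    ia, ib = byte_ids(a), byte_ids(b)
    if len(ia) != len(ib):
        raise ValueError("this toy comparison assumes equal byte lengths")
    return sum(x != y for x, y in zip(ia, ib))


if __name__ == "__main__":
    clean, noisy = "definitely", "definately"
    print(changed_positions(clean, noisy))  # 1
```

Insertions and deletions shift later positions, so a fuller comparison would use an edit distance; equal-length strings keep this sketch short.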

Unified Architecture

Uses a standard Transformer architecture with only minor adjustments needed to process byte sequences.

Model Capabilities

Multilingual text generation

Noisy text processing

Cross-language transfer learning

Text understanding and transformation

Use Cases

Natural Language Processing

Multilingual Text Summarization

Generates summaries for texts in multiple languages

Achieves cross-language summarization without language-specific processing

Noisy Text Processing

Handles text with spelling errors or non-standard formatting

Outperforms tokenizer-based models on the TweetQA task

Machine Translation

Byte-level Machine Translation

Translates directly at the byte-sequence level

Avoids information loss caused by tokenization
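The sketch below contrasts a hypothetical closed word vocabulary, which must collapse unseen words to `<unk>`, with byte-level encoding, which round-trips any string exactly. Real subword tokenizers usually lose far less than this toy (many include fallbacks for unknown characters), so treat it as an exaggerated illustration of the failure mode rather than a description of any specific tokenizer.

```python
# Contrasts a hypothetical closed-vocabulary tokenizer, which maps unseen
# words to <unk>, with byte-level encoding, which is lossless for any string.

TOY_VOCAB = {"<unk>": 0, "the": 1, "cat": 2, "sat": 3}  # hypothetical vocabulary
INV_VOCAB = {i: w for w, i in TOY_VOCAB.items()}


def word_roundtrip(text: str) -> str:
    """Encode/decode with the toy word vocabulary; unknown words become <unk>."""
    ids = [TOY_VOCAB.get(w, TOY_VOCAB["<unk>"]) for w in text.split()]
    return " ".join(INV_VOCAB[i] for i in ids)


def byte_roundtrip(text: str) -> str:
    """Encode to a list of byte IDs and back; no input falls outside the alphabet."""
    ids = list(text.encode("utf-8"))
    return bytes(ids).decode("utf-8")


if __name__ == "__main__":
    text = "the gato sat"
    print(word_roundtrip(text))  # the <unk> sat
    print(byte_roundtrip(text))  # the gato sat
```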
