🚀 ColPali: Visual Retriever based on PaliGemma-3B with ColBERT strategy

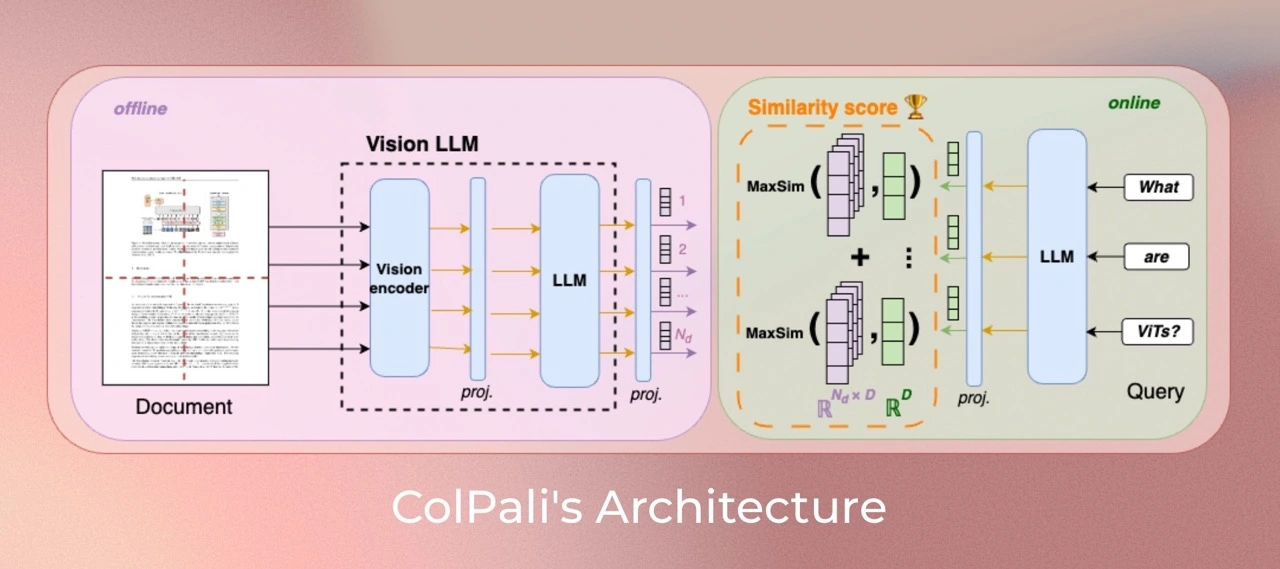

ColPali is a visual retriever built on a novel architecture and training strategy for Vision Language Models (VLMs). It indexes documents efficiently from their visual features. It extends PaliGemma-3B and generates ColBERT-style multi-vector representations of text and images. The model was introduced in the paper "ColPali: Efficient Document Retrieval with Vision Language Models" and first released in this repository.

🚀 Quick Start

ColPali is a powerful model for visual document retrieval. It builds on the foundation of Vision Language Models (VLMs) to offer efficient document indexing from visual features.

✨ Features

- Novel Architecture: Based on a novel model architecture and training strategy for efficient document indexing.

- Multi-vector Representations: Generates ColBERT-style multi-vector representations of text and images.

- Research-Backed: Introduced in the paper "ColPali: Efficient Document Retrieval with Vision Language Models".

💻 Usage Examples

Basic Usage

import torch
import typer
from torch.utils.data import DataLoader
from tqdm import tqdm
from transformers import AutoProcessor
from PIL import Image

from colpali_engine.models.paligemma_colbert_architecture import ColPali
from colpali_engine.trainer.retrieval_evaluator import CustomEvaluator
from colpali_engine.utils.colpali_processing_utils import process_images, process_queries
from colpali_engine.utils.image_from_page_utils import load_from_dataset


def main() -> None:
    """Example script to run inference with ColPali"""

    # Load model
    model_name = "vidore/colpali-v1.1"
    model = ColPali.from_pretrained("vidore/colpaligemma-3b-mix-448-base", torch_dtype=torch.bfloat16, device_map="cuda").eval()
    model.load_adapter(model_name)
    model = model.eval()
    processor = AutoProcessor.from_pretrained(model_name)

    # select images -> load_from_pdf(<pdf_path>), load_from_image_urls(["<url_1>"]), load_from_dataset(<path>)
    images = load_from_dataset("vidore/docvqa_test_subsampled")
    queries = ["From which university does James V. Fiorca come ?", "Who is the japanese prime minister?"]

    # run inference - docs
    dataloader = DataLoader(
        images,
        batch_size=4,
        shuffle=False,
        collate_fn=lambda x: process_images(processor, x),
    )
    ds = []
    for batch_doc in tqdm(dataloader):
        with torch.no_grad():
            batch_doc = {k: v.to(model.device) for k, v in batch_doc.items()}
            embeddings_doc = model(**batch_doc)
        ds.extend(list(torch.unbind(embeddings_doc.to("cpu"))))

    # run inference - queries
    dataloader = DataLoader(
        queries,
        batch_size=4,
        shuffle=False,
        collate_fn=lambda x: process_queries(processor, x, Image.new("RGB", (448, 448), (255, 255, 255))),
    )
    qs = []
    for batch_query in dataloader:
        with torch.no_grad():
            batch_query = {k: v.to(model.device) for k, v in batch_query.items()}
            embeddings_query = model(**batch_query)
        qs.extend(list(torch.unbind(embeddings_query.to("cpu"))))

    # run evaluation
    retriever_evaluator = CustomEvaluator(is_multi_vector=True)
    scores = retriever_evaluator.evaluate(qs, ds)
    print(scores.argmax(axis=1))


if __name__ == "__main__":
    typer.run(main)

Advanced Usage

# If you need to further train ColPali from this adapter, you should run:
from peft import LoraConfig, get_peft_model

lora_config = LoraConfig.from_pretrained("vidore/colpali-v1.1")
lora_config.inference_mode = False  # force training mode for fine-tuning

# `model` is the ColPali model loaded with ColPali.from_pretrained(...) as in Basic Usage
model = get_peft_model(model, lora_config)
model.print_trainable_parameters()

📚 Documentation

Version specificity

This version is trained with colpali-engine==0.2.0. Compared to colpali, it is trained with right padding for queries to fix unwanted tokens in the query encoding. It also stems from the fixed vidore/colpaligemma-3b-mix-448-base to guarantee deterministic projection layer initialization. The data is the same as the ColPali data described in the paper.
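As a minimal illustration of what "right padding" means for a batch of tokenized queries, the sketch below pads variable-length token ID lists on a chosen side and builds the matching attention mask. The token IDs, the pad ID, and the `pad_batch` helper are hypothetical; this is not the colpali-engine processing code.

```python
from typing import List, Sequence, Tuple


def pad_batch(
    sequences: Sequence[Sequence[int]], pad_id: int, side: str = "right"
) -> Tuple[List[List[int]], List[List[int]]]:
    """Pad token ID sequences to a common length and return (input_ids, attention_mask)."""
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    max_len = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        if side == "right":
            # Real tokens first, padding at the end.
            input_ids.append(list(seq) + [pad_id] * n_pad)
            attention_mask.append([1] * len(seq) + [0] * n_pad)
        else:
            # Padding first, real tokens at the end.
            input_ids.append([pad_id] * n_pad + list(seq))
            attention_mask.append([0] * n_pad + [1] * len(seq))
    return input_ids, attention_mask


# Made-up token IDs for two queries of different lengths.
right_ids, right_mask = pad_batch([[5, 6, 7], [8]], pad_id=0, side="right")
left_ids, left_mask = pad_batch([[5, 6, 7], [8]], pad_id=0, side="left")
```

With right padding, every query starts at position 0 and the padding tokens trail the real tokens; the attention mask marks which positions carry actual query content.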

Model Description

This model is built iteratively, starting from an off-the-shelf SigLIP model. SigLIP is first fine-tuned to create BiSigLIP; the patch embeddings output by SigLIP are then fed to an LLM, PaliGemma-3B, to create BiPali. Passing image patch embeddings through a language model maps them to a latent space similar to that of the textual input (the query), which makes it possible to use the ColBERT strategy to compute interactions between text tokens and image patches. This improves performance compared to BiPali.
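The interaction between text tokens and image patches can be sketched with the ColBERT-style "MaxSim" late-interaction score: each query token embedding is matched to its most similar page patch embedding, and the per-token maxima are summed. The snippet below is a dependency-free illustration on made-up 2-dimensional vectors; the function names are hypothetical and this is not the colpali-engine evaluator.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def late_interaction_score(query: Sequence[Vector], page: Sequence[Vector]) -> float:
    """MaxSim: for each query token, keep its best-matching page patch, then sum."""
    return sum(max(dot(q, p) for p in page) for q in query)


def score_matrix(
    queries: Sequence[Sequence[Vector]], pages: Sequence[Sequence[Vector]]
) -> List[List[float]]:
    """One row per query, one column per page."""
    return [[late_interaction_score(q, p) for p in pages] for q in queries]


# Toy embeddings (made-up values, not model outputs).
query = [[1.0, 0.0], [0.0, 1.0]]                # two query tokens
page_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # patches matching both query tokens
page_b = [[1.0, 0.0], [0.9, 0.1]]               # patches matching only the first token

scores = score_matrix([query], [page_a, page_b])
# Index of the best-scoring page for the single query (row-wise argmax).
best_page = max(range(len(scores[0])), key=scores[0].__getitem__)
```

Here `page_a` scores higher because both query tokens find a strongly matching patch, whereas a single pooled vector per page would blur that token-level match.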

Model Training

Dataset

Our training dataset of 127,460 query-page pairs consists of train sets from openly available academic datasets (63%) and a synthetic dataset made up of pages from web-crawled PDF documents and augmented with VLM-generated (Claude-3 Sonnet) pseudo-questions (37%). The training set is fully English, allowing us to study zero-shot generalization to non-English languages. We ensure no multi-page PDF document is used in both ViDoRe and the train set to prevent evaluation contamination. A validation set is created with 2% of the samples to tune hyperparameters.

Note: Multilingual data is present in the pretraining corpus of the language model (Gemma-2B) and may occur during PaliGemma-3B's multimodal training.

Parameters

All models are trained for 1 epoch on the train set. Unless otherwise specified, we train models in bfloat16 format, use low-rank adapters (LoRA) with alpha=32 and r=32 on the transformer layers from the language model, as well as the final randomly initialized projection layer, and use a paged_adamw_8bit optimizer. We train on an 8 GPU setup with data parallelism, a learning rate of 5e-5 with linear decay and 2.5% warmup steps, and a batch size of 32.
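To make the learning-rate schedule concrete, here is a minimal sketch of linear warmup followed by linear decay, using the peak rate (5e-5) and warmup fraction (2.5%) stated above. The step count is only illustrative: it assumes the 2% validation split is taken out of the 127,460 pairs and that 32 is the global batch size, neither of which the text confirms. The `lr_at` helper is hypothetical and is not the training code.

```python
import math


def lr_at(step: int, total_steps: int, peak_lr: float = 5e-5,
          warmup_fraction: float = 0.025) -> float:
    """Linear warmup from 0 to peak_lr, then linear decay back to 0."""
    warmup_steps = max(1, round(total_steps * warmup_fraction))
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    decay_steps = max(1, total_steps - warmup_steps)
    return peak_lr * max(0, total_steps - step) / decay_steps


# Illustrative step count under the assumptions named above.
train_pairs = round(127_460 * 0.98)        # pairs left after a 2% validation split
total_steps = math.ceil(train_pairs / 32)  # one epoch at an assumed global batch size of 32
warmup_steps = round(total_steps * 0.025)

# Learning rate at the start, end of warmup, midpoint, and end of training.
schedule = [lr_at(s, total_steps) for s in (0, warmup_steps, total_steps // 2, total_steps)]
```

Under these assumptions the run would last a few thousand optimizer steps, with the learning rate peaking after roughly a hundred steps and decaying linearly afterwards.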

🔧 Technical Details

- Focus: The model mainly focuses on PDF-type documents and high-resource languages, which may limit its generalization to other document types or less represented languages.

- Support: The model relies on multi-vector retrieval derived from the ColBERT late interaction mechanism. Adapting it to widely used vector retrieval frameworks without native multi-vector support may require engineering effort.
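One possible workaround for single-vector frameworks, which is not described in the source, is a two-stage search: store one mean-pooled vector per page for a coarse shortlist, then re-rank the shortlist with the full multi-vector late-interaction score. The sketch below shows the idea on made-up toy embeddings; all function names are hypothetical, and mean pooling is an assumption chosen for simplicity.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def mean_pool(vectors: Sequence[Vector]) -> List[float]:
    """Collapse a multi-vector embedding into a single averaged vector."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def maxsim(query: Sequence[Vector], page: Sequence[Vector]) -> float:
    """ColBERT-style late interaction: sum over query tokens of the best patch match."""
    return sum(max(dot(q, p) for p in page) for q in query)


def two_stage_search(query, pages, shortlist_size: int, top_k: int) -> List[int]:
    """Coarse single-vector retrieval followed by multi-vector re-ranking."""
    # Stage 1: what a standard single-vector index could do (pooled vectors only).
    pooled_pages = [mean_pool(p) for p in pages]  # would be precomputed at indexing time
    pooled_query = mean_pool(query)
    coarse = sorted(range(len(pages)),
                    key=lambda i: dot(pooled_query, pooled_pages[i]), reverse=True)
    shortlist = coarse[:shortlist_size]
    # Stage 2: re-rank the shortlist with the full multi-vector score.
    reranked = sorted(shortlist, key=lambda i: maxsim(query, pages[i]), reverse=True)
    return reranked[:top_k]


# Toy embeddings (made-up values, not model outputs).
query = [[1.0, 0.0], [0.0, 1.0]]
pages = [
    [[1.0, 0.0], [0.0, 1.0]],    # matches both query tokens
    [[1.5, 0.0], [1.5, 0.0]],    # strong on one token only
    [[-1.0, 0.0], [0.0, -1.0]],  # unrelated
]
result = two_stage_search(query, pages, shortlist_size=2, top_k=2)
```

In this toy case the pooled vectors rank page 1 first, while the late-interaction re-ranking promotes page 0; the shortlist size controls how much of that correction the second stage can recover.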

📄 License

ColPali's vision language backbone model (PaliGemma) is under the Gemma license, as specified in its model card. The adapters attached to the model are under the MIT license.

Contact

- Manuel Faysse: manuel.faysse@illuin.tech

- Hugues Sibille: hugues.sibille@illuin.tech

- Tony Wu: tony.wu@illuin.tech

Citation

If you use any datasets or models from this organization in your research, please cite the original dataset as follows:

@misc{faysse2024colpaliefficientdocumentretrieval,
  title={ColPali: Efficient Document Retrieval with Vision Language Models},
  author={Manuel Faysse and Hugues Sibille and Tony Wu and Bilel Omrani and Gautier Viaud and Céline Hudelot and Pierre Colombo},
  year={2024},
  eprint={2407.01449},
  archivePrefix={arXiv},
  primaryClass={cs.IR},
  url={https://arxiv.org/abs/2407.01449},
}
