🚀 Depth Anything V2 (Fine-tuned for Metric Depth Estimation) - Transformers Version

This model is a fine-tuned variant of Depth Anything V2 for outdoor metric depth estimation. It was fine-tuned on the synthetic Virtual KITTI datasets and is compatible with the transformers library.

Depth Anything V2, introduced in the paper of the same name by Lihe Yang et al., shares the architecture of the original Depth Anything. However, it uses synthetic data and a larger-capacity teacher model to achieve more precise and robust depth predictions. This fine-tuned version for metric depth estimation was first released in this repository.

✨ Features

- Multiple Model Scales: Six metric depth models are available, covering three scales for each of indoor and outdoor scenes.

- State-of-the-Art Performance: Trained on approximately 600K synthetic labeled images and 62 million real unlabeled images, Depth Anything V2 achieves state-of-the-art results in both relative and absolute depth estimation.

📦 Installation

Requirements

transformers>=4.45.0

Alternatively, you can install the latest version of transformers from source:

pip install git+https://github.com/huggingface/transformers
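To confirm that an installed version satisfies the requirement above, a check along these lines may help. This is a minimal sketch: the helper name `meets_minimum` is hypothetical, and it compares only the leading numeric components of each version string, so a pre-release such as `4.45.0.dev0` is treated the same as `4.45.0`.

```python
import re
from importlib import metadata


def meets_minimum(installed: str, minimum: str = "4.45.0") -> bool:
    """Compare the leading numeric components of two version strings."""

    def numeric_parts(version: str) -> tuple:
        # Keep only the leading "N.N.N" portion, so "4.46.0.dev0" -> (4, 46, 0).
        match = re.match(r"\d+(?:\.\d+)*", version)
        if match is None:
            raise ValueError(f"unrecognized version string: {version!r}")
        return tuple(int(part) for part in match.group().split("."))

    have, need = numeric_parts(installed), numeric_parts(minimum)
    # Pad the shorter tuple with zeros so "4.45" compares equal to "4.45.0".
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need


if __name__ == "__main__":
    try:
        installed = metadata.version("transformers")
    except metadata.PackageNotFoundError:
        print("transformers is not installed")
    else:
        print(installed, "OK" if meets_minimum(installed) else "too old")
```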

💻 Usage Examples

Basic Usage

Here is how to use this model to perform zero-shot depth estimation:

from transformers import pipeline
from PIL import Image
import requests

# Load the depth-estimation pipeline with the outdoor metric checkpoint
pipe = pipeline(task="depth-estimation", model="depth-anything/Depth-Anything-V2-Metric-Outdoor-Small-hf")

# Load an example image
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# Run inference
depth = pipe(image)["depth"]
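The `depth` entry returned by the pipeline is a PIL image intended for viewing. When a metric depth map is instead held as an array of distances in meters, one option for saving or displaying it is to clip it to a chosen range and rescale to 8 bits. The sketch below assumes a NumPy array as input; the helper name `depth_to_uint8` and the 80 m default range are illustrative assumptions, not part of the transformers API.

```python
import numpy as np


def depth_to_uint8(depth_m: np.ndarray, max_depth_m: float = 80.0) -> np.ndarray:
    """Map depths in meters to 0..255, clipping at max_depth_m (an assumed range)."""
    if max_depth_m <= 0:
        raise ValueError("max_depth_m must be positive")
    clipped = np.clip(depth_m.astype(np.float32), 0.0, max_depth_m)
    return np.round(clipped / max_depth_m * 255.0).astype(np.uint8)


# Synthetic 2x3 depth map in meters, standing in for a real prediction.
example = np.array([[0.0, 20.0, 40.0], [60.0, 80.0, 120.0]])
gray = depth_to_uint8(example)
```

The resulting array can be passed to `Image.fromarray` to obtain a grayscale image. Values beyond the chosen range saturate at 255, so the range should be picked to match the scene.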

Advanced Usage

You can also use the model and processor classes:

from transformers import AutoImageProcessor, AutoModelForDepthEstimation
import torch
import numpy as np
from PIL import Image
import requests

# Load an example image
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

image_processor = AutoImageProcessor.from_pretrained("depth-anything/Depth-Anything-V2-Metric-Outdoor-Small-hf")
model = AutoModelForDepthEstimation.from_pretrained("depth-anything/Depth-Anything-V2-Metric-Outdoor-Small-hf")

# Prepare the image for the model
inputs = image_processor(images=image, return_tensors="pt")

# Run inference without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)
    predicted_depth = outputs.predicted_depth

# Interpolate the prediction to the original image size
prediction = torch.nn.functional.interpolate(
    predicted_depth.unsqueeze(1),
    size=image.size[::-1],
    mode="bicubic",
    align_corners=False,
)
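The shape handling in the interpolation call can be checked without downloading the model. The sketch below substitutes a random tensor for `outputs.predicted_depth` and a hypothetical 640x480 image size; both are assumptions for illustration. `predicted_depth` has shape `(batch, height, width)`, so `unsqueeze(1)` adds the channel dimension that `interpolate` expects, and because PIL reports an image size as `(width, height)`, reversing it gives the `(height, width)` target.

```python
import torch

# Stand-in for outputs.predicted_depth: (batch, height, width), values in meters.
predicted_depth = torch.rand(1, 37, 49) * 80.0

# Stand-in for image.size, which PIL reports as (width, height).
image_size = (640, 480)

prediction = torch.nn.functional.interpolate(
    predicted_depth.unsqueeze(1),  # -> (batch, 1, height, width)
    size=image_size[::-1],         # -> (height, width) = (480, 640)
    mode="bicubic",
    align_corners=False,
)

# Drop the batch and channel dimensions to get a 2-D depth map as a NumPy array.
depth_map = prediction.squeeze().cpu().numpy()
print(depth_map.shape)  # (480, 640)
```

Bicubic interpolation can overshoot slightly near sharp edges, so the resized map may contain values a little outside the range of the raw prediction.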

For more code examples, please refer to the documentation.

📚 Documentation

Model description

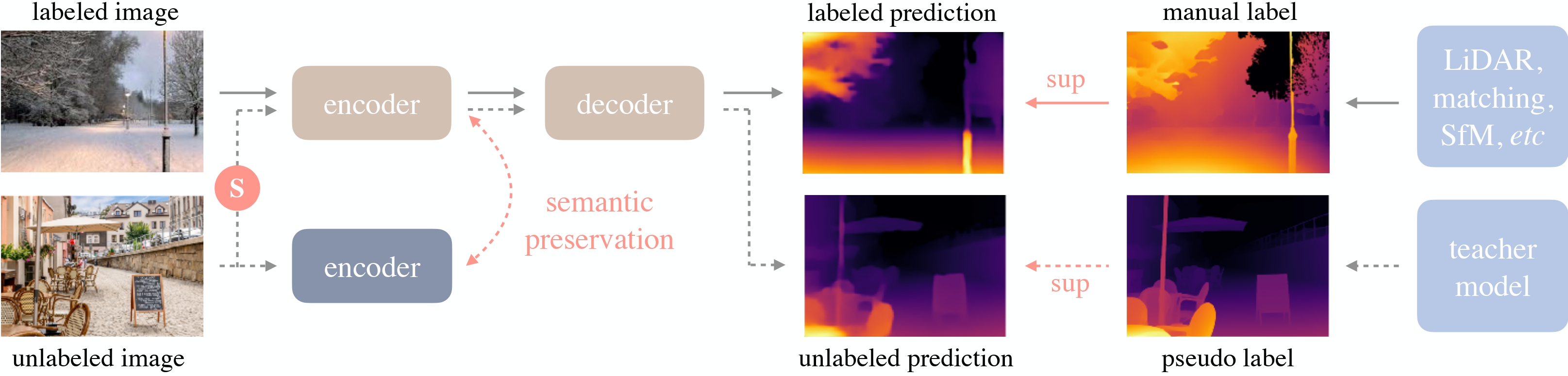

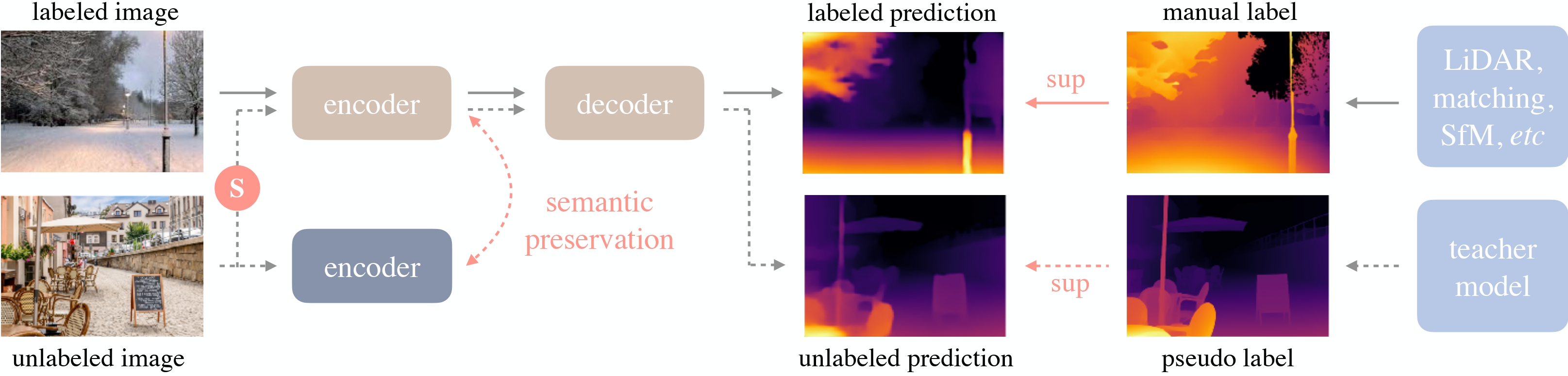

Depth Anything V2 is built on the DPT architecture with a DINOv2 backbone. It is trained on approximately 600K synthetic labeled images and 62 million real unlabeled images, and achieves state-of-the-art results in both relative and absolute depth estimation.

Depth Anything overview. Taken from the original paper.


Intended uses & limitations

You can use the raw model for zero-shot depth estimation. Check the model hub for other versions that suit your needs.

📄 License

The model is subject to the relevant license terms of the original paper and the repository.

📦 Available Models

📖 Citation

@article{depth_anything_v2,
  title={Depth Anything V2},
  author={Yang, Lihe and Kang, Bingyi and Huang, Zilong and Zhao, Zhen and Xu, Xiaogang and Feng, Jiashi and Zhao, Hengshuang},
  journal={arXiv:2406.09414},
  year={2024}
}

@inproceedings{depth_anything_v1,
  title={Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data},
  author={Yang, Lihe and Kang, Bingyi and Huang, Zilong and Xu, Xiaogang and Feng, Jiashi and Zhao, Hengshuang},
  booktitle={CVPR},
  year={2024}
}
