
🚀 Hymba-1.5B-Base

Hymba-1.5B-Base is a base text-to-text model designed for a variety of natural language generation tasks. It combines a hybrid-head architecture (Mamba and attention heads running in parallel) with learnable meta tokens. The model is ready for commercial use.

🚀 Quick Start

Step 1: Environment Setup

Since Hymba-1.5B-Base uses FlexAttention, which relies on PyTorch 2.5 and other related dependencies, we provide two ways to set up the environment:

- [Local install] Install the related packages using our provided `setup.sh` (supports CUDA 12.1/12.4):

```bash
wget --header="Authorization: Bearer YOUR_HF_TOKEN" https://huggingface.co/nvidia/Hymba-1.5B-Base/resolve/main/setup.sh
bash setup.sh
```

- [Docker] A Docker image with all of Hymba's dependencies installed is provided. You can pull the image and start a container with the following commands:

```bash
docker pull ghcr.io/tilmto/hymba:v1
docker run --gpus all -v /home/$USER:/home/$USER -it ghcr.io/tilmto/hymba:v1 bash
```
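Whichever route you take, it can help to confirm that the environment actually provides PyTorch 2.5+ and FlexAttention before loading the model. The script below is a minimal sketch, not part of the official setup; the helper names `parse_major_minor` and `check_environment` are hypothetical. It relies on FlexAttention being exposed as `torch.nn.attention.flex_attention` in PyTorch 2.5 and later.

```python
import importlib.util


def parse_major_minor(version: str) -> tuple:
    # "2.5.1+cu124" -> (2, 5); the local "+cuXXX" suffix and patch level are ignored
    major, minor = version.split("+")[0].split(".")[:2]
    return int(major), int(minor)


def check_environment(min_torch=(2, 5)) -> dict:
    """Report whether the current Python environment looks ready for Hymba."""
    report = {"torch_installed": importlib.util.find_spec("torch") is not None}
    if not report["torch_installed"]:
        return report

    import torch

    report["torch_version"] = torch.__version__
    report["torch_ok"] = parse_major_minor(torch.__version__) >= tuple(min_torch)
    report["cuda_available"] = torch.cuda.is_available()
    report["cuda_build"] = torch.version.cuda  # e.g. "12.4"; None for CPU-only builds

    # FlexAttention lives in torch.nn.attention.flex_attention from PyTorch 2.5 onward;
    # on older builds the parent package may be missing, which raises ModuleNotFoundError
    try:
        spec = importlib.util.find_spec("torch.nn.attention.flex_attention")
    except ModuleNotFoundError:
        spec = None
    report["flex_attention_available"] = spec is not None
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Run it inside the Docker container or after `setup.sh`; `torch_ok`, `cuda_available`, and `flex_attention_available` should all be `True`.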

Step 2: Chat with Hymba-1.5B-Base

After setting up the environment, you can use the following script to chat with the model:

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the tokenizer and model (trust_remote_code is needed for Hymba's custom modeling code)
repo_name = "nvidia/Hymba-1.5B-Base"
tokenizer = AutoTokenizer.from_pretrained(repo_name, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(repo_name, trust_remote_code=True)
model = model.cuda().to(torch.bfloat16)

# Chat with Hymba: read a prompt from stdin
prompt = input()
inputs = tokenizer(prompt, return_tensors="pt").to("cuda")

# Greedy decoding (do_sample=False), so no temperature is passed;
# max_new_tokens limits only the generated text, not the prompt
outputs = model.generate(**inputs, max_new_tokens=64, do_sample=False, use_cache=True)

# Decode only the newly generated tokens, skipping the echoed prompt
response = tokenizer.decode(outputs[0][inputs["input_ids"].shape[1]:], skip_special_tokens=True)
print(f"Model response: {response}")
```
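With `do_sample=False`, `generate` decodes greedily and ignores `temperature` (Transformers emits a warning if you pass it anyway). If you want sampled output instead, a small helper like the hypothetical `build_generation_kwargs` below keeps the two modes consistent; `max_new_tokens`, `do_sample`, `temperature`, `top_p`, and `use_cache` are standard `generate` arguments, but the helper itself is only a sketch.

```python
def build_generation_kwargs(max_new_tokens: int = 64, temperature=None, top_p=None) -> dict:
    """Build keyword arguments for model.generate().

    temperature and top_p only take effect when sampling, so passing either
    one switches on do_sample=True; otherwise decoding stays greedy.
    """
    kwargs = {"max_new_tokens": max_new_tokens, "use_cache": True}
    if temperature is None and top_p is None:
        kwargs["do_sample"] = False  # greedy decoding: deterministic output
        return kwargs
    kwargs["do_sample"] = True
    if temperature is not None:
        kwargs["temperature"] = temperature
    if top_p is not None:
        kwargs["top_p"] = top_p
    return kwargs
```

For example, `model.generate(**inputs, **build_generation_kwargs(temperature=0.7))` samples at temperature 0.7, while `build_generation_kwargs()` reproduces the greedy settings.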

✨ Features

- Hybrid Architecture: The model has a hybrid architecture with Mamba and Attention heads running in parallel.

- Meta Tokens: A set of learnable tokens prepended to every prompt, which help improve the efficacy of the model.

- Shared KV Cache: The model shares the KV cache across pairs of layers and between heads within a single layer.

- Sliding Window Attention: 90% of the attention layers use sliding window attention.

- Commercial Use: This model is ready for commercial use.
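To make the meta-token idea concrete, here is a minimal NumPy sketch of prepending a fixed set of learnable vectors to every prompt's embeddings. The toy width and the number of meta tokens are hypothetical illustration values, not Hymba's settings; in a real model `meta_tokens` would be trainable parameters.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 8   # toy width; Hymba-1.5B-Base uses an embedding size of 1600
num_meta = 4  # hypothetical number of meta tokens, for illustration only

# Stand-in for learnable parameters: the same rows are reused for every prompt
meta_tokens = rng.normal(scale=0.02, size=(num_meta, d_model))


def prepend_meta_tokens(token_embeddings: np.ndarray, meta: np.ndarray) -> np.ndarray:
    # (seq_len, d_model) -> (num_meta + seq_len, d_model)
    return np.concatenate([meta, token_embeddings], axis=0)


prompt_embeddings = rng.normal(size=(5, d_model))
extended = prepend_meta_tokens(prompt_embeddings, meta_tokens)
print(extended.shape)  # (9, 8)
```

Because the meta tokens occupy the first positions, a causal attention mask lets every prompt token attend to them.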

📚 Documentation

Model Overview

Hymba-1.5B-Base is a base text-to-text model that can be adopted for a variety of natural language generation tasks.

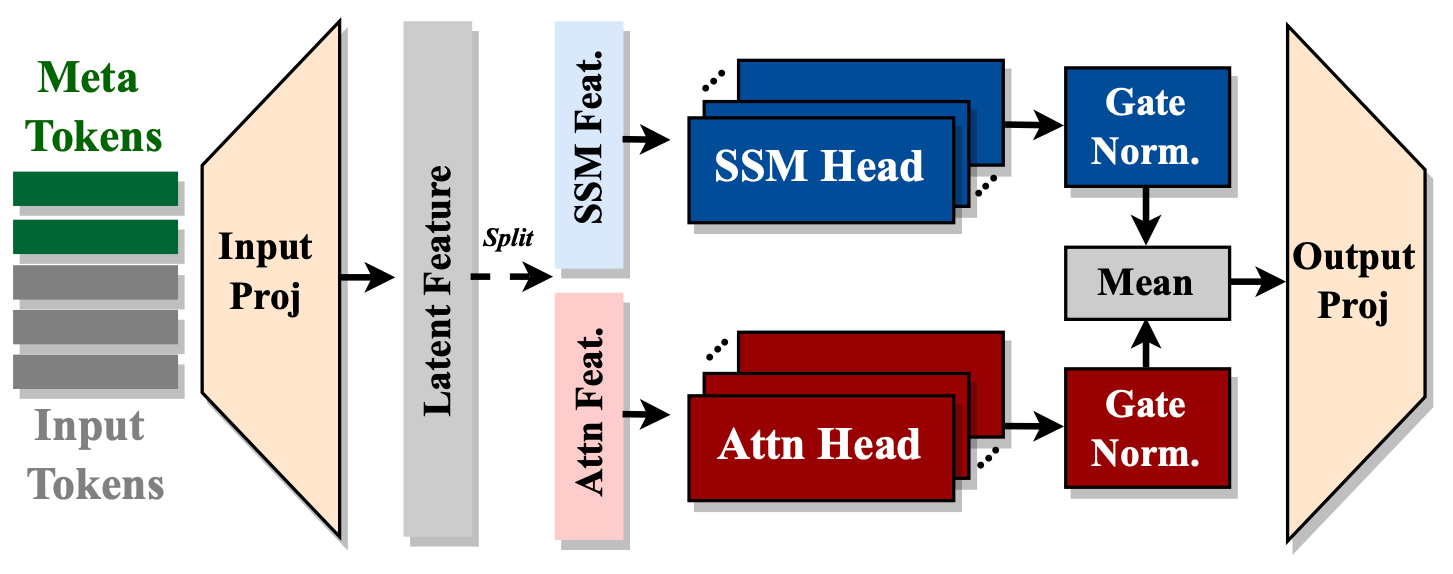

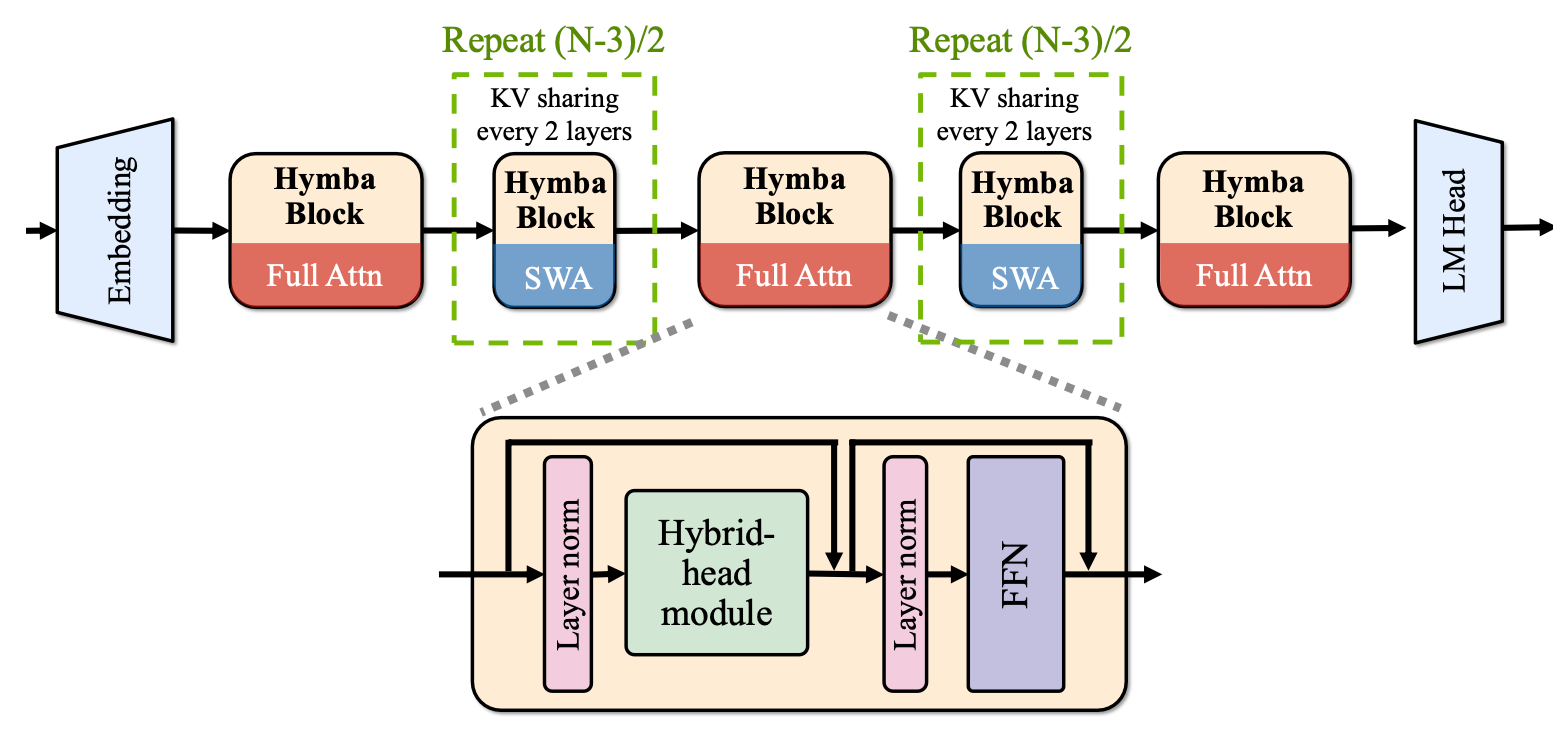

The model has a hybrid architecture with Mamba and attention heads running in parallel. Meta tokens, a set of learnable tokens prepended to every prompt, help improve the efficacy of the model. The model shares the KV cache across pairs of layers and between heads within a single layer, and 90% of its attention layers use sliding window attention.
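The difference between full (global) attention and sliding window attention comes down to the attention mask. The NumPy sketch below builds both masks; the window size is a hypothetical parameter, since this card does not state the window Hymba uses.

```python
import numpy as np


def causal_mask(seq_len: int) -> np.ndarray:
    # True where query i may attend to key j (j <= i)
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return j <= i


def sliding_window_mask(seq_len: int, window: int) -> np.ndarray:
    # Still causal, but each query sees only the `window` most recent keys (itself included)
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return (j <= i) & (i - j < window)


full = causal_mask(6)
local = sliding_window_mask(6, window=3)
print(full.sum(), local.sum())  # 21 15
```

In a sliding window layer, the number of keys each query can see (and therefore the KV cache that layer must keep) is bounded by the window size instead of growing with the sequence length, which is where the memory savings come from.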

This model is ready for commercial use.

Model Developer: NVIDIA

Model Dates: Hymba-1.5B-Base was trained between September 1, 2024 and November 10, 2024.

License: This model is released under the NVIDIA Open Model License Agreement.

Model Architecture

We've released a minimal implementation of Hymba on GitHub, barebones-hymba, to help developers understand its design principles and apply them in their own models.

Hymba-1.5B-Base has an embedding size of 1600, 25 attention heads, an MLP intermediate dimension of 5504, and 32 layers in total with 16 SSM states. Three of the layers use full attention; the rest use sliding window attention. Unlike a standard Transformer, each attention layer in Hymba combines standard attention heads and Mamba heads running in parallel. The model also uses Grouped-Query Attention (GQA) and Rotary Position Embeddings (RoPE).
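A quick worked check of these numbers: assuming the usual convention that each head's dimension is the embedding size divided by the number of heads, each head is 1600 / 25 = 64 wide, and 3 full-attention layers out of 32 leaves 29 sliding window layers, about 90.6%, which matches the "90%" figure above. The dataclass below only records the stated values; its field names are illustrative and are not the keys of the released Hugging Face config.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HymbaShapeSketch:
    # Values from the text above; field names are illustrative, not the HF config keys
    hidden_size: int = 1600
    num_attention_heads: int = 25
    intermediate_size: int = 5504
    num_layers: int = 32
    ssm_state_size: int = 16
    num_full_attention_layers: int = 3

    @property
    def head_dim(self) -> int:
        # Assumes head_dim = hidden_size / num_attention_heads
        assert self.hidden_size % self.num_attention_heads == 0
        return self.hidden_size // self.num_attention_heads

    @property
    def num_sliding_window_layers(self) -> int:
        return self.num_layers - self.num_full_attention_layers

    @property
    def sliding_window_fraction(self) -> float:
        return self.num_sliding_window_layers / self.num_layers


cfg = HymbaShapeSketch()
print(cfg.head_dim, cfg.num_sliding_window_layers, f"{cfg.sliding_window_fraction:.1%}")  # 64 29 90.6%
```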

Features of this architecture:

- Fused Processing: Fuse attention heads and SSM heads within the same layer, offering parallel and complementary processing of the same inputs.

- Meta Tokens: Introduce meta tokens that are prepended to the input sequences and interact with all subsequent tokens, thus storing important information and alleviating the burden of "forced-to-attend" in attention.

- Efficient Memory and Computation: Integrate with cross-layer KV sharing and global-local attention to further boost memory and computation efficiency.
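The fused-processing idea can be illustrated with a toy NumPy layer in which an attention head and an SSM head read the same input in parallel and their outputs are combined. This is a simplified sketch, not Hymba's implementation: the Mamba head is replaced by a plain, non-selective diagonal linear recurrence, and the fusion rule (per-branch RMS normalization, scalar weights `beta_attn`/`beta_ssm`, then averaging) is an assumption chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d_model, d_state = 5, 8, 4  # toy sizes


def rms_norm(x, eps=1e-6):
    return x / np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)


def attention_head(x, wq, wk, wv):
    # Single causal softmax attention head
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    allowed = np.tril(np.ones((len(x), len(x)), dtype=bool))
    scores = np.where(allowed, scores, -np.inf)
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def ssm_head(x, a, b, c):
    # Simplified stand-in for a Mamba head (no input-dependent selection):
    # h_t = a * h_{t-1} + x_t @ b,   y_t = h_t @ c
    h = np.zeros(b.shape[1])
    ys = []
    for x_t in x:
        h = a * h + x_t @ b
        ys.append(h @ c)
    return np.stack(ys)


def hybrid_layer(x, params, beta_attn=1.0, beta_ssm=1.0):
    # Both head types process the same input in parallel, then are fused
    y_attn = attention_head(x, *params["attn"])
    y_ssm = ssm_head(x, *params["ssm"])
    fused = (beta_attn * rms_norm(y_attn) + beta_ssm * rms_norm(y_ssm)) / 2
    return fused @ params["w_out"]


params = {
    "attn": [rng.normal(size=(d_model, d_model)) for _ in range(3)],
    "ssm": (
        rng.uniform(0.5, 0.99, size=d_state),  # per-state decay
        rng.normal(size=(d_model, d_state)),   # input projection
        rng.normal(size=(d_state, d_model)),   # output projection
    ),
    "w_out": rng.normal(size=(d_model, d_model)),
}
x = rng.normal(size=(seq_len, d_model))
y = hybrid_layer(x, params)
print(y.shape)  # (5, 8)
```

Both branches are causal, so changing a later token never affects earlier outputs; the attention branch gives precise access to any visible token, while the recurrent branch carries a compact running summary of the whole prefix.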

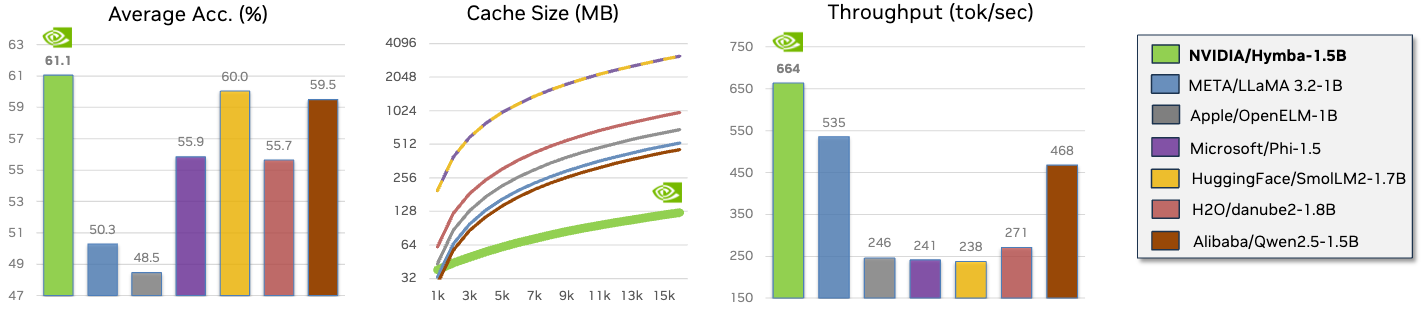

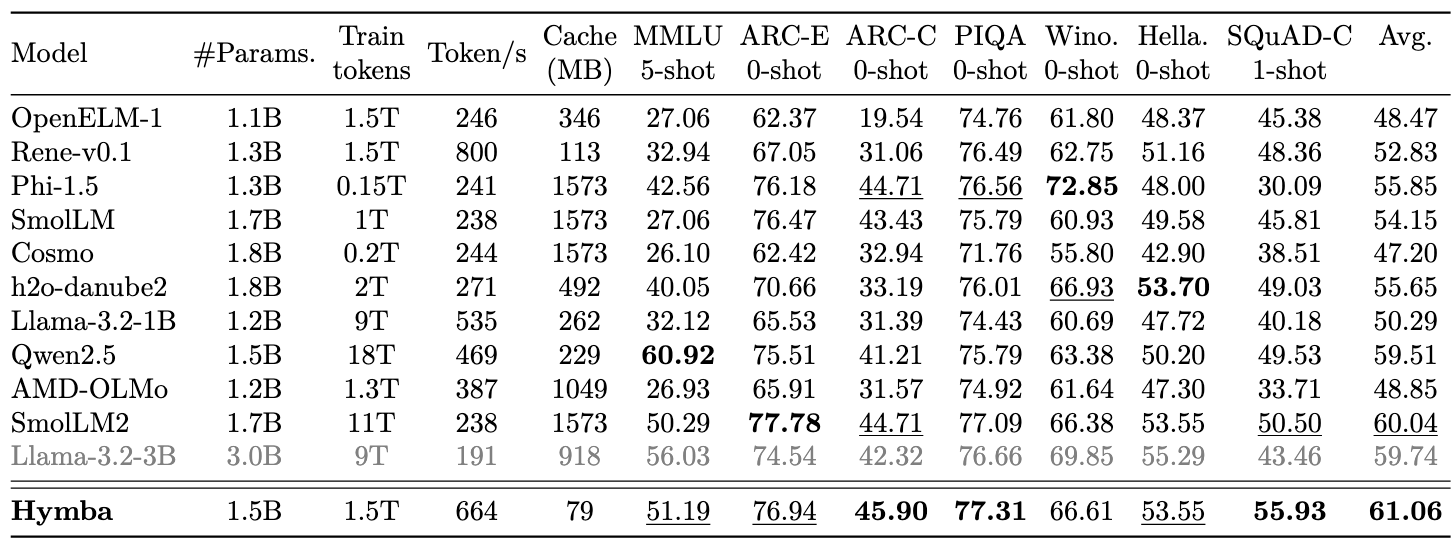

Performance Highlights

- Hymba-1.5B-Base outperforms all sub-2B public models.

Finetuning Hymba

LMFlow is a complete pipeline for fine-tuning large language models.

The following steps show an example of how to fine-tune Hymba-1.5B-Base using LMFlow.

- Using Docker

```bash
docker pull ghcr.io/tilmto/hymba:v1
docker run --gpus all -v /home/$USER:/home/$USER -it ghcr.io/tilmto/hymba:v1 bash
```

- Install LMFlow

```bash
git clone https://github.com/OptimalScale/LMFlow.git
cd LMFlow
conda create -n lmflow python=3.9 -y
conda activate lmflow
conda install mpi4py
pip install -e .
```

- Fine-tune the model using the following command:

```bash
cd LMFlow
bash ./scripts/run_finetune_hymba.sh
```

With LMFlow, you can also fine-tune the model on your custom dataset; you only need to convert your dataset into the LMFlow data format. In addition to full fine-tuning, you can fine-tune Hymba efficiently with DoRA, LoRA, LISA, Flash Attention, and other acceleration techniques. For more details, please refer to the LMFlow for Hymba documentation.
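As a starting point for converting a custom dataset, the sketch below writes records into the JSON layout we understand LMFlow to use: a top-level `"type"` (such as `"text2text"` or `"text_only"`) plus a list of `"instances"`. Treat this layout as an assumption and check it against the current LMFlow data-format documentation; the function names and the output path are hypothetical.

```python
import json
from pathlib import Path


def _write_json(data: dict, out_path) -> dict:
    out_path = Path(out_path)
    out_path.parent.mkdir(parents=True, exist_ok=True)
    out_path.write_text(json.dumps(data, ensure_ascii=False, indent=2), encoding="utf-8")
    return data


def write_lmflow_text2text(pairs, out_path) -> dict:
    """Write (input, output) pairs in the assumed LMFlow "text2text" layout."""
    data = {
        "type": "text2text",
        "instances": [{"input": src, "output": tgt} for src, tgt in pairs],
    }
    return _write_json(data, out_path)


def write_lmflow_text_only(texts, out_path) -> dict:
    """Write raw strings in the assumed LMFlow "text_only" layout (e.g. plain-text corpora)."""
    data = {"type": "text_only", "instances": [{"text": t} for t in texts]}
    return _write_json(data, out_path)


if __name__ == "__main__":
    # Hypothetical example path; point your fine-tuning script at the directory you choose
    write_lmflow_text2text(
        [("Translate to French: cat", "chat"), ("Translate to French: dog", "chien")],
        "data/my_dataset/train/train.json",
    )
```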

Evaluation

We use LM Evaluation Harness to evaluate the model. The evaluation commands are as follows:

```bash
git clone --depth 1 https://github.com/EleutherAI/lm-evaluation-harness
cd lm-evaluation-harness
git fetch --all --tags
git checkout tags/v0.4.4  # the squad_completion task is not compatible with the latest version
pip install -e .
```

```bash
lm_eval --model hf --model_args pretrained=nvidia/Hymba-1.5B-Base,dtype=bfloat16,trust_remote_code=True \
    --tasks mmlu \
    --num_fewshot 5 \
    --batch_size 1 \
    --output_path ./hymba_HF_base_lm-results \
    --log_samples

lm_eval --model hf --model_args pretrained=nvidia/Hymba-1.5B-Base,dtype=bfloat16,trust_remote_code=True \
    --tasks arc_easy,arc_challenge,piqa,winogrande,hellaswag \
    --num_fewshot 0 \
    --batch_size 1 \
    --output_path ./hymba_HF_base_lm-results \
    --log_samples

lm_eval --model hf --model_args pretrained=nvidia/Hymba-1.5B-Base,dtype=bfloat16,trust_remote_code=True \
    --tasks squad_completion \
    --num_fewshot 1 \
    --batch_size 1 \
    --output_path ./hymba_HF_base_lm-results \
    --log_samples
```
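The three commands differ only in `--tasks` and `--num_fewshot`. If you prefer to drive them from one script, the sketch below rebuilds exactly those command lines (same flags and values as above) and prints them, running them only when `dry_run=False`. The script and its names are a convenience sketch, not part of the official evaluation setup.

```python
import shlex
import subprocess

MODEL_ARGS = "pretrained=nvidia/Hymba-1.5B-Base,dtype=bfloat16,trust_remote_code=True"
OUTPUT_PATH = "./hymba_HF_base_lm-results"

# (tasks, num_fewshot) pairs taken from the commands above
EVAL_SUITES = [
    ("mmlu", 5),
    ("arc_easy,arc_challenge,piqa,winogrande,hellaswag", 0),
    ("squad_completion", 1),
]


def build_lm_eval_command(tasks: str, num_fewshot: int, batch_size: int = 1) -> list:
    return [
        "lm_eval", "--model", "hf",
        "--model_args", MODEL_ARGS,
        "--tasks", tasks,
        "--num_fewshot", str(num_fewshot),
        "--batch_size", str(batch_size),
        "--output_path", OUTPUT_PATH,
        "--log_samples",
    ]


def run_all(dry_run: bool = True) -> None:
    for tasks, shots in EVAL_SUITES:
        cmd = build_lm_eval_command(tasks, shots)
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop on the first failing evaluation


if __name__ == "__main__":
    run_all(dry_run=True)
```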

Limitations

The model was trained on data that contains toxic language, unsafe content, and societal biases originally crawled from the internet. Therefore, the model may amplify those biases and return toxic responses, especially when given toxic prompts. The model may also generate answers that are inaccurate, omit key information, or include irrelevant or redundant text, and it may produce socially unacceptable or undesirable text even if the prompt itself does not include anything explicitly offensive.

Testing suggests that this model is susceptible to jailbreak attacks. If you use this model in a RAG or agentic setting, we recommend strong output validation controls to ensure that the security and safety risks from user-controlled model outputs are consistent with the intended use cases.
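One common form of output validation in an agentic setting is to treat every model-proposed action as untrusted and accept it only if it exactly matches an allowlist. The sketch below shows that pattern for tool calls encoded as JSON; the tool names, argument schema, and the `{"tool": ..., "arguments": ...}` shape are hypothetical, and a production system would layer further checks on top.

```python
import json

# Hypothetical allowlist for an agent: tool name -> {argument name: expected type}
ALLOWED_TOOLS = {
    "search_docs": {"query": str},
    "get_order_status": {"order_id": str},
}


def validate_tool_call(raw_output: str, max_chars: int = 2000):
    """Check a model-proposed tool call (JSON text) before executing it.

    Returns (ok, reason). Anything that does not exactly match the
    allowlist is rejected rather than repaired.
    """
    if len(raw_output) > max_chars:
        return False, "output too long"
    try:
        call = json.loads(raw_output)
    except json.JSONDecodeError:
        return False, "not valid JSON"
    if not isinstance(call, dict):
        return False, "expected a JSON object"
    tool = call.get("tool")
    if not isinstance(tool, str) or tool not in ALLOWED_TOOLS:
        return False, "tool not in allowlist"
    schema = ALLOWED_TOOLS[tool]
    args = call.get("arguments")
    if not isinstance(args, dict) or set(args) != set(schema):
        return False, "unexpected or missing arguments"
    for name, expected_type in schema.items():
        if not isinstance(args[name], expected_type):
            return False, f"argument {name!r} has the wrong type"
    return True, "ok"
```

Rejecting instead of repairing malformed calls keeps a jailbroken or confused model from steering the agent into actions outside the intended use case.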

Ethical Considerations

NVIDIA believes Trustworthy AI is a shared responsibility and we have established policies and practices to enable development for a wide array of AI applications. When downloaded or used in accordance with our terms of service, developers should work with their internal model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse. Please report security vulnerabilities or NVIDIA AI Concerns here.

📄 License

This model is released under the NVIDIA Open Model License Agreement.

📚 Citation

```bibtex
@misc{dong2024hymbahybridheadarchitecturesmall,
  title={Hymba: A Hybrid-head Architecture for Small Language Models},
  author={Xin Dong and Yonggan Fu and Shizhe Diao and Wonmin Byeon and Zijia Chen and Ameya Sunil Mahabaleshwarkar and Shih-Yang Liu and Matthijs Van Keirsbilck and Min-Hung Chen and Yoshi Suhara and Yingyan Lin and Jan Kautz and Pavlo Molchanov},
  year={2024},
  eprint={2411.13676},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2411.13676},
}
```

| Property | Details |
|---|---|
| Model Type | Text-to-text generation model |
| Training Dates | Between September 1, 2024 and November 10, 2024 |
| License | NVIDIA Open Model License Agreement |

⚠️ Important Note

The model was trained on data with toxic language, unsafe content, and societal biases. It may generate toxic responses, especially when given toxic prompts. Also, it's susceptible to jailbreak attacks. Strong output validation controls are recommended in RAG or agentic settings.

💡 Usage Tip

When fine-tuning the model using LMFlow, make sure to convert your custom dataset into the LMFlow data format.
