🚀 Minerva-7B-instruct-v1.0

Minerva is the first family of large language models (LLMs) pretrained from scratch on Italian. It was developed by Sapienza NLP within the Future Artificial Intelligence Research (FAIR) project, in collaboration with CINECA and with additional contributions from Babelscape and the CREATIVE PRIN Project. The Minerva models are truly open (data and model) Italian-English LLMs, with about half of the pretraining data being Italian text.

🚀 Quick Start

This is the model card for Minerva-7B-instruct-v1.0, a 7-billion-parameter model whose base model was pretrained on nearly 2.5 trillion tokens (1.14 trillion in Italian, 1.14 trillion in English, and 200 billion in code).

✨ Features

Model Family

This model is part of the Minerva LLM family, which includes the 350M, 1B, 3B, and 7B models listed below.

Model Architecture

Minerva-7B-base-v1.0 is a Transformer model based on the Mistral architecture. Check the configuration file for a detailed breakdown of the hyperparameters.

Architecture details of the Minerva LLM family:

| Model Name | Tokens | Layers | Hidden Size | Attention Heads | KV Heads | Sliding Window | Max Context Length |
|---|---|---|---|---|---|---|---|
| Minerva-350M-base-v1.0 | 70B (35B it + 35B en) | 16 | 1152 | 16 | 4 | 2048 | 16384 |
| Minerva-1B-base-v1.0 | 200B (100B it + 100B en) | 16 | 2048 | 16 | 4 | 2048 | 16384 |
| Minerva-3B-base-v1.0 | 660B (330B it + 330B en) | 32 | 2560 | 32 | 8 | 2048 | 16384 |
| Minerva-7B-base-v1.0 | 2.48T (1.14T it + 1.14T en + 200B code) | 32 | 4096 | 32 | 8 | None | 4096 |
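The table leaves a few derived quantities implicit. The sketch below computes them under stated assumptions: each attention head has dimension hidden_size / attention_heads (the usual Mistral convention), and the KV cache stores one key and one value vector per KV head, per layer, in bfloat16 (2 bytes per value). These are back-of-the-envelope estimates, not measurements, and they ignore any savings a rolling sliding-window cache could provide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MinervaArch:
    """Architecture numbers transcribed from the table above."""
    name: str
    layers: int
    hidden_size: int
    attention_heads: int
    kv_heads: int
    max_context: int

    @property
    def head_dim(self) -> int:
        # Assumption: head_dim = hidden_size / attention_heads (Mistral default)
        return self.hidden_size // self.attention_heads

    @property
    def queries_per_kv_head(self) -> int:
        # Grouped-query attention: several query heads share one KV head
        return self.attention_heads // self.kv_heads

    def kv_cache_bytes_per_token(self, bytes_per_value: int = 2) -> int:
        # 2 tensors (K and V) x layers x KV heads x head_dim x bytes (bf16 = 2)
        return 2 * self.layers * self.kv_heads * self.head_dim * bytes_per_value


MODELS = [
    MinervaArch("Minerva-350M-base-v1.0", 16, 1152, 16, 4, 16384),
    MinervaArch("Minerva-1B-base-v1.0", 16, 2048, 16, 4, 16384),
    MinervaArch("Minerva-3B-base-v1.0", 32, 2560, 32, 8, 16384),
    MinervaArch("Minerva-7B-base-v1.0", 32, 4096, 32, 8, 4096),
]

for m in MODELS:
    per_token = m.kv_cache_bytes_per_token()
    # Full (non-rolling) cache at the maximum context length, in MiB
    full_context_mib = per_token * m.max_context / 2**20
    print(
        f"{m.name}: head_dim={m.head_dim}, "
        f"queries per KV head={m.queries_per_kv_head}, "
        f"KV cache={per_token / 1024:.0f} KiB/token, "
        f"{full_context_mib:.0f} MiB at {m.max_context} tokens"
    )
```

Under these assumptions, Minerva-7B has a head dimension of 128, four query heads per KV head, and about 128 KiB of KV cache per token, i.e. roughly 512 MiB for a full 4096-token context.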

Model Training

Minerva-7B-base-v1.0 was trained using llm-foundry 0.8.0 from MosaicML. The training hyperparameters for each model in the family are:

| Model Name | Optimizer | lr | betas | eps | Weight Decay | Scheduler | Warmup Steps | Batch Size (Tokens) | Total Steps |
|---|---|---|---|---|---|---|---|---|---|
| Minerva-350M-base-v1.0 | Decoupled AdamW | 2e-4 | (0.9, 0.95) | 1e-8 | 0.0 | Cosine | 2% | 4M | 16,690 |
| Minerva-1B-base-v1.0 | Decoupled AdamW | 2e-4 | (0.9, 0.95) | 1e-8 | 0.0 | Cosine | 2% | 4M | 47,684 |
| Minerva-3B-base-v1.0 | Decoupled AdamW | 2e-4 | (0.9, 0.95) | 1e-8 | 0.0 | Cosine | 2% | 4M | 157,357 |
| Minerva-7B-base-v1.0 | AdamW | 3e-4 | (0.9, 0.95) | 1e-5 | 0.1 | Cosine | 2000 | 4M | 591,558 |
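As a sanity check, multiplying the batch size by the number of steps should recover the token counts in the architecture table. The sketch below assumes that "4M" means 2^22 = 4,194,304 tokens per batch. It also sketches a linear-warmup plus cosine-decay learning-rate schedule with the 7B values (peak lr 3e-4, 2000 warmup steps, 591,558 total steps); the linear warmup shape and the decay floor of 0.0 are illustrative assumptions, not confirmed details of the llm-foundry configuration.

```python
import math

BATCH_TOKENS = 2**22  # assumption: "4M" in the table means 4,194,304 tokens

# (total steps, token budget) transcribed from the two tables
TRAINING_RUNS = {
    "Minerva-350M-base-v1.0": (16_690, 70e9),
    "Minerva-1B-base-v1.0": (47_684, 200e9),
    "Minerva-3B-base-v1.0": (157_357, 660e9),
    "Minerva-7B-base-v1.0": (591_558, 2.48e12),
}


def tokens_seen(steps: int, batch_tokens: int = BATCH_TOKENS) -> int:
    """Number of training tokens processed after `steps` optimizer steps."""
    return steps * batch_tokens


def cosine_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int,
              min_lr: float = 0.0) -> float:
    """Linear warmup to peak_lr, then cosine decay to min_lr (assumed 0.0)."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for name, (steps, budget) in TRAINING_RUNS.items():
    seen = tokens_seen(steps)
    print(f"{name}: {seen / 1e9:.1f}B tokens seen vs. {budget / 1e9:.0f}B budget")

# Smaller models warm up for 2% of their steps; the 7B model uses a fixed 2000 steps
print("350M warmup steps:", round(0.02 * 16_690))
for step in (0, 1_999, 2_000, 296_779, 591_558):
    print(step, f"{cosine_lr(step, 3e-4, 2_000, 591_558):.2e}")
```

With the 2^22 interpretation, every row lands within 0.1% of its stated token budget, which supports reading "4M" as a power-of-two batch size.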

SFT Training

The SFT model was trained using Llama-Factory on the following data mix (number of examples per language):

| Dataset | Source | Code | English | Italian |
|---|---|---|---|---|
| Glaive-code-assistant | Link | 100,000 | 0 | 0 |
| Alpaca-python | Link | 20,000 | 0 | 0 |
| Alpaca-cleaned | Link | 0 | 50,000 | 0 |
| Databricks-dolly-15k | Link | 0 | 15,011 | 0 |
| No-robots | Link | 0 | 9,499 | 0 |
| OASST2 | Link | 0 | 29,000 | 528 |
| WizardLM | Link | 0 | 29,810 | 0 |
| LIMA | Link | 0 | 1,000 | 0 |
| OPENORCA | Link | 0 | 30,000 | 0 |
| Ultrachat | Link | 0 | 50,000 | 0 |
| MagpieMT | Link | 0 | 30,000 | 0 |
| Tulu-V2-Science | Link | 0 | 7,000 | 0 |
| Aya_datasets | Link | 0 | 3,944 | 738 |
| Tower-blocks_it | Link | 0 | 0 | 7,276 |
| Bactrian-X | Link | 0 | 0 | 67,000 |
| Magpie (Translated by us) | Link | 0 | 0 | 59,070 |
| Everyday-conversations (Translated by us) | Link | 0 | 0 | 2,260 |
| alpaca-gpt4-it | Link | 0 | 0 | 15,000 |
| capybara-claude-15k-ita | Link | 0 | 0 | 15,000 |
| Wildchat | Link | 0 | 0 | 5,000 |
| GPT4_INST | Link | 0 | 0 | 10,000 |
| Italian Safety Instructions | - | 0 | 0 | 21,426 |
| Italian Conversations | - | 0 | 0 | 4,843 |
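To see the overall language balance of the SFT mix, the per-dataset counts can be summed. The short script below simply transcribes the table (treating each number as an example count) and totals each column; it is a convenience sketch, not part of the training code.

```python
# (code, english, italian) example counts transcribed from the SFT table above
SFT_MIX = {
    "Glaive-code-assistant": (100_000, 0, 0),
    "Alpaca-python": (20_000, 0, 0),
    "Alpaca-cleaned": (0, 50_000, 0),
    "Databricks-dolly-15k": (0, 15_011, 0),
    "No-robots": (0, 9_499, 0),
    "OASST2": (0, 29_000, 528),
    "WizardLM": (0, 29_810, 0),
    "LIMA": (0, 1_000, 0),
    "OPENORCA": (0, 30_000, 0),
    "Ultrachat": (0, 50_000, 0),
    "MagpieMT": (0, 30_000, 0),
    "Tulu-V2-Science": (0, 7_000, 0),
    "Aya_datasets": (0, 3_944, 738),
    "Tower-blocks_it": (0, 0, 7_276),
    "Bactrian-X": (0, 0, 67_000),
    "Magpie (Translated by us)": (0, 0, 59_070),
    "Everyday-conversations (Translated by us)": (0, 0, 2_260),
    "alpaca-gpt4-it": (0, 0, 15_000),
    "capybara-claude-15k-ita": (0, 0, 15_000),
    "Wildchat": (0, 0, 5_000),
    "GPT4_INST": (0, 0, 10_000),
    "Italian Safety Instructions": (0, 0, 21_426),
    "Italian Conversations": (0, 0, 4_843),
}

# Sum each column (0 = code, 1 = English, 2 = Italian) across all datasets
totals = [sum(counts[i] for counts in SFT_MIX.values()) for i in range(3)]
grand_total = sum(totals)

for label, total in zip(("Code", "English", "Italian"), totals):
    print(f"{label}: {total:,} examples ({total / grand_total:.1%})")
print(f"Total: {grand_total:,} examples")
```

Summed this way, the mix contains 120,000 code, 255,264 English, and 208,141 Italian examples, 583,405 in total.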

For more details, check our tech page.

Online DPO Training

This model card describes our DPO model. Direct Preference Optimization (DPO) aligns a model with preference data (pairs of preferred and rejected responses), achieving an effect similar to Reinforcement Learning from Human Feedback (RLHF) without the complexity of a reinforcement learning loop. In Online DPO, the preference pairs are produced during training: the current model generates responses, a judge ranks them, and the model is updated on the resulting feedback. We used the Online DPO implementation in the Hugging Face TRL library, with the Skywork/Skywork-Reward-Llama-3.1-8B-v0.2 model as the judge. For this stage, we used prompts from HuggingFaceH4/ultrafeedback_binarized (English), efederici/evol-dpo-ita (Italian), and Babelscape/ALERT translated into Italian, plus manually curated safety data.
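To make the objective concrete, here is a minimal pure-Python sketch of the standard DPO loss for a single preference pair, plus the online step that turns two sampled responses into a (chosen, rejected) pair using a judge's scores. The log-probabilities, judge scores, and beta = 0.1 are hypothetical placeholders; this is not the TRL implementation, and the hyperparameters actually used for Minerva are not stated here.

```python
import math
from typing import Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under the
    policy being trained or the frozen reference model:
        loss = -log sigmoid(beta * [(pi_c - ref_c) - (pi_r - ref_r)])
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


def to_preference_pair(response_a: str, response_b: str,
                       score_a: float, score_b: float) -> Tuple[str, str]:
    """Online step: rank two responses sampled from the current policy by judge score."""
    if score_a >= score_b:
        return response_a, response_b
    return response_b, response_a


# Hypothetical judge scores and log-probabilities, for illustration only
chosen, rejected = to_preference_pair("Roma.", "Milano.", score_a=2.3, score_b=-1.1)
loss = dpo_loss(policy_chosen=-4.0, policy_rejected=-6.0,
                ref_chosen=-4.5, ref_rejected=-5.5)
print(chosen, rejected, round(loss, 4))
```

The loss shrinks as the policy raises the chosen response's log-probability relative to the reference model more than it raises the rejected one's; in the online setting, the pair is regenerated from the current policy at each step instead of coming from a fixed dataset.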

For more details, check our tech page.

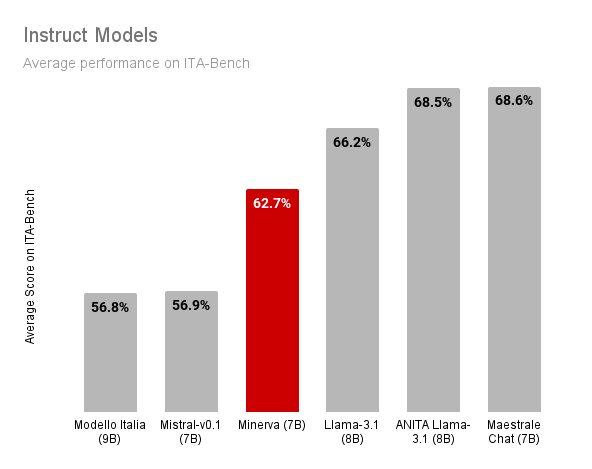

Model Evaluation

To evaluate Minerva, we used ITA-Bench, a new evaluation suite for Italian language models. ITA-Bench comprises 18 benchmarks that assess performance on tasks such as scientific knowledge, commonsense reasoning, and mathematical problem solving.

💻 Usage Examples

Basic Usage

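```python
import torch
import transformers

model_id = "sapienzanlp/Minerva-7B-instruct-v1.0"

# Load the model in bfloat16 and let Accelerate place it on the available devices
pipeline = transformers.pipeline(
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device_map="auto",
)

# A conversation is a list of {"role", "content"} messages;
# the pipeline applies the model's chat template automatically.
# "Qual è la capitale dell'Italia?" = "What is the capital of Italy?"
input_conv = [{"role": "user", "content": "Qual è la capitale dell'Italia?"}]

output = pipeline(
    input_conv,
    max_new_tokens=128,
)

print(output)
```

Output:

```
[{'generated_text': [{'role': 'user', 'content': "Qual è la capitale dell'Italia?"}, {'role': 'assistant', 'content': "La capitale dell'Italia è Roma."}]}]
```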
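The pipeline returns the whole conversation, with the model's answer appended as the last message. The helpers below (hypothetical convenience functions, not part of transformers) pull out that reply and build the next turn of a multi-turn chat. They operate on the example output shown above, so they run without downloading the model.

```python
from typing import Dict, List

Message = Dict[str, str]

# The example pipeline output shown above, copied verbatim
output = [{'generated_text': [
    {'role': 'user', 'content': "Qual è la capitale dell'Italia?"},
    {'role': 'assistant', 'content': "La capitale dell'Italia è Roma."},
]}]


def last_assistant_reply(pipeline_output: List[Dict]) -> str:
    """Return the content of the final assistant message in a chat pipeline result."""
    conversation: List[Message] = pipeline_output[0]["generated_text"]
    for message in reversed(conversation):
        if message["role"] == "assistant":
            return message["content"]
    raise ValueError("no assistant message in the pipeline output")


def next_turn(pipeline_output: List[Dict], user_message: str) -> List[Message]:
    """Copy the returned conversation and append a new user message."""
    conversation = list(pipeline_output[0]["generated_text"])
    conversation.append({"role": "user", "content": user_message})
    return conversation


print(last_assistant_reply(output))

# Follow-up question: "E quanti abitanti ha?" = "And how many inhabitants does it have?"
follow_up = next_turn(output, "E quanti abitanti ha?")
# follow_up can be passed back to pipeline(follow_up, max_new_tokens=128)
```

Copying the list in `next_turn` keeps the original pipeline result unchanged, so earlier turns can be inspected or retried.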

🔧 Technical Details

Bias, Risks, and Limitations

This is a chat foundation model that has undergone alignment and safety risk mitigation. Nevertheless, the model may:

- Overrepresent some viewpoints and underrepresent others
- Contain stereotypes
- Contain personal information
- Generate:
  - Racist and sexist content
  - Hateful, abusive, or violent language
  - Discriminatory or prejudicial language
  - Content that may not be appropriate for all settings, including sexual content
- Make errors, including presenting incorrect information or historical facts as if they were factual
- Generate irrelevant or repetitive outputs

We are aware of the biases and potentially problematic or toxic content in current pretrained large language models. As probabilistic models of (Italian and English) language, they reflect and amplify the biases in their training data. For more information, refer to our survey:

📄 License

The model is licensed under the Apache-2.0 license.
