🚀 Whisper-Large-V3-Distil-Italian-v0.2

A distilled version of Whisper, optimized for Italian speech-to-text, with extended training for long-form transcription.

This is a distilled version of Whisper with 2 decoder layers, specifically optimized for Italian speech-to-text conversion. Training for this version was extended to 30-second audio segments to preserve the ability to perform long-form transcription. The distillation process used a "patient" teacher, meaning longer training times and more aggressive data augmentation, which improved overall performance.

The model uses openai/whisper-large-v3 as the teacher model while keeping the encoder architecture unchanged. This makes it suitable as a draft model for speculative decoding, potentially achieving 2x inference speed while maintaining identical outputs by only adding 2 extra decoder layers and running the encoder just once. It can also serve as a standalone model to trade some accuracy for better efficiency, running 5.8x faster while using only 49% of the parameters. A paper also suggests that the distilled model may actually produce fewer hallucinations than the full model during long-form transcription.

The model has been converted into multiple formats to ensure broad compatibility across libraries, including transformers, openai-whisper, faster-whisper, whisper.cpp, candle, and mlx.

📚 Documentation

Performance

The model was evaluated on both short and long-form transcriptions, using in-distribution (ID) and out-of-distribution (OOD) datasets to assess accuracy, generalizability, and robustness.

Note that Word Error Rate (WER) results shown here are post-normalization, which includes converting text to lowercase and removing symbols and punctuation.
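As a rough illustration of what post-normalization WER means, the sketch below lowercases the text, strips punctuation and symbols, and then computes a word-level edit distance. The `normalize` and `wer` helpers are hypothetical names written for this example, and the normalizer is deliberately simplified; the actual evaluation may use a different one.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and drop punctuation/symbols (a simplified, assumed normalizer)."""
    text = text.lower()
    # Keep word characters (including accented letters) and whitespace.
    text = re.sub(r"[^\w\s]", " ", text)
    return " ".join(text.split())


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance divided by the reference length."""
    ref = normalize(reference).split()
    hyp = normalize(hypothesis).split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Row-by-row dynamic programming for the Levenshtein distance over words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1] / len(ref)


# Punctuation and case differences are ignored after normalization.
print(wer("Ciao, come stai?", "ciao come stai"))
# One substituted word out of three reference words.
print(wer("Buongiorno a tutti!", "buongiorno a tutte"))
```

Without the normalization step, the first pair would count as errors even though the spoken content is identical, which is why the reported numbers are post-normalization.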

All evaluation results on the public datasets can be found here.

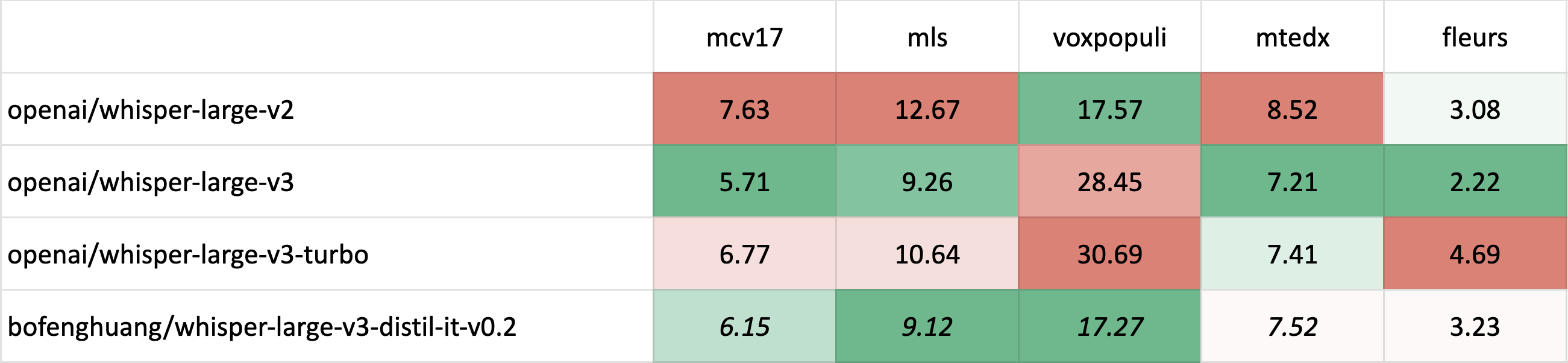

Short-Form Transcription

Italic indicates in-distribution (ID) evaluation, where test sets correspond to data distributions seen during training, typically yielding higher performance than out-of-distribution (OOD) evaluation. Italic with strikethrough denotes potential test set contamination, for example when training and evaluation use different versions of Common Voice, raising the possibility of overlapping data.

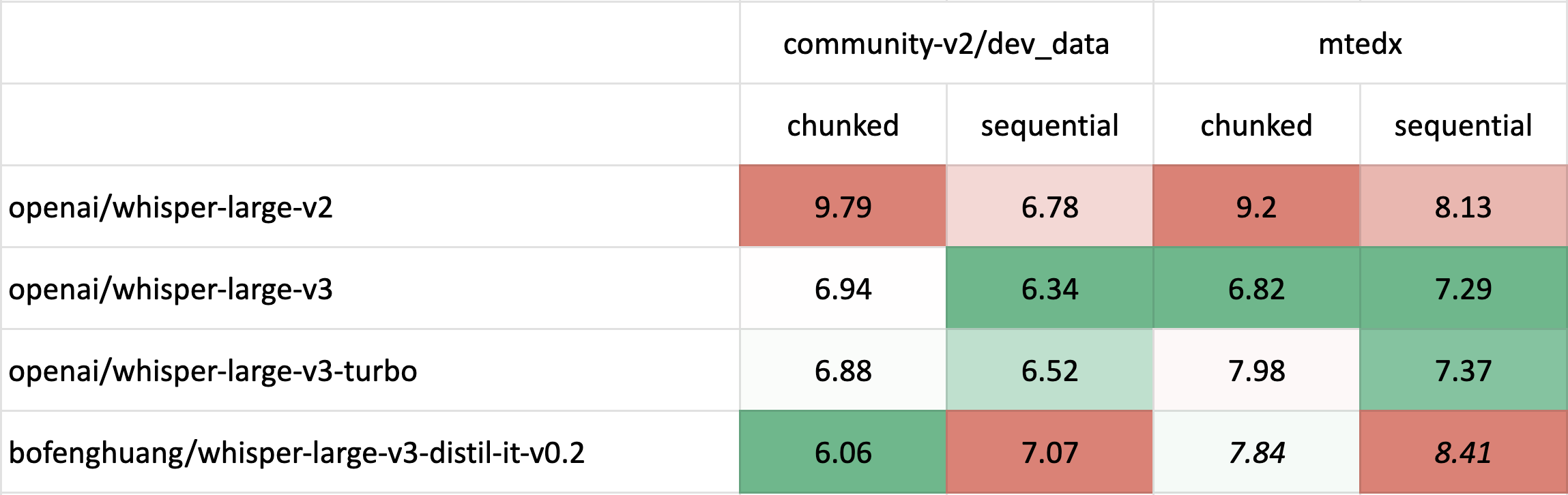

Long-Form Transcription

Long-form transcription evaluation used the 🤗 Hugging Face pipeline with both chunked (chunk_length_s = 30) and original sequential decoding methods.

💻 Usage Examples

Hugging Face Pipeline

The model can be easily used with the 🤗 Hugging Face pipeline class for audio transcription. For long-form transcription (over 30 seconds), it will perform sequential decoding as described in OpenAI's paper. If you need faster inference, you can use the chunk_length_s argument for chunked parallel decoding, which provides 9x faster inference speed but may slightly compromise performance compared to OpenAI's sequential algorithm.

import torch
from datasets import load_dataset
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "bofenghuang/whisper-large-v3-distil-it-v0.2"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Init pipeline
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    # chunk_length_s=30,  # for chunked decoding
    max_new_tokens=128,
)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "it", split="test")
sample = dataset[0]["audio"]

# Run pipeline
result = pipe(sample)
print(result["text"])
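For some intuition about the chunk_length_s option, here is a minimal sketch of how a long recording could be cut into overlapping windows that are transcribed independently and merged afterwards. The `chunk_spans` helper and the overlap of one sixth of the chunk length on each side are assumptions made for illustration, not the pipeline's actual code.

```python
def chunk_spans(num_samples, sampling_rate=16_000, chunk_length_s=30.0, stride_s=None):
    """Return (start, end) sample indices of overlapping chunks covering the audio."""
    if stride_s is None:
        stride_s = chunk_length_s / 6  # assumed overlap on each side of a chunk
    chunk_len = int(round(chunk_length_s * sampling_rate))
    stride = int(round(stride_s * sampling_rate))
    step = chunk_len - 2 * stride  # how far each new chunk advances
    if step <= 0:
        raise ValueError("stride is too large for the chunk length")
    spans = []
    start = 0
    while True:
        end = min(start + chunk_len, num_samples)
        spans.append((start, end))
        if end == num_samples:
            break
        start += step
    return spans


# A 70-second recording sampled at 16 kHz
for start, end in chunk_spans(70 * 16_000):
    print(f"{start / 16_000:.0f}s -> {end / 16_000:.0f}s")
```

Because the chunks do not depend on each other, they can be batched through the model in parallel, which is where the speed-up comes from. The overlapping regions give the merging step some context at each boundary, which is also where small accuracy losses relative to sequential decoding can arise.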

Hugging Face Low-level APIs

You can also use the 🤗 Hugging Face low-level APIs for transcription, offering greater control over the process, as demonstrated below:

import torch
from datasets import load_dataset
from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "bofenghuang/whisper-large-v3-distil-it-v0.2"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "it", split="test")
sample = dataset[0]["audio"]

# Extract features
input_features = processor(
    sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt"
).input_features

# Generate tokens
predicted_ids = model.generate(
    input_features.to(dtype=torch_dtype).to(device), max_new_tokens=128
)

# Detokenize to text
transcription = processor.batch_decode(predicted_ids, skip_special_tokens=True)[0]
print(transcription)

Speculative Decoding

Speculative decoding can be achieved using a draft model, essentially a distilled version of Whisper. This approach guarantees identical outputs to using the main Whisper model alone, offers a 2x faster inference speed, and incurs only a slight increase in memory overhead.

Since the distilled Whisper has the same encoder as the original, only its decoder needs to be loaded, and encoder outputs are shared between the main and draft models during inference.

Using speculative decoding with the Hugging Face pipeline is simple - just specify the assistant_model within the generation configurations.

import torch
from datasets import load_dataset
from transformers import (
    AutoModelForCausalLM,
    AutoModelForSpeechSeq2Seq,
    AutoProcessor,
    pipeline,
)

device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# Load model
model_name_or_path = "openai/whisper-large-v3"
processor = AutoProcessor.from_pretrained(model_name_or_path)
model = AutoModelForSpeechSeq2Seq.from_pretrained(
    model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
model.to(device)

# Load draft model
assistant_model_name_or_path = "bofenghuang/whisper-large-v3-distil-it-v0.2"
assistant_model = AutoModelForCausalLM.from_pretrained(
    assistant_model_name_or_path,
    torch_dtype=torch_dtype,
    low_cpu_mem_usage=True,
)
assistant_model.to(device)

# Init pipeline
pipe = pipeline(
    "automatic-speech-recognition",
    model=model,
    feature_extractor=processor.feature_extractor,
    tokenizer=processor.tokenizer,
    torch_dtype=torch_dtype,
    device=device,
    generate_kwargs={"assistant_model": assistant_model},
    max_new_tokens=128,
)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "it", split="test")
sample = dataset[0]["audio"]

# Run pipeline
result = pipe(sample)
print(result["text"])
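The claim that speculative decoding yields the same output as the main model alone can be illustrated with a toy sketch of the greedy variant. Everything here is hypothetical: `main_model` and `draft_model` are simple stand-in functions over integer tokens, not Whisper, and the verification loop calls the main model once per position, whereas a real implementation would score all drafted positions in a single forward pass.

```python
def greedy_decode(next_token, prompt, max_new_tokens, eos):
    """Plain greedy decoding with a single model."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)
        tokens.append(tok)
        if tok == eos:
            break
    return tokens


def speculative_decode(main_next, draft_next, prompt, max_new_tokens, eos, k=4):
    """Greedy speculative decoding: the draft proposes, the main model verifies."""
    tokens = list(prompt)
    limit = len(prompt) + max_new_tokens
    while len(tokens) < limit:
        # 1) The draft model cheaply proposes up to k tokens.
        proposal = []
        for _ in range(min(k, limit - len(tokens))):
            proposal.append(draft_next(tokens + proposal))
        # 2) The main model checks the proposal position by position.
        for drafted in proposal:
            expected = main_next(tokens)
            tokens.append(expected)  # always keep the main model's choice
            if expected == eos:
                return tokens
            if expected != drafted:
                break  # first mismatch: discard the rest of the draft
    return tokens


def main_model(tokens):
    # Toy "large" model: a deterministic rule over the context.
    return (sum(tokens) * 31 + len(tokens)) % 10


def draft_model(tokens):
    # Toy "distilled" model: agrees with the main model most of the time.
    guess = main_model(tokens)
    return guess if len(tokens) % 4 else (guess + 1) % 10


prompt = [3, 1, 4]
reference = greedy_decode(main_model, prompt, 12, eos=-1)
speculative = speculative_decode(main_model, draft_model, prompt, 12, eos=-1)
print(reference == speculative)
```

Only tokens the main model itself would have chosen are ever kept, so the draft model affects speed but not the result. The better the draft agrees with the main model, the more tokens are accepted per verification round.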

OpenAI Whisper

You can also employ the sequential long-form decoding algorithm with a sliding window and temperature fallback, as outlined by OpenAI in their original paper.

First, install the openai-whisper package:

pip install -U openai-whisper

Then, download the converted model:

huggingface-cli download --include original_model.pt --local-dir ./models/whisper-large-v3-distil-it-v0.2 bofenghuang/whisper-large-v3-distil-it-v0.2

Now, you can transcribe audio files by following the usage instructions provided in the repository:

import whisper
from datasets import load_dataset

# Load model
model_name_or_path = "./models/whisper-large-v3-distil-it-v0.2/original_model.pt"
model = whisper.load_model(model_name_or_path)

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "it", split="test")
sample = dataset[0]["audio"]["array"].astype("float32")

# Transcribe
result = model.transcribe(sample, language="it")
print(result["text"])
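The temperature fallback mentioned above can be sketched roughly as follows: decode greedily first, and retry at increasing temperatures whenever the output looks too repetitive or too uncertain. This is a simplified illustration, not the package's actual code. `decode_fn` and `fake_decode` are hypothetical stand-ins, and the default temperatures and thresholds should be treated as assumptions.

```python
import zlib


def compression_ratio(text: str) -> float:
    """Highly repetitive text compresses well, which gives a high ratio."""
    data = text.encode("utf-8")
    return len(data) / len(zlib.compress(data))


def decode_with_fallback(
    decode_fn,
    temperatures=(0.0, 0.2, 0.4, 0.6, 0.8, 1.0),
    compression_ratio_threshold=2.4,
    logprob_threshold=-1.0,
):
    """Retry decoding at higher temperatures until the result looks healthy.

    `decode_fn(temperature)` is a hypothetical callable returning (text, avg_logprob).
    """
    result = None
    for temperature in temperatures:
        text, avg_logprob = decode_fn(temperature)
        result = (text, avg_logprob, temperature)
        too_repetitive = compression_ratio(text) > compression_ratio_threshold
        too_uncertain = avg_logprob < logprob_threshold
        if not (too_repetitive or too_uncertain):
            break  # accept this attempt
    return result


def fake_decode(temperature):
    # Toy stand-in: greedy decoding gets stuck in a loop, sampling recovers.
    if temperature == 0.0:
        return "grazie " * 40, -0.3
    return "Grazie a tutti per l'attenzione.", -0.4


text, avg_logprob, used_temperature = decode_with_fallback(fake_decode)
print(used_temperature, text)
```

In the sequential algorithm this check would run once per 30-second window, with the window then sliding forward based on the predicted timestamps.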

Faster Whisper

Faster Whisper is a reimplementation of OpenAI's Whisper models and the sequential long-form decoding algorithm in the CTranslate2 format.

Compared to openai-whisper, it offers up to 4x faster inference speed, while consuming less memory. Additionally, the model can be quantized into int8, further enhancing its efficiency on both CPU and GPU.

First, install the faster-whisper package:

pip install faster-whisper

Then, download the model converted to the CTranslate2 format:

huggingface-cli download --include ctranslate2/* --local-dir ./models/whisper-large-v3-distil-it-v0.2 bofenghuang/whisper-large-v3-distil-it-v0.2

Now, you can transcribe audio files by following the usage instructions provided in the repository:

from datasets import load_dataset
from faster_whisper import WhisperModel

# Load model
model_name_or_path = "./models/whisper-large-v3-distil-it-v0.2/ctranslate2"
model = WhisperModel(model_name_or_path, device="cuda", compute_type="float16")  # Run on GPU with FP16

# Example audio
dataset = load_dataset("bofenghuang/asr-dummy", "it", split="test")
sample = dataset[0]["audio"]["array"].astype("float32")

segments, info = model.transcribe(sample, beam_size=5, language="it")

for segment in segments:
    print("[%.2fs -> %.2fs] %s" % (segment.start, segment.end, segment.text))
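To make the int8 quantization mentioned above more concrete, here is a generic sketch of symmetric quantization with a single scale factor. It only illustrates the idea of storing weights as small integers. The helper names are hypothetical, and the scheme CTranslate2 actually applies may differ in its details.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [int(round(w / scale)) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 1.27, -1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print([round(w, 3) for w in restored])
```

Each weight then needs one byte instead of two (FP16) or four (FP32), at the cost of a rounding error of at most half the scale per value.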

Whisper.cpp

Whisper.cpp is a reimplementation of OpenAI's Whisper models, crafted in plain C/C++ without any dependencies. It offers compatibility with various backends and platforms.

Additionally, the model can be quantized to either 4-bit or 5-bit integers, further enhancing its efficiency.

First, clone and build the whisper.cpp repository:

git clone https://github.com/ggerganov/whisper.cpp.git
cd whisper.cpp

# build the main example
make

Next, download the converted ggml weights from the Hugging Face Hub:

# Download model quantized with Q5_0 method

huggingface-cli download --include ggml-model* --local-dir ./models/whisper-large-v3-distil-it-v0.2 bofenghuang/whisper-large-v3-distil-it-v0.2

Now, you can transcribe an audio file using the following command:

./main -m ./models/whisper-large-v3-distil-it-v0.2/ggml-model-q5_0.bin -l it -f /path/to/audio/file --print-colors

Candle

Candle-whisper is a reimplementation of OpenAI's Whisper models in the candle format - a lightweight ML framework built in Rust.

First, clone the candle repository:

git clone https://github.com/huggingface/candle.git
cd candle/candle-examples/examples/whisper

Transcribe an audio file using the following command:

cargo run --example whisper --release -- --model large-v3 --model-id bofenghuang/whisper-large-v3-distil-it-v0.2 --language it --input /path/to/audio/file

To use CUDA, add --features cuda to the example command line:

cargo run --example whisper --release --features cuda -- --model large-v3 --model-id bofenghuang/whisper-large-v3-distil-it-v0.2 --language it --input /path/to/audio/file

MLX

MLX-Whisper is a reimplementation of OpenAI's Whisper models in the MLX format, an ML framework for Apple silicon. It supports features such as lazy computation and unified memory management.

First, clone the MLX Examples repository: ...

📄 License

The model is released under the MIT license.

| Property | Details |
|---|---|
| Model Type | Distilled Whisper with 2 decoder layers, optimized for Italian speech-to-text |
| Training Data | mozilla-foundation/common_voice_17_0, facebook/multilingual_librispeech, facebook/voxpopuli, espnet/yodas |
| Metrics | Word Error Rate (WER) |
