🚀 Sana, Sana-Sprint

Sana and Sana-Sprint are ultra-efficient text-to-image diffusion models, reducing inference steps while achieving state-of-the-art performance.

🚀 Quick Start

The source code of Sana and Sana-Sprint is available at https://github.com/NVlabs/Sana. You can refer to it for further development and research.

✨ Features

Demos

Training Pipeline

Model Efficiency

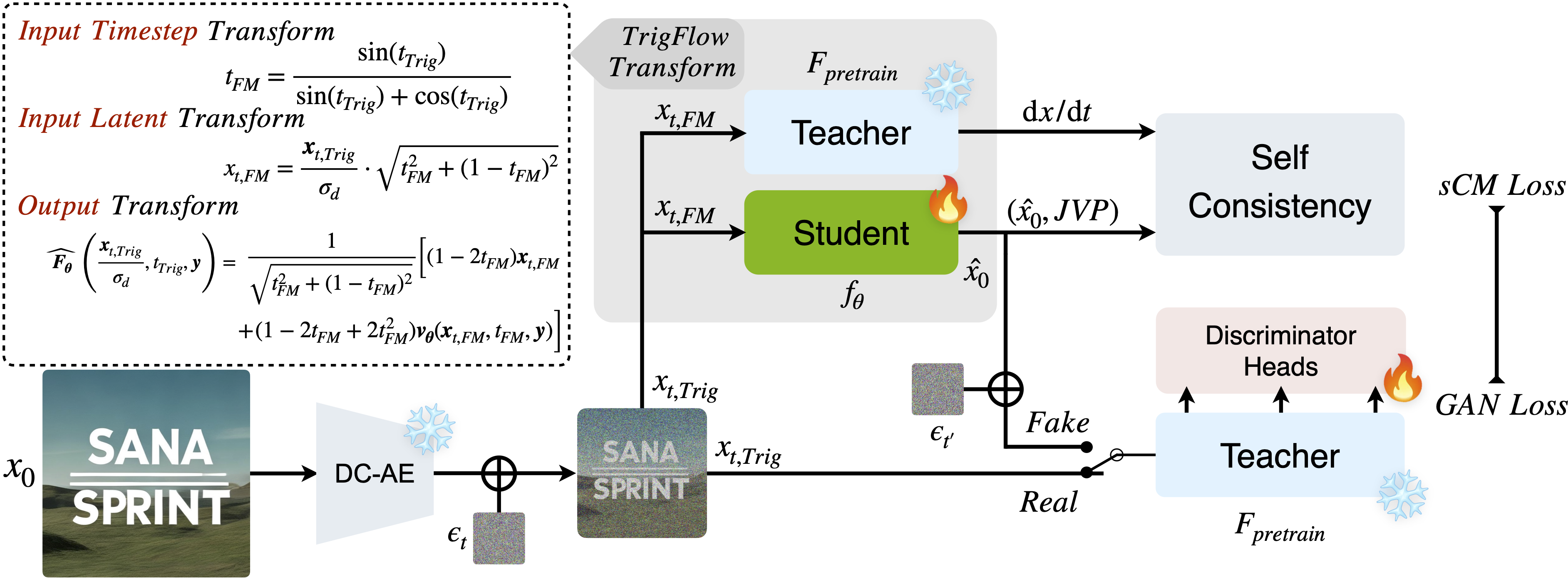

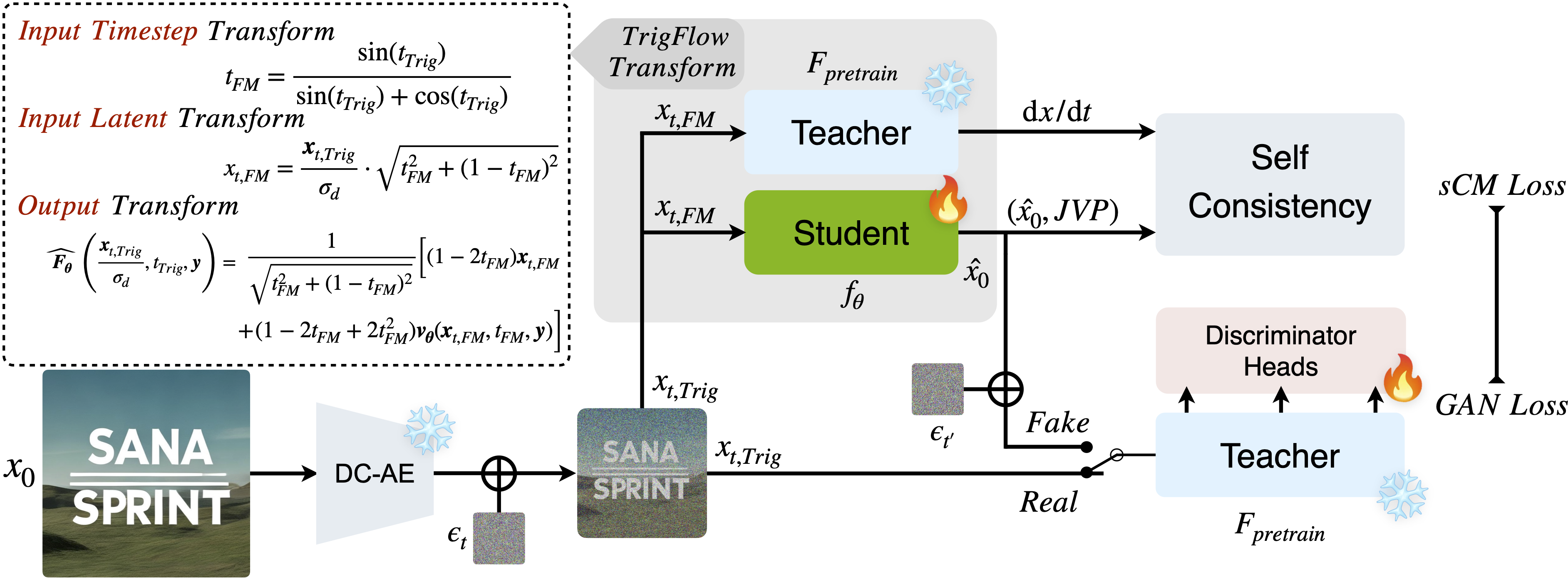

SANA-Sprint is an ultra-efficient diffusion model for text-to-image (T2I) generation, reducing inference steps from 20 to 1-4 while achieving state-of-the-art performance.

Key innovations include:

(1) A training-free approach for continuous-time consistency distillation (sCM), eliminating costly retraining;

(2) A unified step-adaptive model for high-quality generation in 1-4 steps; and

(3) ControlNet integration for real-time interactive image generation.
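The 1-4 step generation that a single step-adaptive model supports can be illustrated with generic multistep consistency sampling. The sketch below is not SANA-Sprint's code: it assumes the TrigFlow parameterization used by sCM, x_t = cos(t)·x0 + sin(t)·z with t running from π/2 (pure noise) to 0 (data), an evenly spaced time schedule, and a hypothetical `denoise(x, t)` callable standing in for the distilled transformer. Each step spends one network evaluation to jump to a clean-data estimate, then re-noises that estimate to the next, smaller time.

```python
import math
import random
from typing import Callable, List

Vector = List[float]


def consistency_sample(
    denoise: Callable[[Vector, float], Vector],
    dim: int,
    num_steps: int,
    sigma_d: float = 1.0,
    seed: int = 0,
) -> Vector:
    """Generic multistep consistency sampling in the TrigFlow parameterization.

    x_t = cos(t) * x0 + sin(t) * z, with z ~ N(0, sigma_d^2 I) and t in [0, pi/2].
    `denoise` is a hypothetical stand-in for the distilled model: it maps (x_t, t)
    directly to an estimate of the clean sample x0.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    rng = random.Random(seed)
    # Evenly spaced times from pi/2 (pure noise) toward 0 (data); this is an
    # assumption, real samplers may use hand-tuned intermediate times.
    times = [math.pi / 2 * (1 - i / num_steps) for i in range(num_steps)]
    # At t = pi/2 the noisy sample is pure noise: x_t = sigma_d * eps.
    x = [sigma_d * rng.gauss(0.0, 1.0) for _ in range(dim)]
    for i, t in enumerate(times):
        # One network evaluation jumps straight to a clean-data estimate.
        x0 = denoise(x, t)
        if i + 1 < num_steps:
            t_next = times[i + 1]
            # Re-noise the estimate to the next, smaller time with fresh noise.
            x = [
                math.cos(t_next) * a + math.sin(t_next) * sigma_d * rng.gauss(0.0, 1.0)
                for a in x0
            ]
    return x0


if __name__ == "__main__":
    # Hypothetical toy denoiser: scales its input by cos(t), so it shrinks it more at large t.
    def toy_denoise(x: Vector, t: float) -> Vector:
        return [math.cos(t) * v for v in x]

    for steps in (1, 2, 4):
        print(steps, consistency_sample(toy_denoise, dim=3, num_steps=steps))
```

Because the same `denoise` is called regardless of `num_steps`, trading quality for speed only means changing that argument, which is the property a step-adaptive model is meant to provide.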

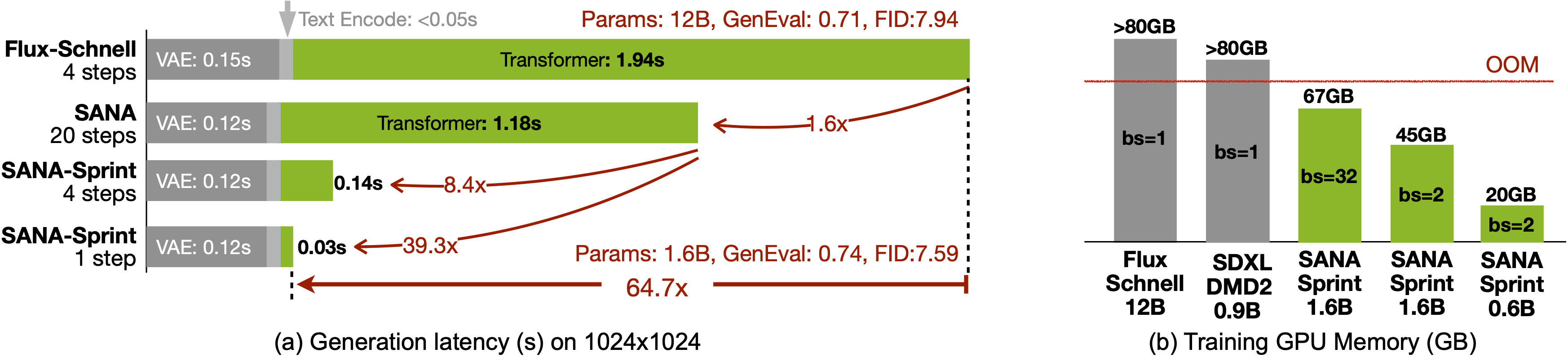

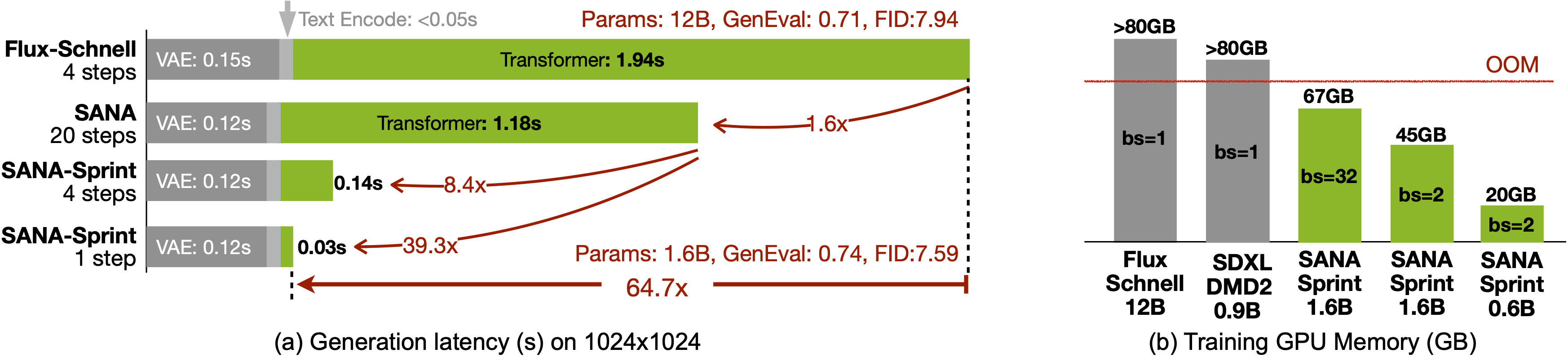

SANA-Sprint achieves 7.59 FID and 0.74 GenEval in just 1 step, outperforming FLUX-schnell (7.94 FID / 0.71 GenEval) while being 10× faster (0.1s vs 1.1s on H100).

With latencies of 0.1s (T2I) and 0.25s (ControlNet) for 1024×1024 images on H100, and 0.31s (T2I) on an RTX 4090, SANA-Sprint is ideal for AI-powered consumer applications (AIPC).
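The headline speed claim is simple arithmetic on the reported H100 latencies: 1.1 s / 0.1 s = 11, which the text rounds to about 10×. The sketch below only re-derives ratios and naive batch-size-1 throughput from the numbers quoted in this card; it measures nothing, and the dictionary keys are illustrative labels rather than official configuration names.

```python
# Latencies in seconds per 1024x1024 image, as reported in this card.
REPORTED_LATENCY_S = {
    "SANA-Sprint T2I, H100": 0.10,
    "SANA-Sprint ControlNet, H100": 0.25,
    "SANA-Sprint T2I, RTX 4090": 0.31,
    "FLUX-schnell T2I, H100": 1.10,
}


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline_s / candidate_s


def images_per_second(latency_s: float) -> float:
    """Sequential throughput implied by a single-image latency (batch size 1, no overlap)."""
    return 1.0 / latency_s


if __name__ == "__main__":
    flux = REPORTED_LATENCY_S["FLUX-schnell T2I, H100"]
    sprint = REPORTED_LATENCY_S["SANA-Sprint T2I, H100"]
    print(f"speedup on H100: {speedup(flux, sprint):.1f}x")
    for name, latency in REPORTED_LATENCY_S.items():
        print(f"{name}: {images_per_second(latency):.1f} images/s")
```

Real throughput with batching or pipelined requests can differ from these per-image figures.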

Model Description

| Property | Details |
|---|---|
| Developed by | NVIDIA, Sana |
| Model Type | One-Step Diffusion with Continuous-Time Consistency Distillation |
| Model Size | 0.6B parameters |
| Model Precision | torch.bfloat16 (BF16) |
| Model Resolution | This model is developed to generate 1024px-based images with multi-scale height and width. |
| License | NSCL v2-custom. Governing Terms: NVIDIA License. Additional Information: Gemma Terms of Use and Gemma Prohibited Use Policy (Google AI for Developers) for Gemma-2-2B-IT. |
| Model Description | This is a model that can be used to generate and modify images based on text prompts. It is a Linear Diffusion Transformer that uses one fixed, pretrained text encoder ([Gemma2-2B-IT](https://huggingface.co/google/gemma-2-2b-it)) and one 32× spatially compressed latent feature encoder ([DC-AE](https://hanlab.mit.edu/projects/dc-ae)). |
| Resources for more information | Check out our GitHub Repository and the SANA-Sprint report on arXiv. |
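Two figures in the table translate directly into sizes. With a 32× spatially compressed DC-AE latent, a 1024×1024 image becomes a 32×32 latent grid, and other aspect ratios scale the same way; storing 0.6B parameters in BF16 (2 bytes each) takes about 1.2 GB for the diffusion transformer's weights alone. The helpers below are a hypothetical sketch: they assume image sides are exact multiples of 32 and a transformer patch size of 1 for the token count, and they ignore the Gemma2-2B-IT text encoder, the DC-AE weights, and activations.

```python
from typing import Tuple

DOWNSAMPLE = 32  # spatial compression factor of the DC-AE latent, per the table above
BF16_BYTES = 2   # bytes per parameter in bfloat16


def latent_grid(height: int, width: int, downsample: int = DOWNSAMPLE) -> Tuple[int, int]:
    """Latent (height, width) for an image; rejects sides that are not multiples of `downsample`."""
    if height % downsample or width % downsample:
        raise ValueError(f"image sides must be multiples of {downsample}, got {height}x{width}")
    return height // downsample, width // downsample


def num_tokens(height: int, width: int, patch_size: int = 1) -> int:
    """Transformer sequence length, assuming the latent is cut into patch_size x patch_size patches."""
    lat_h, lat_w = latent_grid(height, width)
    return (lat_h // patch_size) * (lat_w // patch_size)


def bf16_weight_bytes(num_params: int) -> int:
    """Memory for the weights alone when stored in BF16."""
    return BF16_BYTES * num_params


if __name__ == "__main__":
    print(latent_grid(1024, 1024), num_tokens(1024, 1024))  # square 1024px image
    print(latent_grid(1024, 768), num_tokens(1024, 768))    # hypothetical non-square size
    print(f"{bf16_weight_bytes(600_000_000) / 2**30:.2f} GiB")
```

Rejecting sizes that are not multiples of 32 is a choice made for this sketch; it does not describe how the released pipeline rounds or pads arbitrary resolutions.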

Model Sources

For research purposes, we recommend our GitHub repository (https://github.com/NVlabs/Sana), which is more suitable for both training and inference.

[MIT Han-Lab](https://nv-sana.mit.edu/sprint) provides free SANA-Sprint inference.

- Repository: https://github.com/NVlabs/Sana

- Demo: https://nv-sana.mit.edu/sprint

- Guidance: https://github.com/NVlabs/Sana/asset/docs/sana_sprint.md

📚 Documentation

Uses

Direct Use

The model is intended for research purposes only. Possible research areas and tasks include:

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

Excluded uses are described below.

Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events, so using it to generate such content is outside the scope of its abilities.

Limitations and Bias

Limitations

- The model does not achieve perfect photorealism.

- The model cannot render complex legible text.

- Fingers and similar fine details may not always be generated properly.

- The autoencoding part of the model is lossy.

Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

📄 License

The model is licensed under NSCL v2-custom. Governing Terms: NVIDIA License. Additional Information: Gemma Terms of Use | Google AI for Developers for Gemma-2-2B-IT, Gemma Prohibited Use Policy | Google AI for Developers.
