🚀 Fine-tuning DeepSeek-R1-Distill-Llama-8B

This project focuses on fine-tuning the DeepSeek-R1-Distill-Llama-8B model for specific tasks, aiming to enhance its performance in targeted domains.

🚀 Quick Start

This README introduces the concept, motivation, and effects of large-model fine-tuning, using the fine-tuning of the DeepSeek-R1-Distill-Llama-8B model as an example.

✨ Features

What Is Large-Model Fine-Tuning?

Large-model fine-tuning is the process of further adjusting the parameters of a large-scale pre-trained language model (such as GPT, BERT, or PaLM) with a small amount of specialized data for a specific task or domain.

Pre-trained models learn general language patterns from massive general-purpose data (e.g., Internet text). Fine-tuning lets the model adapt to specific task requirements (such as medical Q&A or legal document generation) or specific data distributions (such as an enterprise's customer service dialogues) while retaining its general capabilities.
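The idea of "continuing to adjust existing parameters on a small specialized dataset" can be sketched with a toy model. The example below is only an illustration: a two-parameter linear model stands in for a large pre-trained network, and the starting weights and the four domain samples are invented for the demonstration. It is not the training procedure used in this project.

```python
# Toy illustration only: a linear model y = w * x + b stands in for a large network.

def predict(w, b, x):
    return w * x + b

def mse(w, b, data):
    """Mean squared error of the model on a list of (x, y) pairs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, data, lr=0.05, steps=200):
    """Continue gradient descent starting from existing (pre-trained) parameters."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical "pre-trained" parameters, assumed to come from general data (y = 2x).
pretrained_w, pretrained_b = 2.0, 0.0

# A small, hypothetical domain dataset with a slightly different relationship (y = 2x + 1).
domain_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

loss_before = mse(pretrained_w, pretrained_b, domain_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
loss_after = mse(tuned_w, tuned_b, domain_data)

print(f"loss before fine-tuning: {loss_before:.4f}")
print(f"loss after fine-tuning:  {loss_after:.6f}")
```

Because training starts from the pre-trained parameters rather than from random ones, a few samples and a short run are enough to close the gap to the domain data, which is the same intuition that applies at much larger scale.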

Why Do We Need Large-Model Fine-Tuning?

Fine-tuning can improve a model's performance on specific tasks, making it more effective in real-world applications. It is a key step in customizing an existing model for a particular task or domain.

- Task Adaptability: Pre-trained models acquire general knowledge, but real tasks (such as sentiment analysis or code generation) require specific skills. Fine-tuning adjusts the model with a small amount of task-specific data so that its output better suits the target scenario.

- Domain Specialization: General models may perform poorly in vertical domains (such as medicine or finance). For example, using GPT directly to answer medical questions may not be accurate enough. After fine-tuning on medical literature, the model's answers become more professional.

- Data Distribution Alignment: Data in real-world applications may follow a different distribution from the pre-training data (for example, user preferences or dialect expressions). Fine-tuning can reduce this gap and improve the model's performance in real-world scenarios.

- Reduced Resource Costs: Training a large model from scratch requires a huge amount of data and computing power, while fine-tuning needs only a small amount of domain-specific data, which significantly reduces costs.

- Personalized Requirements: Enterprises or users may want the model to follow a specific style (such as brand language or writing tone), and fine-tuning can achieve this customization quickly.

- Mitigating Hallucination: Fine-tuning for specific tasks (such as factual Q&A) can constrain the model's generation and reduce hallucination.

Effects of Large-Model Fine-Tuning

Hardware Configuration:

- GPU: Tesla T4

- System RAM: 12GB

- GPU RAM: 15.0GB

- Storage: 200GB

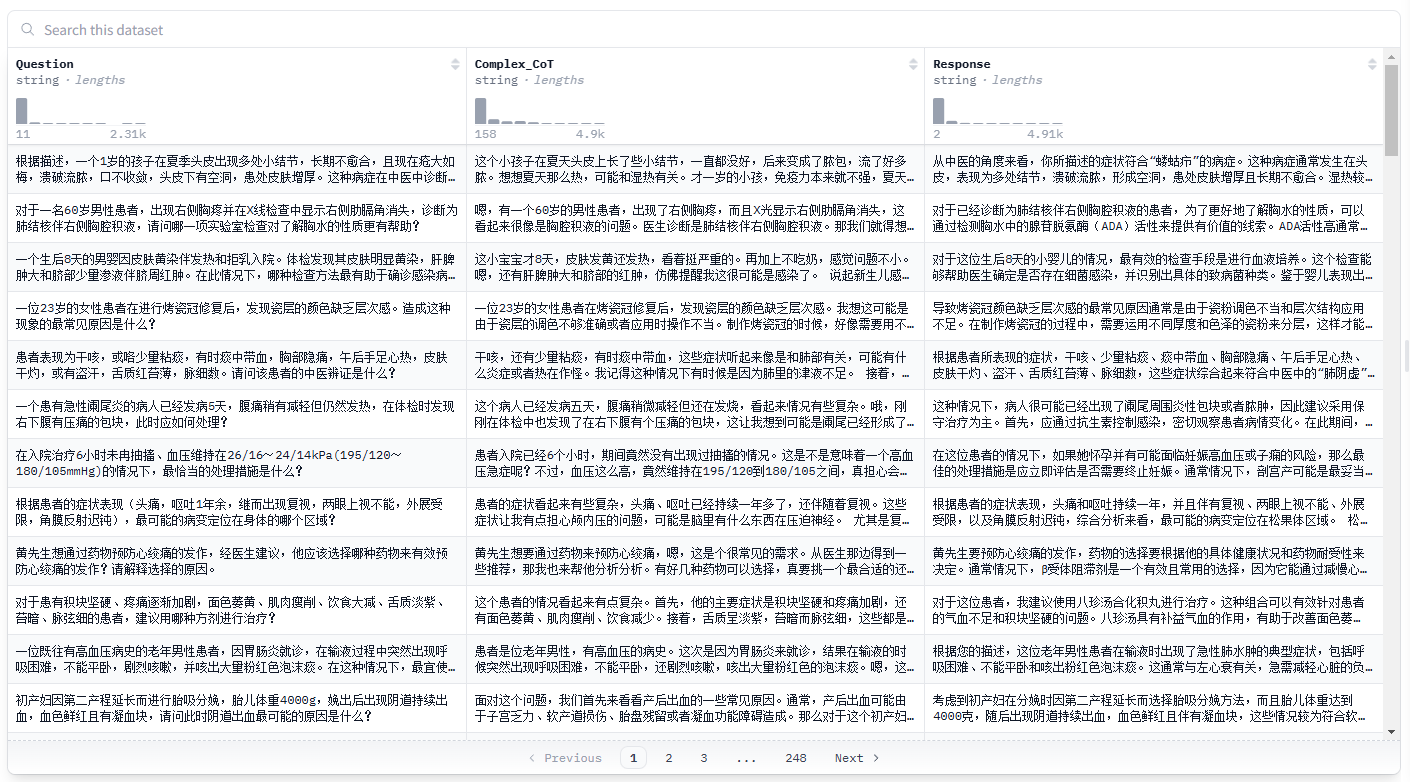

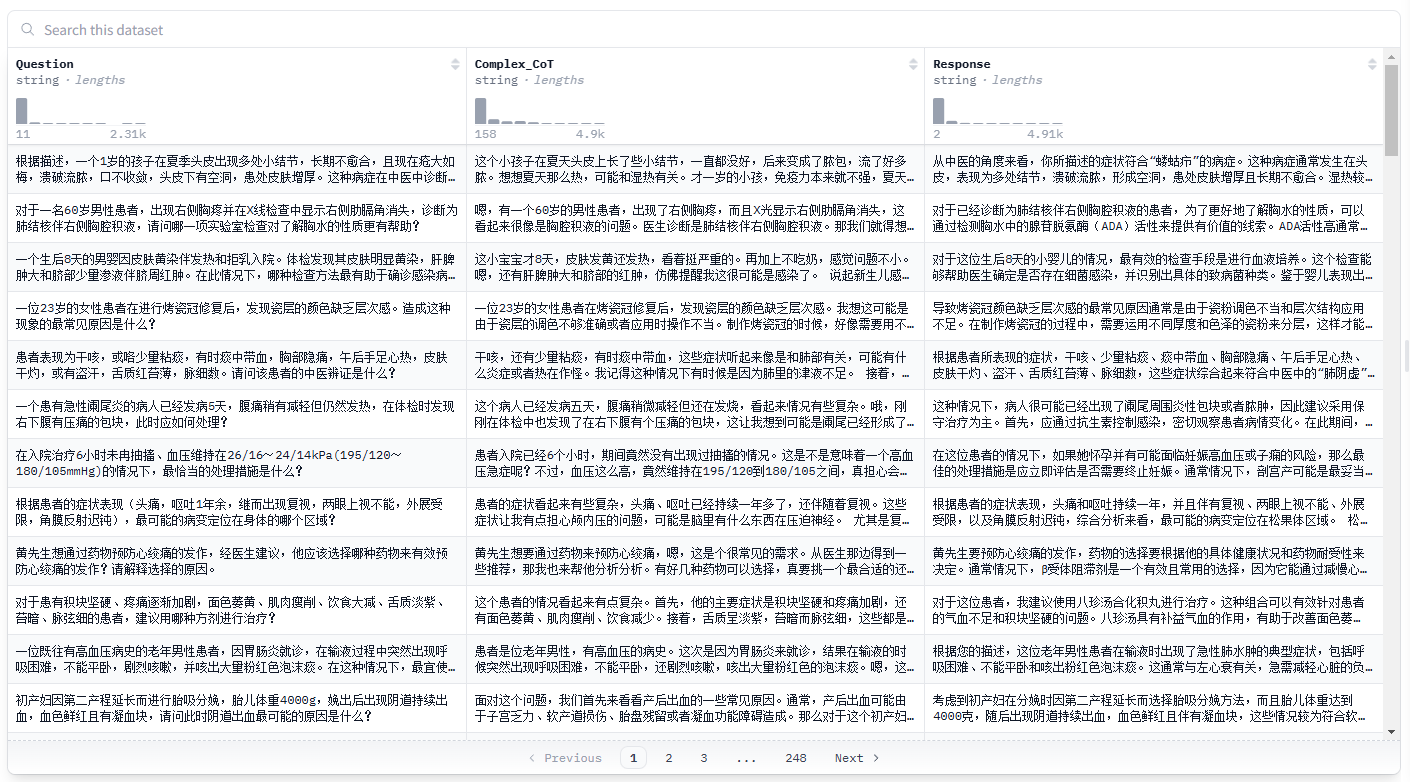
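The hardware figures above leave little headroom for an 8B-parameter model, which a rough estimate makes concrete. The sketch below counts memory for the weights only and makes several assumptions not stated in this README: a nominal 8 billion parameters, the listed "15.0GB" read as GiB, and no allowance for activations, gradients, optimizer state, or quantization overhead. The README does not say which precision or memory-saving technique was actually used.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory needed to hold the model weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 1024**3

NUM_PARAMS = 8e9     # nominal "8B"; the exact parameter count is an assumption
GPU_RAM_GIB = 15.0   # from the hardware list, interpreted here as GiB

for label, bits in [("fp32", 32), ("fp16", 16), ("int8", 8), ("4-bit", 4)]:
    need = weight_memory_gib(NUM_PARAMS, bits)
    left = GPU_RAM_GIB - need
    print(f"{label:>5}: {need:5.1f} GiB for weights, {left:6.1f} GiB left on the GPU")
```

Under these assumptions, half-precision weights alone come to roughly 14.9 GiB, almost the entire GPU, while 4-bit weights need about 3.7 GiB. This is why fine-tuning on a card of this size typically relies on quantized weights or other memory-saving methods.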

Fine-Tuning Data:

Question:

A patient with acute appendicitis has been ill for 5 days. The abdominal pain has slightly relieved, but the patient still has a fever. A tender mass is found in the right lower abdomen during the physical examination. How should this situation be handled?

Original Data:

Before Fine-Tuning:

After Fine-Tuning:

Explanation:

So far, only a small part of the test data has been used. After fine-tuning, the model's answers clearly move closer to the reasoning in the original data, and the output format is consistent. Compared with the responses before fine-tuning, this is a significant improvement. Including more industry data in training is expected to improve the results further.
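A consistent output format usually comes from rendering every training record through the same prompt template. The sketch below shows one way this might look. The field names `Question`, `Complex_CoT`, and `Response` are assumed to match the columns of the training dataset, while the template text, the `format_record` helper, and the `<eos>` marker are hypothetical placeholders rather than the project's actual code. The reasoning and answer strings are placeholders, not real dataset content.

```python
# Hypothetical prompt template; the template actually used by this project is not shown here.
PROMPT_TEMPLATE = (
    "### Question:\n{question}\n\n"
    "### Response:\n<think>\n{reasoning}\n</think>\n{answer}{eos}"
)

def format_record(record, eos_token):
    """Render one dataset record as a single training string."""
    return PROMPT_TEMPLATE.format(
        question=record["Question"].strip(),
        reasoning=record["Complex_CoT"].strip(),
        answer=record["Response"].strip(),
        eos=eos_token,
    )

example = {
    "Question": (
        "A patient with acute appendicitis has been ill for 5 days. "
        "The abdominal pain has slightly relieved, but the patient still has a fever. "
        "A tender mass is found in the right lower abdomen during the physical "
        "examination. How should this situation be handled?"
    ),
    "Complex_CoT": "<reasoning text from the dataset>",   # placeholder
    "Response": "<reference answer from the dataset>",    # placeholder
}

# In a real run the end-of-sequence marker would come from the model's tokenizer.
text = format_record(example, eos_token="<eos>")
print(text)
```

Appending an end-of-sequence marker to each sample is a common choice, since it teaches the model where an answer stops.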

📄 License

This project is licensed under the Apache-2.0 license.

| Property | Details |
|---|---|
| Model Type | Fine-tuned DeepSeek-R1-Distill-Llama-8B |
| Training Data | FreedomIntelligence/medical-o1-reasoning-SFT |
| Base Model | deepseek-ai/DeepSeek-R1-Distill-Llama-8B |
| Pipeline Tag | question-answering |

Transformers
