🚀 TF-ID: Table/Figure IDentifier for academic papers

TF-ID is a family of object detection models that extract tables and figures from academic papers. It comes in four versions: base and large, each with or without caption text. You can trade model size against accuracy and choose whether captions are included in the detected regions.

🚀 Quick Start

Use the following code to start using the TF-ID model:

```python
import requests
from PIL import Image
from transformers import AutoProcessor, AutoModelForCausalLM

# trust_remote_code=True lets transformers run the custom model code stored in the Hub repository.
model = AutoModelForCausalLM.from_pretrained("yifeihu/TF-ID-base", trust_remote_code=True)
processor = AutoProcessor.from_pretrained("yifeihu/TF-ID-base", trust_remote_code=True)

# "<OD>" is the object detection task prompt.
prompt = "<OD>"

# Load a sample paper page (one page per image).
url = "https://huggingface.co/yifeihu/TF-ID-base/resolve/main/arxiv_2305_10853_5.png?download=true"
image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(text=prompt, images=image, return_tensors="pt")

# Deterministic beam search decoding.
generated_ids = model.generate(
    input_ids=inputs["input_ids"],
    pixel_values=inputs["pixel_values"],
    max_new_tokens=1024,
    do_sample=False,
    num_beams=3,
)

# Keep special tokens: the box coordinates are encoded as special location tokens.
generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

# Turn the generated text into bounding boxes and labels for this image size.
parsed_answer = processor.post_process_generation(
    generated_text, task="<OD>", image_size=(image.width, image.height)
)

print(parsed_answer)
```

To visualize the results, see this tutorial notebook for more details.

✨ Features

- Multiple Model Versions: TF-ID comes in four versions, including models with and without caption text extraction, to meet different application scenarios.

- High Accuracy: Finetuned from microsoft/Florence-2 checkpoints, the models produce fully correct output on 96.93% to 98.06% of held-out paper pages in the benchmarks.

- Manual Annotation: The training data is manually annotated and checked by humans, ensuring the quality of the data.

📦 Installation

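The code example above shows how to load the model using the `transformers` library. Install the required libraries (PyTorch is needed for `return_tensors="pt"`) with:

```bash
pip install requests pillow torch transformers
```

The Florence-2 model code loaded through `trust_remote_code` may also import `timm` and `einops`; install them if loading the model fails with a missing-module error.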

💻 Usage Examples

Basic Usage

Basic usage is the same as the Quick Start example above: load the model and processor with `trust_remote_code=True`, pass a single page image with the `<OD>` prompt, then decode and post-process the generated tokens.

Advanced Usage

For more advanced usage, such as visualizing the results, refer to this tutorial notebook for detailed instructions.
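For a quick look at the detections without the notebook, here is a minimal sketch using Pillow's `ImageDraw`. It assumes `image` and `parsed_answer` come from the example above and that `parsed_answer` follows the `{'<OD>': {'bboxes': ..., 'labels': ...}}` format. The helper name `draw_detections` and its color and line-width defaults are our own choices, not part of TF-ID or `transformers`.

```python
from PIL import Image, ImageDraw


def draw_detections(image: Image.Image, parsed_answer: dict, task: str = "<OD>",
                    color: str = "red", width: int = 3) -> Image.Image:
    """Return a copy of `image` with every detected box and its label drawn on it.

    Assumes `parsed_answer` looks like
    {'<OD>': {'bboxes': [[x1, y1, x2, y2], ...], 'labels': [...]}}.
    """
    # convert() returns a new image, so the caller's image is left untouched.
    annotated = image.convert("RGB")
    draw = ImageDraw.Draw(annotated)
    result = parsed_answer[task]
    for (x1, y1, x2, y2), label in zip(result["bboxes"], result["labels"]):
        draw.rectangle([x1, y1, x2, y2], outline=color, width=width)
        # Write the label just above the box, kept inside the top edge of the image.
        draw.text((x1, max(0, y1 - 12)), label, fill=color)
    return annotated
```

For example, `draw_detections(image, parsed_answer).save("annotated.png")` writes an annotated copy of the page and leaves the original `image` unchanged.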

📚 Documentation

Model Summary

TF-ID (Table/Figure IDentifier) is a family of object detection models, created by Yifei Hu, that are finetuned to extract tables and figures from academic papers. They come in four versions:

| Model | Model size | Model description |
| --- | --- | --- |
| TF-ID-base | 0.23B | Extract tables/figures and their caption text |
| TF-ID-large (Recommended) | 0.77B | Extract tables/figures and their caption text |
| TF-ID-base-no-caption | 0.23B | Extract tables/figures without caption text |
| TF-ID-large-no-caption (Recommended) | 0.77B | Extract tables/figures without caption text |

All TF-ID models are finetuned from microsoft/Florence-2 checkpoints.

- The models were finetuned with papers from Hugging Face Daily Papers. All bounding boxes are manually annotated and checked by humans.

- TF-ID models take an image of a single paper page as the input, and return bounding boxes for all tables and figures in the given page.

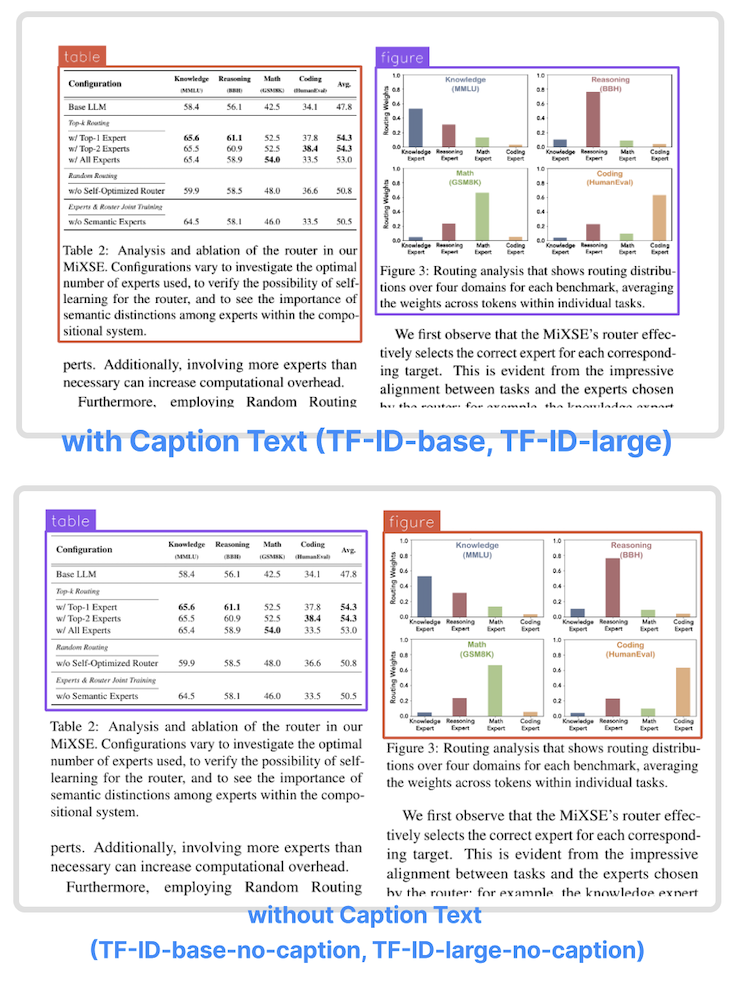

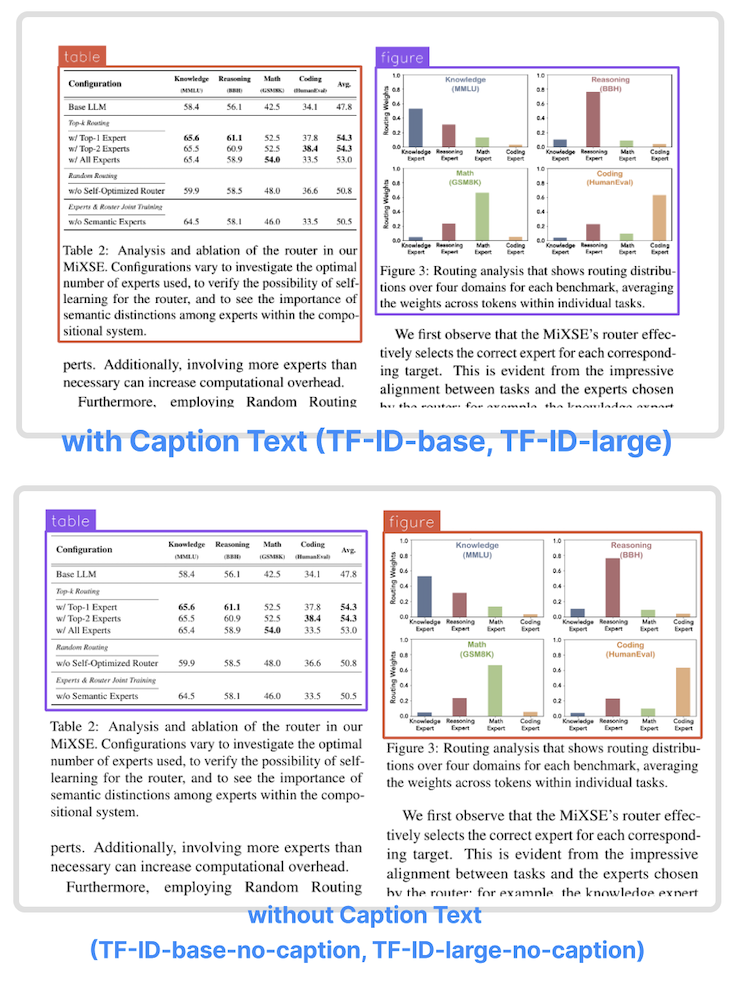

- TF-ID-base and TF-ID-large draw bounding boxes around tables/figures and their caption text.

- TF-ID-base-no-caption and TF-ID-large-no-caption draw bounding boxes around tables/figures without their caption text.

The large models are recommended; they score slightly higher than the base models in the benchmarks below.

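Object detection results format:

```python
{'<OD>': {'bboxes': [[x1, y1, x2, y2], ...],
          'labels': ['label1', 'label2', ...]}}
```

Each box is given as `[x1, y1, x2, y2]`, the top-left and bottom-right corners in pixel coordinates of the input page image.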
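Because each entry in `bboxes` is a corner-pair box, extracting the tables and figures themselves amounts to cropping the page image. The sketch below assumes a PIL page image and the result format above. `crop_detections` is a hypothetical helper, and rounding plus clamping to the page bounds is our own defensive choice rather than documented TF-ID behavior.

```python
from PIL import Image


def crop_detections(image: Image.Image, parsed_answer: dict, task: str = "<OD>"):
    """Crop every detected table/figure out of the page image.

    Returns a list of (label, cropped_image) pairs in the order the model
    produced them. Corners are rounded to whole pixels and clamped to the page.
    """
    result = parsed_answer[task]
    crops = []
    for (x1, y1, x2, y2), label in zip(result["bboxes"], result["labels"]):
        left = max(0, int(round(x1)))
        top = max(0, int(round(y1)))
        right = min(image.width, int(round(x2)))
        bottom = min(image.height, int(round(y2)))
        if right <= left or bottom <= top:
            continue  # skip boxes that are empty after clamping
        crops.append((label, image.crop((left, top, right, bottom))))
    return crops
```

For instance, looping over `crop_detections(image, parsed_answer)` and calling `crop.save(...)` on each result stores every detected table and figure as its own image file.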

Training Code and Dataset

Benchmarks

We tested the models on paper pages outside the training dataset, drawn from a subset of Hugging Face Daily Papers.

An output counts as correct only if the model draws correct bounding boxes for every table/figure on the page.

| Model | Total Images | Correct Output | Success Rate |
| --- | --- | --- | --- |
| TF-ID-base | 258 | 251 | 97.29% |
| TF-ID-large | 258 | 253 | 98.06% |

| Model | Total Images | Correct Output | Success Rate |
| --- | --- | --- | --- |
| TF-ID-base-no-caption | 261 | 253 | 96.93% |
| TF-ID-large-no-caption | 261 | 254 | 97.32% |
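Success Rate is Correct Output divided by Total Images. As a quick arithmetic check, the snippet below recomputes it from the counts in the benchmark tables.

```python
# (total images, correct outputs) copied from the benchmark tables.
BENCHMARKS = {
    "TF-ID-base": (258, 251),
    "TF-ID-large": (258, 253),
    "TF-ID-base-no-caption": (261, 253),
    "TF-ID-large-no-caption": (261, 254),
}


def success_rate(total: int, correct: int) -> float:
    """Percentage of pages where every table/figure box was correct."""
    return 100.0 * correct / total


for name, (total, correct) in BENCHMARKS.items():
    print(f"{name}: {success_rate(total, correct):.2f}%")
# TF-ID-base: 97.29%
# TF-ID-large: 98.06%
# TF-ID-base-no-caption: 96.93%
# TF-ID-large-no-caption: 97.32%
```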

Depending on the use case, some "incorrect" outputs could be perfectly usable. For example, the model may draw two bounding boxes for one figure that has two child components.
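If a downstream step expects one box per figure, one possible post-processing heuristic is to merge same-label boxes that overlap or nearly touch into their union. This is a hypothetical sketch, not part of TF-ID. The 10-pixel `gap` threshold is an arbitrary assumption, and the heuristic can also wrongly merge two distinct figures that sit close together, so check it on your own pages.

```python
def merge_nearby_boxes(bboxes, labels, gap=10.0):
    """Greedily merge same-label boxes that overlap or lie within `gap` pixels.

    Hypothetical helper. Returns new (bboxes, labels) lists; each merged box is
    the smallest box enclosing the boxes it absorbed.
    """
    boxes = [list(b) for b in bboxes]
    labs = list(labels)
    merged = True
    while merged:
        merged = False
        for i in range(len(boxes)):
            for j in range(i + 1, len(boxes)):
                if labs[i] != labs[j]:
                    continue
                a, b = boxes[i], boxes[j]
                # Horizontal and vertical gaps between the boxes (0 if they overlap).
                dx = max(b[0] - a[2], a[0] - b[2], 0)
                dy = max(b[1] - a[3], a[1] - b[3], 0)
                if dx <= gap and dy <= gap:
                    boxes[i] = [min(a[0], b[0]), min(a[1], b[1]),
                                max(a[2], b[2]), max(a[3], b[3])]
                    del boxes[j], labs[j]
                    merged = True
                    break
            if merged:
                break  # restart the scan after every merge
    return boxes, labs
```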

🔧 Technical Details

- Finetuning: The models are finetuned from microsoft/Florence-2 checkpoints, using papers from Hugging Face Daily Papers as training data.

- Data Annotation: All bounding boxes in the training data are manually annotated and checked by humans, ensuring the accuracy of the data.

- Model Output: The models take an image of a single paper page as the input and return bounding boxes for all tables and figures in the given page.
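Because each call handles a single page, a multi-page paper needs one call per page. The sketch below wraps the Quick Start steps into two hypothetical helpers, `detect_page` and `detect_pages`. It assumes `model` and `processor` are loaded as in the Quick Start and that each PDF page has already been rendered to a PIL image; page rendering is out of scope here.

```python
def detect_page(model, processor, image, num_beams=3, max_new_tokens=1024):
    """Run TF-ID on one page image and return the parsed '<OD>' result (hypothetical helper)."""
    prompt = "<OD>"
    inputs = processor(text=prompt, images=image, return_tensors="pt")
    generated_ids = model.generate(
        input_ids=inputs["input_ids"],
        pixel_values=inputs["pixel_values"],
        max_new_tokens=max_new_tokens,
        do_sample=False,
        num_beams=num_beams,
    )
    # Special tokens carry the box coordinates, so they must not be skipped.
    text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]
    return processor.post_process_generation(
        text, task=prompt, image_size=(image.width, image.height)
    )


def detect_pages(model, processor, pages):
    """Process a document one page at a time; returns results in page order."""
    return [detect_page(model, processor, page) for page in pages]
```

`results = detect_pages(model, processor, pages)` returns one parsed result per page, in the same order as `pages`.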

📄 License

This project is licensed under the MIT License.

BibTeX and citation info

```bibtex
@misc{TF-ID,
  author = {Yifei Hu},
  title = {TF-ID: Table/Figure IDentifier for academic papers},
  year = {2024},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/ai8hyf/TF-ID}},
}
```