🚀 litus-ai/whisper-small-ita

This model is a version of openai/whisper-small optimized for Italian. It offers a good trade-off between transcription accuracy and computational cost, which makes it suited to settings with a limited compute budget that still require accurate speech transcription.

✨ Features

litus-ai/whisper-small-ita is based on openai/whisper-small and was trained on a portion of Litus AI's proprietary data to improve Italian transcription.

Special Tokens

The model's distinguishing feature is its set of special tokens, which enrich the transcription with meta-information:

- Paralinguistic elements: [LAUGH], [MHMH], [SIGH], [UHM]
- Audio quality: [NOISE], [UNINT] (unintelligible)
- Speech characteristics: [AUTOCOR] (self-corrections), [L-EN] (English code-switching)

These tokens allow for a richer transcription that captures not only the verbal content but also relevant contextual elements.
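Downstream code often needs either the plain text or the list of tags. The sketch below shows one way to separate the two with a regular expression. It assumes the tags appear in the decoded transcript as literal bracketed text and that the code-switching tag is spelled `[L-EN]` without spaces; the helper names `extract_tags` and `strip_tags` and the example sentence are made up for illustration.

```python
import re

# Tags listed in this model card. Assumption: they show up in the decoded
# transcript as literal bracketed text, spelled exactly as below.
SPECIAL_TAGS = ("LAUGH", "MHMH", "SIGH", "UHM", "NOISE", "UNINT", "AUTOCOR", "L-EN")
TAG_PATTERN = re.compile(r"\[(" + "|".join(re.escape(t) for t in SPECIAL_TAGS) + r")\]")


def extract_tags(transcript: str) -> list[str]:
    """Return the tags found in the transcript, in order of appearance."""
    return TAG_PATTERN.findall(transcript)


def strip_tags(transcript: str) -> str:
    """Remove the tags and collapse the whitespace they leave behind."""
    without_tags = TAG_PATTERN.sub(" ", transcript)
    return " ".join(without_tags.split())


# Hypothetical transcript, not real model output.
example = "[UHM] allora [NOISE] ci vediamo [L-EN] tomorrow [LAUGH]"
print(extract_tags(example))
print(strip_tags(example))
```

Keeping the tag list in one tuple means a tag can be added or dropped without touching the two helpers.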

Evaluation

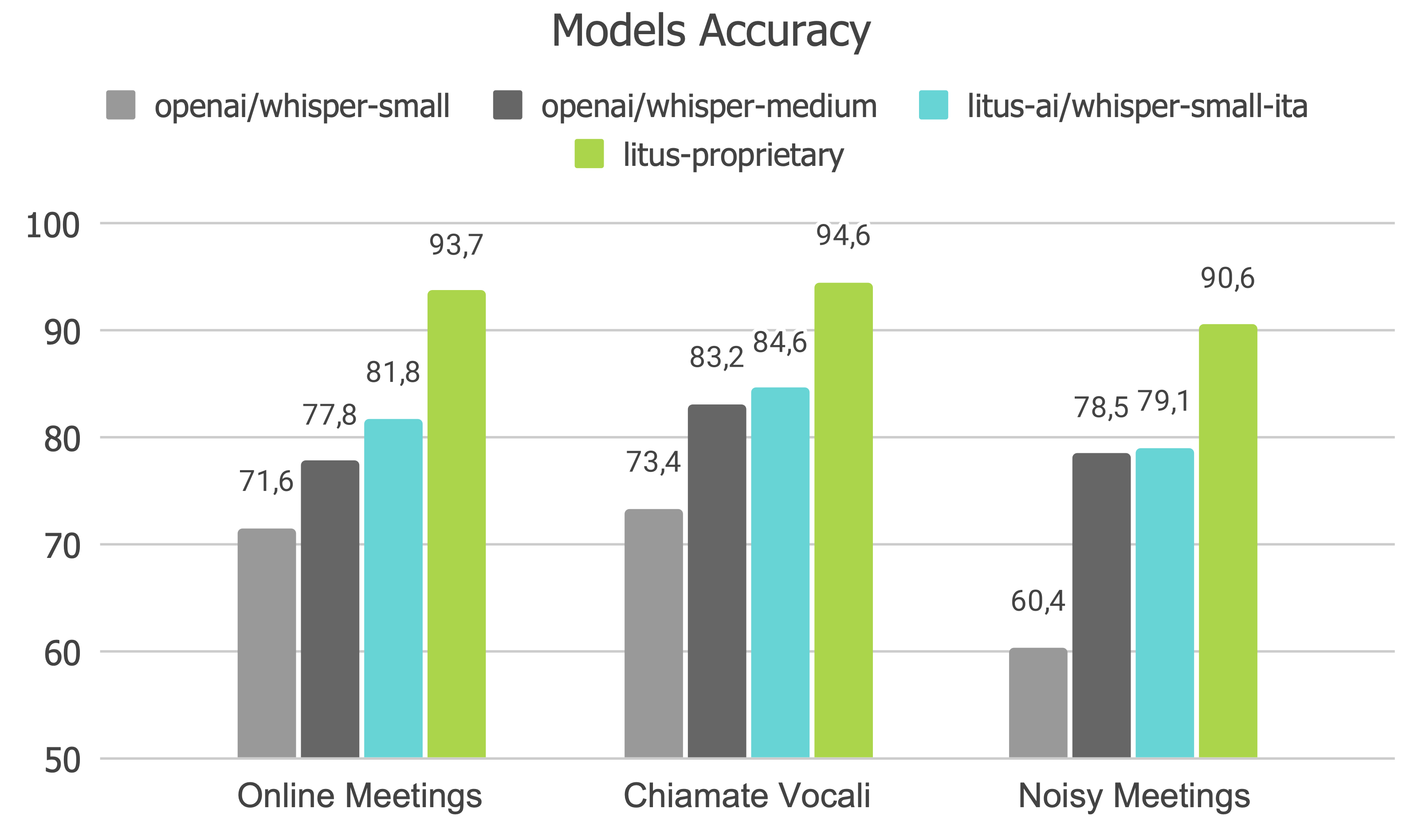

In the following graph, you can find the Accuracy of openai/whisper-small, openai/whisper-medium, litus-ai/whisper-small-ita, and Litus AI's proprietary model, litus-proprietary, on proprietary benchmarks for Italian meetings and voice calls.
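The metadata of this model card lists `wer` (word error rate) as its metric. How the accuracy in the graph was computed is not stated here, so the sketch below is only the textbook definition of WER (word-level edit distance divided by the number of reference words), not the authors' evaluation script. The function name and the two sentences are illustrative, and real evaluations usually normalize casing and punctuation first, which is omitted.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# Made-up sentences: the hypothesis drops one of five reference words.
reference = "ci vediamo domani alle dieci"
hypothesis = "ci vediamo domani dieci"
print(word_error_rate(reference, hypothesis))
```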

📦 Installation

The model runs on the transformers library. The usage example also loads sample audio with datasets and returns PyTorch tensors, so PyTorch must be installed as well. Install transformers and datasets with pip:

pip install transformers datasets

💻 Usage Examples

Basic Usage

You can use litus-ai/whisper-small-ita with the Hugging Face transformers library, including its "automatic-speech-recognition" pipeline. The example below loads the processor and model directly:

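from transformers import WhisperProcessor, WhisperForConditionalGeneration
from datasets import load_dataset

model_id = "litus-ai/whisper-small-ita"

# Load the processor (feature extractor + tokenizer) and the model weights.
processor = WhisperProcessor.from_pretrained(model_id)
model = WhisperForConditionalGeneration.from_pretrained(model_id)

# Take one sample from the Italian VoxPopuli test split.
ds = load_dataset("facebook/voxpopuli", "it", split="test")
sample = ds[171]["audio"]

# Convert the raw waveform into the input features the model expects.
input_features = processor(
    sample["array"],
    sampling_rate=sample["sampling_rate"],
    return_tensors="pt",
).input_features

# Generate token ids and decode them, keeping special tokens in the output.
predicted_ids = model.generate(input_features)
transcription = processor.batch_decode(predicted_ids, skip_special_tokens=False)
print(transcription[0])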
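For transcribing audio files, the "automatic-speech-recognition" pipeline is a shorter route. The sketch below wraps it in two helpers; `build_asr_pipeline` and `transcribe` are hypothetical names, and the file path in the usage comment is a placeholder. It assumes PyTorch is installed and that ffmpeg is available for decoding audio files. Whether the bracketed tags survive the pipeline's default decoding is not stated in this model card, so treat that as something to check.

```python
MODEL_ID = "litus-ai/whisper-small-ita"


def build_asr_pipeline(model_id: str = MODEL_ID):
    """Create a transformers ASR pipeline for the given checkpoint."""
    # Imported lazily so that defining the helpers does not load the library.
    from transformers import pipeline

    return pipeline("automatic-speech-recognition", model=model_id)


def transcribe(audio_path: str, asr=None) -> str:
    """Transcribe one audio file and return the recognized text."""
    # Reuse a pipeline if one is passed in; building it downloads the weights.
    asr = asr if asr is not None else build_asr_pipeline()
    result = asr(audio_path)
    return result["text"].strip()


# Usage (placeholder path, requires network access on first run):
# print(transcribe("meeting.wav"))
```

Passing an already built pipeline through the `asr` argument avoids reloading the model for every file.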

📚 Documentation

For any information on the architecture, the data used for pretraining, and the intended use, please refer to the Paper, the Model Card, and the Repository.

📄 License

This model is licensed under the Apache-2.0 license.

| Property | Details |
|---|---|
| Model Type | Optimized version of openai/whisper-small for Italian |
| Training Data | Part of the proprietary data of Litus AI |
| Pipeline Tag | automatic-speech-recognition |
| Tags | audio, automatic-speech-recognition, hf-asr-leaderboard |
| Library Name | transformers |
| Metrics | wer |