🚀 AltDiffusion

AltDiffusion is a multilingual diffusion model based on Stable Diffusion. It supports nine languages and generates high-quality images.

🚀 Quick Start

Model Information

| Property | Details |
|---|---|
| Name | AltDiffusion-m9 |
| Task | Multimodal |
| Language(s) | Multilingual (English(En), Chinese(Zh), Spanish(Es), French(Fr), Russian(Ru), Japanese(Ja), Korean(Ko), Arabic(Ar), Italian(It)) |
| Model | Stable Diffusion |
| Github | FlagAI |

A Gradio Web UI is available for running AltDiffusion-m9.

Model Details

Using AltCLIP-m9, we trained a multilingual diffusion model based on Stable Diffusion, with training data from the WuDao dataset and LAION.

Our model aligns well across languages and, to our knowledge, is the strongest open-source multilingual version currently available. It retains most of the capabilities of the original Stable Diffusion and in some cases performs better than the original model.

The AltDiffusion-m9 model is backed by a multilingual CLIP model named AltCLIP-m9, which is also accessible in FlagAI. You can read this tutorial for more information.

Citation

If you find this work helpful, please consider citing:

@article{https://doi.org/10.48550/arxiv.2211.06679,
  doi = {10.48550/ARXIV.2211.06679},
  url = {https://arxiv.org/abs/2211.06679},
  author = {Chen, Zhongzhi and Liu, Guang and Zhang, Bo-Wen and Ye, Fulong and Yang, Qinghong and Wu, Ledell},
  keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences},
  title = {AltCLIP: Altering the Language Encoder in CLIP for Extended Language Capabilities},
  publisher = {arXiv},
  year = {2022},
  copyright = {arXiv.org perpetual, non-exclusive license}
}

Model Weights

The following weights are downloaded automatically from Hugging Face the first time the AltDiffusion-m9 model is run:

| Model name | Size | Description |
|---|---|---|
| StableDiffusionSafetyChecker | 1.13G | Safety checker for images |
| AltDiffusion-m9 | 8.0G | Supports English(En), Chinese(Zh), Spanish(Es), French(Fr), Russian(Ru), Japanese(Ja), Korean(Ko), Arabic(Ar) and Italian(It) |
| AltCLIP-m9 | 3.22G | Supports English(En), Chinese(Zh), Spanish(Es), French(Fr), Russian(Ru), Japanese(Ja), Korean(Ko), Arabic(Ar) and Italian(It) |
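Since these downloads happen on first run, it can help to check free disk space beforehand. The sketch below simply adds up the sizes listed in the table and compares the total with the free space at a given path. It assumes the three entries are separate downloads, reads "G" as 10**9 bytes, and uses hypothetical helper names (`total_download_gb`, `has_room_for_weights`); the path to check is up to you, since the actual cache location depends on your setup.

```python
import shutil

# Sizes copied from the table above, in "G" as listed
# (interpreted here as 10**9 bytes, which is an assumption).
WEIGHT_SIZES_GB = {
    "StableDiffusionSafetyChecker": 1.13,
    "AltDiffusion-m9": 8.0,
    "AltCLIP-m9": 3.22,
}


def total_download_gb(sizes=WEIGHT_SIZES_GB):
    """Sum of all listed weight sizes, assuming they are separate downloads."""
    return sum(sizes.values())


def has_room_for_weights(path=".", sizes=WEIGHT_SIZES_GB):
    """Return True if the filesystem holding `path` has enough free space."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= total_download_gb(sizes)


if __name__ == "__main__":
    print(f"Total first-run download: about {total_download_gb():.2f}G")
    print("Enough free space here:", has_room_for_weights("."))
```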

💻 Usage Examples

🧨Diffusers Example

AltDiffusion-m9 has been added to 🧨Diffusers!

Our code example is available on Colab. You're welcome to use it.

You can see the documentation page here.

The following example uses the fast DPM-Solver scheduler to generate an image in about 2 seconds on a V100.

First, install the diffusers main branch and its dependencies:

pip install git+https://github.com/huggingface/diffusers.git torch transformers accelerate sentencepiece

Then run the following example:

from diffusers import AltDiffusionPipeline, DPMSolverMultistepScheduler
import torch

pipe = AltDiffusionPipeline.from_pretrained("BAAI/AltDiffusion-m9", torch_dtype=torch.float16, revision="fp16")
pipe = pipe.to("cuda")
pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)

prompt = "dark elf princess, highly detailed, d & d, fantasy, highly detailed, digital painting, trending on artstation, concept art, sharp focus, illustration, art by artgerm and greg rutkowski and fuji choko and viktoria gavrilenko and hoang lap"
image = pipe(prompt, num_inference_steps=25).images[0]
image.save("./alt.png")
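Because AltDiffusion-m9 accepts prompts in several languages, the same pipeline call can be repeated once per language. The following is a minimal sketch of that loop, not part of the official example: `generate_per_language` is a hypothetical helper, the English prompt is a shortened version of the one above, and the Chinese prompt is taken from the alignment examples later in this README. The helper only assumes that `pipe` is callable like the pipeline built above and returns an object with an `images` list.

```python
# Prompts in two of the supported languages (shortened for readability).
PROMPTS = {
    "en": "dark elf princess, highly detailed, fantasy, digital painting, concept art, sharp focus, illustration",
    "zh": "黑暗精灵公主,非常详细,幻想,非常详细,数字绘画,概念艺术,敏锐的焦点,插图",
}


def generate_per_language(pipe, prompts, num_inference_steps=25):
    """Run `pipe` once per prompt and return {language code: first image}."""
    images = {}
    for lang, prompt in prompts.items():
        result = pipe(prompt, num_inference_steps=num_inference_steps)
        images[lang] = result.images[0]
    return images


# Usage with the pipeline from the example above (requires a GPU):
# images = generate_per_language(pipe, PROMPTS)
# for lang, image in images.items():
#     image.save(f"./alt_{lang}.png")
```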

Transformers Example

from typing import Optional

import torch
import torch.nn as nn
from diffusers import StableDiffusionPipeline
from transformers import BertPreTrainedModel, XLMRobertaModel
from transformers.models.xlm_roberta.configuration_xlm_roberta import XLMRobertaConfig
from transformers.models.xlm_roberta.tokenization_xlm_roberta import XLMRobertaTokenizer


class RobertaSeriesConfig(XLMRobertaConfig):
    # XLM-RoBERTa config extended with the projection size and pooling mode.
    def __init__(self, pad_token_id=1, bos_token_id=0, eos_token_id=2, project_dim=768, pooler_fn='cls', learn_encoder=False, **kwargs):
        super().__init__(pad_token_id=pad_token_id, bos_token_id=bos_token_id, eos_token_id=eos_token_id, **kwargs)
        self.project_dim = project_dim
        self.pooler_fn = pooler_fn
        # self.learn_encoder = learn_encoder


class RobertaSeriesModelWithTransformation(BertPreTrainedModel):
    _keys_to_ignore_on_load_unexpected = [r"pooler"]
    _keys_to_ignore_on_load_missing = [r"position_ids", r"predictions.decoder.bias"]
    base_model_prefix = 'roberta'
    # Use the extended config so that config.project_dim is always defined.
    config_class = RobertaSeriesConfig

    def __init__(self, config):
        super().__init__(config)
        self.roberta = XLMRobertaModel(config)
        # Linear projection from the encoder hidden size to config.project_dim.
        self.transformation = nn.Linear(config.hidden_size, config.project_dim)
        self.post_init()

    def get_text_embeds(self, bert_embeds, clip_embeds):
        # NOTE: merge_head is not defined in this snippet; the pipeline below does not call this method.
        return self.merge_head(torch.cat((bert_embeds, clip_embeds)))

    def set_tokenizer(self, tokenizer):
        self.tokenizer = tokenizer

    def forward(self, input_ids: Optional[torch.Tensor] = None):
        # Mark non-padding positions with 1 and padding positions with 0.
        attention_mask = (input_ids != self.tokenizer.pad_token_id).to(torch.int64)
        outputs = self.base_model(
            input_ids=input_ids,
            attention_mask=attention_mask,
        )
        # Project every token's hidden state to config.project_dim.
        projection_state = self.transformation(outputs.last_hidden_state)
        return (projection_state,)


model_path_encoder = "BAAI/RobertaSeriesModelWithTransformation"
model_path_diffusion = "BAAI/AltDiffusion-m9"
device = "cuda"
seed = 12345

tokenizer = XLMRobertaTokenizer.from_pretrained(model_path_encoder, use_auth_token=True)
tokenizer.model_max_length = 77

text_encoder = RobertaSeriesModelWithTransformation.from_pretrained(model_path_encoder, use_auth_token=True)
text_encoder.set_tokenizer(tokenizer)
print("text encoder loaded")

pipe = StableDiffusionPipeline.from_pretrained(model_path_diffusion,
                                               tokenizer=tokenizer,
                                               text_encoder=text_encoder,
                                               use_auth_token=True,
                                               )
print("diffusion pipeline loaded")
pipe = pipe.to(device)

prompt = "Thirty years old lee evans as a sad 19th century postman. detailed, soft focus, candle light, interesting lights, realistic, oil canvas, character concept art by munkácsy mihály, csók istván, john everett millais, henry meynell rheam, and da vinci"

# Seed the generator so that repeated runs produce the same image.
generator = torch.Generator(device=device).manual_seed(seed)
with torch.no_grad():
    image = pipe(prompt, guidance_scale=7.5, generator=generator).images[0]
image.save("3.png")
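The `forward` method above derives the attention mask directly from the padding token, and the tokenizer is capped at 77 tokens. To make that step concrete, here is a dependency-free sketch of the same idea using plain Python lists instead of tensors. `pad_and_mask` is a hypothetical helper, the token ids 581 and 7515 are made-up placeholders, and the pad id of 1 mirrors the `pad_token_id` default in `RobertaSeriesConfig`.

```python
PAD_TOKEN_ID = 1  # default pad_token_id in RobertaSeriesConfig above
MAX_LENGTH = 77   # tokenizer.model_max_length set in the example above


def pad_and_mask(token_ids, max_length=MAX_LENGTH, pad_id=PAD_TOKEN_ID):
    """Pad `token_ids` to `max_length` and build the matching attention mask.

    The mask is 1 where the id differs from `pad_id` and 0 elsewhere,
    the same rule as `(input_ids != pad_token_id)` in `forward`.
    """
    ids = list(token_ids)
    if len(ids) > max_length:
        raise ValueError(f"sequence has {len(ids)} tokens, limit is {max_length}")
    ids = ids + [pad_id] * (max_length - len(ids))
    mask = [int(token != pad_id) for token in ids]
    return ids, mask


if __name__ == "__main__":
    # 0 and 2 are the bos/eos ids from the config; 581 and 7515 are placeholders.
    ids, mask = pad_and_mask([0, 581, 7515, 2])
    print(len(ids), sum(mask))
```

One consequence of this rule is that any real token whose id equals the pad id would also be masked out, which is why the mask is normally derived from the tokenizer's own padding.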

Additional parameters of predict_generate_images that you can adjust are listed below:

| Parameter | Type | Description |
|---|---|---|
| prompt | str | The prompt text |
| out_path | str | The output path where images are saved |
| n_samples | int | Number of images to generate |
| skip_grid | bool | If set to true, the image gridding step is skipped |
| ddim_step | int | Number of DDIM sampling steps |
| plms | bool | If set to true, the PLMS sampler is used instead of the DDIM sampler |
| scale | float | Determines how strongly the prompt influences the generated images |
| H | int | Height of the image |
| W | int | Width of the image |
| C | int | Number of channels of the generated images |
| seed | int | Random seed |
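One way to keep these parameters together is a small typed container that is validated once and then unpacked into keyword arguments. The sketch below is only an illustration: `GenerateImageParams` and `to_kwargs` are hypothetical names, and every default value is a placeholder chosen for the example (the `scale` and `seed` values are borrowed from the Transformers example above), not a documented default of predict_generate_images.

```python
from dataclasses import asdict, dataclass


@dataclass
class GenerateImageParams:
    """Field names and types follow the parameter table above."""
    prompt: str
    out_path: str = "./outputs"  # placeholder default
    n_samples: int = 1           # placeholder default
    skip_grid: bool = False      # placeholder default
    ddim_step: int = 50          # placeholder default
    plms: bool = False           # placeholder default
    scale: float = 7.5           # value used as guidance_scale earlier
    H: int = 512                 # placeholder default
    W: int = 512                 # placeholder default
    C: int = 4                   # placeholder default
    seed: int = 12345            # seed used in the earlier example

    def to_kwargs(self):
        """Validate basic ranges and return the fields as a plain dict."""
        if self.n_samples < 1:
            raise ValueError("n_samples must be at least 1")
        if self.ddim_step < 1:
            raise ValueError("ddim_step must be at least 1")
        if self.H <= 0 or self.W <= 0:
            raise ValueError("H and W must be positive")
        return asdict(self)


if __name__ == "__main__":
    params = GenerateImageParams(prompt="a puppy wearing a hat", n_samples=2)
    print(params.to_kwargs())
```

The resulting dict could then be unpacked with `**` into a call that accepts these names, assuming the function takes them as keyword arguments.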

⚠️ Important Note

Model inference requires a GPU with at least 10G of memory.
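A quick way to check this before loading the model is to compare the GPU's total memory with the threshold. The sketch below is a rough pre-flight check rather than an official tool: `meets_memory_requirement` and `check_first_gpu` are hypothetical names, "10G" is read as 10 GiB (an assumption), and the check looks only at total memory on device 0, not at what is currently free.

```python
REQUIRED_GIB = 10  # from the note above; reading "10G" as GiB is an assumption


def meets_memory_requirement(total_bytes, required_gib=REQUIRED_GIB):
    """Return True if `total_bytes` is at least `required_gib` GiB."""
    return total_bytes >= required_gib * 1024 ** 3


def check_first_gpu():
    """Check GPU 0 via PyTorch; return None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    total = torch.cuda.get_device_properties(0).total_memory
    return meets_memory_requirement(total)


if __name__ == "__main__":
    result = check_first_gpu()
    if result is None:
        print("No CUDA device detected (or PyTorch is not installed).")
    else:
        print("GPU meets the memory requirement:", result)
```

Cards marketed with exactly the threshold amount may report slightly less usable memory, so treat a borderline result with caution.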

📚 Documentation

More Results

Multilanguage Examples

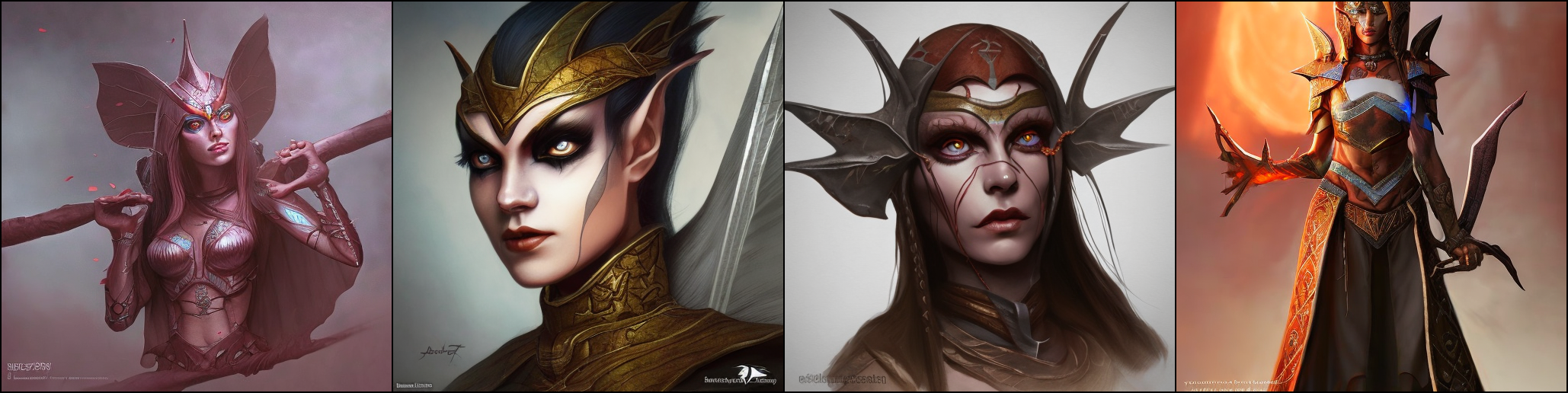

One prompt in different languages generates different faces!

Chinese and English Alignment Ability

- Prompt: dark elf princess, highly detailed, d & d, fantasy, highly detailed, digital painting, trending on artstation, concept art, sharp focus, illustration, art by artgerm and greg rutkowski and fuji choko and viktoria gavrilenko and hoang lap
- Generated results from English prompts
- Prompt: 黑暗精灵公主,非常详细,幻想,非常详细,数字绘画,概念艺术,敏锐的焦点,插图 (English: "dark elf princess, highly detailed, fantasy, highly detailed, digital painting, concept art, sharp focus, illustration")
- Generated results from Chinese prompts

Chinese Performance

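- Prompt: 带墨镜的男孩肖像,充满细节,8K高清 (English: "portrait of a boy wearing sunglasses, full of detail, 8K HD")
- Prompt: 带墨镜的中国男孩肖像,充满细节,8K高清 (English: "portrait of a Chinese boy wearing sunglasses, full of detail, 8K HD")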

Ability to Generate Long Images

- Prompt: 一只带着帽子的小狗 (English: "a puppy wearing a hat")
- Original Stable Diffusion:
- Ours:

Note: The long image generation technology here is provided by Right Brain Technology.

📄 License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage. The CreativeML OpenRAIL License specifies:

- You can't use the model to deliberately produce nor share illegal or harmful outputs or content

- The authors claim no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

- You may redistribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

Please read the full license carefully here: https://huggingface.co/spaces/CompVis/stable-diffusion-license
