🚀 OneFormer

This is the OneFormer checkpoint trained on the ADE20k dataset (tiny-sized version, Swin backbone). OneFormer targets universal image segmentation: a single model handles semantic, instance, and panoptic segmentation.

🚀 Quick Start

OneFormer was introduced in the paper OneFormer: One Transformer to Rule Universal Image Segmentation by Jain et al., and this checkpoint (ADE20k, tiny-sized version, Swin backbone) was first released in this repository.

✨ Features

Model description

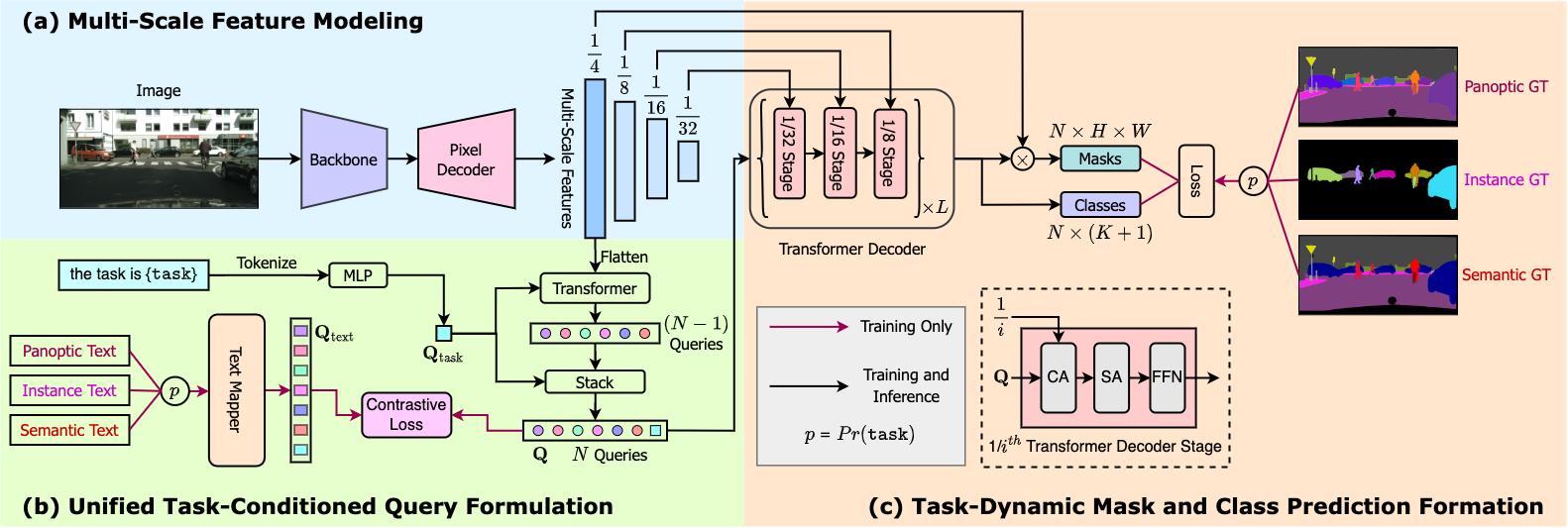

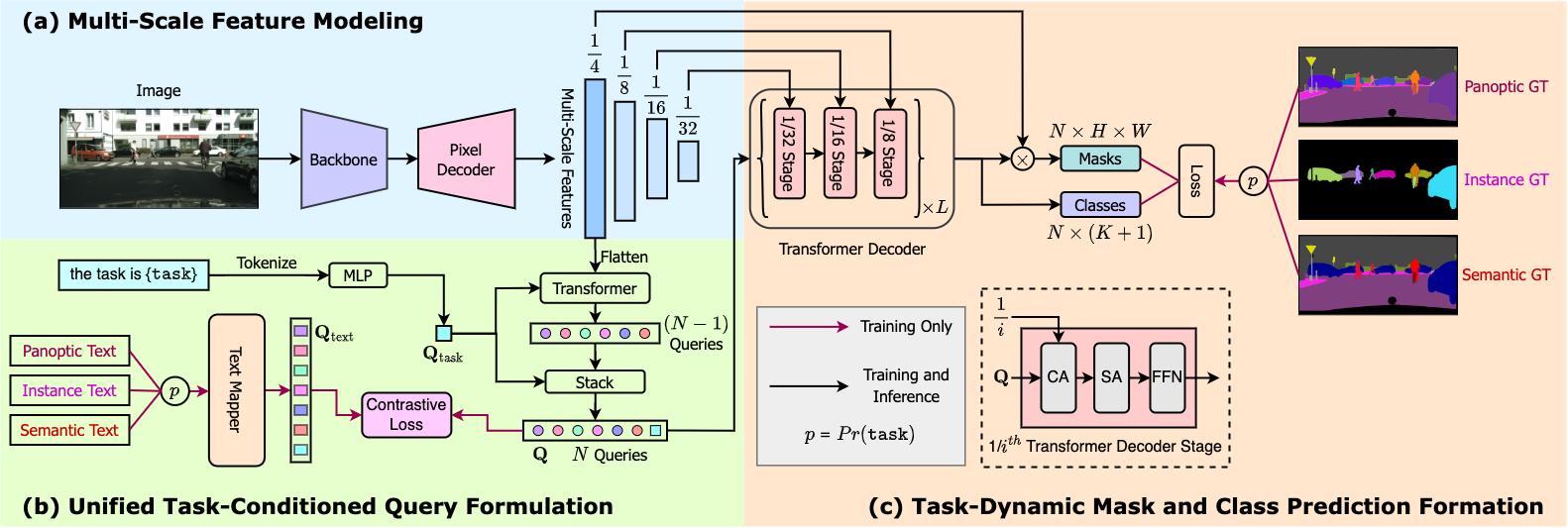

OneFormer is the first multi-task universal image segmentation framework. It is trained only once, with a single universal architecture, a single model, and a single dataset, and it outperforms existing specialized models across semantic, instance, and panoptic segmentation tasks. A task token conditions the model on the task at hand, which makes the architecture task-guided during training and task-dynamic during inference, all within a single model.
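As a rough illustration of the task token, the paper describes the task input as a short text of the form "the task is {task}", which is then tokenized and embedded. The sketch below only builds that text; `TASKS` and `build_task_prompt` are hypothetical names used for illustration, and the actual tokenization and conditioning happen inside the processor and the model.

```python
# Hypothetical helper: builds the task text described in the OneFormer paper.
# This is not part of the transformers API.
TASKS = ("semantic", "instance", "panoptic")


def build_task_prompt(task):
    """Return the task text for one of the three supported tasks."""
    if task not in TASKS:
        raise ValueError(f"unknown task {task!r}, expected one of {TASKS}")
    return f"the task is {task}"


for task in TASKS:
    print(build_task_prompt(task))
```

Changing only this text is what lets one set of weights switch between the three tasks at inference time.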

Intended uses & limitations

You can use this checkpoint for semantic, instance, and panoptic segmentation. To find other fine-tuned versions on different datasets, check out the model hub.
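Because one checkpoint serves all three tasks, the task can be chosen at call time. The following is a minimal sketch of such a dispatch, assuming a `processor` and `model` loaded as in the Usage Examples section; `POST_PROCESS_METHODS` and `segment` are hypothetical names introduced here, while the three post-processing methods are the ones used in that section.

```python
# Hypothetical dispatch table: task name -> processor post-processing method.
POST_PROCESS_METHODS = {
    "semantic": "post_process_semantic_segmentation",
    "instance": "post_process_instance_segmentation",
    "panoptic": "post_process_panoptic_segmentation",
}


def segment(processor, model, image, task):
    """Run one segmentation task and return the post-processed result.

    For "semantic" the result is the segmentation map itself; for
    "instance" and "panoptic" it is a dict with a "segmentation" entry.
    """
    if task not in POST_PROCESS_METHODS:
        raise ValueError(f"unsupported task: {task!r}")
    inputs = processor(images=image, task_inputs=[task], return_tensors="pt")
    outputs = model(**inputs)
    post_process = getattr(processor, POST_PROCESS_METHODS[task])
    # PIL reports (width, height); target_sizes expects (height, width).
    return post_process(outputs, target_sizes=[image.size[::-1]])[0]
```

A call such as `segment(processor, model, image, "panoptic")` would then replace the three separate code paths with one.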

💻 Usage Examples

Basic Usage

```python
from transformers import OneFormerProcessor, OneFormerForUniversalSegmentation
from PIL import Image
import requests

# Download the demo image ("resolve" serves the raw file; "blob" returns an HTML page)
url = "https://huggingface.co/datasets/shi-labs/oneformer_demo/resolve/main/ade20k.jpeg"
image = Image.open(requests.get(url, stream=True).raw)

# Load the processor and the model for this checkpoint
processor = OneFormerProcessor.from_pretrained("shi-labs/oneformer_ade20k_swin_tiny")
model = OneFormerForUniversalSegmentation.from_pretrained("shi-labs/oneformer_ade20k_swin_tiny")

# Semantic segmentation
semantic_inputs = processor(images=image, task_inputs=["semantic"], return_tensors="pt")
semantic_outputs = model(**semantic_inputs)
# image.size is (width, height); target_sizes expects (height, width)
predicted_semantic_map = processor.post_process_semantic_segmentation(semantic_outputs, target_sizes=[image.size[::-1]])[0]

# Instance segmentation
instance_inputs = processor(images=image, task_inputs=["instance"], return_tensors="pt")
instance_outputs = model(**instance_inputs)
predicted_instance_map = processor.post_process_instance_segmentation(instance_outputs, target_sizes=[image.size[::-1]])[0]["segmentation"]

# Panoptic segmentation
panoptic_inputs = processor(images=image, task_inputs=["panoptic"], return_tensors="pt")
panoptic_outputs = model(**panoptic_inputs)
predicted_panoptic_map = processor.post_process_panoptic_segmentation(panoptic_outputs, target_sizes=[image.size[::-1]])[0]["segmentation"]
```

For more examples, please refer to the documentation.
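Once a semantic map is available, a quick sanity check is to count how many pixels were assigned to each class. The sketch below does this on a plain nested list of class ids so that it runs without any model; `count_pixels_per_label` is a hypothetical helper, and the toy map and label names are made up for illustration.

```python
from collections import Counter


def count_pixels_per_label(label_rows, id2label):
    """Return {label_name: pixel_count} for a 2D map of class ids.

    Labels are ordered from most to least frequent; ids missing from
    id2label are reported as "unknown_<id>".
    """
    counts = Counter(class_id for row in label_rows for class_id in row)
    return {
        id2label.get(class_id, f"unknown_{class_id}"): n
        for class_id, n in counts.most_common()
    }


# Toy 3x3 map with made-up label names, for illustration only.
toy_map = [[0, 0, 2], [0, 2, 2], [0, 0, 0]]
toy_labels = {0: "wall", 2: "sky"}
print(count_pixels_per_label(toy_map, toy_labels))  # {'wall': 6, 'sky': 3}
```

With the real outputs, a call along the lines of `count_pixels_per_label(predicted_semantic_map.tolist(), model.config.id2label)` should work, assuming the semantic map is a 2D tensor of class ids and `id2label` is keyed by integer id.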

📚 Documentation

Citation

```bibtex
@article{jain2022oneformer,
  title={{OneFormer: One Transformer to Rule Universal Image Segmentation}},
  author={Jitesh Jain and Jiachen Li and MangTik Chiu and Ali Hassani and Nikita Orlov and Humphrey Shi},
  journal={arXiv},
  year={2022}
}
```

📄 License

This project is licensed under the MIT license.

| Property | Details |
|---|---|
| Model Type | OneFormer model trained on the ADE20k dataset (tiny-sized version, Swin backbone) |
| Training Data | scene_parse_150 |