🚀 Conditional DETR model with ResNet-50 backbone

A Conditional DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection, offering fast training convergence.

🚀 Quick Start

The Conditional DETR model can be used for object detection. You can find all available Conditional DETR models on the model hub.

✨ Features

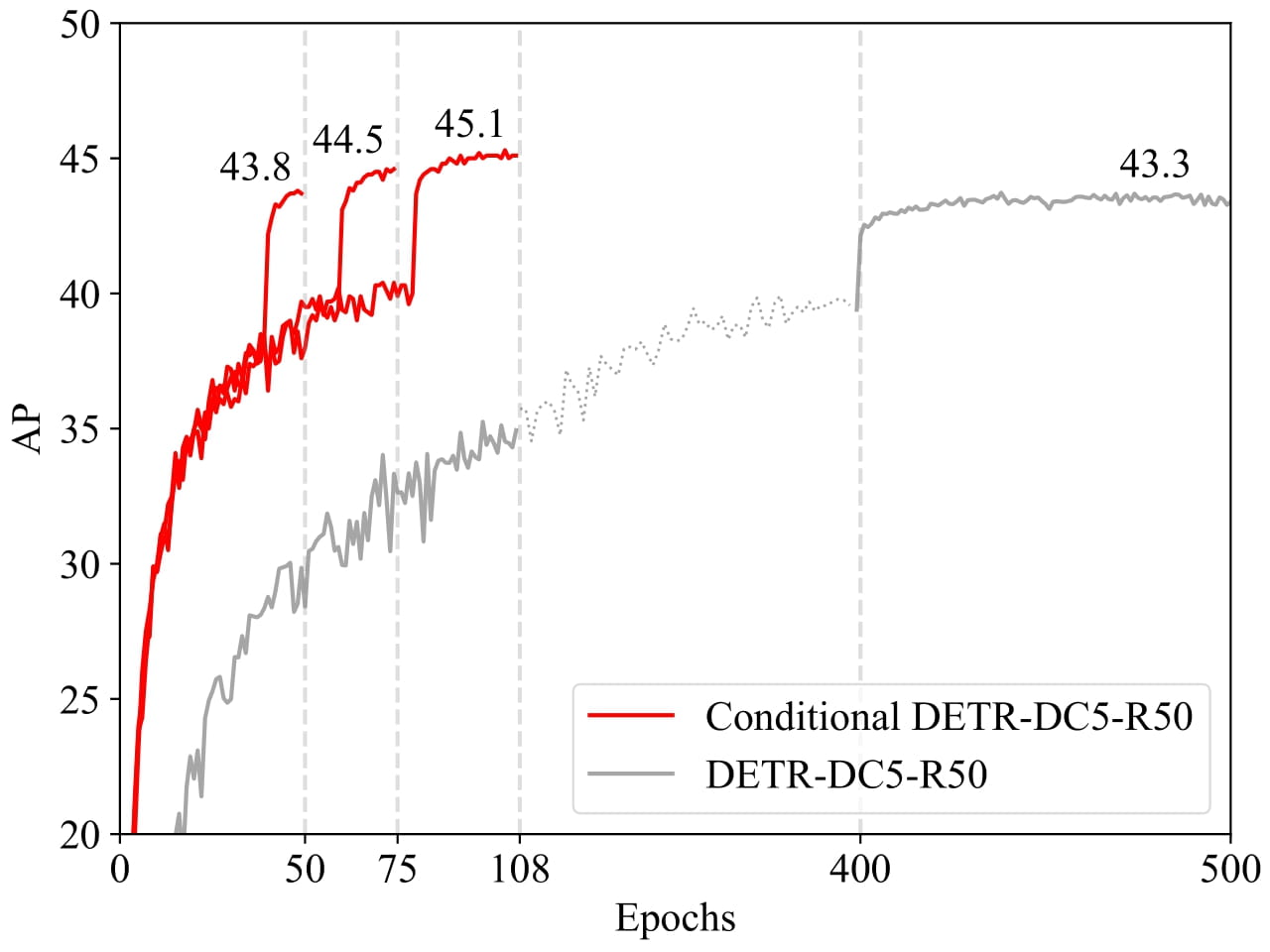

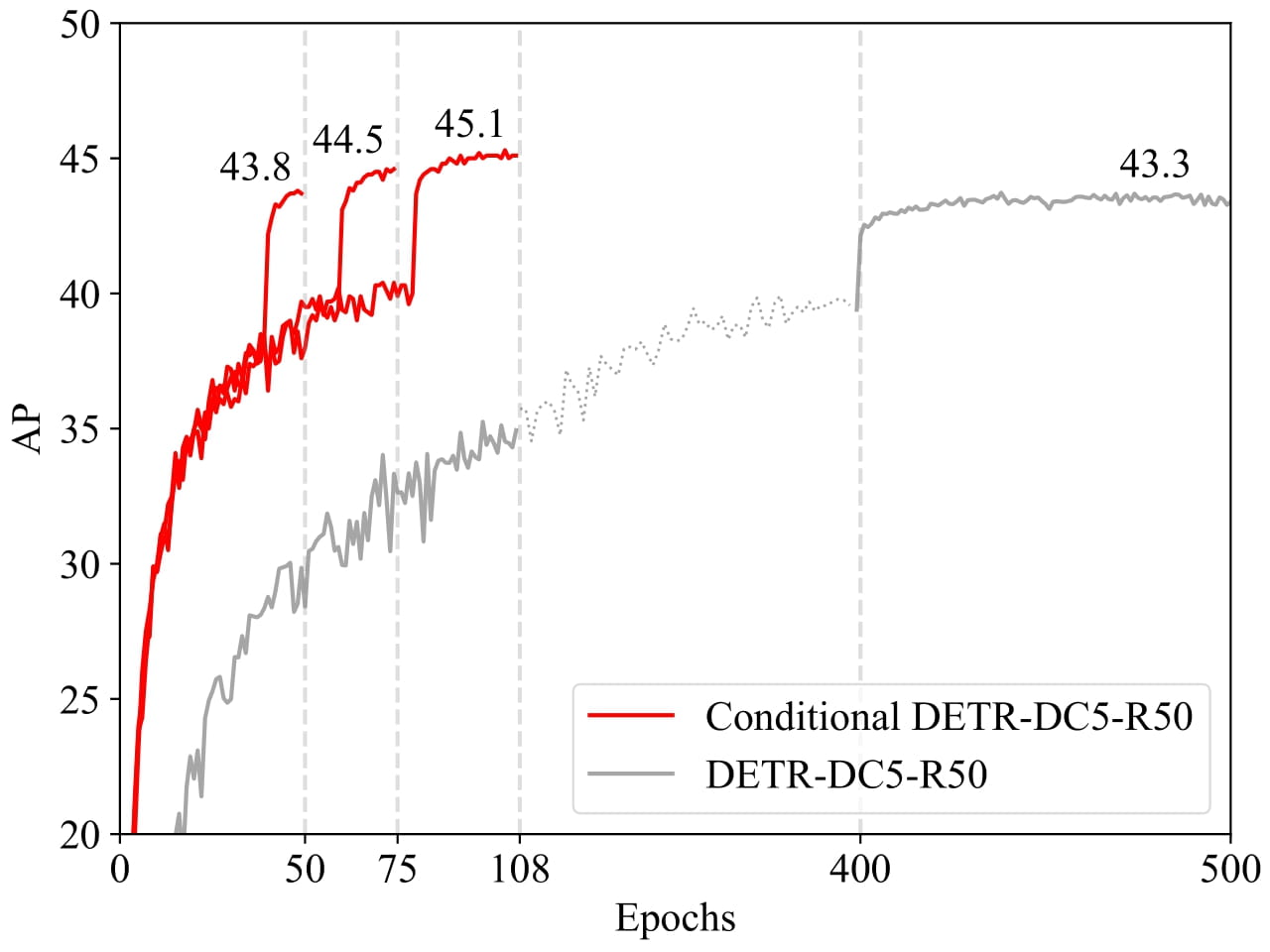

- Fast Training Convergence: Compared with the original DETR, Conditional DETR converges 6.7× faster for the backbones R50 and R101 and 10× faster for the stronger backbones DC5-R50 and DC5-R101.

- Relaxed Dependence on Content Embeddings: By learning a conditional spatial query, the model narrows down the spatial range for object classification and box regression.

📦 Installation

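The original model card does not list installation steps. The usage example below imports transformers, torch, PIL (provided by the Pillow package), and requests, so these packages need to be installed first.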

💻 Usage Examples

Basic Usage

from transformers import AutoImageProcessor, ConditionalDetrForObjectDetection
import torch
from PIL import Image
import requests

# Download a sample image from the COCO 2017 validation set
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# Load the image processor and the pretrained detection model
processor = AutoImageProcessor.from_pretrained("microsoft/conditional-detr-resnet-50")
model = ConditionalDetrForObjectDetection.from_pretrained("microsoft/conditional-detr-resnet-50")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# target_sizes expects (height, width); PIL's image.size is (width, height), hence the reversal
target_sizes = torch.tensor([image.size[::-1]])

# Keep only detections whose score exceeds the threshold
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
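The reversal in image.size[::-1] matters because PIL reports an image size as (width, height), while target_sizes is expected as (height, width). As a rough illustration of the rescaling that post-processing performs, the sketch below converts one normalized (center_x, center_y, width, height) box into absolute (x_min, y_min, x_max, y_max) pixel coordinates. The helper name to_absolute_corners and the example numbers are hypothetical, and the code is a simplified plain-Python stand-in, not the library's implementation.

```python
def to_absolute_corners(box, target_size):
    """Convert a normalized (cx, cy, w, h) box to absolute (x_min, y_min, x_max, y_max).

    target_size is (height, width), the same order used for target_sizes.
    Hypothetical helper for illustration only.
    """
    cx, cy, w, h = box
    img_h, img_w = target_size
    return [
        (cx - w / 2) * img_w,  # left edge, scaled by image width
        (cy - h / 2) * img_h,  # top edge, scaled by image height
        (cx + w / 2) * img_w,  # right edge
        (cy + h / 2) * img_h,  # bottom edge
    ]


# A PIL-style size is (width, height); reverse it to get (height, width).
pil_size = (640, 480)
target_size = pil_size[::-1]

# Made-up box centered in the image, 20% of its width and 40% of its height.
corners = to_absolute_corners((0.5, 0.5, 0.2, 0.4), target_size)
print([round(v, 2) for v in corners])
```

Passing the size in the wrong order would scale x-coordinates by the image height and y-coordinates by the width, which silently distorts boxes on non-square images.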

📚 Documentation

Model description

The recently developed DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we handle the critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by the observation that the cross-attention in DETR relies highly on the content embeddings for localizing the four extremities and predicting the box, which increases the need for high-quality content embeddings and thus the training difficulty. Our approach, named conditional DETR, learns a conditional spatial query from the decoder embedding for decoder multi-head cross-attention. The benefit is that through the conditional spatial query, each cross-attention head is able to attend to a band containing a distinct region, e.g., one object extremity or a region inside the object box. This narrows down the spatial range for localizing the distinct regions for object classification and box regression, thus relaxing the dependence on the content embeddings and easing the training. Empirical results show that conditional DETR converges 6.7× faster for the backbones R50 and R101 and 10× faster for stronger backbones DC5-R50 and DC5-R101.
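To make the mechanism more concrete, here is a minimal sketch of how a conditional spatial query could enter the cross-attention weights. It assumes a formulation in which the spatial query is the element-wise product of a vector predicted from the decoder embedding (lambda_q below) and a sinusoidal embedding of a normalized reference point, and in which each attention logit is the sum of a content dot product and a spatial dot product. The function names, the tiny embedding size, the simplified sinusoidal embedding, and the fixed lambda_q (standing in for a learned projection) are all illustrative assumptions, not the model's actual code.

```python
import math


def sinusoidal_2d(x, y, dim=8, temperature=10000.0):
    """Simplified sin/cos embedding of a normalized (x, y) point.

    dim must be divisible by 4: half of the entries encode x, half encode y.
    """
    half = dim // 2
    emb = []
    for coord in (x, y):
        for i in range(half // 2):
            freq = 1.0 / temperature ** (2 * i / half)
            emb += [math.sin(coord * freq), math.cos(coord * freq)]
    return emb


def dot(a, b):
    return sum(u * v for u, v in zip(a, b))


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def conditional_attention(c_q, lambda_q, ref_point, c_keys, key_points):
    """Attention weights from content and conditional spatial terms (one head).

    c_q: content query; lambda_q: toy stand-in for the vector a learned
    projection would predict from the decoder embedding; ref_point and
    key_points: normalized (x, y) positions.
    """
    dim = len(lambda_q)
    p_s = sinusoidal_2d(*ref_point, dim=dim)
    # Conditional spatial query: element-wise product of lambda_q and p_s
    p_q = [l * p for l, p in zip(lambda_q, p_s)]
    logits = []
    for c_k, point in zip(c_keys, key_points):
        p_k = sinusoidal_2d(*point, dim=dim)
        # Content term plus spatial term
        logits.append(dot(c_q, c_k) + dot(p_q, p_k))
    return softmax(logits)


# Toy setup: three key positions, zero content vectors, all-ones lambda_q.
key_points = [(0.1, 0.1), (0.5, 0.5), (0.9, 0.9)]
c_keys = [[0.0] * 4 for _ in key_points]
weights = conditional_attention([0.0] * 4, [1.0] * 8, (0.5, 0.5), c_keys, key_points)
print([round(w, 3) for w in weights])
```

With zero content vectors and an all-ones lambda_q, the weights depend only on position and peak at the key located at the reference point. A learned lambda_q could reweight individual embedding dimensions, which is one way a head might shift or narrow the region it attends to.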

Intended uses & limitations

You can use the raw model for object detection. See the model hub to look for all available Conditional DETR models.

🔧 Technical Details

The DETR approach applies the transformer architecture to object detection. However, its cross-attention mechanism depends heavily on content embeddings, which increases training difficulty. Conditional DETR addresses this by learning a conditional spatial query from the decoder embedding. This allows each cross-attention head to focus on a specific region, reducing the reliance on content embeddings and speeding up training. Empirical results show 6.7× faster convergence for the backbones R50 and R101 and 10× faster convergence for the stronger backbones DC5-R50 and DC5-R101.

📄 License

This project is licensed under the Apache 2.0 license.

BibTeX entry and citation info

@inproceedings{MengCFZLYS021,
  author    = {Depu Meng and
               Xiaokang Chen and
               Zejia Fan and
               Gang Zeng and
               Houqiang Li and
               Yuhui Yuan and
               Lei Sun and
               Jingdong Wang},
  title     = {Conditional {DETR} for Fast Training Convergence},
  booktitle = {2021 {IEEE/CVF} International Conference on Computer Vision, {ICCV}
               2021, Montreal, QC, Canada, October 10-17, 2021},
}

Information Table

| Property | Details |
|---|---|
| Model Type | Conditional DETR model with ResNet-50 backbone |
| Training Data | COCO 2017 object detection (118k annotated images for training, 5k for validation) |
| Tags | object-detection, vision |